G1 Garbage Collector Considered Slow? 18 Mar 2012 10:39 AM (13 years ago)

I was recently considering trying out the new G1 garbage collector, see if it was any better than current real time CMS garbage collector. A concurrent soft real-time garbage collector that can compact? Awesome!

I switched one of my production applications to use the new G1 garbage collector and noticed a spike in CPU and diminishing throughput almost instantaniously, what gives? I googled around and stumbled upon this blog post and decided to do my own benchmarking.

I hacked up the following scala script based off the blog post to compare the two garbage collectors. The JDK that was used was JDK7u3 on Solaris on a quad core box.

val map = new java.util.LinkedHashMap[Long, String]()

var i:Long = 0

while(i < math.pow(10000, 2)) {

map.put(i, new String())

map.remove(i - 1)

i = i + 1

if (i % 10000 == 0) {

System.out.println(i)

}

}

CMS:

time scala -J-XX:+UseConcMarkSweepGC GC.scala

real 0m12.477s

user 0m12.364s

sys 0m0.491s

G1:

time scala -J-XX:+UseG1GC GC.scala

real 2m26.121s

user 7m33.234s

sys 0m10.888s

Conclusion:

Just what I saw with my production application, the throughput substantially diminished and the CPU cores spiked. I won't be using the G1 garbage collector any time soon, hopefully Oracle will improve the G1 garbage collector with subsequent releases.

PostgreSQL memory issues with connection pooling 8 Feb 2012 4:46 PM (13 years ago)

I recently learned a hard lesson with PostgreSQL’s long lived connections; persistent connections expand in memory size without giving any back to the OS. I saw our PostgreSQL database go from 1.2gigs (shared_buffers set to 1024m) to 3.8gigs in memory size. Almost 2.6gigs of memory wasted on persistent connections held on by our connection pool. Moral of the story? Configure your connection pool to recycle Postgresql connections.

MySQL ZFS Snapshot Backups 31 Aug 2010 2:08 PM (15 years ago)

This is a followup on my previous post concerning how to correctly snapshot databases on ZFS. Snapshotting MySQL any other way will just lead to corrupt database states, essentially making your backups useless.

Here is my script that I use to snapshot our MySQL database. It uses my zBackup.rb script for the automated backup rotation.

#!/bin/sh

mysql -h fab2 -u usr -ppass -e 'flush tables;flush tables with read lock;'

/usr/bin/ruby /opt/zbackup.rb rpool/mydata 7

mysql -h fab2 -u usr -ppass -e 'unlock tables;'

Ruby LocaleTranslator 20 Jul 2010 1:05 PM (15 years ago)

Today, I am open sourcing my Ruby LocaleTranslator; the translator uses google’s translator API to translate a primary seed locale into various other languages. This eases the creation of multi-lingual sites. Not only can the LocaleTranslator translate your main seed locale into different languages but it can also recursively merge in differences, this comes in handy if you have hand-optimized your translated locales.

Viva Localization!

My projects that use the LocaleTranslator; UploadBooth, PasteBooth and ShrinkBooth.

LocaleTranslator Examples

en.yml

site:

hello_world: Hello World!

home: Home

statement: Localization should be simple!

Batch Conversion of your English locale.

#!/opt/local/bin/ruby

require 'monkey-patches.rb'

require 'locale_translator.rb'

en_yml = YAML::load(File.open('en.yml'))

[:de,:ru].each do |lang|

lang_yml = LocaleTranslator.translate(en_yml,

:to=>lang,

:html=>true,

:key=>'GOOGLE API KEY')

f = File.new("#{lang.to_s.downcase}.yml","w")

f.puts(lang_yml.ya2yaml(:syck_compatible => true))

f.close

p "Translated to #{lang.to_s}"

end

Merge in new locale keys from your English Locale into your already translated Russian locale.

#!/opt/local/bin/ruby

require 'monkey-patches.rb'

require 'locale_translator.rb'

en_yml = YAML::load(File.open('en.yml'))

ru_yml = YAML::load(File.open('ru.yml'))

ru_new_yml = LocaleTranslator.translate(en_yml,

:to=>:ru,

:html=>true,

:merge=>ru_yml,

:key=>'GOOGLE API KEY')

puts ru_new_yml.ya2yaml(:syck_compatible => true)

The Implementation Code

Support Monkey Patches

monkey-patches.rb

class Hash

def to_list

h2l(self)

end

def diff(hash)

hsh = {}

this = self

hash.each do |k,v|

if v.kind_of?Hash and this.key?k

tmp = this[k].diff(v)

hsh[k] = tmp if tmp.size > 0

else

hsh[k] = v unless this.key?k

end

end

hsh

end

def merge_r(hash)

hsh = {}

this = self

hash.each do |k,v|

if v.kind_of?Hash

hsh[k] = this[k].merge_r(v)

else

hsh[k] = v

end

end

self.merge(hsh)

end

private

def h2l(hash)

list = []

hash.each {|k,v| list = (v.kind_of?Hash) ? list.merge_with_dups(h2l(v)) : list << v }

list

end

end

class Array

def chunk(p=2)

return [] if p.zero?

p_size = (length.to_f / p).ceil

[first(p_size), *last(length - p_size).chunk(p - 1)]

end

def to_hash(hash)

l2h(hash,self)

end

def merge(arr)

self | arr

end

def merge_with_dups(arr)

temp = []

self.each {|a| temp << a }

arr.each {|a| temp << a }

temp

end

def merge!(arr)

temp = self.clone

self.clear

temp.each {|a| self << a }

arr.each {|a| self << a unless temp.include?a }

true

end

def merge_with_dups!(arr)

temp = self.clone

self.clear

temp.each {|a| self << a }

arr.each {|a| self << a }

true

end

private

def l2h(hash,lst)

hsh = {}

hash.each {|k,v| hsh[k] = (v.kind_of?Hash) ? l2h(v,lst) : lst.shift }

hsh

end

end

The LocaleTranslator Implementation

You need the ya2yaml and easy_translate gems. Ya2YAML can export locales in UTF-8 unlike the standard yaml implementation that can only export in binary for non-standard ascii.

locale-translator.rb

$KCODE = 'UTF8' if RUBY_VERSION < '1.9.0'

require 'rubygems'

require 'ya2yaml'

require 'yaml'

require 'easy_translate'

class LocaleTranslator

def self.translate(text,opts)

opts[:to] = [opts[:to]] if opts[:to] and !opts[:to].kind_of?Array

if opts[:merge].kind_of?Hash and text.kind_of?Hash

diff = opts[:merge].diff(text)

diff_hsh = LocaleTranslator.translate(diff,:to=>opts[:to],:html=>true)

return opts[:merge].merge_r(diff_hsh)

end

if text.kind_of?Hash

t_arr = text.to_list

t_arr = t_arr.first if t_arr.size == 1

tout_arr = LocaleTranslator.translate(t_arr,:to=>opts[:to],:html=>true)

tout_arr = [tout_arr] if tout_arr.kind_of?String

tout_arr.to_hash(text)

elsif text.kind_of?Array

if text.size > 50

out = []

text.chunk.each {|l| out.merge_with_dups!(EasyTranslate.translate(l,opts).first) }

out

else

text = text.first if text.size == 1

EasyTranslate.translate(text,opts).first

end

else

EasyTranslate.translate(text,opts).first

end

end

end

MacOS X ZFS Samba Transfer Fix 18 Jul 2010 8:19 AM (15 years ago)

I have a very non-standard storage setup at home. The setup is made up of a 3x500G raidz array on ZFS hosted by OSX. For the longest time I could not get files to copy over samba on ZFS. The files would stream just fine but not copy over, they would abort at the 99% transfer point. Well, I have finally found the fix for it; turn off extended attributes!

smb.conf

#vfs objects = notify_kqueue,darwinacl,darwin_streams

vfs objects = notify_kqueue,darwinacl

; The darwin_streams module gives us named streams support.

stream support = no

ea support = no

; Enable locking coherency with AFP.

darwin_streams:brlm = no

As Charles Heston would say, You can have my ZFS when you pry it from my cold dead hands.

viva ZFS on OSX!

STOMP Client on JRuby 16 Jul 2010 10:13 AM (15 years ago)

I recently needed to make use of our ActiveMQ message queue service to scale up write performance of CouchDB. However, there seemed to be a bug with JRuby that kills off the STOMP subscriber every 5 seconds. Digging a bit deeper into the STOMP source, I figured out a way to get around the bug by removing the timeout line.

ActiveMQ let me scale CouchDB writes from 10req/sec to 128req/sec. Huge performance win with very little effort.

STOMP Library Monkey Patch:

# for stomp subscriber

if defined?(JRUBY_VERSION)

module Stomp

class Connection

def _receive( read_socket )

@read_semaphore.synchronize do

line = read_socket.gets

return nil if line.nil?

# If the reading hangs for more than 5 seconds, abort the parsing process

#Timeout::timeout(5, Stomp::Error::PacketParsingTimeout) do

# Reads the beginning of the message until it runs into a empty line

message_header = ''

begin

message_header += line

begin

line = read_socket.gets

rescue

p read_socket

end

end until line =~ /^\s?\n$/

# Checks if it includes content_length header

content_length = message_header.match /content-length\s?:\s?(\d+)\s?\n/

message_body = ''

# If it does, reads the specified amount of bytes

char = ''

if content_length

message_body = read_socket.read content_length[1].to_i

raise Stomp::Error::InvalidMessageLength unless parse_char(read_socket.getc) == "\0"

# Else reads, the rest of the message until the first \0

else

message_body += char while read_socket.ready? && (char = parse_char(read_socket.getc)) != "\0"

end

# If the buffer isn't empty, reads the next char and returns it to the buffer

# unless it's a \n

if read_socket.ready?

last_char = read_socket.getc

read_socket.ungetc(last_char) if parse_char(last_char) != "\n"

end

# Adds the excluded \n and \0 and tries to create a new message with it

Message.new(message_header + "\n" + message_body + "\0")

end

#end

end

end

end

end

A fix for the sound delay with VoodooHDA and Final Cut Pro 1 Jul 2010 7:01 PM (15 years ago)

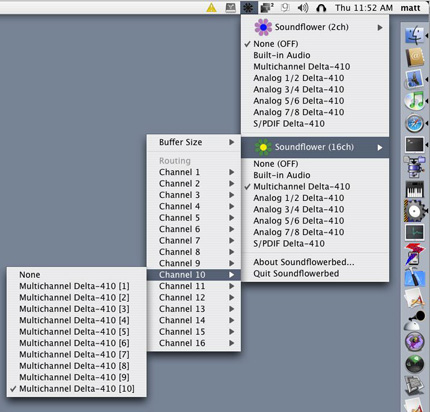

I spent the better part of the day trying to figure out why the Final Cut Pro audio was out of sync. The audio would be delayed by 3 seconds in playback. I thought it was some setting in Final Cut Pro that broke, but eventually came to the conclusion that it was the OS and not Final Cut Pro that was causing the audio delay. The specific cause was the VoodooHDA driver, even though it worked perfect in ordinary applications such as iTunes and Safari it had a delay issue with Final Cut Pro. The Fix? Install SoundFlowerBed and configure your audio output settings in it. This somehow magically fixes the delay issue in the VoodooHDA driver. I thought I should post it here for “internet” record keeping.

CouchDB on ZFS 25 Jun 2010 10:29 PM (15 years ago)

CouchDB was made for next generation filesystems such as ZFS and BTRFS. First off, unlike PostgreSQL or MySQL, CouchDB can be snapshot while in production without any flushing or locking trickery since it uses an append only B-Tree storage approach. That alone makes it a compelling database choice on ZFS/BTRFS.

Second, CouchDB works hand-in-hand with ZFS’s block level compression. ZFS can compress blocks of data as they are being written out to the disk. However, it only does it for new blocks and not retroactively. Now, the awesome part, CouchDB on compaction writes out a brand new database file which can utilize the new gzip compression settings on ZFS. This means you can try out different gzip compression settings just by compacting your CouchDB.

Some tips on running CouchDB on ZFS:

1. Use automated snapshots to prevent $admin error, it is painless with ZFS and CouchDB loves being snapshot

You can give my little ruby script a try for daily snapshots; I use it both on Mac OSX and Solaris for automated ZFS snapshot goodness.

zfs snapshot rpool/couchdb@mysnapshot-tuesday

2. Try out various gzip compression schemes on your CouchDB workload, re-compact the database to use the new gzip compression settings. I personally use the gzip-4 compression for our workload which strikes the perfect balance between space and cpu utilization.

zfs set compression=gzip-4 rpool/couchdb

3. Set the ZFS dataset to 4k block record size and turn off atime. Yes the B-Tree append only approach is elastic on writes but you can have near perfect tiny writes with a small 4k block record size.

zfs set recordsize=4k rpool/couchdb

zfs set atime=off rpool/couchdb

PostgreSQL ZFS Snapshot Backups 23 Jun 2010 11:31 AM (15 years ago)

I recently had a WD Raptor drive die in a server that hosted our PostgreSQL database. I had a ZFS snapshot strategy setup that sent over ZFS snapshots of the live database to a ZFS mirror for backup purposes. Looked good in theory right? Except, I forgot to do one critical thing, test my backups. Long story short, I had a bunch of snapshots that were useless. Luckily I had offsite nightly PostgreSQL dumps that I did test which were used to seed my development database. So in the end I avoided catastrophic data failure.

With that lesson in mind, I reconfigured our backup system to do it correctly after re-reading the PostgreSQL documentation.

Prerequisite: You must have WAL archiving on and have the archive directory under your database directory. For example if your database is under /rpool/pgdata/db1 configure your archive directory under /rpool/pgdata/db1/archives

Completely optional but I highly suggest you automate your backups; My zbackup ruby script is pretty simple to setup.

This is how my /rpool/pgdata/db1 Looks like:

victori@opensolaris:/# ls /rpool/data/db1 archives pg_clog pg_multixact pg_twophase postmaster.log backup_label pg_hba.conf pg_stat_tmp PG_VERSION postmaster.opts base pg_ident.conf pg_subtrans pg_xlog postmaster.pid global pg_log pg_tblspc postgresql.conf

Source for my pgsnap.sh script.

#!/bin/sh

PGPASSWORD="mypass" psql -h fab2 postgres -U myuser -c "select pg_start_backup('nightly',true);"

/usr/bin/ruby /opt/zbackup.rb rpool/pgdata 7

PGPASSWORD="mypass" psql -h fab2 postgres -U myuser -c "select pg_stop_backup();"

rm /rpool/pgdata/db1/archives/*

The process is quite simple. You issue a command to initiate the backup process so PostgreSQL goes into “backup mode.” Second, you do the ZFS snapshot, in this case I am using my zbackup ruby script. Third, you issue another SQL command to PostgrSQL to get out of backup mode. Lastly, since you have the database snapshot you can safely delete your previous WAL archives.

Now, this is all nice and dandy but you should *TEST* your backups, before assuming your backup strategy actually worked.

zfs clone rpool/pgdata@2010-6-17 rpool/pgtest

postgres --singleuser mydb -D /rpool/pgtest/db1

Basically you clone the snapshot and test it by running it under PostgreSQL in single user mode. Once in singleuser mode, test out your backup to make sure it is readable, you can issue a SQL queries to confirm that all is fine with the backup.

ZFS you rock my world

Scala yEnc Decoder 22 Jun 2010 2:07 PM (15 years ago)

SYEnc is a Scala decoder for the yEnc format that is based on Alex Russ’s Java yEnc Decoder. SYEnc was designed to be used as a library by applications needing to use yEnc to decode data. It should be thread-safe, so don’t worry about using it in a threaded context.

Uploaded to github: http://github.com/victori/syenc

Example:

val baos = new ByteArrayOutputStream

SYEnc.decode(new ByteArrayInputStream(stringFromStream.getBytes("UTF-8")), baos)

println(baos.toString)