Selfish AI 1 Feb 2:32 PM (10 days ago)

This will be a bit more ranty than my usual articles. Fair warning. But I need to put this out there.

Recently, a video by Jeffrey Way of Tailwind/Laracasts fame came across my feed. In less than 15 minutes, he managed to succinctly capture everything I absolutely detest about the current moment in the IT industry.

This isn't a response to Jeffrey per se, but to the whole attitude that he captures. (And it shouldn't need to be said, but this is not an attack on Jeffrey, nor should anyone use this as an excuse to attack or otherwise be a jerk to Jeffrey or anyone else.)

He starts off by talking about how the sudden growth of AI has basically torpedoed his business. He recently had to lay off half his company because AI usage had changed the market behavior enough that their revenue was drying up, and "it is what it is."

But then he pivots into his personal struggle getting used to working with an AI code agent/assistant/whatever, and how he's "Done" fighting it, and looking forward to the new future of Autocomplete Code Authorship. "It is what it is." Lamenting that he/we enjoy writing code (and I do) is fine, but "those days are numbered, it is what it is, you need to get on board." The code quality and style may be poor, but it gets the job done so fast that we need to just give up on caring about that.

You can agree or disagree with him about that point, you can lament or celebrate this tectonic shift in what it even means to be a programmer... but bloody hell I am sick and tired of everyone I know viewing AI coding in such purely selfish terms.

Selfish AI usage

All of this discussion, from Jeffrey's video to 99% of what shows up in forums or Mastodon discussions, is about how all of this will impact "me." Me, the developer. Me, the person writing code, who may not be writing code now. Me the person who just got laid off because some accountant thinks that Claude means they need only two engineers now instead of 10. Me the person who just had to lay off half my company. Me, me, me.

What I almost never see is the impact of AI code on our society.

Scarcely a word is said about the fact that essentially all LLMs are built on scraping the Internet, badly, often completely trashing servers as they download the entire site without using any of the polite techniques developed by archivers and search engines over the past 30 years. They're all built on copyright infringement. Which is a felony, as anyone who has crossed Disney well knows. Modern LLMs literally could not exist without violating copyright, as even Sam Altman of OpenAI openly admits. But it's OK, because the ones violating copyright this time have VC backing. The courts are still trying to figure out if Fair Use applies; I and most Free Software developers I know hold that it does not, but that doesn't stop OpenAI and Anthropic from slurping up our open source code to train their models, even if it violates a copyleft license. Nor does it seem to matter that the same companies and VCs have been pushing successfully for years to narrow and circumvent Fair Use, via Digital Restrictions Management (DRM) and other means. What's good for the goose is not good for the gander. But, "it is what it is."

In a few edge cases, large media companies have been able to sue and get a small license fee for scraping their data, but if you're not a billion dollar company, as usual you're SOL. "It is what it is."

Far too little is said about the fact that training AI models is not an entirely digital process. It is backed by an army of over-worked, low-paid, sweatshop-level workers manually labeling data to feed into the machine. Because why wouldn't we outsource painful grunt work to some person in a poor country we don't care about? It's standard procedure if you're a tech company. They already do it for moderation, may as well do it for AI training. OK, it means one of the main selling points of AI is a lie, but, "it is what it is."

More than 2 million people in the Philippines perform this type of “crowdwork”, according to informal government estimates, as part of AI’s vast underbelly. While AI is often thought of as human-free machine learning, the technology actually relies on the labour-intensive efforts of a workforce spread across much of the global south and is often subject to exploitation.

Almost none of my colleagues seem to be focusing on the absolutely massive impact on the electrical grid.

From 2005 to 2017, the amount of electricity going to data centers remained quite flat thanks to increases in efficiency, despite the construction of armies of new data centers to serve the rise of cloud-based online services, from Facebook to Netflix. In 2017, AI began to change everything. Data centers started getting built with energy-intensive hardware designed for AI, which led them to double their electricity consumption by 2023. The latest reports show that 4.4% of all the energy in the US now goes toward data centers.

By 2028, "AI alone could consume as much electricity annually as 22% of all US households."

Some power companies that were planning to go all solar from now on in order to avoid destroying the planet (even more than they already have) are now saying they will be bringing new natural-gas-fired plants online in order to keep up with the increased demand from LLM data centers.

Essentially, none of the new renewable electrical capacity the US has built in recent years is going to replacing existing CO2-barfing coal, oil, and gas plants. It's all getting eaten up by AI data centers. That of course drives up electrical rates across the country, which in turn means people are less likely to switch to electric appliances, which means even more CO2 produced.

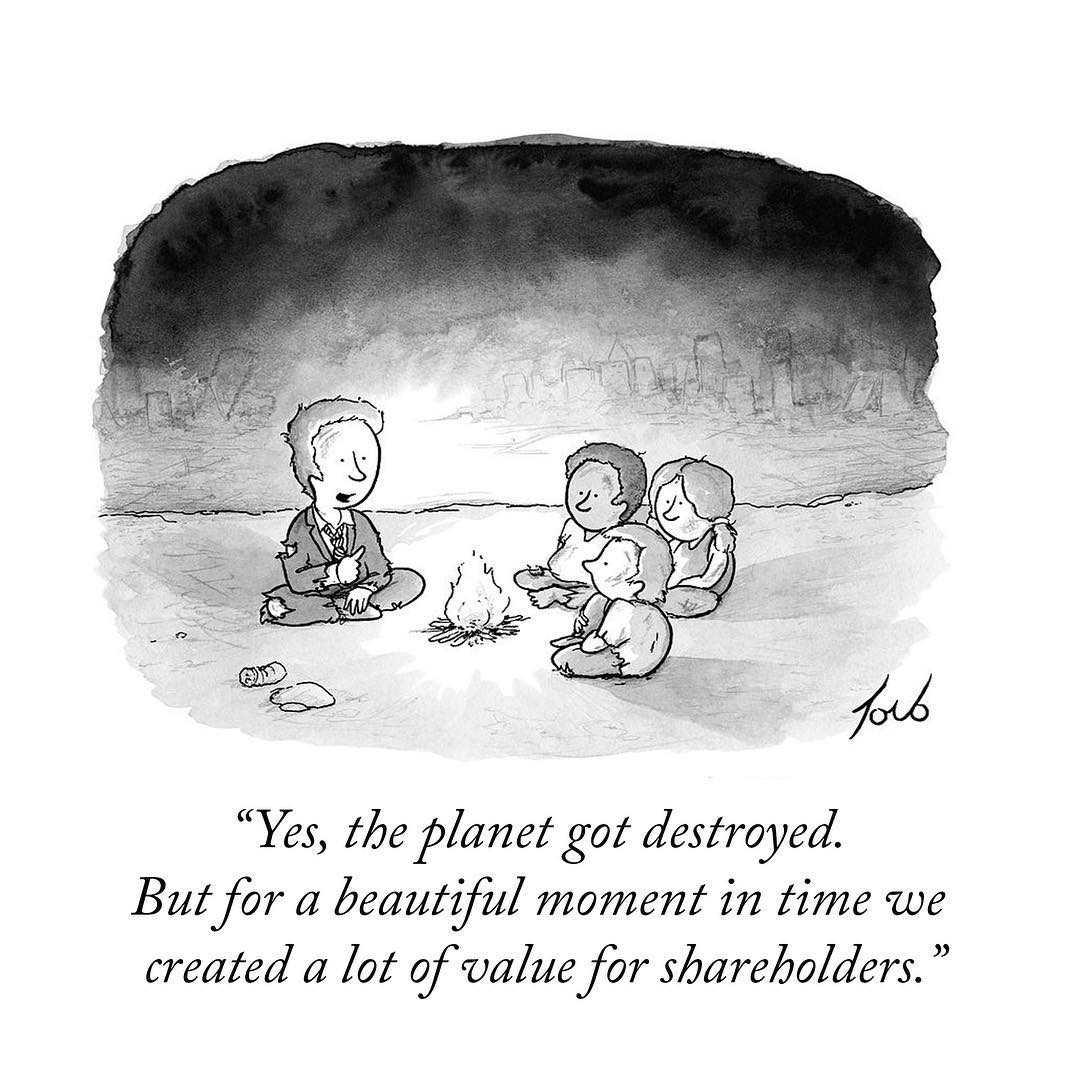

We're already probably past the point that we will have a livable planet by the end of the century. But whatever slim chance we may have to survive as a species gets slimmer with every new data center built. But, you know, "it is what it is."

There's lots of misinformation about the water usage of AI data centers. Most use water for cooling, which uses millions of gallons per day. "[R]esearchers calculated that writing a 100-word email with AI could consume around 500 mL of water (about one typical drinking bottle’s worth) when you account for both data center cooling and power generation." Which sounds like a lot, and it is, but as that article notes, many industries use vastly more than that. What matters is where the data center is; if it's in an area where water is abundant, it's no big deal. If it's in an area that is already experiencing water shortages -- like, say, the entire western half of the US -- the impact could destroy communities. And in aggregate, data centers now use as much water as the entire bottled water industry. But, of course, "it is what it is."

And of course, none of that is even mentioning the fact that even AI company execs say there's a massive bubble, that they're all losing money faster than a roulette table, that the AI construction boom is basically propping up the American economy and when it pops it's going to implode, or that the price you're paying for these services now is unsustainably low and you can expect the price to skyrocket once the tech bros decide they need to actually make money. That's all true, but that's a different long list of issues.

Quite simply, no one seems to give a fuck about the ethical implications of new technology. That's hardly new, to be fair. The VC and tech bro startup crowd have long been of the belief that ethics are just an annoying road bump that gets in the way of profit. But it feels new that so many people who are otherwise invested in Open Source and Free Software (which, I remind you, is an ethical and political framework whether you like it or not) seem to just... not care. Maybe they'll make a nod on the copyright front, but then go and vibe code everything. OK, so it means my daughter won't have a future to grow up in, and may be the last generation of humans that can live below a certain latitude, but, "it is what it is."

No. Fuck no. No it is not. You don't get off that easily.

Collective action

It only "is what it is" because we have collectively, in aggregate, decided that it is. A technology that no one uses can't hurt us; only a technology that we do use can.

Every time you, as an individual, shrug your shoulders and say "it is what it is" and do something ethically problematic, you make it that much harder for anyone else to not do so. The pseudo-libertarian lie of "vote with your dollars" is pure bullshit. You have already outvoted me, so I no longer have a choice.

- You consider Amazon unethical? Good fucking luck not doing business with AWS. You basically can't use the Internet.

- You consider Walmart unethical? Too bad, it's the only business left in your small town because all of your neighbors went there for the marginally lower prices.

- You consider Uber and its long history of employees stalking their exes and building its entire business on simply ignoring the law unethical? Tough, the cabs have mostly been driven out of business in many towns so that's all that's left.

- You don't want the carbon footprint of a car, even an electric one? Sucks to be you if you live in most US cities; without a car you're basically screwed.

- Don't want to put your small business on Facebook because they've allowed their systems to be used to enact genocide? Well, I guess you won't have a small business, sorry.

- Don't want to use Apple or Android's Big Brother-in-your-hand? Eh, half the businesses out there basically don't want you unless you're in one of those ecosystems.

Don't want to use AI because it's built on copyright infringement and literally destroying the planet? Well, I guess you can't work in software anymore, sorry. It is what it is.

Every time someone like Jeffrey Way says "it is what it is," it makes it so. It is not inevitable just because Sam Altman tells his over-leveraged investors it is so. It becomes inevitable when you, you personally, decide that you just don't want to think about the externalities or put in the work to find better alternatives.

We are making this choice. But really, that means you have already decided for me. And I curse you and the ground you walk on for it. No, I'm not joking or exaggerating. Burn in hell.

Where do we go from here

I have, to date, not used any AI coding tools, at all. I've actively removed them from my IDE. I don't want them. Not just because of questions of their quality (still lower than a human), or because I will miss writing elegant code (I know I will), but because I want my daughter to have a future, and a planet on which to live. Because I actually do care about respecting copyright.

But, at this point, it's become obvious that I have to either compromise on that, or leave tech entirely. And every time I think about that, I get angry. Angry at you, dear reader, and everyone else who has said "it is what it is" and gone off to spend all day vibe coding.

It's not that the industry is changing that bothers me. I've been a change agent in most organizations I've been in; I switched from nano as a code editor to full on IDEs with all their auto-refactor glory; I don't mind change. I do mind unethical behavior. I do mind being forced into unethical behavior in order to survive.

At some point soon, I will have to figure out how to work with AI coding tools if I want to stay in the industry I've put my entire adult life into. But more importantly, I will have to figure out how to live with myself every time I consider the tons of CO2 and gallons of water involved in every function I write from now on.

See, I can't just say "it is what it is." I will feel that cost in my gut every fucking time. Whether I'll be able to swallow it and accept it (as I do for Amazon and Android, after long resisting them) in order to find a job, I don't know. If not, it won't be a tight labor market that forces me out of tech, but every fucking one of my colleagues that decided "it is what it is, ethics don't matter, it's not worth fighting the billionaire VCs that have fucked up everything else they touch already."

If you have already shrugged and said "it is what it is," fuck you. It is exactly that attitude, that lack of care for ethics, that lack of interest in the global implications of our work, that is literally dooming our species. And forcing -- yes, forcing -- everyone to join you in your uncaring attitude through sheer force of numbers is abusive. It's despicable.

As I learn how to work with AI coding agents, know that I will be thinking ill of you the entire time. Not because I don't get to write for loops, but because you have made yet another part of the economy impossible to engage with ethically.

This is how societies die. I wish I were being hyperbolic. I really really do. But I have nothing left but contempt for "it is what it is."

No it isn't. It only is what it is because you're OK with what it is, and aren't putting in the work to make it otherwise. Those that don't give a fuck about fair copyright application, about poor people, about our planet, are putting in the work to make it so. And you're letting them.

I do not forgive you.

A survey of data modeling 19 Aug 2025 9:30 AM (5 months ago)

There are many different ways of modeling data. They all have their place, and all have places where they are a poor fit.

The spectrum of options below is defined mainly by the degree to which they differentiate between read and write models, and correspondingly how powerful-but-also-complex they are. "Model" in this case usually corresponds to a class, or a class with one or more composed classes.

Varieties of data modeling

Arbitrary SQL

In this case, there is no formal data definition beyond the SQL (or other database) schema. The application just runs arbitrary SQL queries, both read and write, wherever it sees fit.

In a slightly better variant, SQL queries are all confined to selected objects that act as an API to the rest of the application. Arbitrary code does not call SQL, but it can call a method on this object that will call SQL.

The SQL could be hand-crafted, use a query builder of one kind or another, or a little of each.

This approach may work at a very small scale, where building something more formal isn't worth the effort. However, the tipping point where it is worth the effort comes very, very early.

CRUD

The most widely used approach is known as "Create Read Update Delete" (CRUD). Those are the four standard operations. In this case, the system models a series of data objects called Entities. While technically Entities do not need to correspond 1:1 to a particular database table, in practice that is often the case. An entity could also have dependent tables, the details of which are mostly hidden.

CRUD is usually managed by an ORM, or Object-Relational Mapper. The ORM attempts to hide all SQL logic from the user, providing a consistent interface pattern. A user Reads (loads) an Entity by ID, possibly Updates it (edits some value), and then saves it back to the database. The user only interacts with the Entity object.

There are two main variants of ORM: Active Record, in which the Entity object has direct access to the database connection to load and save itself, and Data Mapper, in which the Entity is ignorant of its storage and a separate service (a mapper, or repository, or various other names) is responsible for the loading and saving. Active Record is often easier to implement from scratch, so it is popular with RAD-oriented tools (like Ruby on Rails or Laravel). It is, however, a vastly inferior design as it severely hinders testing, encapsulation, and more advanced cases. The effort to set up a Data Mapper is almost always worth it, as the effort is not substantially higher for a skilled developer.
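To make the difference concrete, here is a minimal sketch of both variants. Everything in it is hypothetical: `FakeDatabase` is an in-memory stand-in for a real connection, and the class names are mine, not those of any particular ORM.

```php
<?php
declare(strict_types=1);

// Hypothetical stand-in for a database table: rows keyed by ID.
final class FakeDatabase
{
    /** @var array<int, array{id: int, name: string}> */
    public array $rows = [];
}

// Active Record: the Entity holds the connection and saves itself.
final class ActiveRecordUser
{
    public function __construct(
        private FakeDatabase $db,
        public int $id,
        public string $name,
    ) {}

    public function save(): void
    {
        $this->db->rows[$this->id] = ['id' => $this->id, 'name' => $this->name];
    }
}

// Data Mapper: the Entity is a plain object with no storage knowledge.
final class User
{
    public function __construct(
        public int $id,
        public string $name,
    ) {}
}

// A separate service owns all loading and saving.
final class UserMapper
{
    public function __construct(private FakeDatabase $db) {}

    public function save(User $user): void
    {
        $this->db->rows[$user->id] = ['id' => $user->id, 'name' => $user->name];
    }

    public function load(int $id): ?User
    {
        $row = $this->db->rows[$id] ?? null;
        return $row === null ? null : new User($row['id'], $row['name']);
    }
}

$db = new FakeDatabase();

// Active Record needs a database just to construct the object.
(new ActiveRecordUser($db, 1, 'Ada'))->save();

// Data Mapper entities can be built and tested with no database at all.
$user = new User(2, 'Grace');
$mapper = new UserMapper($db);
$mapper->save($user);
$loaded = $mapper->load(2);
```

Note that `User` can be constructed in a unit test without touching storage, which is the testing and encapsulation advantage in practice.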

CRUD falls down in three key areas:

- It assumes the "read model" and "write model" are the same. While in simple cases that may be true, they often have different validation requirements. For example, "last updated" or "last login time" are likely fields that are not needed on the write model, as the system manages them directly; they are either absent or optional. On the read model, however, we expect them to be always present. That difference cannot be easily captured in a single unified object. (Some workarounds do exist, but they are workarounds only.)

- Relationships. A key value of SQL is data being "relational." That is, Entity A may have a "contains" or "uses" or "is parent of" relationship with Entity B, or with another Entity A. In SQL, this is almost always captured using a foreign key, and many-to-many relationships are captured with an extra join table. Mapping that into objects is often difficult, especially for complex data where an Entity spans multiple tables. It can also lead to severe performance problems, especially the "SELECT N+1 Problem," in which a series of Entity A objects are loaded, then as they are used each one lazily loads its related Entity B, resulting in "N+1" queries.

- Listing and Querying. SQL is very good at building searches across arbitrary data fields. That's what it was built for. Object models frequently are not. They are fine for straight read/write operations, but less so for "find all products that cost at least $100 bought by a customer over the age of 50 in the last 3 months." That generally requires either dropping down to manual SQL to get a list of entity IDs, then doing a bulk-read on them, or a complex query builder syntax (either method calls or a custom string syntax) that translates high level relationships into low-level relationships. Tools like Java Hibernate or Doctrine ORM take the latter approach, which is one reason they are so large and complex.

An ORM in concept also does not offer any native way to create compound views, showing a subset of fields from 3 different related entities, for example. Some ORMs provide a mechanism of some sort, but rarely are they as capable or efficient as just writing SQL.

The impedance mismatch between object models and relational models has been called "The Vietnam of Computer Science," meaning "you keep trying to do it more, and it just gets worse the more you do." Simple ORMs are straightforward to build, but have an upper bound on complexity before they become too unwieldy.
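The "SELECT N+1 Problem" mentioned above is easier to see with a query counter. This is only a sketch: `CountingDb` is a hypothetical in-memory stand-in that counts each method call as one query, and the post/author data is made up.

```php
<?php
declare(strict_types=1);

// Hypothetical in-memory "database" that counts how many queries it runs.
final class CountingDb
{
    public int $queries = 0;

    /** @var array<int, array{id: int, author_id: int}> */
    private array $posts = [
        1 => ['id' => 1, 'author_id' => 10],
        2 => ['id' => 2, 'author_id' => 11],
        3 => ['id' => 3, 'author_id' => 10],
    ];

    /** @var array<int, string> */
    private array $authors = [10 => 'Ada', 11 => 'Grace'];

    public function allPosts(): array
    {
        $this->queries++;
        return array_values($this->posts);
    }

    public function authorById(int $id): string
    {
        $this->queries++;
        return $this->authors[$id];
    }

    /** @param int[] $ids */
    public function authorsByIds(array $ids): array
    {
        $this->queries++;
        return array_intersect_key($this->authors, array_flip($ids));
    }
}

// Lazy loading: one query for the posts, then one more per post.
$lazy = new CountingDb();
$lazyNames = [];
foreach ($lazy->allPosts() as $post) {
    $lazyNames[] = $lazy->authorById($post['author_id']);
}

// Eager loading: one query for the posts, one for all related authors.
$eager = new CountingDb();
$posts = $eager->allPosts();
$authorIds = array_unique(array_column($posts, 'author_id'));
$authors = $eager->authorsByIds($authorIds);
$eagerNames = array_map(fn(array $p): string => $authors[$p['author_id']], $posts);
```

With three posts the lazy version runs four queries and the eager version runs two; the gap grows linearly with the number of entities loaded.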

CRAP

There is a variant of CRUD known as Create Read Archive Purge (CRAP), which does not get anywhere near as much use as it should. In this approach, each Entity is not updated in place when modified. Instead, an entirely new copy of the Entity is stored in the database, along with some version identifier. That gives each Entity a history of its state over time, with a built in ability to review that history and revert to an earlier state.

No Entity is deleted; if an entity needs to be deleted, a new version of it is saved that has a "deleted" flag set to true. Any SQL that interacts with the Entity must then be written to exclude older versions and deleted versions, unless specifically instructed not to.

If the historical data of a given Entity is no longer valuable, or is not valuable after a period of time, a separate Purge command can remove old revisions, including removing deleted entities entirely. The time frame for such purges and whether they can be user-triggered varies with the implementation.
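As a rough illustration of the four operations, here is a sketch of a revision store. It is an assumption-laden toy: `RevisionStore` and its row shape are hypothetical, storage is an in-memory array rather than SQL, and the purge policy (keep only the latest live revision) is just one of many possible choices.

```php
<?php
declare(strict_types=1);

// Hypothetical revision store; a real one would be a table with
// (id, rev, deleted, ...data) columns.
final class RevisionStore
{
    /** @var list<array{id: int, rev: int, deleted: bool, title: string}> */
    private array $rows = [];

    // Create and "update" are the same operation: append a new revision.
    public function save(int $id, string $title, bool $deleted = false): int
    {
        $revs = array_column($this->history($id), 'rev');
        $rev = $revs === [] ? 1 : max($revs) + 1;
        $this->rows[] = ['id' => $id, 'rev' => $rev, 'deleted' => $deleted, 'title' => $title];
        return $rev;
    }

    // Archive: "deleting" just saves a new revision flagged as deleted.
    public function archive(int $id): void
    {
        $current = $this->latest($id);
        if ($current !== null) {
            $this->save($id, $current['title'], deleted: true);
        }
    }

    // Read: only the newest revision counts, and only if not deleted.
    public function read(int $id): ?array
    {
        $latest = $this->latest($id);
        return ($latest === null || $latest['deleted']) ? null : $latest;
    }

    /** All revisions of one entity, oldest first. */
    public function history(int $id): array
    {
        return array_values(array_filter($this->rows, fn(array $r): bool => $r['id'] === $id));
    }

    // Purge: drop old revisions, and drop deleted entities entirely.
    public function purge(): void
    {
        $keep = [];
        foreach ($this->rows as $row) {
            $latest = $this->latest($row['id']);
            if ($row['rev'] === $latest['rev'] && !$latest['deleted']) {
                $keep[] = $row;
            }
        }
        $this->rows = $keep;
    }

    private function latest(int $id): ?array
    {
        $history = $this->history($id);
        return $history === [] ? null : end($history);
    }
}

$store = new RevisionStore();
$store->save(1, 'Draft');
$store->save(1, 'Final');
$store->save(2, 'Obsolete');
$store->archive(2);

$current = $store->read(1);   // Newest revision of entity 1.
$gone = $store->read(2);      // Latest revision is flagged deleted.
$before = count($store->history(1)) + count($store->history(2));
$store->purge();
$after = count($store->history(1)) + count($store->history(2));
```

Every read path goes through `latest()`, which is the in-memory equivalent of every SQL query having to exclude old and deleted revisions.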

The advantage is, of course, the history and rollback ability. It's also relatively easy to extend it to include forward revisions, which are revisions that will become the active revision at some point in the future (either upon editorial approval or some time trigger).

The downside is the extra tracking required, which means every bit of SQL that interacts with a CRAP Entity needs to be aware of its CRAPpiness. Writing arbitrary custom SQL becomes more problematic in this case, as a query that forgets to account for old revisions or deleted entities could result in unexpected data. That is especially true with more complex relationships. It also implies questions like "should Entity A getting a new revision cause Entity B to get a new revision, too? Should Entity A point to Entity B, or a specific revision of Entity B?" All possible answers to those questions are valid in some situations but not others. There may also be performance considerations if there are many revisions of many Entities, although that is a solvable problem with smart database design.

Nonetheless, I would argue CRAP is still superior to CRUD in most editorial-centric environments (news websites, company sites, etc.).

Projections

An extension available to both CRUD and CRAP is Projections. Usually Projections are discussed in the context of CQRS or EventSourcing (see below), but there's no requirement that they only be used there.

A Projection is the fancy name for stored data that is derived from other stored data. When the primary data is updated, an automated process causes the projection to be updated as well. That automation could be in application logic or SQL triggers/stored procedures; I would even consider an SQL View (either virtual or materialized) to be a form of Projection.

Projections are useful when you want the read version of the data structured very differently than the write version, or want it presented in some way that is expensive to compute on-the-fly.

For example, if you want a list of all sales people, their weekly sales numbers, the percentage change from last week, ordered by sales numbers, that could be expensive to compute on the fly. It could also be complex, if that data has to be derived from individual sale records and those sale records are spread across multiple tables, and sales team information is similarly well normalized across multiple tables. Instead, either on a schedule or whenever a sale record is updated, some process can compute that data (either the whole table or just update the one record it needs to) and save it to a sales_leaderboard table. Viewing that information is then a super simple, super fast single-table SELECT query.

If that table ever becomes corrupted or out of date, or we just want to change its structure, the data can just be wiped and rebuilt from the existing primary data. Projections are always expendable. If not, they're not Projections.
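Here is a small sketch of the leaderboard case, assuming a hypothetical flat list of sale records as the primary data. In a real system the result would be written to the `sales_leaderboard` table; here the function just returns the rows so the rebuild logic is visible.

```php
<?php
declare(strict_types=1);

// Primary data: individual sale records (hypothetical shape).
$sales = [
    ['rep' => 'Ada',   'week' => 1, 'amount' => 100],
    ['rep' => 'Grace', 'week' => 1, 'amount' => 200],
    ['rep' => 'Ada',   'week' => 2, 'amount' => 150],
    ['rep' => 'Grace', 'week' => 2, 'amount' => 100],
    ['rep' => 'Ada',   'week' => 2, 'amount' => 50],
];

/**
 * Rebuilds the whole leaderboard projection for one week from primary data.
 *
 * @return list<array{rep: string, total: int, change_pct: float|null}>
 */
function buildLeaderboard(array $sales, int $week): array
{
    // Sum sale amounts per rep, per week.
    $totals = [];
    foreach ($sales as $sale) {
        $totals[$sale['rep']][$sale['week']] =
            ($totals[$sale['rep']][$sale['week']] ?? 0) + $sale['amount'];
    }

    $board = [];
    foreach ($totals as $rep => $byWeek) {
        $current = $byWeek[$week] ?? 0;
        $previous = $byWeek[$week - 1] ?? 0;
        $board[] = [
            'rep' => $rep,
            'total' => $current,
            // Percentage change is undefined if there were no sales last week.
            'change_pct' => $previous === 0 ? null : ($current - $previous) / $previous * 100.0,
        ];
    }

    // Highest sales first, as the leaderboard is meant to be read.
    usort($board, fn(array $a, array $b): int => $b['total'] <=> $a['total']);
    return $board;
}

// The projection is derived data: wipe it and rebuild it at any time.
$leaderboard = buildLeaderboard($sales, week: 2);
```

Because the function takes only primary data as input, throwing away the stored result and calling it again is always safe.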

A system can use many or a few Projections as needed, built in a variety of ways. As usual, there's more than one way to feed a cat. If heavily used, Projections form essentially the entire read model. There's no need to read Entities from the primary data, except for update purposes.

Technically, any search index (Elasticsearch, Solr, Meilisearch, etc.) is a Projection. There is no requirement that the Projection even be in SQL, just that it is expendable, rebuildable data in a form that is optimized for how it's going to be read.

CQRS

The next level in read/write separation is Command Query Responsibility Segregation (CQRS). CQRS works from the assumption that the read and write models are always separate.

Often, though not always, the write models are structured as command objects rather than as an Entity per se. That could be low-level (UpdateProduct command with the fields to change) or high-level (ApprovePost with a post ID).

The read models could be structured in an Entity-like way, but do not have to be. CQRS does not require using Projections, though they do fit well.
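A minimal sketch of that split might look like the following. `ApprovePost` comes from the example above; the handler, query service, `PostSummary` read model, and `PostStorage` are hypothetical names of mine, and the storage is an in-memory array rather than a database.

```php
<?php
declare(strict_types=1);

// Write side: commands describe intent, not entity state.
final class ApprovePost
{
    public function __construct(public readonly int $postId) {}
}

// Read side: an immutable view shaped for display, not for editing.
final class PostSummary
{
    public function __construct(
        public readonly int $id,
        public readonly string $title,
        public readonly string $status,
    ) {}
}

// Hypothetical storage shared by both sides; normally a database.
final class PostStorage
{
    /** @var array<int, array{title: string, approved: bool}> */
    public array $posts = [1 => ['title' => 'Hello', 'approved' => false]];
}

// Handles one command; this is the only place approval is written.
final class ApprovePostHandler
{
    public function __construct(private PostStorage $storage) {}

    public function handle(ApprovePost $command): void
    {
        if (!isset($this->storage->posts[$command->postId])) {
            throw new InvalidArgumentException('Unknown post');
        }
        $this->storage->posts[$command->postId]['approved'] = true;
    }
}

// Builds read models; it never modifies storage.
final class PostSummaryQuery
{
    public function __construct(private PostStorage $storage) {}

    public function find(int $id): PostSummary
    {
        $row = $this->storage->posts[$id];
        return new PostSummary($id, $row['title'], $row['approved'] ? 'approved' : 'pending');
    }
}

$storage = new PostStorage();
$query = new PostSummaryQuery($storage);

$before = $query->find(1);
(new ApprovePostHandler($storage))->handle(new ApprovePost(1));
$after = $query->find(1);
```

The read model and the command share no class, so either can change shape without touching the other.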

The advantage of CQRS is, of course, the flexibility that comes with having fully independent read and write models. That allows using the type system to enforce write invariants while having completely separate immutable read models. It also allows separating both read and writes from the underlying Entity definitions; a single update command may impact multiple entities, and a read/lookup can easily span entities.

The downside of CQRS is the added complexity that keeping track of separate read and write models entails. It requires great care to ensure you don't end up with a disjointed mess. Martin Fowler recommends only using it within one Bounded Context rather than the system as a whole (though he does not go into detail about what that means). If the read and write models are "close enough," CRUD with an occasional Projection may have less conceptual overhead to manage.

Event Sourcing

The most aggressive separation between read and write models is Event Sourcing. In Event Sourcing, there is no stored model. The primary data that gets written is just a history of "Events" that have happened. The entire data store is just a history of event objects, with some indexing support.

When loading an object (or "Aggregate" in Event Sourcing speak), the relevant Events are loaded from the store and a "current status" object is built on-the-fly and returned. In practice, in a well-designed system this process can be surprisingly fast. The Event stream also acts as a built-in log of all actions taken, ever.

Event Sourcing also leans very heavily on Projections. Projections can represent the current state of the system as of the most recent event, in whatever form is desired. Storing an Event can trigger a handler that updates Projections, sends emails, enqueues jobs, or anything else.

Importantly, events can be replayed. That means, for example, creating a new Projection requires only writing the routine that creates the projection, then rerunning the entire Event stream on it. It will then build the Projection appropriately. If the Projection is updated, migrating a projected database table is simple: Delete the old one, create the new one, rerun the Event stream. Every database table, search index, etc. except for the Event stream itself are disposable and can be thrown out and recreated at will.
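To illustrate loading an Aggregate and replaying for a new Projection, here is a toy sketch using the banking case. The event classes and both functions are hypothetical, and the "event store" is just an array; a real one would add ordering guarantees, indexing, and persistence.

```php
<?php
declare(strict_types=1);

// Events are immutable facts; these two are hypothetical banking events.
final class Deposited
{
    public function __construct(
        public readonly string $account,
        public readonly int $cents,
    ) {}
}

final class Withdrawn
{
    public function __construct(
        public readonly string $account,
        public readonly int $cents,
    ) {}
}

// The event stream is the only primary data.
$events = [
    new Deposited('acct-1', 5000),
    new Withdrawn('acct-1', 1200),
    new Deposited('acct-2', 700),
    new Deposited('acct-1', 300),
];

/**
 * Rebuilds the current balance of one account (the "Aggregate") by folding
 * over its events.
 */
function balanceOf(string $account, array $events): int
{
    $balance = 0;
    foreach ($events as $event) {
        if ($event->account !== $account) {
            continue;
        }
        $balance += match (true) {
            $event instanceof Deposited => $event->cents,
            $event instanceof Withdrawn => -$event->cents,
        };
    }
    return $balance;
}

/**
 * A Projection added after the fact: replay the whole stream to build it.
 *
 * @return array<string, int> Number of transactions per account.
 */
function transactionCounts(array $events): array
{
    $counts = [];
    foreach ($events as $event) {
        $counts[$event->account] = ($counts[$event->account] ?? 0) + 1;
    }
    return $counts;
}

$balance = balanceOf('acct-1', $events);
$counts = transactionCounts($events);
```

Nothing about `transactionCounts()` had to exist when the events were recorded, which is the point of being able to replay.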

The downside is that Event Sourcing, like CQRS, requires careful planning. It's a very different mental model, and not a good fit for all situations. Banking is the classic example of where it fits, and where a history of actions taken is the most important data. A typical editorial CMS, however, would be a generally poor fit for Event Sourcing, as most of what it's doing is very CRUD-ish. Nearly all events would be some variation on PostUpdated.

Depending on the complexity of the data, building reasonable Projections could be a challenge. If Entities/Aggregates are loaded from the Event stream, they may be easy or complex to reconstitute.

General advice

(This section is, of course, quite subjective.)

All of these models have their trade-offs, and pros/cons. For most standard applications, I would argue that CRUD-with-Projections is the least-bad approach. The ecosystem and known best practices are well established. Edge cases where the read and write models need to differ can often be handled as one-offs, if the system is designed with that in mind. That sort of edges it into CQRS space in limited areas, which is both helpful and risky if viewed as a slippery slope.

Even in a CRUD-based approach, it's possible to have slightly different objects for read and write. If the language supports it, the read objects can be immutable, while the write objects are mutable aside from select key fields (primary key, last-updated timestamp, etc.), which may even be omitted. The line between this split-CRUD approach and CQRS is somewhat fuzzy, though, so be mindful that you don't over-engineer CRUD when you should just use CQRS.

For workflow-heavy applications (like change-approval, or scheduled publishing, etc.), CRAP is likely worth the effort. The ability to have forward and backward revisions greatly simplifies many workflow approaches, and provides a nice audit trail.

Regardless of the approach chosen, it is virtually always worth the effort to define formal, well-typed data objects in your application to represent the models. Using anonymous objects, hashes, or arrays (depending on the language) is almost always going to cause maintenance issues sooner rather than later. Even if using CQRS or just queries that bypass a CRUD ORM, every set of records read from the database should be mapped into a well-typed defined object. That is inherently self-documenting, eliminates (or at least highlights as needing attention) many edge cases, provides a common, central place for in-memory handling of those edge cases (eg, null handling), and so forth.
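As a sketch of what that mapping can look like, assuming a hypothetical `Customer` read model and rows shaped the way a database driver commonly returns them (strings and nulls):

```php
<?php
declare(strict_types=1);

// A well-typed read model; the field names are hypothetical.
final class Customer
{
    public function __construct(
        public readonly int $id,
        public readonly string $email,
        public readonly ?DateTimeImmutable $lastLogin,
    ) {}

    // One central place to turn a raw row into a valid object.
    public static function fromRow(array $row): self
    {
        return new self(
            id: (int) $row['id'],
            email: (string) $row['email'],
            // NULL in the database becomes an explicit null, not a missing key.
            lastLogin: isset($row['last_login'])
                ? new DateTimeImmutable($row['last_login'])
                : null,
        );
    }
}

// Rows as a database driver might return them: everything is a string or null.
$rows = [
    ['id' => '1', 'email' => 'ada@example.com', 'last_login' => '2025-08-01 09:30:00'],
    ['id' => '2', 'email' => 'grace@example.com', 'last_login' => null],
];

$customers = array_map(Customer::fromRow(...), $rows);
```

Code that receives a `Customer` can rely on `$id` being an integer and has to acknowledge that `$lastLogin` may be null, neither of which an anonymous array would tell it.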

Additionally, any database interaction should be confined to select, dedicated services that have exclusive responsibility for interacting with the database and turning results into proper model objects. This is true regardless of the model used.

If doing CRUD or CQRS, it may be tempting to optimize updates to only update individual fields that need updating rather than updating an entire Entity at once, including unnecessary fields. I would argue that, in most cases, this is a waste of effort. Modern SQL databases are quite fast and almost certainly smarter than you are when it comes to performance. If you are using a well-established ORM that already does that, it's fine, but if rolling your own the effort involved is rarely worth it. At that point, you're almost merging CRUD and CQRS commands anyway.

Crell/Serde 1.5 released 15 Jul 2025 10:01 AM (7 months ago)

It's amazing what you can do when someone is willing to pay for the time!

There have been two new releases of Crell/Serde recently, leading to the latest, Serde 1.5. This is an important release, not because of how much is in it but because of what major things are in it.

That's right, Serde now has support for union, intersection, and compound types! And it includes "array serialized" objects, too.

mixed fields

A key design feature of Serde is that it is driven by the PHP type definitions of the class being serialized/deserialized. That works reasonably well most of the time, and is very efficient, but can be a problem when a type is mixed. When serializing, we can just ignore the type of the property and use the type of the value. Easy enough. When deserializing, though, what do you do? In order to support non-normalized formats, like streaming formats, the incoming data is opaque.

The solution is to allow Deformatters to declare, via an interface, that they can derive the type of the value for you. Not all Deformatters can do that, depending on the format, but all of the array-oriented Deformatters (json, yaml, toml, array) are able to, and that's the lion's share of format targets. Then when deserializing, if we hit a mixed field, Serde delegates to the Deformatter to tell it what the type is. Nice.

Sometimes that's not enough, though. Especially if you're trying to deserialize into a typed object, just knowing that the incoming data is array-ish doesn't help. Serde 1.4 therefore introduced a new type field for mixed values: #[MixedField]. MixedField takes one argument, $suggestedType, which is the object type that should be used for deserialization. If the Deserializer says the data is an array, then it will be upcast to the specified object type.

    class Message
    {
        public string $message;

        #[MixedField(Point::class)]
        public mixed $result;
    }

When serializing, the $result field will serialize as whatever value it happens to be. When deserializing, scalars will be used as is while an array will get converted to a Point class.

Unions and compound types

PHP has supported union types since 8.0, and intersection types since 8.1, and mixing the two since 8.2. But they pose a similar challenge to serialization.

The way Serde 1.5 now handles that is to simply fold compound types down to mixed. As far as Serde is concerned, anything complex is just "mixed," and we just defined above how that should be handled. That's... remarkably easy. Neat.

If the type is a union, specifically, then there's a little more we can do.

First, if a union type doesn't specify a suggestedType but the value is array-ish, it will iterate through the listed types and pick the first class or interface listed. That won't always be correct, but since the most common union type will likely be something like string|array or string|SomeObject, it should be sufficient in most cases. If not, specifying the $suggestedType explicitly is recommended.
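The "pick the first class or interface listed" rule can be sketched with plain PHP reflection. To be clear, this is not Serde's actual code: `firstClassInUnion()`, `Holder`, and this `Point` are hypothetical, and the sketch only shows the general idea using the standard reflection API.

```php
<?php
declare(strict_types=1);

final class Point
{
    public function __construct(public int $x = 0, public int $y = 0) {}
}

final class Holder
{
    public string|Point $value = '';
    public int|string $scalar = 0;
}

/**
 * Returns the first class or interface named in a property's union type,
 * or null if the type is not a union or lists only built-in types.
 */
function firstClassInUnion(string $class, string $property): ?string
{
    $type = (new ReflectionProperty($class, $property))->getType();
    if (!$type instanceof ReflectionUnionType) {
        return null;
    }
    foreach ($type->getTypes() as $member) {
        // isBuiltin() is true for string, int, array, etc.
        if ($member instanceof ReflectionNamedType && !$member->isBuiltin()) {
            return $member->getName();
        }
    }
    return null;
}

$target = firstClassInUnion(Holder::class, 'value');
$none = firstClassInUnion(Holder::class, 'scalar');
```

For `string|Point` this finds `Point`, which is the type an array-ish value would be upcast to; for a union of only scalars there is nothing to pick.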

Second, a separate #[UnionField] attribute extends MixedField and adds the ability to specify a nested TypeField for each of the types in the list. The most common use for that would be for an array, like so:

class Record

{

public function __construct(

#[UnionField('array', [

'array' => new DictionaryField(Point::class, KeyType::String)]

)]

public string|array $values,

) {}

}

In this case, if the deserialized value is a string, it gets read as a string. If it's an array, then it will be read as though it were an array field with the specified #[DictionaryField] on it instead. That allows upcasting the array to a list of Point objects (in this case), and validating that the keys are strings.

Improved flattening, now for the top level

Another unrelated but very cool fix addresses a long-standing bug when flattening array-of-object properties. Previously, their type was not respected. Now it is. What that means in practice is you can now do this:

[

{"x": 1, "y": 2},

{"x": 3, "y": 4}

]

class PointList

{

public function __construct(

#[SequenceField(arrayType: Point::class)]

public array $points,

) {}

}

$json = $serde->serialize($pointList, format: 'json');

$serde->deserialize($json, from: 'json', to: PointList::class);

Boom. Instant top-level array. Previously, this behavior was only available when serializing to/from CSV, which had special handling for it. Now it's available to all formats.

New version requirements

Because compound types were only introduced in PHP 8.2, Serde 1.5 now requires PHP 8.2 to run. It will not run on 8.1 anymore. Technically it would have been possible to adjust it in a way that would still run on 8.1, but it was a hassle, and according to the Packagist stats for Crell/Serde the only PHP 8.1 user left is my own CI runner. So, yeah, this shouldn't hurt anyone. :-)

Special thanks

These improvements were sponsored by my employer, MakersHub. Quite simply, we needed them, so I added them. One of the advantages of eating your own dogfood: You have an incentive to make it better.

Is your company using an OSS library? Need improvements made? Sponsor them. Either submit a PR yourself or contract the maintainer to do so, or just hire the maintainer. All of this great free code costs time and money to make. Kudos to those companies that already do sponsor their Open Source tool chain.

Mildly Dynamic websites are back 15 Mar 2025 9:55 PM (11 months ago)

Mildly Dynamic websites are back

I am pleased to report that my latest side project, MiDy, is now available for alpha testing!

MiDy is short for Mildly Dynamic. Inspired by this blog post, MiDy tries to sit "in between" static site generators and full-on blogging systems. It is optimized for sites that are mostly static and only, well, "mildly dynamic." SMB websites, blogs, agency sites, and other use cases where frankly, 90% of what you need is markdown files and a template engine... but you still need that other 10% for dynamic listings, form submission, and so on.

MiDy offers four kinds of pages:

- Markdown pages. These should be familiar to anyone that's worked with any "edit file on disk" publishing tool before.

- Latte templates. Latte is used as the main template engine for MiDy, but you can also create arbitrary pages as Latte templates. Want to have a one-off page where you control the HTML and CSS, but still inherit the overall page layout and theme? Great! Put a Latte template file in your routes folder and you're done.

- Static files. Self-explanatory.

- PHP. For the few cases where you need custom logic, you have a PHP class that can do whatever you'd like. It's still pathed based on the file system, just like any other file, but you get separate handlers for each HTTP method; and you get full arbitrary DI support as well.

The README covers more details, though as it's still at version 0.2.0 the documentation is still a work in progress. And of course, it's built for PHP 8.4 and takes full advantage of many new features of the language, like property hooks and asymmetric visibility. Naturally.

I will be converting this site over to MiDy soon. Gotta dog-food my own site, of course. (And finally get rid of Drupal.)

While I wouldn't yet recommend it as production ready, it's definitely ready for folks to try out and give feedback on, and to run test sites or personal sites on. I don't expect any API changes that would impact content at this point, but like I said, it's still alpha so caveat developor.

If you have feedback, please either open an issue or reach out to me on the PHPC Discord server. If you want to send a PR of your own, please open an issue first to discuss it.

I'll be posting more blog posts on MiDy coming up. Whether before or after I move this site to it, we'll see. :-)

Self hosted photo albums 12 Nov 2024 1:22 PM (last year)

Self hosted photo albums

I've long kept my photo backups off of Google Cloud. I've never trusted them to keep them safe, and I've never trusted them to not do something with them I didn't want. Like, say, ingest them into AI training without telling me. (Which, now, everyone is doing.) Instead, I've backed up my photos to my own Nextcloud server, manually organized them, and let them get backed up from there.

More recently, I've decided I really need a proper photo album tool to carry around "wallet photos" of family and such to show people. A few years back I started building my own application for that in Symfony 4, but I ran into some walls and eventually abandoned the effort. This time, I figured I'd see what was available on the market for self-hosted photo albums for me and my family to use.

Strap yourself in, because this is a really depressing story (with a happy ending, at least).

I reviewed 7 self-hosted photo album tools, after checking various review sites for their top-ten lists. Of those 7:

- 3 were in PHP, 2 were in JavaScript or TypeScript, and 2 were in Go.

- 2 used the MIT license, 2 used the GPL, 1 used AGPL, and 2 had broken non-free licenses.

- I managed to get one working. 1. Uno.

- Most really pushed you to use their Docker Compose setup to install, none of which actually worked.

Let's have a look at the mess directly.

PiGallery 2 (https://bpatrik.github.io/pigallery2/)

Language: TypeScript License: MIT

PiGallery 2 is intended as a light-weight, directory-based photo album. The recommended way to install it is to use their Docker compose file and nginx conf file... which you have to just manually copy out of Git. (Seriously?) And when I tried to get that to run locally, I could never connect to it successfully. There was something weird with the port configuration, and I wasn't able to quickly figure it out. If I can't get the "easy" install to work, I'm not interested.

Piwigo (https://piwigo.org/)

Language: PHP/MySQL License: GPLv2

Unlike many on here, it doesn't provide a Docker image, which is fine, so I set one up using phpdocker.io. Unfortunately, its net installer crashed when I tried to use it, without useful errors. Trying to install manually resulted in PHP null-value errors from the install script. When I looked at the install script, I found dozens upon dozens of file system operations with the @ operator on them to hide errors.

At that point I gave up on Piwigo.

Coppermine (https://coppermine-gallery.net/)

Language: PHP/MySQL License: GPL, version unspecified

When I first visited the Coppermine website, I got an error that their TLS certificate had expired a week and a half before. How reassuring.

Skipping past that, I was greeted with a website with minuscule text, with a design dating from the Clinton presidency. How reassuring.

Right on the home page, it says Coppermine is compatible all the way down to PHP 4.2, and supposedly up to 8.2. For those not familiar with PHP, 4.2 was released in 2002, only slightly after the Clinton presidency. PHP has evolved, um, a lot in 22 years, and most developers today view PHP 4 as an embarrassment to be forgotten. If their code is still designed to run on 4.2, it means they're ignoring literally 20 years of language improvements, including security improvements. How reassuring.

Oh, and the installation instructions, linked in the menu, are a direct link to some random forum post from 2017. How reassuring.

At this point I was so reassured that I Noped right out and didn't even bother trying to install it.

Lomorage (https://lomorage.com/)

Language: JavaScript. (Not TypeScript, raw JS as far as I can tell.) License: None specified.

Although this app showed up on a few top-ten lists, its license is not specified, and installation only offers Windows and Mac. (Really?) The "others" section eventually lets you get to an Ubuntu section, where their recommendation is to install it via... an Apt remote. Which is an interesting choice.

It has a GitHub repo, but that has no license listed at all. Which technically means it's not licensed at all, and so downloading it is a felony. (Yes, copyright law is like that.)

Being a good Netizen, I reached out to the company through their Contact form to ask them to clarify. They eventually responded that, despite some parts of the code being in public GitHub repos, none of it is Open Source.

Noping right out of that one.

PhotoPrism (https://www.photoprism.app/)

Language: Go License: It's complicated

I actually managed to get this one to run! This one also "installs" via Docker Compose, but it actually worked. This is the only one of the apps I reviewed that I could get to work. Mind you, as a Go app I cannot fathom why it needs a container to run, since Go compiles to a single binary.

Their system requirements are absurdly high. Quoting from their site, "you should host PhotoPrism on a server with at least 2 cores, 3 GB of physical memory, and a 64-bit operating system." What the heck are they doing? It's Go, not the JVM.

In quick experimentation, it seemed decent enough. The interface is snappy and supports uploading directly from the browser.

However, I then ran into a pickle. The GitHub repository says the license is AGPL, which I am fine with. However, in the app itself is a License page that is not even remotely close to Free Software anything, listing mainly all the ways you cannot modify or redistribute the code.

I filed an issue on their repository about it, and got back a rather blunt comment that only the "Community Edition" is AGPL, which is a different download. The supported version is not.

Noping right out of this one, too.

Photoview (https://photoview.github.io/)

Language: Go, with TypeScript for front-end License: AGPLv3

Another app that wants you to install via Docker Compose. And when I tried to do so, I got a bunch of errors about undefined environment variables. The install documentation says nothing about setting them, and it's not clear how to do so, so at this point I gave up.

Lychee (https://github.com/LycheeOrg/Lychee)

Language: PHP License: MIT

Lychee is built with Laravel, which I don't care for but I have used very good Laravel-based apps in the past so I had high hopes. It talks about using Docker, but unlike the others here doesn't provide a docker-compose file, just some very long Docker run commands.

Their primary instructions are to git-clone the project, then run composer install and npm. Unfortunately, phpdocker.io is still built using Ubuntu 22.04, which has an ancient version of npm in it, and I didn't want to bother trying to figure out how to upgrade it.

Lychee did offer a demo container, which uses SQLite. That I was able to get to run successfully. However, for unclear reasons it wouldn't actually show any images.

At this point, I gave up.

So what now?

Rather disappointed in the state of the art, I decided to take a different approach. As I mentioned, I use Nextcloud to store all my images. Nextcloud has a photo app, but the last time I used it, it was very basic, and pretty bad. That was a few years ago, though, so I went searching.

Turns out, not only has Nextcloud Photos improved considerably, there's also another extension app on it called Memories. On paper, it looks like it does everything I'm after. A timeline feed, custom albums that don't require duplicating files, you can edit the Exif data of the image to show a title and description, plus some fancy extras like mapping geo information to OpenStreetMap and AI-based tagging, if you have the right additional apps installed. So would it work?

Turns out... yes. The setup was slightly fiddly, but mostly because it took a while to download all the map data and index a half-million photos. Once it did that, though... it just worked. It does almost everything I was looking for. I haven't figured out how to reorder albums or pictures within an album, and it looks like it doesn't support sub-albums. But otherwise, it does what I need. It even has a mobile app (free) that lets me show off selected pictures on my phone, which is what I was ultimately after.

I have always had a love/hate relationship with Nextcloud. In concept, I love it. Self-hosted file server and application hub? Sign me up! Despite being a PHP dev of 25 years, I've never quite understood why PHP made sense for it, though. And upgrades have always been a pain, and frequently break. But its functionality is just so useful. Apps are hit or miss, ranging from first-rate (like Memories) to meh.

But in this case, it ended up being both the cleanest and most capable option, as well as the easiest to get going, provided I already had a Nextcloud server. So, solution found. I am now a Memories user, and will be setting up accounts for the rest of the family, too.

Property hooks in practice 22 Oct 2024 8:32 PM (last year)

Property hooks in practice

Two of the biggest features in the upcoming PHP 8.4 are property hooks and asymmetric visibility (or "aviz" for short). Ilija Tovilo and I worked on them over the course of two years, and they're finally almost here!

OK, so now what?

Rather than just reiterate what's in their respective RFCs (there are many blog posts that do that already), today I want to walk through a real-world application I'm working on as a side project, where I just converted a portion of it to use hooks and aviz. Hopefully that will give a better understanding of the practical benefits of these tools, and where there may be a rough edge or two still left.

One of the primary use cases for hooks is to not use them: They're there in case you need them, so you don't need to make boilerplate getter/setter methods "just in case." However, that's not their only use. They're also really nice when combined with interface properties, and delegation. Let's have a look.

The use case

The project I'm working on includes a component that represents a file system, where each Folder contains one or more Page objects. Pages are keyed by the file base name, and may be composed of one or more PageFiles, which correspond to a physical file on disk.

So, for instance, form.latte and form.php would both be represented by PageFiles, and grouped together into an AggregatePage, form. (Do those file names suggest what I'm doing...?) However, if there's only a single news.html file, then it would be just a PageFile on its own. AggregatePage and PageFile both implement the same Page interface, which includes various metadata derived from the file (title, summary, tags, last-modified time, etc.)

Additionally, a Folder can be represented by a page inside it named index. That means a Folder also implements Page. As you can imagine, this makes the Page interface rather important. But it's actually two interfaces, because there's also PageInformation, which has the bare metadata and a child interface, Page, which adds logic around the file multiplexing. The data about a folder is also lazy-loaded and cached for performance, which means we need to handle that lazy-loading transparently.

(Why am I doing something so weird? It makes routing easier. Stay tuned for more details.)

The 8.3 version

This is exactly the situation where interfaces shine. However, in PHP 8.3, interfaces are limited to methods. That means in PHP 8.3, the various interfaces look like this:

interface Hidable

{

public function hidden(): bool;

}

interface PageInformation extends Hidable

{

public function title(): string;

public function summary(): string;

public function tags(): array;

public function slug(): ?string;

public function hasAnyTag(string ...$tags): bool;

public function hasAllTags(string ...$tags): bool;

}

interface Page extends PageInformation

{

public function routable(): bool;

public function path(): string;

/**

* @return array

*/

public function variants(): array;

public function variant(string $ext): ?Page;

public function getTrailingPath(string $fullPath): array;

}

Several of those are quite reasonable. However, nearly any of the methods that have no arguments... don't really need to be methods. Conceptually, the "title" of a page is just data about it. It's an aspect of the page, not an operation. We're used to capturing that as an operation (method), because that's all PHP let us do historically: Properties are basic, and if you expose them directly you lose a lot of flexibility, as well as safety. You cannot have interesting logic for them, and you cannot prevent someone from setting it externally (unless you make it readonly, which has its own challenges). The tools don't let us do it right.

For example, I have a degenerate case implementation called BasicPageInformation, like so:

readonly class BasicPageInformation implements PageInformation

{

public function __construct(

public string $title = '',

public string $summary = '',

public array $tags = [],

public ?string $slug = null,

public bool $hidden = false,

) {}

public function title(): string

{

return $this->title;

}

public function summary(): string

{

return $this->summary;

}

public function tags(): array

{

return $this->tags;

}

public function slug(): ?string

{

return $this->slug;

}

public function hidden(): bool

{

return $this->hidden;

}

public function hasAnyTag(string ...$tags): bool { ... }

public function hasAllTags(string ...$tags): bool { ... }

}

That's... a lot of code. 5 methods that do nothing but expose a primitive property. Of course, I also have the properties public, as the class is readonly. But I cannot rely on that because the interface cannot guarantee the presence of the properties, only the methods. So even though I could just have public properties in this case, they're still not reliable.

Enter Interface Properties

A part of the property hooks RFC, interface properties really deserve to be billed as their own third feature. They integrate well with hooks and aviz, and make those better, but they're a standalone feature.

The change in this case is pretty simple:

interface Hidable

{

public bool $hidden { get; }

}

interface PageInformation extends Hidable

{

public string $title { get; }

public string $summary { get; }

public array $tags { get; }

public ?string $slug { get; }

public bool $hidden { get; }

public function hasAnyTag(string ...$tags): bool;

public function hasAllTags(string ...$tags): bool;

}

interface Page extends PageInformation

{

public bool $routable { get; }

public string $path { get; }

public function variants(): array;

public function variant(string $ext): ?Page;

public function getTrailingPath(string $fullPath): array;

}

Now, instead of read-only methods to implement, the interfaces require readable properties. In this case we don't need to set anything, so the properties are marked to only require a get operation. Whether we satisfy that requirement with a public property, a public readonly property, a public private(set) property, or a virtual property with just a get hook is entirely up to us. In fact, we'll do all of the above.

Right off the bat, that makes BasicPageInformation shorter and easier:

readonly class BasicPageInformation implements PageInformation

{

public function __construct(

public string $title = '',

public string $summary = '',

public array $tags = [],

public ?string $slug = null,

public bool $hidden = false,

) {}

public function hasAnyTag(string ...$tags): bool { ... }

public function hasAllTags(string ...$tags): bool { ... }

}

In this case, simple readonly properties are all we need. We can conform to the interface with about 10 fewer lines of boring, boilerplate code. Neat.
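For comparison, here is a small sketch of all four ways a class can satisfy a get-only interface property. It requires PHP 8.4, and every name in it (HasTitle, PlainTitle, and so on) is made up for illustration rather than taken from the project.

```php
<?php

declare(strict_types=1);

// Requires PHP 8.4. All names here are illustrative, not from the project.
interface HasTitle
{
    public string $title { get; }
}

// 1. A plain public property.
final class PlainTitle implements HasTitle
{
    public string $title = 'Plain';
}

// 2. A public readonly property.
final class ReadonlyTitle implements HasTitle
{
    public function __construct(public readonly string $title) {}
}

// 3. A public-read, private-write property (asymmetric visibility).
final class AvizTitle implements HasTitle
{
    public private(set) string $title = 'Aviz';
}

// 4. A virtual property with only a get hook and no backing storage.
final class VirtualTitle implements HasTitle
{
    public string $title { get => strtoupper('virtual'); }
}

// Callers only depend on the interface, never on how the property is backed.
function describe(HasTitle $page): string
{
    return $page->title;
}

$titles = array_map(describe(...), [
    new PlainTitle(),
    new ReadonlyTitle('Readonly'),
    new AvizTitle(),
    new VirtualTitle(),
]);
```

The calling code is identical in all four cases, which is the whole point: the interface promises readability and leaves the storage strategy to each implementation.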

Where it gets more interesting is the other Page implementations.

The PageFile

In 8.3, PageFile looks like this (stripping out irrelevant bits for now to save space):

readonly class PageFile implements Page

{

public function __construct(

public string $physicalPath,

public string $logicalPath,

public string $ext,

public int $mtime,

public PageInformation $info,

) {}

public function title(): string

{

return $this->info->title()

?: ucfirst(pathinfo($this->logicalPath, PATHINFO_FILENAME));

}

public function summary(): string

{

return $this->info->summary();

}

// tags(), slug(), and hidden() all look exactly the same.

public function path(): string

{

return $this->logicalPath;

}

public function routable(): true

{

return true;

}

// ...

}

The PageFile delegates to an inner PageInformation object, and handles some defaults and extra logic. It works, but as you'll note, it's so verbose I didn't want to ask you to read such a long code sample.

In 8.4, we can remove those methods and instead use properties.

class PageFile implements Page

{

public private(set) string $title {

get => $this->title ??=

$this->info->title

?: ucfirst(pathinfo($this->logicalPath, PATHINFO_FILENAME));

}

public string $summary { get => $this->info->summary; }

public array $tags { get => $this->info->tags; }

public string $slug { get => $this->info->slug ?? ''; }

public bool $hidden { get => $this->info->hidden; }

public private(set) bool $routable = true;

public string $path { get => $this->logicalPath; }

public function __construct(

public readonly string $physicalPath,

public readonly string $logicalPath,

public readonly string $ext,

public readonly int $mtime,

public readonly PageInformation $info,

) {}

// The boring methods omitted.

}

Much more compact, much more readable, much easier to digest. In this case, we're using hooks to create virtual properties, which have no internal storage at all. There is no "slug" slot in the memory of PageFile. Internally to the engine, it still looks and acts like a method. Because most of the properties are virtual, we don't need to bother with the set side, as it will be an engine error to even try. There are two special cases, however.

First, $routable is hard-coded to true. We can do that. Just... not with readonly, which cannot have a default value. We'd have to define it un-initialized and then manually initialize it in the constructor, which is too much work. Now, however, we can set it to public private(set) and give it a default value. In theory the class could still modify that property internally, but it's my class and I know I'm not doing that, so there's nothing to worry about.

Second, $title has some non-trivial default value logic. I don't want to run that multiple times, so it's cached onto the property itself. On subsequent calls, $this->title will have a value, so it will just get returned. That makes $title a "backed property," meaning there is a set operation. But we don't want anyone to set the title externally, so again we make it private(set).

Also note that hooked properties cannot be readonly. That means the class cannot be readonly, and the individual promoted constructor properties need to be marked readonly instead. (We could just as easily have made them private(set). It would have the same effect in this case.)
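The caching trick on $title is easy to verify in isolation. The sketch below requires PHP 8.4 and uses an invented CachedTitle class with a $computations counter that exists only to show how often the fallback logic runs; neither is part of the real project.

```php
<?php

declare(strict_types=1);

// Requires PHP 8.4. CachedTitle and its members are illustrative names only.
final class CachedTitle
{
    // Counts how often the fallback logic runs, purely for demonstration.
    public private(set) int $computations = 0;

    public private(set) string $title {
        // The backing value starts uninitialized, so ??= computes once and stores it.
        get => $this->title ??= $this->deriveTitle();
    }

    public function __construct(private readonly string $logicalPath) {}

    private function deriveTitle(): string
    {
        $this->computations++;
        return ucfirst(pathinfo($this->logicalPath, PATHINFO_FILENAME));
    }
}

$page = new CachedTitle('/blog/news.html');
$first = $page->title;
$second = $page->title;
```

Both reads return the same string, but deriveTitle() only runs on the first one. Because the hook references $this->title, the property is backed, which is why it needs private(set) to stay read-only from the outside.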

The Folder

The Folder object is even more interesting. It does a number of things that are off-topic for us here, so I'll hand-wave over them and focus on the property refactoring.

In PHP 8.3, Folder works roughly like this:

class Folder implements Page, PageSet, \IteratorAggregate

{

public const string IndexPageName = 'index';

private FolderData $folderData;

public function __construct(

public readonly string $physicalPath,

public readonly string $logicalPath,

protected readonly FolderParser $parser,

) {}

public function routable(): bool

{

return $this->indexPage() !== null;

}

public function path(): string

{

return str_replace('/index', '', $this->indexPage()?->path() ?? $this->logicalPath);

}

public function variants(): array

{

return $this->indexPage()?->variants() ?? [];

}

public function variant(string $ext): ?Page

{

return $this->indexPage()?->variant($ext);

}

public function title(): string

{

return $this->indexPage()?->title()

?? ucfirst(pathinfo($this->logicalPath, PATHINFO_FILENAME));

}

public function summary(): string

{

return $this->indexPage()?->summary() ?? '';

}

// tags(), slug(), and hidden() omitted as they're just like summary().

public function all(): iterable

{

return $this->folderData()->all();

}

public function indexPage(): ?Page

{

return $this->folderData()->indexPage;

}

protected function folderData(): FolderData

{

return $this->folderData ??= $this->parser->loadFolder($this);

}

// Various other methods omitted.

}

(Although not relevant here, PageSet is an interface for a collection of pages. It extends Countable and Traversable, and adds a few other operations like filter() and paginate(). None of its methods are relevant to hooks, though, so we will skip over that.)

That's a lot of code for what is ultimately a very simple design: A folder is given a path that it represents. (Ignore the physical vs logical paths for now, that's also not relevant.) It lazily builds a folderData value that is a collection of Pages the Folder contains. One of those pages may be an index page, in which case the Folder can be treated the same as its index page. If not, there are reasonable defaults.

But that's a lot of dancing around. Let's see if we can simplify it using PHP 8.4.

class Folder implements Page, PageSet, \IteratorAggregate

{

public const string IndexPageName = 'index';

protected FolderData $folderData { get => $this->folderData ??= $this->parser->loadFolder($this); }

public ?Page $indexPage { get => $this->folderData->indexPage; }

public private(set) string $title {

get => $this->title ??=

$this->indexPage?->title

?? ucfirst(pathinfo($this->logicalPath, PATHINFO_FILENAME));

}

public private(set) string $summary { get => $this->summary ??= $this->indexPage?->summary ?? ''; }

public private(set) array $tags { get => $this->tags ??= $this->indexPage?->tags ?? []; }

public private(set) string $slug { get => $this->slug ??= $this->indexPage?->slug ?? ''; }

public private(set) bool $hidden { get => $this->hidden ??= $this->indexPage?->hidden ?? true; }

public bool $routable { get => $this->indexPage !== null; }

public private(set) string $path { get => $this->path ??= str_replace('/index', '', $this->indexPage?->path ?? $this->logicalPath); }

public function __construct(

public readonly string $physicalPath,

public readonly string $logicalPath,

protected readonly FolderParser $parser,

) {}

public function count(): int

{

return count($this->folderData);

}

public function variants(): array

{

return $this->indexPage?->variants() ?? [];

}

public function variant(string $ext): ?Page

{

return $this->indexPage?->variant($ext);

}

public function all(): iterable

{

return $this->folderData->all();

}

// Various other methods omitted.

}

Now, we've done a few things.

- folderData was already a property, and a method. You had to do that if you wanted caching. Now, they're combined into a single lazy-initializing, caching property. It's still protected, though.

- The indexPage was always just a silly little wrapper around folderData. Now that wrapper is even thinner, in a property. Code calling it can just blindly assume it's there and use it safely.

- The various other simple data from Page/PageInformation are also now just properties. Also, it's super easy for us to cache them so defaults don't need to be handled again in the future. As before, we make the properties private(set) so they're read-only to the outside world without any of the shenanigans of readonly.

- Features like null-coalesce assignment, null-safe method calls, and shortened ternaries make the code overall really nice and compact. (That's not new in PHP 8.4, I just like them.)

In the end, we have less code, more self-descriptive code, and no loss in flexibility. Score! The performance should be about a wash; hooks cost very slightly more than a method call, but not enough that you'll notice a difference.

Declaration interfaces

Another place where interface properties came in handy is in my "File Handlers." The interface for those in PHP 8.3 looks like this:

interface PageHandler

{

public function supportedMethods(): array;

public function supportedExtensions(): array;

public function handle(ServerRequestInterface $request, Page $page, string $ext): ?RouteResult;

}

supportedMethods() and supportedExtensions() are both, well, boring. Those methods will, 95% of the time, just return a static array value. However, the other 5% of the time they will need some minimal logic. That means they cannot be attributes, and have to be methods.

Which means most implementations have this verbose nonsense:

readonly class MarkdownLatteHandler implements PageHandler

{

public function __construct( /* ... */) {}

public function supportedMethods(): array

{

return ['GET'];

}

public function supportedExtensions(): array

{

return ['md'];

}

// ...

}

Which is like... why?

In PHP 8.4, interface properties let us shorten both the interface and implementations to this:

interface PageHandler

{

public array $supportedMethods { get; }

public array $supportedExtensions { get; }

public function handle(ServerRequestInterface $request, Page $page, string $ext): ?RouteResult;

}

class MarkdownLatteHandler implements PageHandler

{

public private(set) array $supportedMethods = ['GET'];

public private(set) array $supportedExtensions = ['md'];

public function __construct(/* ... */) {}

// ...

}

Much shorter and easier! We can just declare the properties directly, with values, and keep them private-set, then never set them. It's marginally faster, too, as there's no function call involved (though in practice it doesn't matter). We don't even need hooks most of the time, just aviz!

And in that other 5%, well, we can use hooks just as well:

class StaticFileHandler implements PageHandler

{

public private(set) array $supportedMethods = ['GET'];

public array $supportedExtensions {

get => array_keys($this->config->allowedExtensions);

}

public function __construct(

/* ... */

private readonly StaticRoutes $config,

) {}

}

One more thing...

There's one other place where PageInformation gets used, and where PHP 8.4's new features help out in hilarious ways.

Another task this project does is loading Markdown files off disk, with YAML frontmatter (which is, you guessed it, PageInformation's properties). The way I'm doing so is to load the file, rip off the YAML frontmatter, and deserialize that into a MarkdownPage object using Crell/Serde. Serde creates an object by bypassing the constructor and then populating it, but one thing that won't be populated is the content property. That gets set by just writing to it afterward.

The relevant loading code looks like this (abbreviated):

public function load(string $file): MarkdownPage|MarkdownError

{

$fileSource = file_get_contents($file);

if ($fileSource === false) {

return MarkdownError::FileNotFound;

}

[$header, $content] = $this->extractFrontMatter($fileSource);

$document = $this->serde->deserialize($header, from: 'yaml', to: MarkdownPage::class);

$document->{$this->documentStructure->contentField} = $content;

return $document;

}

(The property to write the content to is configurable via attributes, for reasons unrelated to the topic at hand.) Problem: That means the content property needs to be publicly writable, which is generally not ideal. Technically we could use a bound closure to dance around that and set it from private scope, but PHP 8.4 lets us do something even more wild:

class MarkdownPage implements PageInformation

{

public function __construct(

#[Content]

public(set) readonly string $content,

public readonly string $title = '',

public private(set) string $summary = '' { get => $this->summary ?: $this->summarize(); },

public readonly string $template = '',

public readonly array $tags = [],

public readonly ?string $slug = null,

public readonly bool $hidden = false,

public readonly array $other = [],

) {}

private function summarize(): string { ... }

// And other stuff.

}

(I'm skipping over the fact that in 8.3 we needed a bunch of extra do-nothing methods, as we've already discussed those benefits.)

That's right. I have found a use case for public(set) readonly! Really, no one is more surprised at this than I am. With this configuration, $content can be set only once, but it can be set externally. Trying to set it a second time, from anywhere, results in an error. (Yes, we could have just used a bound closure, but this is more fun.)
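Here is a stripped-down sketch of that write-once behavior, assuming PHP 8.4. PageSketch is a made-up class, and ReflectionClass::newInstanceWithoutConstructor() only stands in for "a deserializer that bypasses the constructor"; it is not a claim about how Serde works internally.

```php
<?php
declare(strict_types=1);

final class PageSketch
{
    public function __construct(
        public(set) readonly string $content,
        public readonly string $title = '',
    ) {}
}

// Mimic a deserializer: create the object without running the constructor,
// which leaves both promoted properties uninitialized.
$page = (new ReflectionClass(PageSketch::class))->newInstanceWithoutConstructor();

// The first external write succeeds, because $content is still uninitialized.
$page->content = 'Hello, world';

// A second write fails: readonly still means "write once".
$secondWriteError = null;
try {
    $page->content = 'Something else';
} catch (Error $e) {
    $secondWriteError = $e->getMessage();
}

// $title is plain readonly, so code outside the class cannot initialize it at all.
$titleWriteError = null;
try {
    $page->title = 'Nope';
} catch (Error $e) {
    $titleWriteError = $e->getMessage();
}
```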

Also note that most properties are just public readonly, which fully satisfies the interface. The exception is $summary, which has more interesting default logic, and thus uses a hook, and thus uses private(set) instead of readonly. Nothing especially new here.

Conclusion

I am overall happy with the result. I think it makes the code cleaner, more compact, and easier to extend. When adding more properties to the PageInformation interface, as I expect I will, adding that property to all the places it gets used will be less work, too.

The one complaint I have is that I do miss the double-short syntax that we removed from the hooks RFC, as it had too much pushback. Since the property hooks above are all get-only, they could have been abbreviated even further to (to use the Folder example):

public private(set) array $tags => $this->tags ??= $this->indexPage?->tags ?? [];

public private(set) string $slug => $this->slug ??= $this->indexPage?->slug ?? '';

public private(set) bool $hidden => $this->hidden ??= $this->indexPage?->hidden ?? true;

I find that perfectly readable, without the visual noise of the { get wrapped around it. If folks agree, maybe we can try to re-add it in a future version.
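For comparison, this is roughly what the syntax that did ship in PHP 8.4 looks like for one of those properties. It is a self-contained sketch: FolderSketch and IndexPageSketch are invented stand-ins, not the project's real classes.

```php
<?php
declare(strict_types=1);

// Hypothetical stand-in for the index page a folder may or may not have.
final class IndexPageSketch
{
    public function __construct(public readonly array $tags = []) {}
}

final class FolderSketch
{
    // Get-only hook: compute the value on first read, then cache it in the backing property.
    public private(set) array $tags {
        get => $this->tags ??= $this->indexPage?->tags ?? [];
    }

    public function __construct(private readonly ?IndexPageSketch $indexPage = null) {}
}

$withIndex = new FolderSketch(new IndexPageSketch(['php', 'hooks']));
$withoutIndex = new FolderSketch();
```

The double-short form would have dropped only the braces and the get keyword. The behavior would be the same.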

So there we are: Interface properties, hooks, and asymmetric visibility, all dovetailing together to make code shorter, tidier, and more flexible. Welcome to PHP 8.4!

You can see a complete diff of all the PHP 8.4 upgrades I made as well. Looks like it shaved off around 150 lines of code, too.

(Note: If you're reading this article in the future, the code this is from will almost certainly have evolved further. This represents the code at the time of this blog post.)

Tukio 2.0 released - Event Dispatcher for PHP 14 Apr 2024 11:24 AM (last year)

Tukio 2.0 released - Event Dispatcher for PHP

I've just released version 2.0 of Crell/Tukio! Available now from your favorite Packagist.org. Tukio is a feature-complete, easy to use, robust Event Dispatcher for PHP, following PSR-14. It began life as the PSR-14 reference implementation.

Tukio 2.0 is almost a rewrite, given the amount of cleanup that was done. But the final result is a library that is vastly more robust and vastly easier to use than version 1, while still producing near-instant listener lookups.

Some of the major improvements include:

- It now uses Topological sorting internally, rather than priority sorting. Both are still supported, but the internal representation has changed. The main benefits are cycle detection and support for multiple before/after rules per listener.

- The API has been greatly simplified, thanks to PHP 8 and named arguments. It's now down to essentially two methods, listener() and listenerService(), both of which should be used with named arguments for maximum effect. The old API methods are still supported, but deprecated to allow users to migrate to the new API.

- Tukio can now auto-derive more information about your listeners, making registration even easier.

- It now uses the powerful Crell/AttributeUtils library for handling attribute-based registration. That greatly simplified a lot of code while making several new features easy.

- Attributes are now supported on the class level, not just method. That makes building single-method listener services trivially easy.
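To illustrate why a topological sort gives you cycle detection and multiple before/after rules essentially for free, here is a small sketch using Kahn's algorithm. This is not Tukio's internal code; sortListeners() and its input shape are assumptions made up for this example.

```php
<?php
declare(strict_types=1);

/**
 * Orders listener IDs so that every ordering rule is respected (Kahn's algorithm).
 *
 * @param list<string> $ids
 * @param list<array{0: string, 1: string}> $edges Pairs [first, second]: "first" must run before "second".
 * @return list<string>
 * @throws RuntimeException if the rules contain a cycle.
 */
function sortListeners(array $ids, array $edges): array
{
    $incoming = array_fill_keys($ids, 0);
    $outgoing = array_fill_keys($ids, []);
    foreach ($edges as [$first, $second]) {
        $outgoing[$first][] = $second;
        $incoming[$second]++;
    }

    // Start with every listener that nothing else must precede.
    $ready = array_keys(array_filter($incoming, static fn (int $n): bool => $n === 0));
    $sorted = [];
    while ($ready !== []) {
        $id = array_shift($ready);
        $sorted[] = $id;
        // "Remove" this listener's outgoing edges; release anything now unblocked.
        foreach ($outgoing[$id] as $next) {
            if (--$incoming[$next] === 0) {
                $ready[] = $next;
            }
        }
    }

    // Anything left unsorted is part of, or stuck behind, a cycle.
    if (count($sorted) !== count($ids)) {
        throw new RuntimeException('Cycle detected in listener ordering rules.');
    }

    return $sorted;
}

// "b" must come after "a" and before "c": two rules touching one listener.
$order = sortListeners(['c', 'b', 'a'], [['a', 'b'], ['b', 'c']]);

// Contradictory rules are reported instead of silently producing some order.
$cycleDetected = false;
try {
    sortListeners(['a', 'b'], [['a', 'b'], ['b', 'a']]);
} catch (RuntimeException $e) {
    $cycleDetected = true;
}
```

A priority number can only place a listener at one point on a single scale, whereas each before/after rule here is just another edge in the graph.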

Listener classes

The last point bears extra mention. While Tukio supports numerous ways of organizing and configuring your listeners, the recommended way to register a listener is now to use this pattern, with attributes:

#[ListenerPriority(priority: 5)]

#[ListenerAfter(OtherListener::class)]

class CollectListener

{

public function __construct(public readonly Dep $someDependency) {}

public function __invoke(CollectingEvent $event): void

{

$event->add(static::class);

}

}

$provider->listenerService(CollectListener::class);

Now, ensure that CollectListener and OtherListener are both registered with your DI container using their class names. That's it, that's all, you're done. The __invoke() method will be registered as the listener method to call, while you can specify any dependencies it requires in the constructor. The DI container should auto-wire them for you. (If it doesn't, get a better DI container.) Now, any time the CollectingEvent event is fired, the CollectListener service will be loaded, given its dependencies, and then invoked.

Event Optimization: not new, but so so cool!

Tukio supports both runtime and compilable listener providers using the same API. In most cases, you'll want to use the compiled provider for better performance. However, you can get even more performance by telling Tukio ahead of time which events it should expect to see. (Odds are this can be automated by a scan of your codebase, but manually also works.) It will then build a direct lookup table in the compiled listener. The result is a constant-time simple array lookup for those events, also known as "virtually instantaneous." For example:

use Crell\Tukio\ProviderBuilder;

use Crell\Tukio\ProviderCompiler;

$builder = new ProviderBuilder();

$builder->listener('listenerA', priority: 100);

$builder->listener('listenerB', after: 'listenerA');

$builder->listener([Listen::class, 'listen']);

$builder->listenerService(MyListener::class);

$builder->addSubscriber('subscriberId', Subscriber::class);

// Here's where you specify what events you know you will have.

// Returning the listeners for these events will be near instant.

$builder->optimizeEvent(EventOne::class);

$builder->optimizeEvent(EventTwo::class);

$compiler = new ProviderCompiler();

// Write the generated provider out to a file.

$filename = 'MyCompiledProvider.php';

$out = fopen($filename, 'w');

$compiler->compileAnonymous($builder, $out);

fclose($out);
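Conceptually, the optimization amounts to something like the sketch below: a table keyed by event class that is filled in ahead of time, with a slower scan as the fallback. This is not what Tukio actually generates. LookupProviderSketch and the event classes are hypothetical, and the table is built in the constructor here rather than written into a compiled file.

```php
<?php
declare(strict_types=1);

// Hypothetical event classes, for illustration only.
final class EventOne {}
final class EventTwo {}
final class EventThree {}

final class LookupProviderSketch
{
    /** @var array<string, list<callable>> Prebuilt table for the "optimized" events. */
    private array $table = [];

    /**
     * @param list<array{0: string, 1: callable}> $listeners Pairs of [event type, listener].
     * @param list<string> $optimizedEvents Event classes we expect to see.
     */
    public function __construct(private readonly array $listeners, array $optimizedEvents)
    {
        foreach ($optimizedEvents as $class) {
            $this->table[$class] = $this->scan(
                static fn (string $type): bool => is_a($class, $type, true)
            );
        }
    }

    /** @return list<callable> */
    public function getListenersForEvent(object $event): array
    {
        // Known events are a single array lookup; everything else falls back to a scan.
        return $this->table[$event::class]
            ?? $this->scan(static fn (string $type): bool => $event instanceof $type);
    }

    /** @return list<callable> */
    private function scan(callable $matches): array
    {
        $found = [];
        foreach ($this->listeners as [$type, $listener]) {
            if ($matches($type)) {
                $found[] = $listener;
            }
        }
        return $found;
    }
}

$provider = new LookupProviderSketch(
    [
        [EventOne::class, static fn (EventOne $e): string => 'one'],
        [EventTwo::class, static fn (EventTwo $e): string => 'two'],
    ],
    [EventOne::class],
);

$forOne = $provider->getListenersForEvent(new EventOne());
$forTwo = $provider->getListenersForEvent(new EventTwo());
$forThree = $provider->getListenersForEvent(new EventThree());
```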

Backward compatibility

Tukio v2 should be 99% a drop-in replacement for Tukio v1. I deliberately tried to keep the old API intact for now to make upgrading easier, though it is marked @deprecated to encourage developers to migrate to the more robust new API methods. If something isn't a clean drop-in, let me know on GitHub and I'll see if it's resolvable.

There is one potential BC challenge: In Tukio v1, if two listeners were specified without any ordering information, they would almost always end up triggering in the order in which they were added. That was not guaranteed, however, and the documentation warned against relying on it. Tukio v2 uses a new, topological sort-based sorting algorithm that is considerably more robust; however, the predictability of "lexically first, fires first" is no longer there. The order in which un-ordered listeners will trigger is unpredictable, though it should be stable. If you find you were inadvertently relying on the implicit ordering before, the fix is to add before/after rules to your listeners to make the intended ordering explicit.

Give it a try in your project today!

Tangled Threads 26 Dec 2023 1:19 PM (2 years ago)

Tangled Threads

Erin Kissane recently posted an excellent writeup about the coming integration of Threads into the Mastodon/ActivityPub/Fediverse world. It is recommended reading for anyone who cares even slightly about the state of the Internet, and especially any server admins and moderators on Mastodon et al. I agree with most of it, although there is one important point on which I think we differ slightly. I want to expand on that here.

Before I continue, full disclosure: I am a moderator (but not admin) on phpc.social, the Mastodon server for anyone even tangentially related to or interested in the PHP programming language or its community. However, this post is my own and has not been endorsed by the other moderators (though I know from conversation at least some of them agree with the general gist). This is not a policy statement from phpc.social, but a recommendation from me.

The problem

Erin's points, if I may summarize for the purposes of this response, largely boil down to:

- Meta is a fundamentally and irredeemably immoral company.

- Meta happily hosts irredeemably immoral and evil people, as long as it makes them profit.

- Just allowing Threads users to connect to Mastodon users helps provide Meta with value, if only in telemetry on who talks to whom (which they can then sell to advertisers).

- The current tools for dealing with Threads are not great.

- There are no easy answers.

As Erin notes, some server admins have taken to proactively blocking Threads from their servers. Individual users can also block a domain, but it only sometimes works. Some think it's a great thing for the Fediverse to have the 800 lb gorilla of social media connecting to the Fediverse, others are afraid of being sat on.

I fully agree with her about the threat that Meta poses, both to the Fediverse and to the world at large. However, I do not agree that hard-blocking Threads is the way to go. In fact, that would be the worst possible option.

The state of play

Let's start with a few observations.

As of December 2023, Threads has 141 million users. As of August, it had 10.3 million daily active users.

As of December 2023, Mastodon has around 8 million users, of which around 1.7 million are active daily.

No matter how you slice it, Threads already dwarfs Mastodon by a massive degree. Mastodon is a small fry, and the increased network effect potential of connecting Threads and Mastodon... helps Mastodon more than Threads. (It can help Threads in more nefarious ways through telemetry, but that's a different matter.)

Defederating Threads is effectively a boycott. How effective are boycotts? Maybe 1% of the time? They are most effective when they threaten reputation, not revenue. Well, Meta has no reputation to begin with, and is too big to avoid. Boycotting Meta is guaranteed to accomplish... exactly nothing. (I say this as someone who does not and has never had a Facebook, Instagram, or WhatsApp account. Yes, I've been boycotting them. You see how much that has accomplished.)

What do we do?

So does that mean we totally should welcome Threads in? Not quite. Due to Meta's laughably pathetic stance on content moderation, a substantial portion of those 10.3 million active users are openly racist, transphobic, sexist fascists. Giving them access to 1.7 million new targets (who for historical reasons tend to skew toward all the groups the fascists love to attack) is... not great.

However, that also means giving our 1.7 million users access to 10 million new potential followers/friends/audience members. That's not nothing, and especially for indie artists that rely on social media to promote themselves, that's "willing to stay on Mastodon at all" levels of massive.

Fortunately, it doesn't have to be an either/or. This is what is unique and special about the Fedi/Mastoverse: Individual server mods can aggressively police not just their own users, not just other servers, but users from other servers.