My Fitness: from spreadsheet to an app 3 Oct 2025 4:00 PM (3 months ago)


My fitness journey, as is the case with many teenagers, began with bodybuilding. I wanted to look good. Soon after, I found StrongLifts 5x5 and got into powerlifting. It became all about numbers: getting a bigger bench, a bigger squat, a bigger press. Yet I’ve always been drawn to the notion of Total Athleticism, as coined by Max Shank in one of the articles on T-Nation that I used to read religiously around 2015.¹ Having a 300 bench was cool but I didn’t want to be one of those powerlifters who had massive numbers yet couldn’t run up the stairs. I also wanted to be good at running, calisthenics, and kettlebells. Eventually this brought me to “functional fitness” and, of course, CrossFit, which popularized it circa 2000.

CrossFit standards

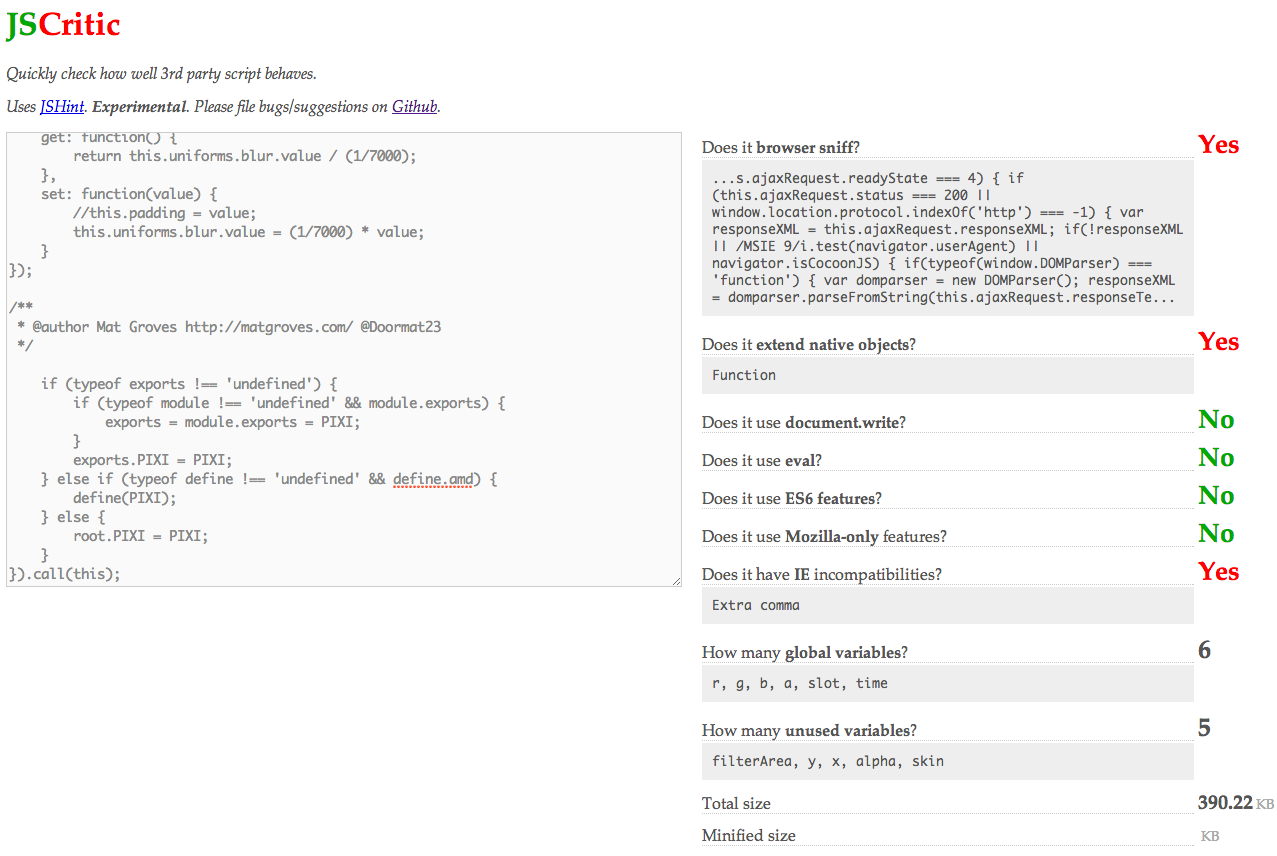

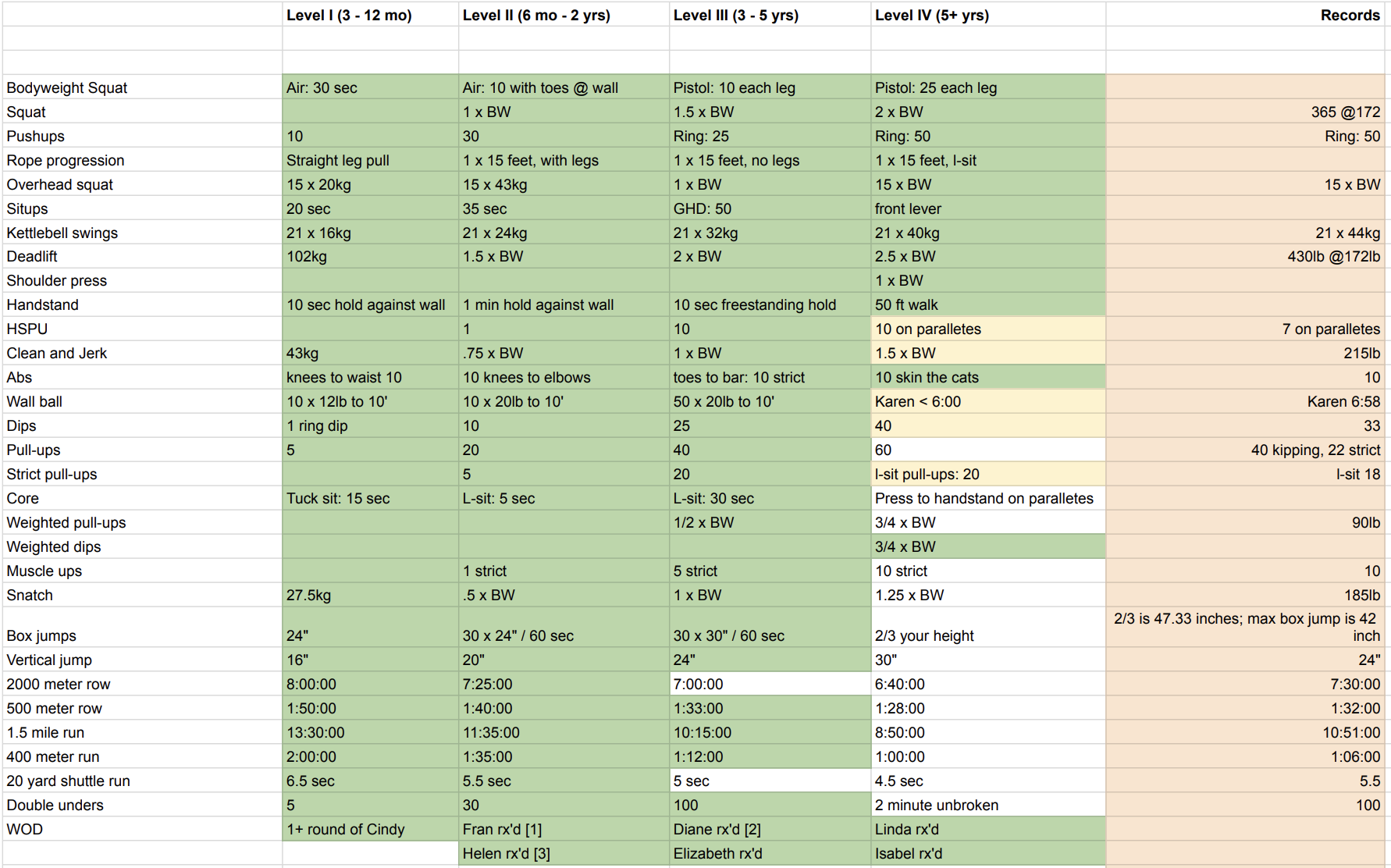

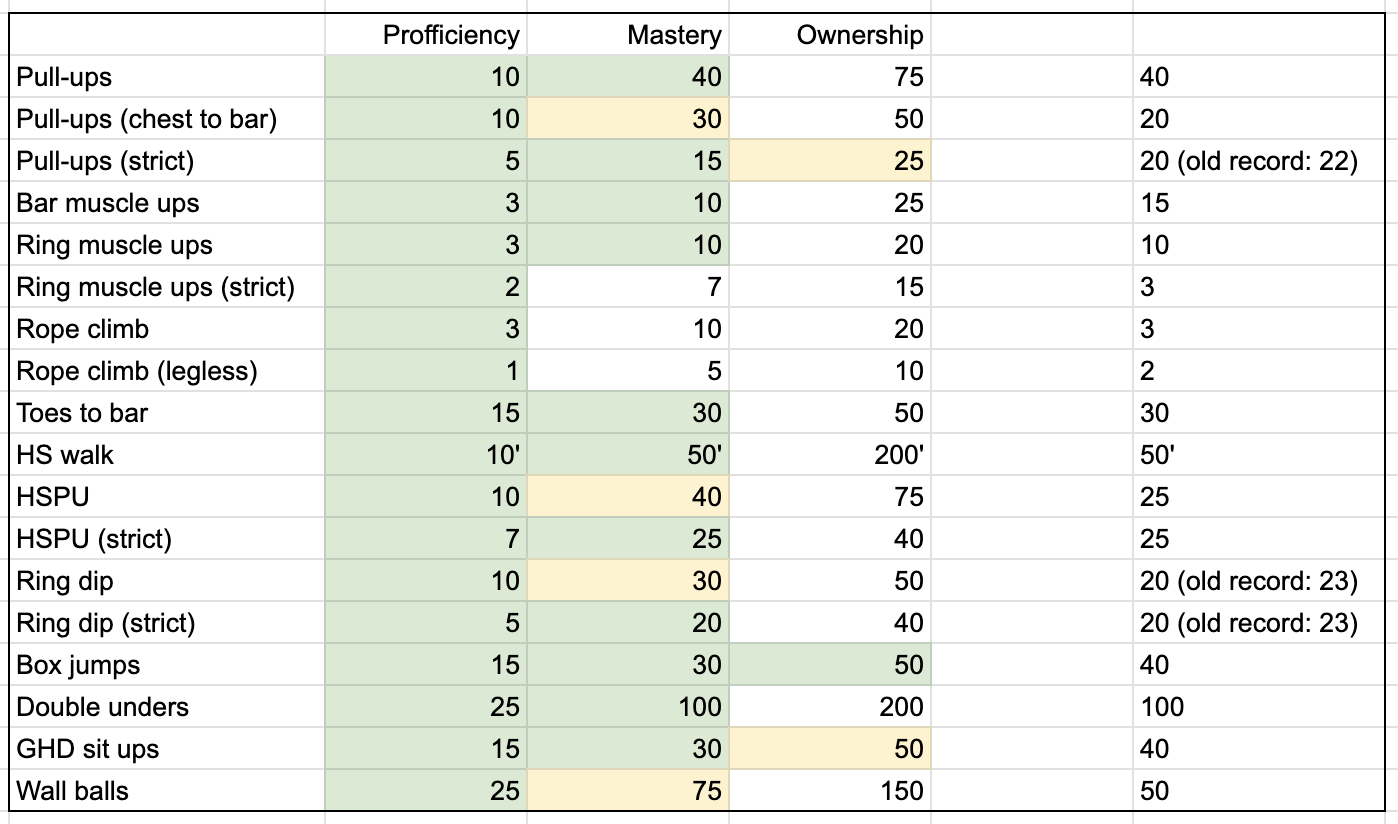

In my fitness circles, CrossFit was still criticized for its reckless high-skill Olympic movements performed at high intensity. Blame the epic fail videos of someone doing something stupid and the ignorance around the methodology. While I was on the fence about doing actual CrossFit, I loved the “variable movements” concept. I found a couple of “CrossFit athlete standards” posters online and made this spreadsheet to track my progress across multiple domains. It immediately exposed all my gaps: I could squat 2x bodyweight but my snatch was at a measly 100lb and all the speed and work capacity tests were barely at level 2:

If I wanted to be an all-around developed athlete, these were the things I had to work on. The standards also served as an “objective” benchmark. To consider yourself “advanced”, here’s how many pull-ups you had to be able to do, and this is how fast your 1 mile run would have to be. It gave me a concrete goal to work towards. These spreadsheets became my north star for the following few years. For a challenge junkie like me, they were a perfect long-term obsession.

Strength and Skill

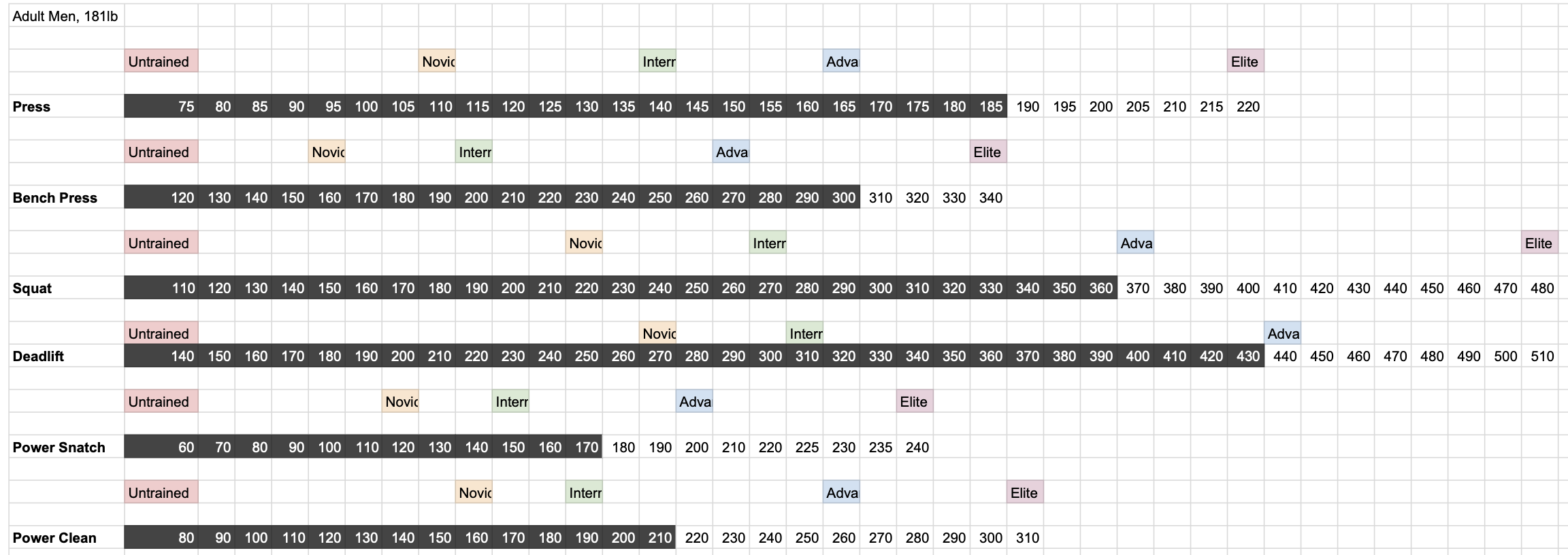

The spreadsheet overload was real. This wasn’t the first one I used. As far back as 2011, I found exrx.net Strength Standards and created this view to understand where I stand strength-wise and what to work on:

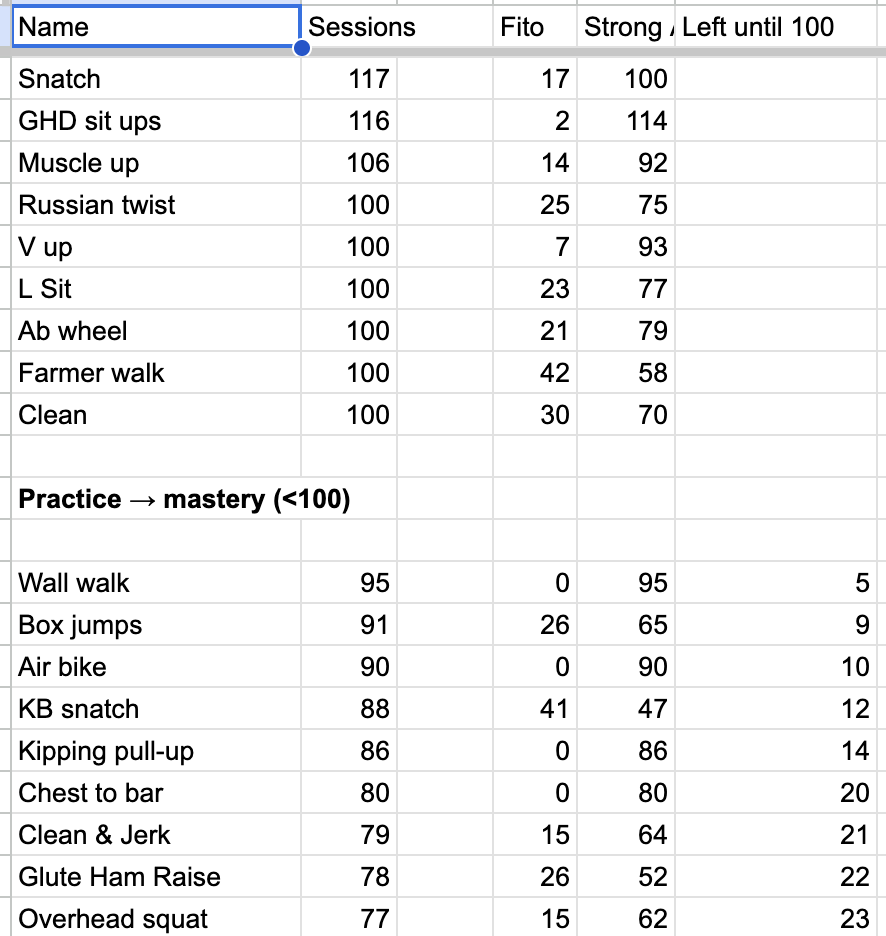

In the last couple of years I started tracking the frequency and total lifetime session count of certain movements I wanted to get better at, a concept I wrote about before:

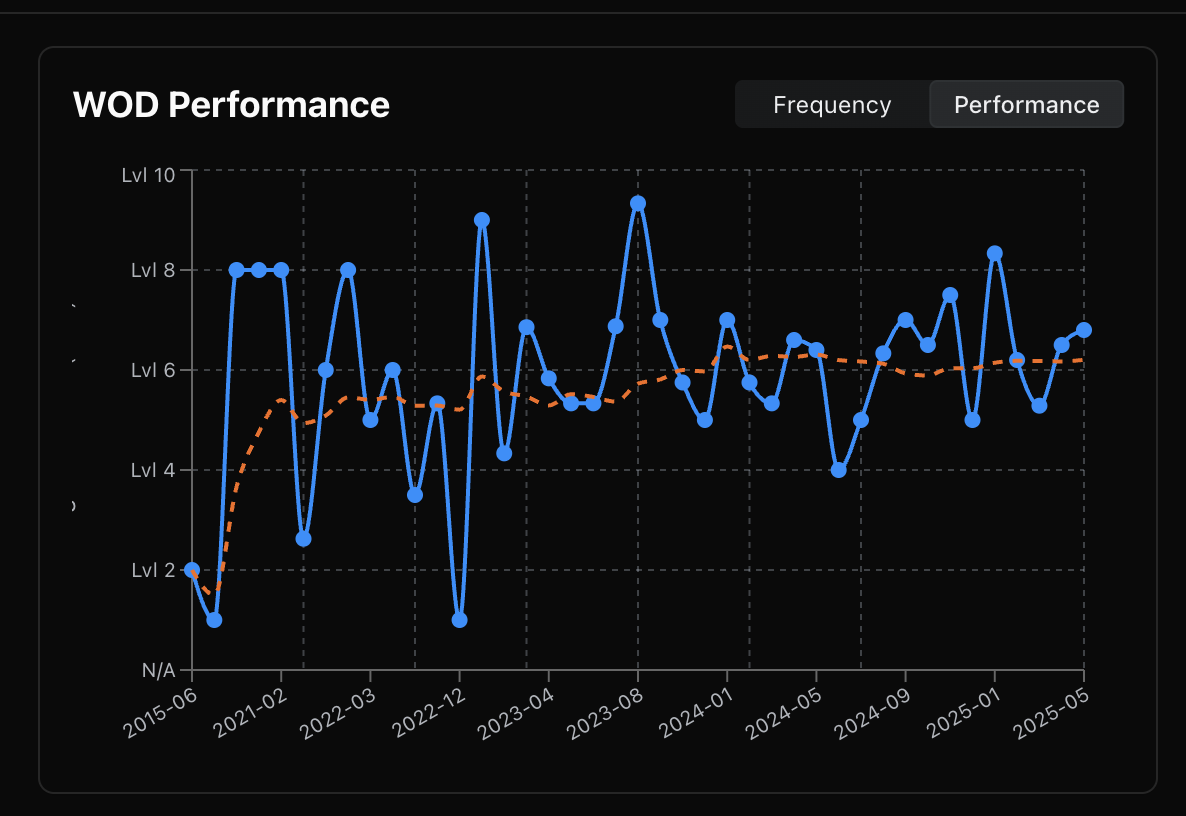

Finally, I tracked my proficiency levels on various CrossFit-specific movements as a way to advance my skill and become fluent in them during WODs.

One app to rule them all

The year was 2025.

I was a software engineer.

And I was still manually updating a spreadsheet with the number of times I had performed each movement that I deemed as “needing practice”.

This was embarrassing.

When I embarked on building PRzilla, I realized that perhaps this was the time to ditch manual spreadsheet tracking. I could now build an app that would have all of this baked in:

-

show your fitness level across multiple movement patterns/domains (strength, endurance, gymnastics, work capacity, etc.)

-

show your raw strength benchmarks (squat, deadlift, snatch, push press, etc.)

-

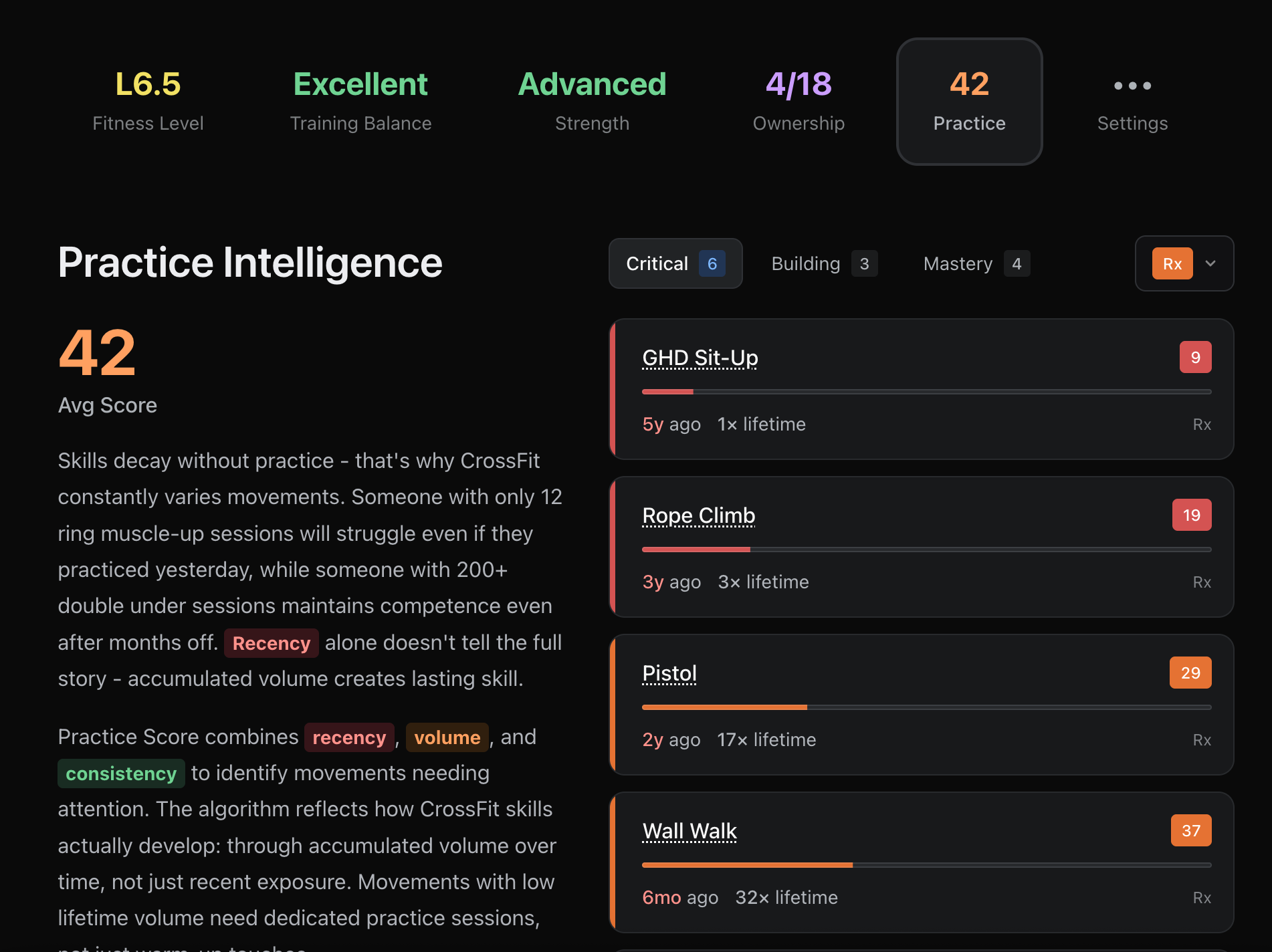

show your skill proficiency as a “lifetime sessions performed”

-

if you’ve done ring muscle-ups only 20 times in your life, you’re unlikely to be better at them vs. someone who has done them 120 times

-

-

show your skill ownership as a “max consecutive reps able to do“

-

being able to do 50 consecutive kipping pull-ups means you own them; this movement is unlikely to be your limiting factor in any WOD that has them

-

Whoop, Apple Fitness, and the rise of quantified fitness

I use Whoop and I absolutely love how it’s able to distill complex/boring HRV/RHR metrics into simple, quantified scores like recovery and strain. Whoop and Apple Fitness—that’s just as big on quantifiable fitness—were a big motivation for this app.

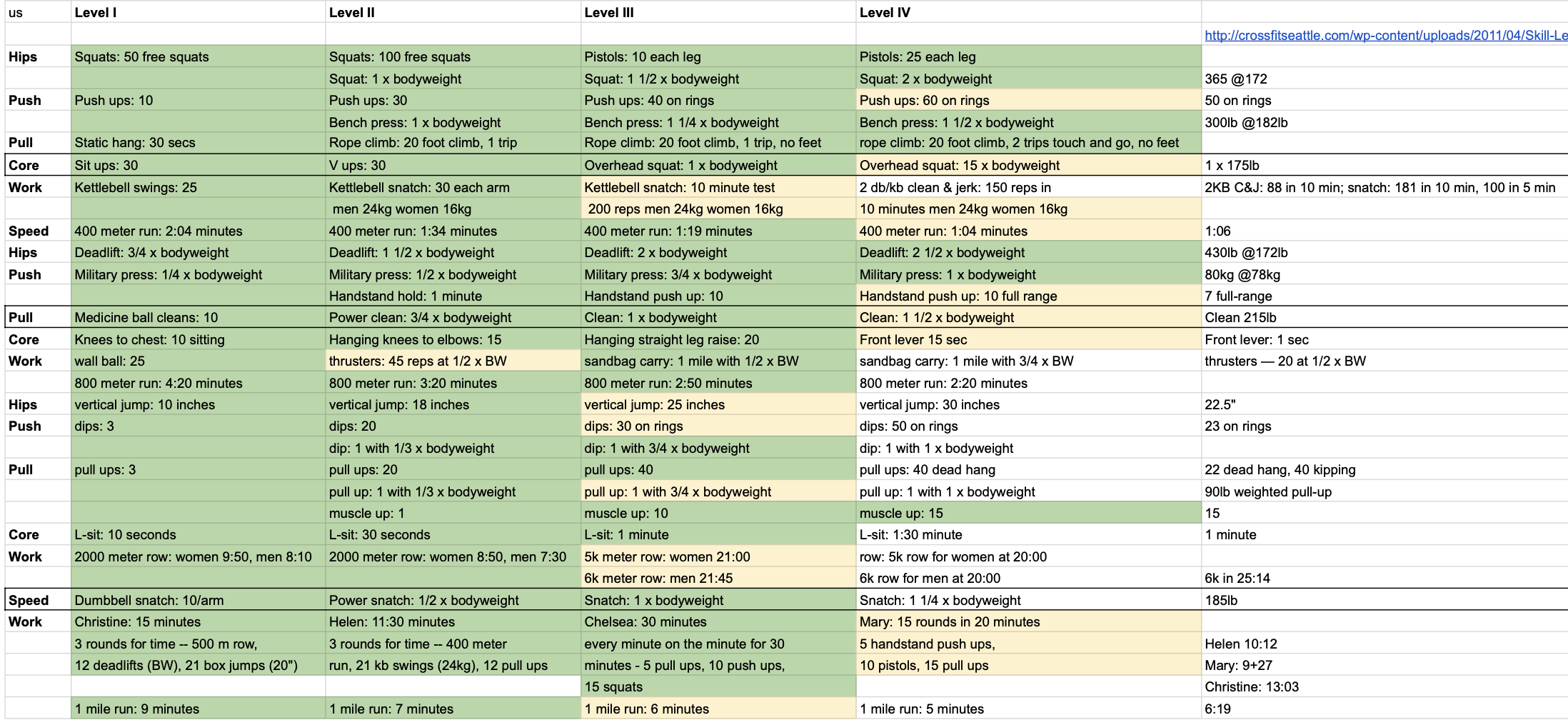

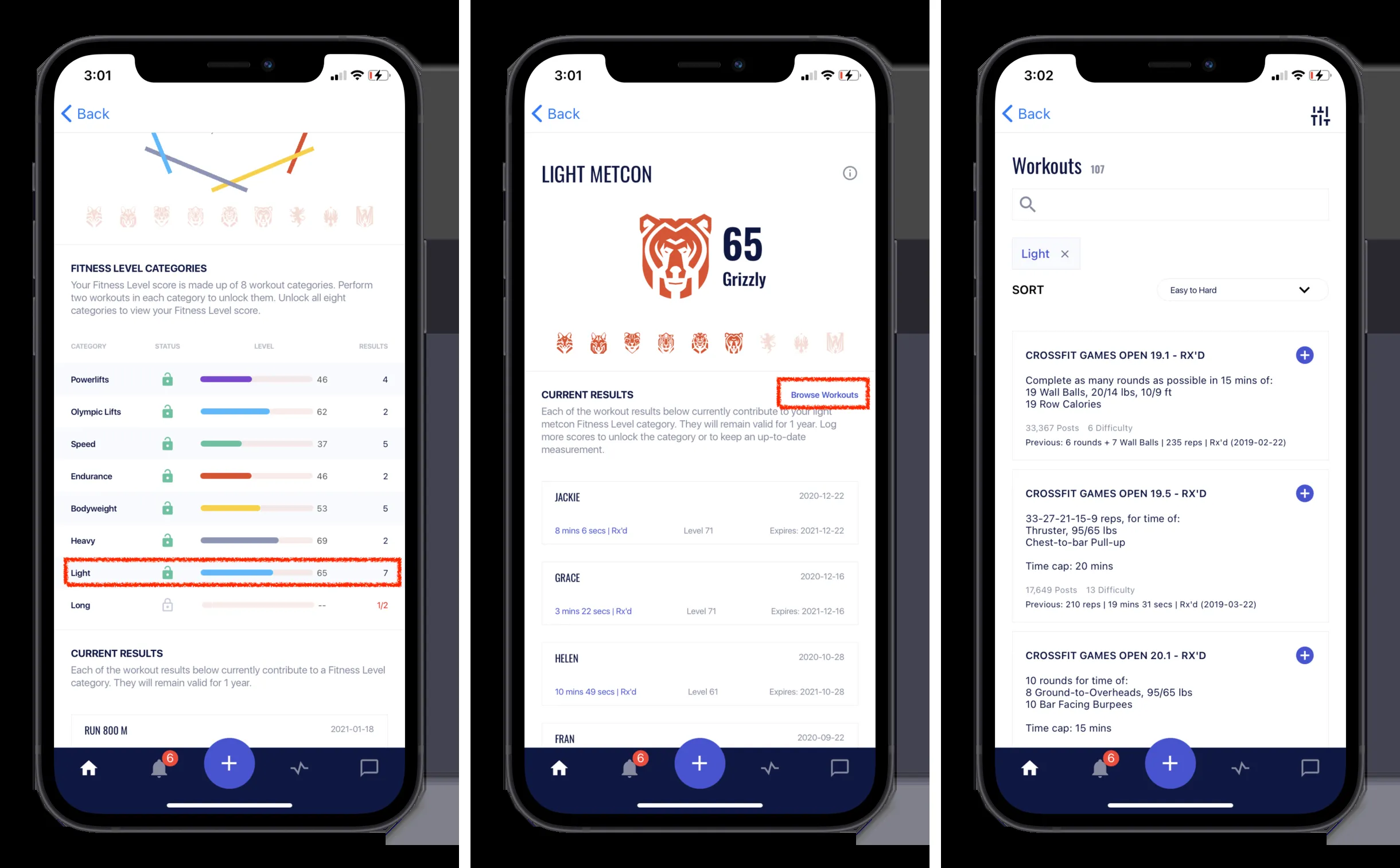

On the other hand, there are apps like BTWB, one of the most extensive CrossFit-style workout tracking tools, but I found its UI unintuitive and its UX clunky:

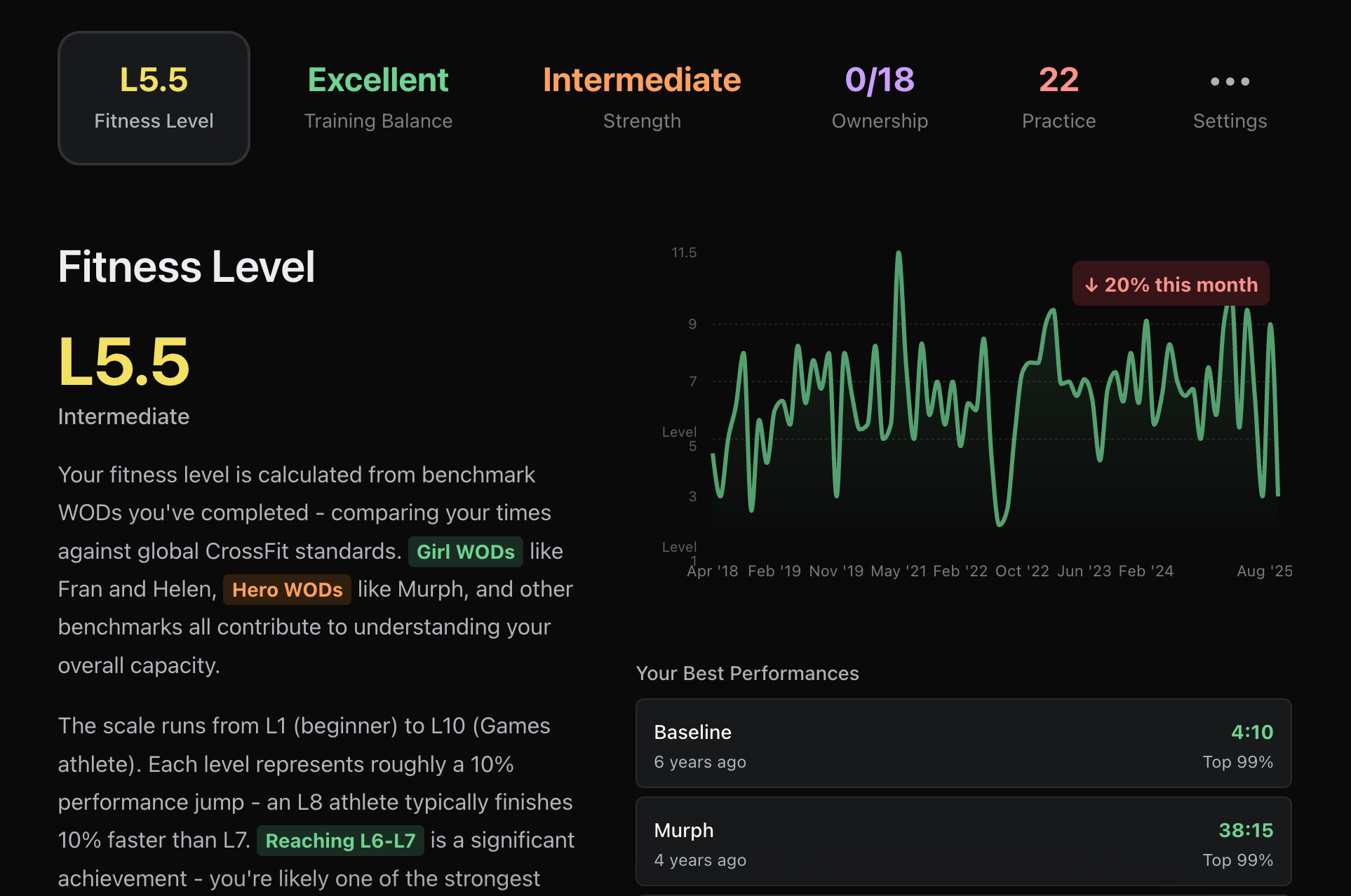

A snapshot of your fitness

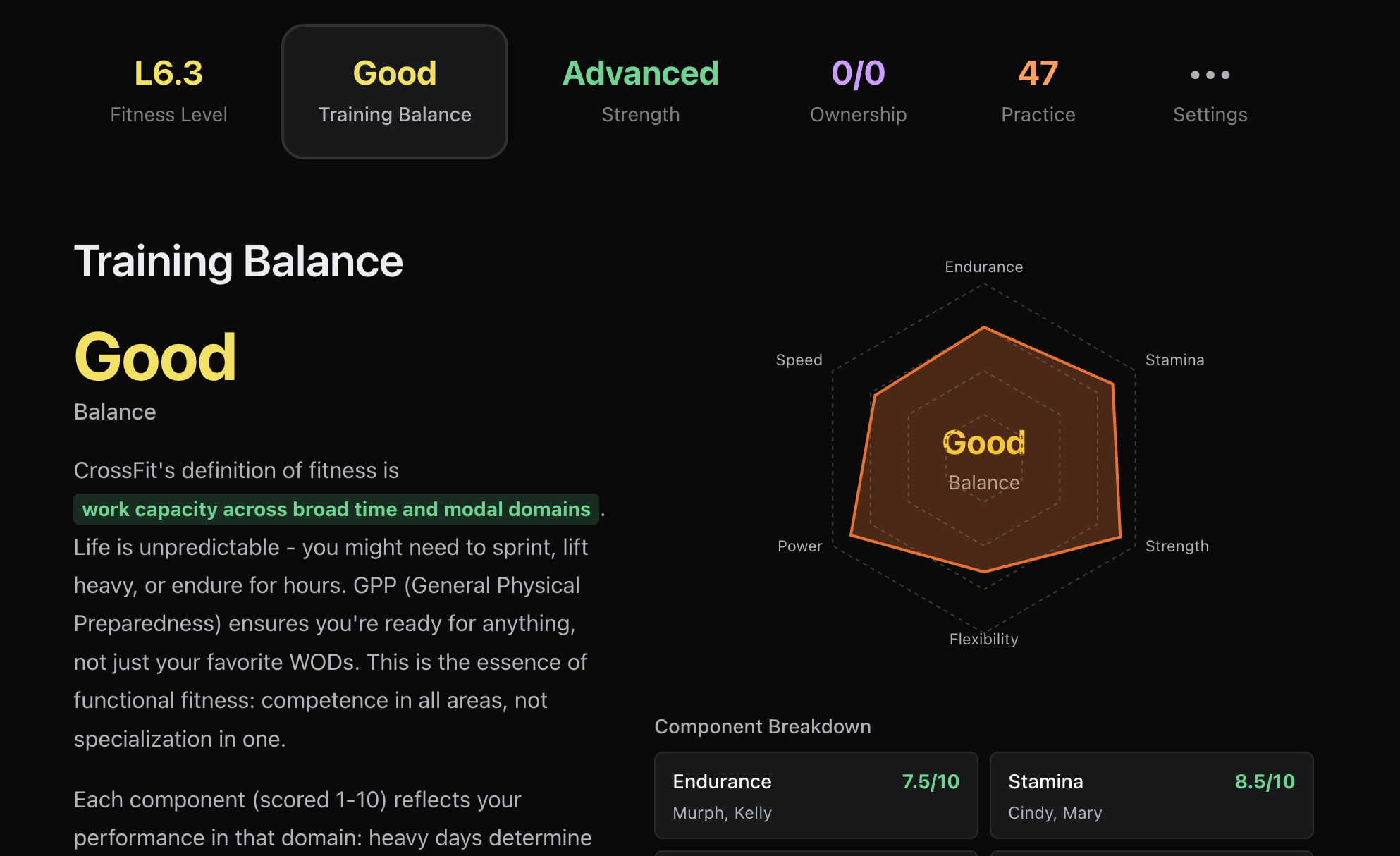

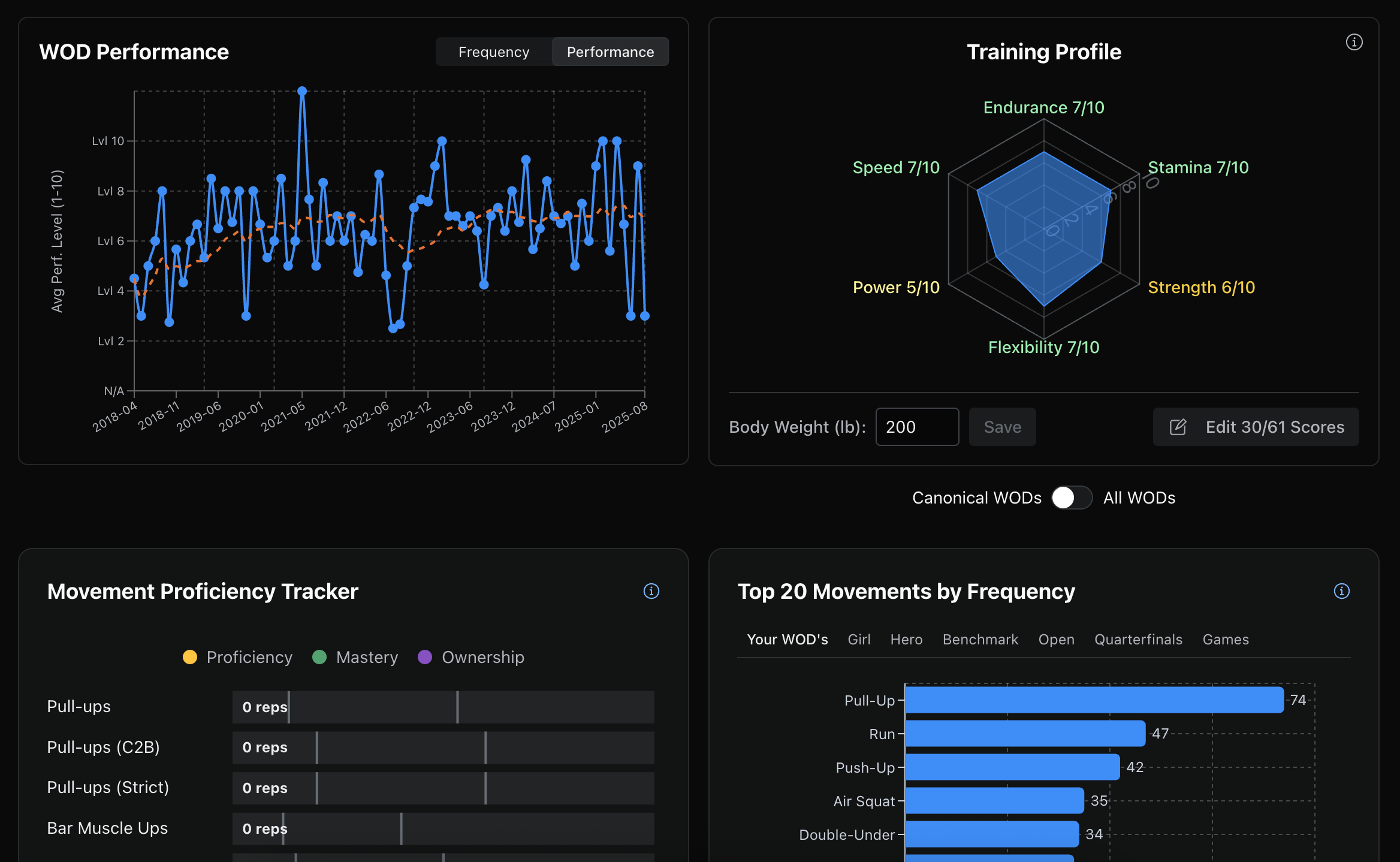

And so I turned all my spreadsheets into a simple snapshot consisting of 5 views: your level, balance, strength, ownership, and practice. These could be easily extended in the future with any other modules: time domain distribution, specific goals tracking like work capacity or endurance. Or even sport-specific ones like Hyrox.

SugarWOD parser

In order to turn all my workout data into beautiful charts, I needed to… have that data in the first place. One issue was that it was split between:

- SugarWOD: scores of WODs prescribed by my box that I did
- wodwell.com: common WODs that I did on my own
- Strong app: traditional strength training workouts that are not WODs (aka sets and reps)

Importing wodwell scores was easy since it was just a map from common WODs (Fran, Murph, etc.) to scores. The Strong app export would be a lot more involved since I would have to implement sets and reps tracking (as well as workout sessions, potential rest values, and so on). So I decided to focus on the SugarWOD import. And this is where the fun began.

Good news: SugarWOD allows easy export of all of your workout history.

Bad news: SugarWOD data is very… unstructured.


Here’s an example of CSV:

09/14/2022,WOD,24 Minute AMRAP:Row 240m12 Lateral Burpees over Back of Rower48 Double Unders24 Alternating Front Foot Elevated Reverse Lunges (53/35)*,3.073,3+73,Rounds + Reps,,"[{""rnds"":3,""reps"":73}]",,SCALED,

As you can see, we have an arbitrary, potentially non-descriptive WOD title like “WOD” plus a garbled, plain-text description like “24 Minute AMRAP:Row 240m12 Lateral Burpees over Back of Rower48 Double Unders24 Alternating Front Foot Elevated Reverse Lunges (53/35)*” that’s missing basic formatting / newlines.
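Before the description can go anywhere near an LLM, the row itself has to be split into fields, and the sets column is JSON wrapped in CSV quoting with doubled quotes. Here is a minimal sketch of that first step. The column positions are read off the sample row above, and the field names (`scoreRaw`, `scaling`, and so on) are hypothetical since the export's header row isn't shown here.

```typescript
// Field names are assumptions for illustration; only the column order
// comes from the sample row in this post.
interface SugarWodRow {
  date: string;
  title: string;
  description: string;
  scoreRaw: string;
  scoreDisplay: string;
  scoreType: string;
  sets: unknown[];
  scaling: string;
}

// Splits one CSV line, honoring double-quoted fields and "" escapes.
function splitCsvRow(row: string): string[] {
  const fields: string[] = [];
  let current = "";
  let inQuotes = false;
  for (let i = 0; i < row.length; i++) {
    const ch = row.charAt(i);
    if (inQuotes) {
      if (ch === '"' && row.charAt(i + 1) === '"') {
        current += '"'; // escaped quote inside a quoted field
        i++;
      } else if (ch === '"') {
        inQuotes = false; // closing quote
      } else {
        current += ch;
      }
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ",") {
      fields.push(current);
      current = "";
    } else {
      current += ch;
    }
  }
  fields.push(current);
  return fields;
}

function parseSugarWodRow(row: string): SugarWodRow {
  const f = splitCsvRow(row);
  const setsJson = f[7] ?? "";
  return {
    date: f[0] ?? "",
    title: f[1] ?? "",
    description: f[2] ?? "",
    scoreRaw: f[3] ?? "",
    scoreDisplay: f[4] ?? "",
    scoreType: f[5] ?? "",
    sets: setsJson ? (JSON.parse(setsJson) as unknown[]) : [],
    scaling: f[9] ?? "",
  };
}
```

This only recovers the columns. The jammed-up `description` string still comes out as one line, which is the part that needs reasoning rather than string splitting.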

As humans, we’re able to quickly parse this into:

24 Minute AMRAP:

Row 240m

12 Lateral Burpees over Back of Rower

48 Double Unders

24 Alternating Front Foot Elevated Reverse Lunges (53/35)*

Thank god we live in the age of LLMs, which are capable of reasoning through messy, jammed-up text like this just like we humans do.

Another peculiarity was load-based entries. In SugarWOD you can program them in a workout and specify sets and reps, e.g. 5 sets of 3 snatches. Users can then log a value for each of the 5 sets. In order to present your performance on the leaderboard, SugarWOD allows coaches to specify how to score those sets: max value (who got the highest weight)? lowest value (who got the fastest row time)? sum of all values (who did the most work overall)? and so on. The export doesn’t expose this scoring criterion, so the parser needs to infer it from the sets data. In the example below, we can see that the score is the SUM of 12 sets, so 695 is not the weight the user lifted as a 1RM squat snatch; the real squat snatch values are in the sets field:

05/31/2024,WOD,12 ROUNDS:30 Second CAP:3 Toes to Bar2 Lateral Barbell Burpees1 Squat Snatch*REST 1 Minute.*Increase weight as able.,695,695,Load,,"[{""success"":true,""load"":55},{""success"":true,""load"":55},{""success"":true,""load"":55},{""success"":true,""load"":55},{""success"":true,""load"":55},{""success"":true,""load"":55},{""success"":true,""load"":60},{""success"":true,""load"":60},{""success"":true,""load"":60},{""success"":true,""load"":60},{""success"":true,""load"":60},{""success"":true,""load"":65}]",,RX,

In this case it’s “obvious” that 695 wasn’t a 1RM snatch (current world record is 496lbs) but some cases are much less obvious so the parser needs to be very careful there.
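One way to make that inference explicit is to recompute each candidate scoring rule from the logged sets and see which ones reproduce the exported score. The sketch below is an illustration, not the actual PRzilla parser: the function names are hypothetical, and counting only sets marked `success: true` is an assumption.

```typescript
type ScoringRule = "max" | "min" | "sum";

interface LoggedSet {
  success: boolean;
  load: number;
}

// Returns every rule whose result matches the exported score. More than one
// match (e.g. a single logged set) means the export alone cannot disambiguate.
function inferScoringRules(
  score: number,
  sets: LoggedSet[],
  tolerance = 0.01,
): ScoringRule[] {
  // Assumption: failed sets do not count towards the score.
  const loads = sets.filter((s) => s.success).map((s) => s.load);
  if (loads.length === 0) return [];
  const candidates: Record<ScoringRule, number> = {
    max: Math.max(...loads),
    min: Math.min(...loads),
    sum: loads.reduce((total, load) => total + load, 0),
  };
  return (Object.keys(candidates) as ScoringRule[]).filter(
    (rule) => Math.abs(candidates[rule] - score) <= tolerance,
  );
}

// The heaviest successful set is what should feed strength benchmarks,
// regardless of how the leaderboard score was computed.
function heaviestLoad(sets: LoggedSet[]): number | undefined {
  const loads = sets.filter((s) => s.success).map((s) => s.load);
  return loads.length > 0 ? Math.max(...loads) : undefined;
}
```

For the row above, the twelve loads add up to 695, so only the sum rule matches and the heaviest actual snatch is 65. When several rules match, the choice has to come from other context such as the workout description.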

LLM-powered pipeline

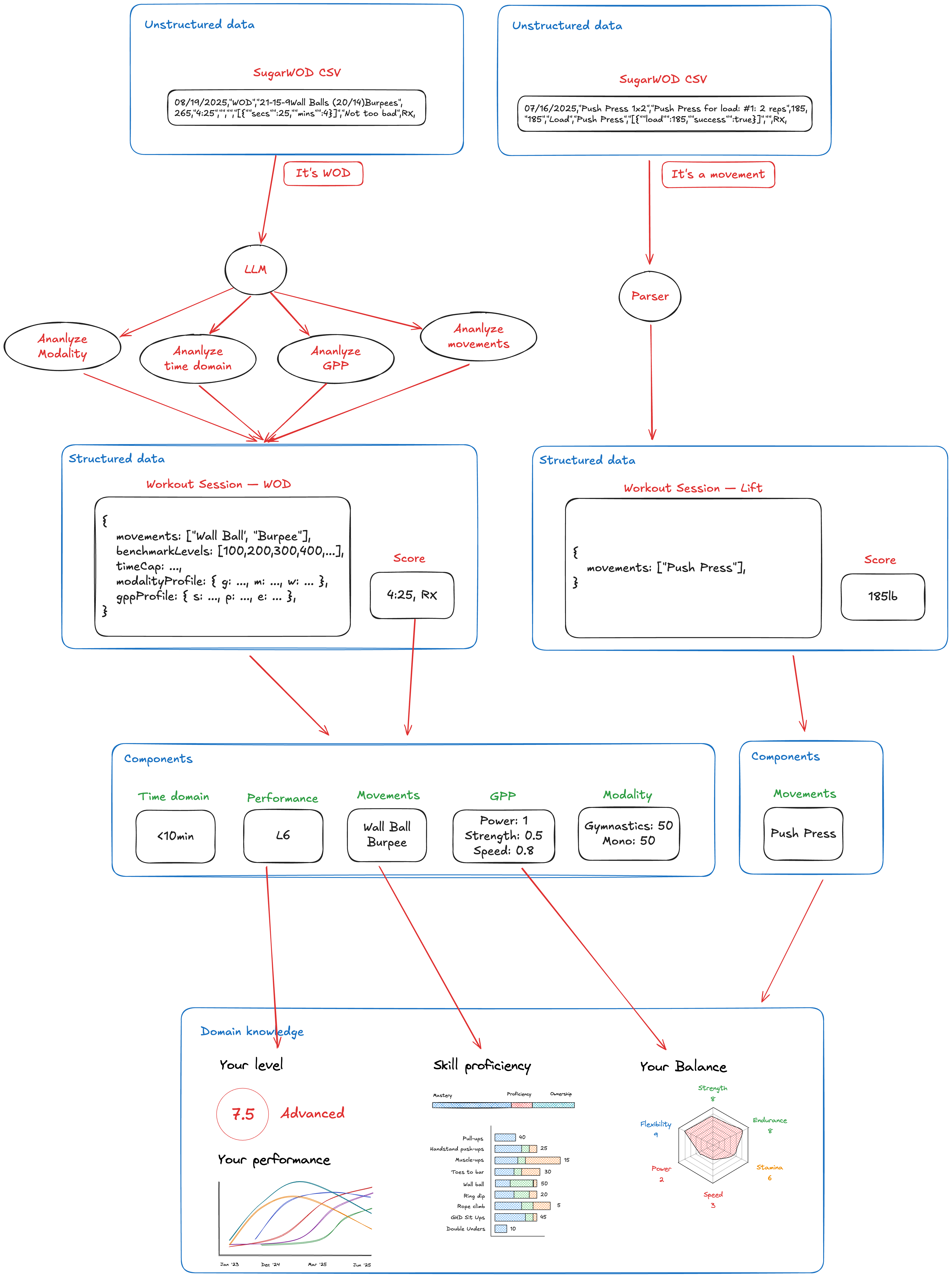

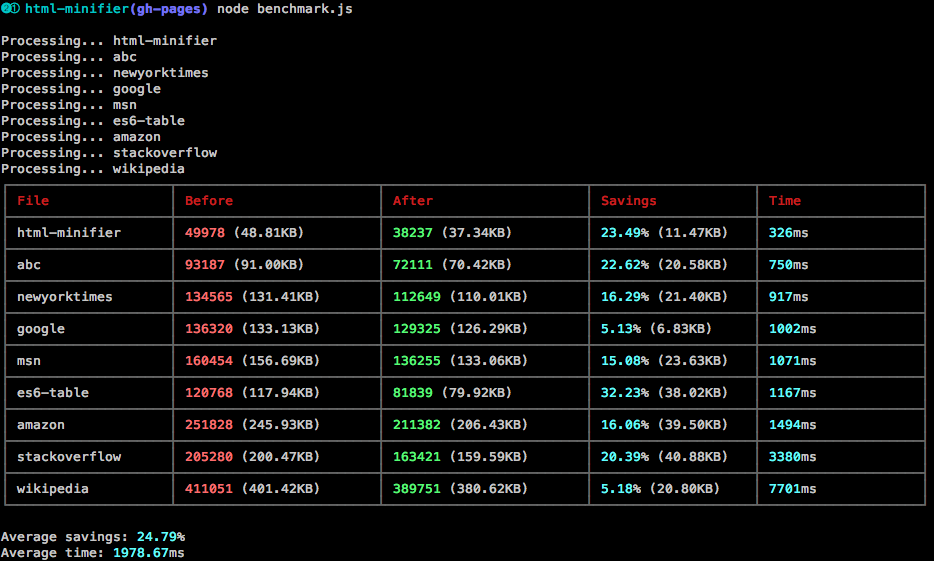

And so after many weeks of experimenting, refining, rewriting, and adjusting based on real data (thanks to amazing volunteers in my gym), I now have a pretty smart and capable pipeline that turns unstructured SugarWOD data into structured PRzilla data:

One of the biggest findings, and one of the things that slowed me down, was realizing that the giant, monolithic LLM prompt I was using to generate a giant JSON with a dozen different fields (gpp, modality, difficulty, benchmarks, classification, etc.) was taking way too much time, was way too expensive, and often produced errors as it tried to do too many things at once.

Parallelization for the win

I then switched to a series of small, targeted LLM parsers/prompts—as seen on the diagram above—for each of the metrics and ran them in parallel. The results were astonishing: faster and cheaper execution and much more accurate results. This also gave me flexibility to run only specific parsers in specific cases; e.g. when parsing your historic data we want to extract movements (to feed it into our proficiency metrics), performance levels (to understand how your fitness level progressed), time domain (to see a time domain breakdown), and so on. We don’t care about coaching/scaling/stimulus module since the workouts are in the past and users don’t need to know that! However, those modules are important for new workouts, when using analyze or generate.

Finally, it allowed me to run these parsers in parallel which meant that a WOD analysis was now taking time_of_slowest_module (usually ~12-15sec) rather than sequential SUM(module1, module2, …) that would usually take up to 40 sec!
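The shape of that orchestration can be sketched in a few lines. The parser names below come from the modules mentioned above; the `Mode` type, the function names, and the idea that each parser is just an async function are assumptions made for illustration.

```typescript
type ParserName =
  | "movements"
  | "performanceLevels"
  | "timeDomain"
  | "coaching"
  | "scaling"
  | "stimulus";

type Mode = "historicImport" | "analyze";

// Each parser wraps one small, targeted LLM prompt (hypothetical signature).
type Parser = (workoutText: string) => Promise<unknown>;

// Historic imports skip the coaching-oriented modules; new workouts run all.
function selectParsers(mode: Mode): ParserName[] {
  const core: ParserName[] = ["movements", "performanceLevels", "timeDomain"];
  return mode === "historicImport"
    ? core
    : [...core, "coaching", "scaling", "stimulus"];
}

// Starts all selected parsers at once, so the wall time is roughly that of
// the slowest one rather than the sum of all of them.
async function runParsers(
  workoutText: string,
  mode: Mode,
  parsers: Record<ParserName, Parser>,
): Promise<Partial<Record<ParserName, unknown>>> {
  const names = selectParsers(mode);
  const results = await Promise.all(
    names.map((name) => parsers[name](workoutText)),
  );
  const output: Partial<Record<ParserName, unknown>> = {};
  names.forEach((name, index) => {
    output[name] = results[index];
  });
  return output;
}
```

Note that `Promise.all` rejects as soon as any single parser fails, so a real pipeline would likely want per-parser error handling or retries around each call.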

Unstructured to structured

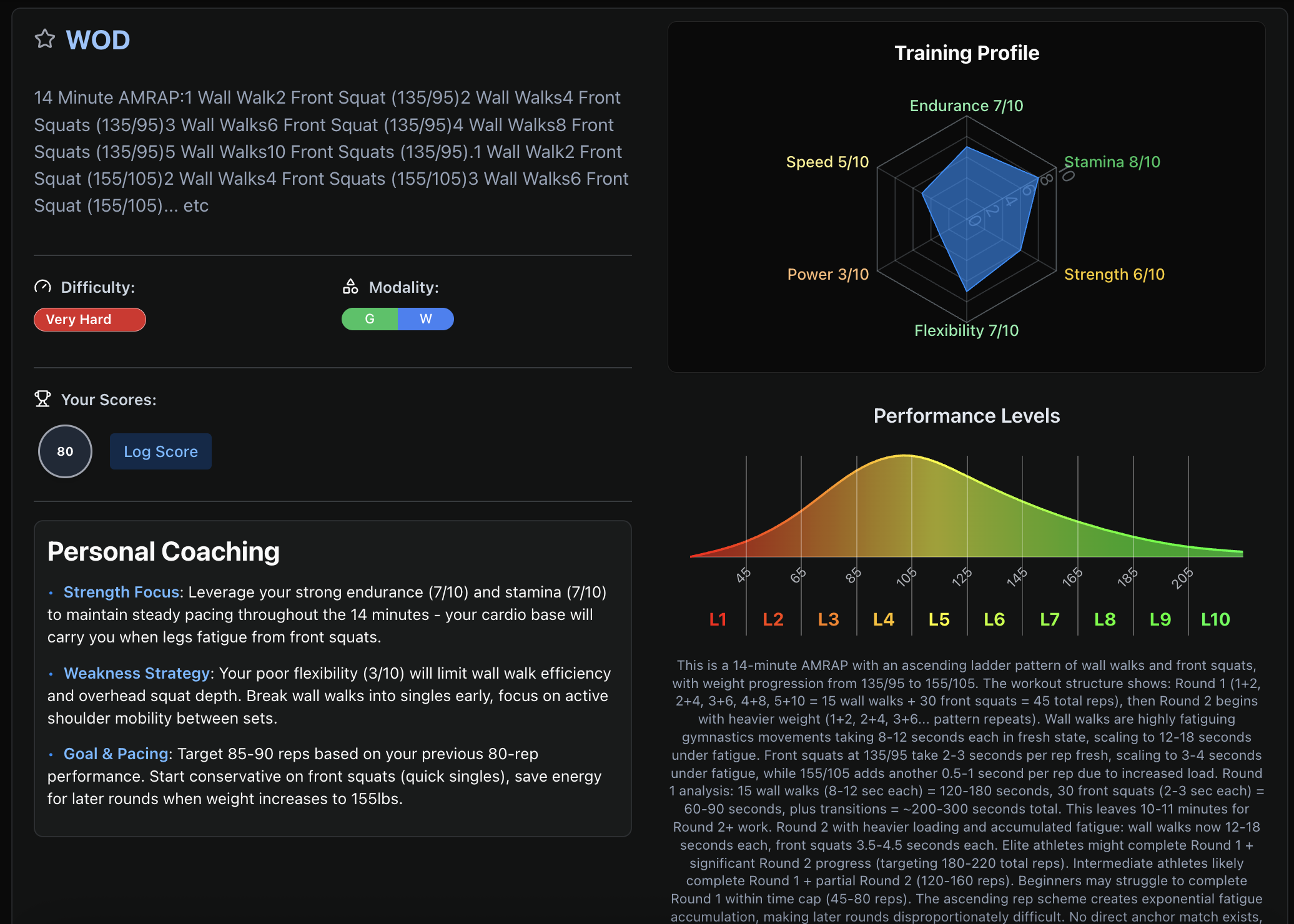

The end result is incredible. We’re able to turn a textual mess like this into an actual workout and your actual performance. Here we see that a 14min AMRAP was properly parsed into movements like “Wall Walk” and “Front Squat”; that it’s an endurance- and stamina-heavy workout (yep!); that it’s classified as “Very Hard”; and that its modality is split equally between “Gymnastics” and “Weightlifting”. Moreover, AI determined that the user’s score of 80 falls right around L3 (which we would likely adjust to L3.5 or L4 due to the workout’s difficulty):

Parsing “Wall Walk” as a movement is what allows us to count that towards your practice score! Notice that we now know that you’ve done “Wall Walk” 32 times in your life with the recent one being 6 months ago.
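Once every workout carries a list of parsed movements, the practice numbers are a simple aggregation. A rough sketch, assuming each parsed workout has an ISO date string and an array of movement names (both the `ParsedWorkout` shape and the function name are hypothetical):

```typescript
interface ParsedWorkout {
  date: string; // ISO date such as "2024-05-31", so string comparison sorts correctly
  movements: string[];
}

interface PracticeStat {
  sessions: number;
  lastPerformed: string;
}

function buildPracticeStats(
  workouts: ParsedWorkout[],
): Map<string, PracticeStat> {
  const stats = new Map<string, PracticeStat>();
  for (const workout of workouts) {
    // Count a movement once per session even if the parser listed it twice.
    const unique = workout.movements.filter(
      (movement, index, all) => all.indexOf(movement) === index,
    );
    for (const movement of unique) {
      const previous = stats.get(movement);
      stats.set(movement, {
        sessions: (previous ? previous.sessions : 0) + 1,
        lastPerformed:
          previous && previous.lastPerformed > workout.date
            ? previous.lastPerformed
            : workout.date,
      });
    }
  }
  return stats;
}
```

The quality of these counts depends entirely on the parser mapping free-text names to one canonical movement, which is why unmatched movements are worth logging during import.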

Now that we have this structured data, the possibilities—all of a sudden—are endless. We can easily, and more importantly, automatically show your strength levels: powerlifting, weightlifting, crossfit total:

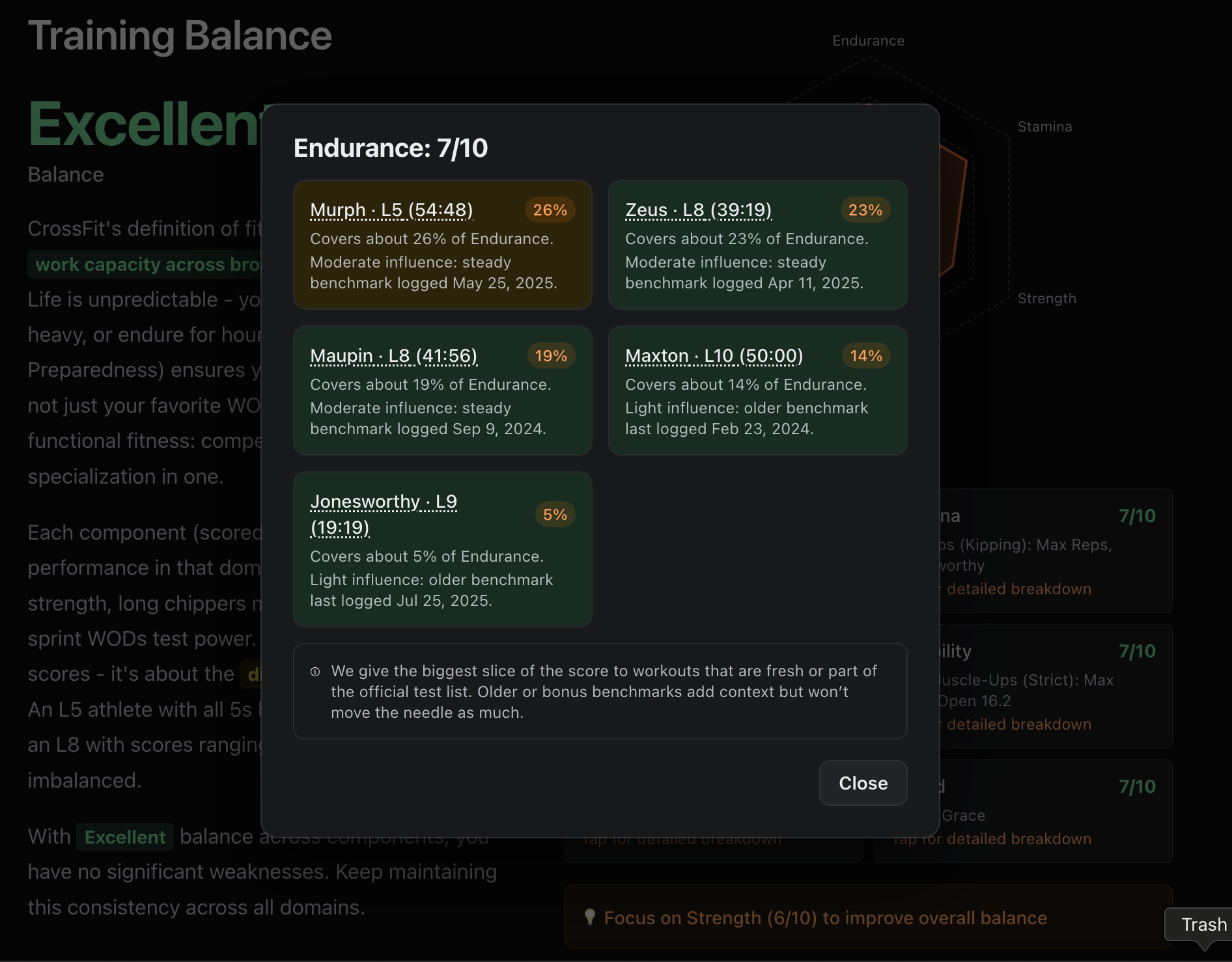

We can derive how good you are at Endurance, Stamina, Power and other GPP components based on your scores on WODs that are high in those:

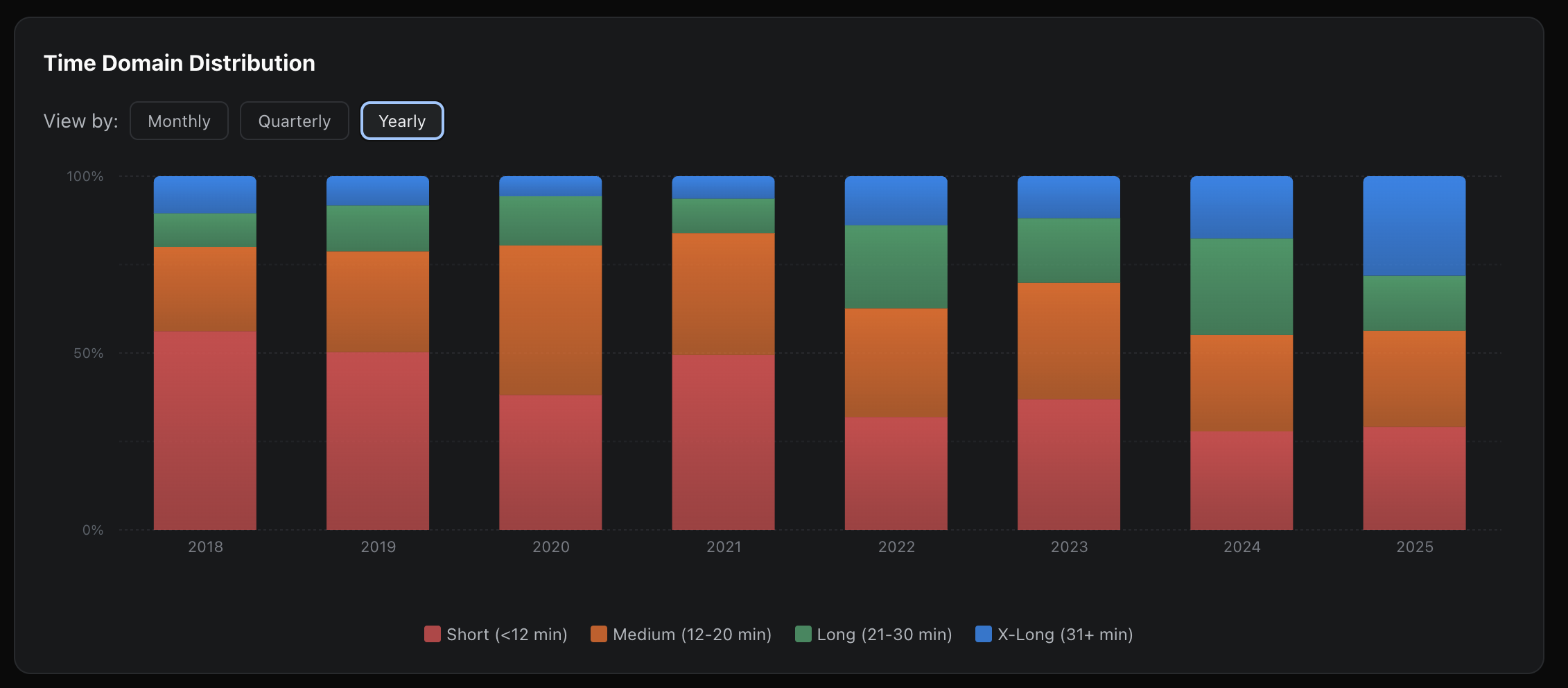

Because we’ve determined time domain of all the wods you’ve ever done, we can show if you’re leaning towards shorter or longer ones. Yes, parsing 1300 entries is expensive but at least we can marvel at the end result 😅. Here is coach Mike’s real data dating back to 2018. You can see that early years prioritize short WODs (<12min) whereas last couple years the focus has shifted towards longer, HYROX-style ones:

And, of course, we have the ability to see all the WODs for any given movement (which is why it’s so important to parse those for all the custom WODs and create proper associations). Here you can see that Mike has done 232 lifetime front squat sessions over 8 years, 157 as dedicated lifts and 75 as part of WODs:

In the interest of brevity, I’ll stop right here. There are other things powering this pipeline which I’m still refining and perhaps can talk about later: male vs. female benchmarks, age-based adjustment of strength and fitness metrics, smart movement aggregation for practice skill screen, logging import errors like movements that don’t match in our DB, or a smart system of retrying LLM when parsers fail.

End goal

Now that I’ve gotten here, I can’t help but wonder: what’s next? and what’s the end goal? I can now replace spreadsheets with this app but it doesn’t solve all of my use cases. The dream would be to have an app that can track all of my workouts. This means:

- It needs to be a native (mobile) app
  - Web apps are great but when in the gym and on the go, let’s be honest, we all prefer native apps.
- Replace SugarWOD completely?
  - PRzilla is able to do this by parsing previous data but what about future data?
  - I would need to either:
    - Implement SugarWOD API integration that’s tied to a box directly.
    - Implement some sort of image recognition of a WOD (snap a TV in your box) that can then be logged directly into our system.
- Allow custom sets and reps logging
  - This is a big one… and it would allow me to switch completely away from Strong app.
  - But first… I’ll need to port Strong app data into our system (perhaps more on that in later posts!)

¹ Alex Viada came out with Hybrid Athlete around the same time.

PRzilla: CrossFit AI companion 9 Sep 2025 4:00 PM (4 months ago)


Why

When I left LinkedIn, I itched to build something in the space dear to my heart — fitness and CrossFit specifically. I also wanted a challenge of building a full-stack app, something I’ve never done before. The app would solve my pain points but I wanted to release it out there for anyone to use. This meant database, auth, users, and production-level user experience. It would be the biggest project I’ve ever done. With the rise of AI-assisted coding, it was a perfect time.

Problem

I’ve been using SugarWOD to track scores for CrossFit workouts (WODs) prescribed by my gym. But SugarWOD was never designed to be a standalone tracker: it’s missing many WODs, those that are there don’t have any info, and it doesn’t have a way to discover new ones. So I supplemented it with wodwell.com to find more workouts and track their scores.

Wodwell has its own issues: full of ads, a clunky UI, and it's slow. More importantly, I wanted to be in control of my data and wodwell has no export. It also wasn’t great that my workout history and performance data was split between two platforms.

What

All of this sounded like a perfect opportunity to build just that: a full-stack app with an incredibly easy and fast search through 1,000 of the most popular WODs. It would let you log scores for any of them, track your progression over time, and favorite WODs for later. As I started coding with AI, I quickly realized I could go even further: we could get insights into WODs via AI analysis (time domain, difficulty, L1-10 benchmarks, etc.).

How

Before I set out on this journey, I wanted to define a few foundational tenets that were non-negotiable.

AI-driven

“Vibe coding” exploded as I was starting this project. My LinkedIn feed was full of “this is incredible” and “this will never work” posts. I came across Addy’s article on Cline and decided to build this app entirely with AI as a matter of principle. No manual coding. It would be a perfect experiment since this was not a trivial one-pager vibe-coded in a day.

Mobile-ready

Always a fun UI challenge and certainly a must these days unless you provide a native app. In the context of CrossFit, you often need to look things up or log your scores while in the gym. Every page needed to be responsive and every UI concept needed to be adapted to small and large screens.

Dark mode

Not a terribly complicated constraint, and largely solved by using the right foundational abstractions, but it does add cognitive complexity, especially if you’re working with AI, as you need to ensure it complies and uses the right tokens.

Stateful

An often overlooked aspect, but it’s what separates a polished, predictable app from a clunky, frustrating experience. URLs are the source of truth. Any important UI state change needs to be reflected in them. Now you have the power to reload the page, bookmark it, share it, go back, and so on.
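In practice this means every piece of filter state has a serialized form in the query string and can be rebuilt from it. A small sketch using the standard `URLSearchParams` API; the `WodFilters` shape and the parameter names (`q`, `difficulty`, `sort`) are made up for illustration and are not PRzilla's actual URL scheme.

```typescript
interface WodFilters {
  search: string;
  difficulty: string[];
  sort: "popular" | "newest";
}

const DEFAULT_FILTERS: WodFilters = { search: "", difficulty: [], sort: "popular" };

// Serializes UI state into a query string, omitting defaults to keep URLs short.
function filtersToQuery(filters: WodFilters): string {
  const params = new URLSearchParams();
  if (filters.search) params.set("q", filters.search);
  for (const level of filters.difficulty) params.append("difficulty", level);
  if (filters.sort !== DEFAULT_FILTERS.sort) params.set("sort", filters.sort);
  return params.toString();
}

// Rebuilds UI state from the URL, falling back to defaults for anything
// missing or unrecognized so a hand-edited URL never breaks the page.
function queryToFilters(query: string): WodFilters {
  const params = new URLSearchParams(query);
  return {
    search: params.get("q") ?? DEFAULT_FILTERS.search,
    difficulty: params.getAll("difficulty"),
    sort: params.get("sort") === "newest" ? "newest" : "popular",
  };
}
```

Because the round trip is lossless, reload, bookmark, share, and back/forward all come for free: the page only ever renders what the URL says.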

Fast

Next.js is known for SSR support out of the box; this means fast server-driven apps. This was a great opportunity for me to learn and experiment with these concepts.

The big lesson I took from introducing these at the beginning: each constraint is a liability, another dimension of your product surface. Be careful about creating too many from the start. Think of the iPhone and its lack of copy-paste in its first few years.

A feature alone is a single point or a line (1D).

- Add a "mobile-ready" constraint, and that line now exists on a 2D plane (feature x device). You have to test both states.

- Add "dark mode," and the plane becomes a 3D cube (feature x device x theme).

- Add "SSR-ready," and you're now in a 4D space.
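The growth is easy to see by enumerating the combinations. A tiny sketch; the dimension names and values are illustrative, and modeling "SSR-ready" as server render vs. client navigation is an assumption.

```typescript
// Builds every combination of the given dimensions (a cartesian product).
function cartesian(
  dimensions: Record<string, string[]>,
): Record<string, string>[] {
  let combos: Record<string, string>[] = [{}];
  for (const name of Object.keys(dimensions)) {
    const values = dimensions[name] ?? [];
    const next: Record<string, string>[] = [];
    for (const combo of combos) {
      for (const value of values) {
        next.push({ ...combo, [name]: value });
      }
    }
    combos = next;
  }
  return combos;
}

// Three two-valued constraints: every single feature now has 2 * 2 * 2 states.
const statesPerFeature = cartesian({
  device: ["mobile", "desktop"],
  theme: ["light", "dark"],
  rendering: ["ssr", "client"],
});
```

Each additional two-valued constraint doubles the number of states every feature has to look right in.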

From Zero to SaaS in 150 Days

I’ve now spent about 5 months working on this daily-ish. I learned a ton about AI assisted coding and wrote about most of it. The lessons never stop and I post them weekly on LinkedIn.

What started as a simple way to see the most common WODs quickly turned into a powerful UI that lets you find just the right workout. With the power of AI, I’ve gone deep on classifying workouts to create helpful data that doesn’t exist anywhere else: difficulty, modality, training stimulus, time domain, and workout characteristics via tags.

When I ask AI to summarize the complexity¹ of the app now:

PRZilla is a large-scale production web application with 123,000 lines of TypeScript code across 818 files, featuring 19 database tables, ~109 React components in 304 TSX files, and 67 tRPC API procedures. The codebase includes 1,532 test cases with 256 E2E tests across 40 test files ensuring critical user journeys, 58 service modules handling complex business logic including 6 AI-powered features, and manages 922 predefined workouts with sophisticated scoring algorithms. This represents approximately 2-3 years of full-time development effort, comparable in complexity to a mid-sized SaaS product.

It’s incredible to see the kind of power you wield with AI. The breadth and depth of functionality certainly feels like it would have taken me 2-3 years. I haven’t written any of this code and honestly can’t imagine having to ever write code manually again.

Cutting wood by hand is slow. Using an electric saw freehand is fast, but it’s how you get a crooked cut. The real leverage comes when you bolt the saw in place at a precise angle, set the exact speed, and let it execute a perfect cut in a minute.

That is exactly how I build software now. I don’t write code manually. And I don't just hand a task to an AI. Instead, I architect the system, protect it with guardrails so it stays the course, and give it specific instructions so it knows exactly the path to follow.

My role has changed: I am the architect and the guardrail engineer.

The Hard Part is Still the Hard Part

Having spent a good amount of time not only developing new features but also refactoring, redesigning UI, and fixing bugs, I can say with good confidence: your app will not fall apart. AI is capable of handling 95%. The remaining 5% are complex cases that usually reside at the edges of larger system integrations or are just complex in nature. Those would also be complex for a human, likely even more so.

For example, I’ve struggled to implement a well-working lazy loading of WOD cards on the main page because there was already a complex state management of various filters that had to all work in unison and support SSR; introducing lazy loading created X^Y^Z level of state management complexity and AI struggled to keep everything together without small bugs popping up here and there.

These are the fundamentally hard issues inherent to engineering. AI offers no magic wand for challenges like:

- The "dependency hell" of npm packages.
- The chaos of flaky end-to-end tests.
- Navigating features with no documentation.

AI also can’t make your app stable if the underlying structure is rotten: fragmented state, logic duplication, complex branches with subtle bugs. But it’s surprisingly good at finding those and fixing them in a heartbeat.

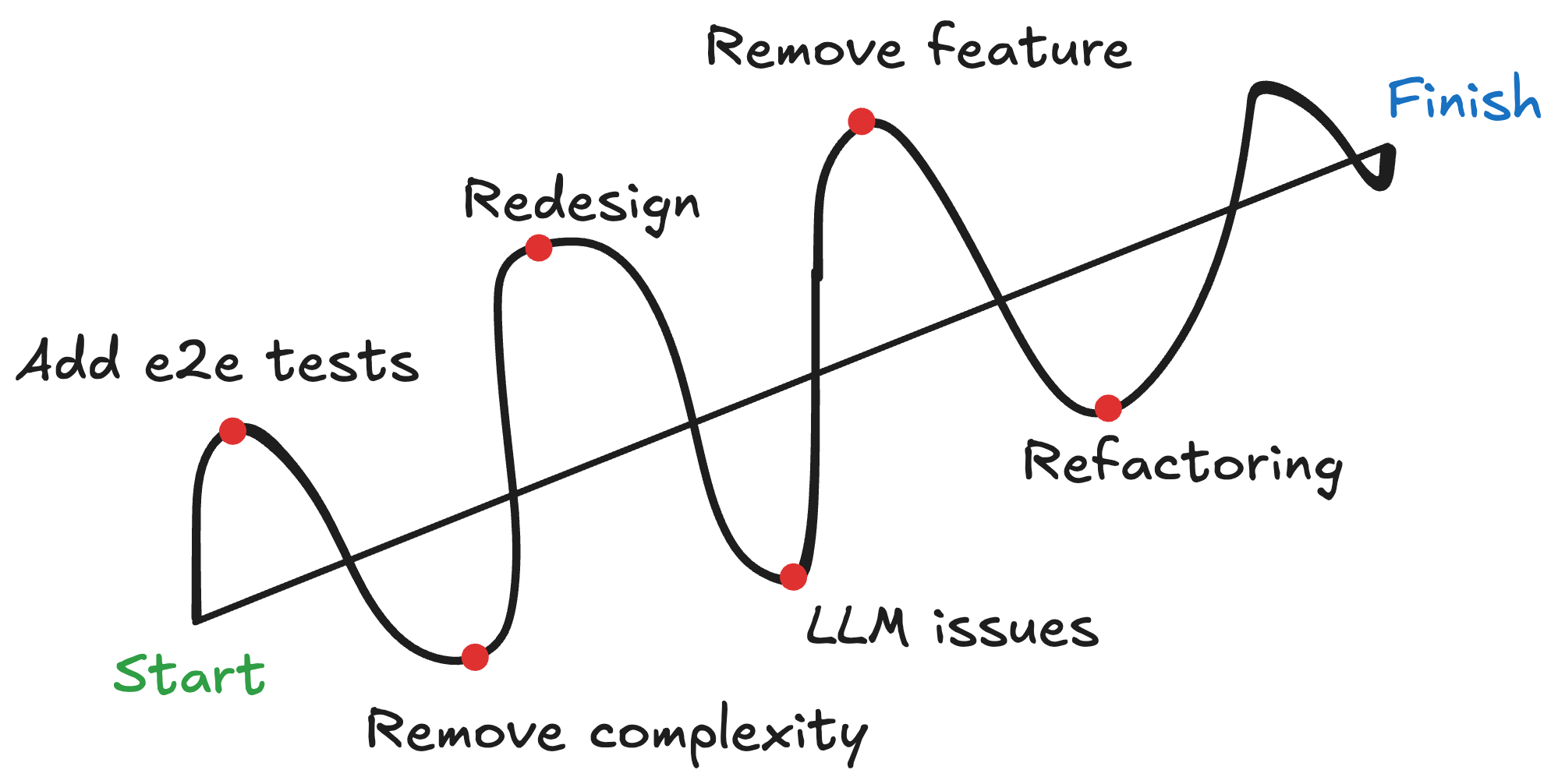

Code != Product

When I look at the app right now I feel like it would have taken me much less time to build the “final” version. Yet, the reality is that development works like this in non-trivial apps:

AI allows you to travel that curvy path much faster. Although you have to be careful because without proper guardrails you can start swinging too far left and right: you created too much code, too many experiments, pushed things to prod too fast, all leading to too much liability.

Production-ready

You develop a feature, you have 1 problem.

You decide to release it into production, now you have 10 problems.

Besides the app looking “good” and working “smooth”, the most important production-level aspect is making sure you don’t break things. In the last 10 years I’ve worked at big companies where, despite often being oncall, you always have dedicated SRE help. You also have a well-oiled infra machine to detect errors in prod and notify you.

Thankfully, for small full-stack apps like mine, platforms like PostHog & Sentry are incredible and provide all-in-one solutions for error monitoring (and more) with generous basic tiers.

No broken windows

I followed a pretty standard, tiered approach to release things safely:

- TypeScript must pass
- Linter must pass
- Unit tests must pass
- E2E tests must pass
- Test locally to ensure things work
- Always push to a branch in production (Vercel makes it easy). This is basically your staging environment since it’s hitting production DB.
- Manually test feature in prod branch, merge into main if it works well. An even safer option would be to introduce feature flags with gradual rollouts but I didn’t want that complexity just yet.
- Finally, watch out for spikes in errors following the rollout of a commit.

Big takeaway here was not to trust AI with E2E tests. I didn’t pay too much attention to all of the assertions at first, then quickly discovered that bugs weren’t being caught. Turns out quality of E2E assertions was subpar: tests relied only on visibility checks, many used vague assertions or hard‑coded values, and almost none validated data against the database. Tests were slow and flaky due to waitForTimeout calls and text-based or CSS-class selectors. I ended up adding lint rules (via eslint-plugin-playwright) to ensure AI doesn’t break this in the future.

Good design

I struggled with design at first but later found that it’s often a matter of the right prompt. For example, this was prompted to look like Apple Fitness / Whoop in 2025 with Sonnet 4 (which translates to clean, modern and minimal UI with oversized elements):

Compare to the old one:

To summarize, I think at least 80% of my time was spent on making things polished: figuring out UI/UX, refining UI/UX… endlessly, testing various permutations of an app, thinking through edge cases, ensuring it’s tested well, ensuring it’s feature-complete yet not over-engineered, documenting it well, deploying it correctly, and so on.

In the next post, I’ll dive deeper into some of the fitness-heavy concepts I’ve implemented in the app. We’ll talk more about that colorful “My Fitness” page and the complex LLM-powered pipeline that powers it!

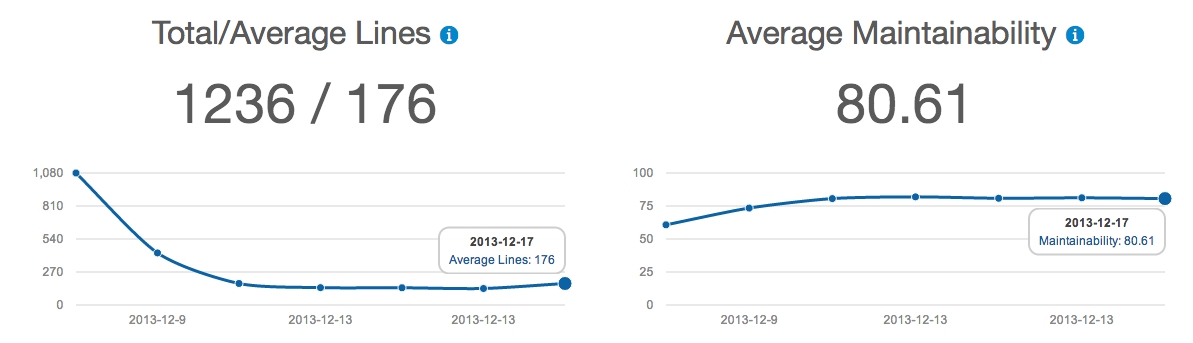

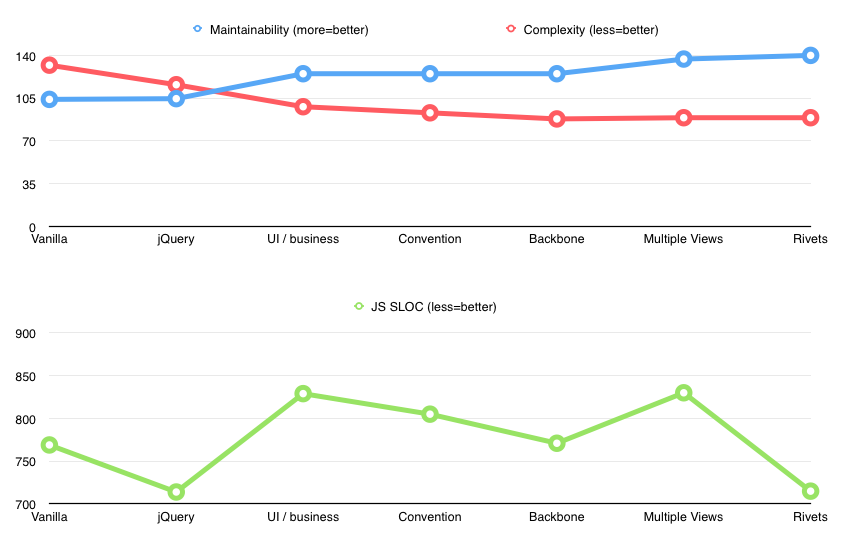

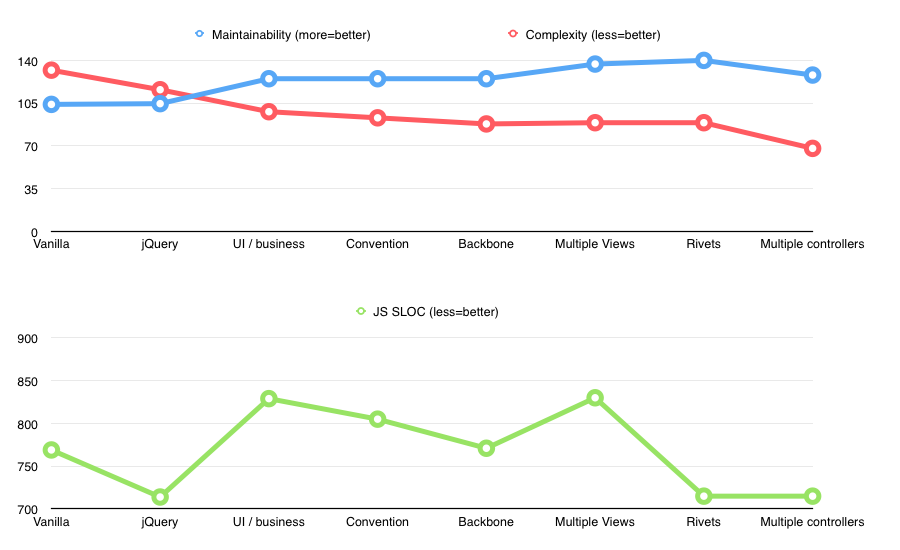

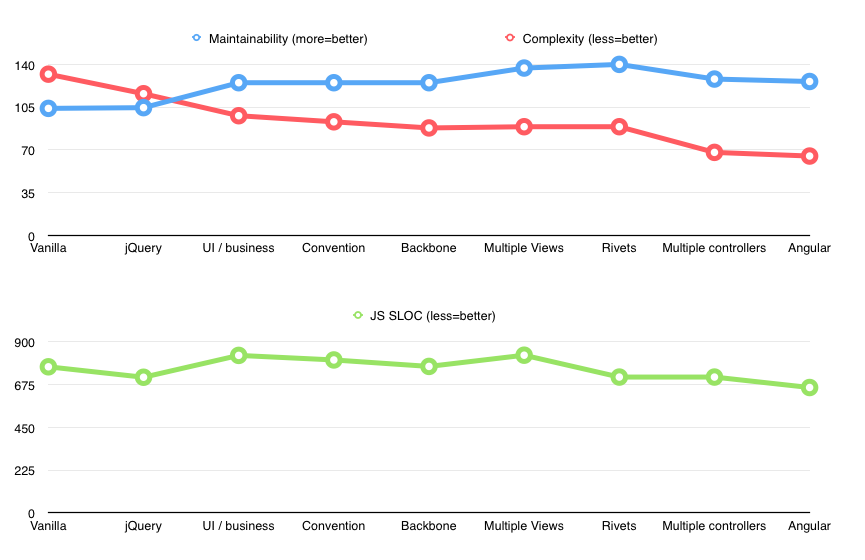

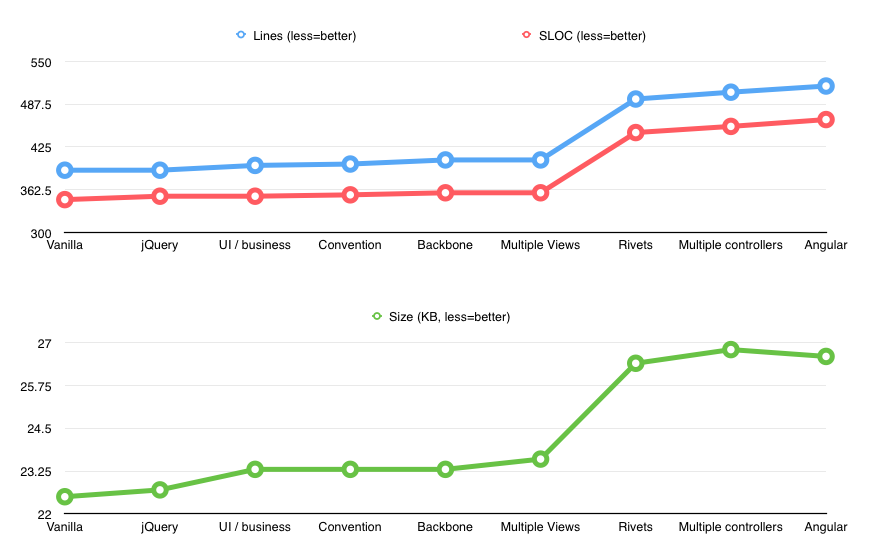

¹ Code metrics don't tell the whole story, but they do provide a rough idea of this app's scale. Recognizing that AI can introduce bloat, I carefully reviewed and streamlined all committed code. I estimate the result is a lean codebase with no more than 15-20% potential cruft.

Using AI to accurately predict CrossFit workout difficulty and performance 30 May 2025 4:00 PM (7 months ago)


One of the things I’ve geeked out on recently was using AI to assign difficulty and performance bands to a WOD. Not just one but 907 of them (and counting).

I’m building PRzilla.app, which allows you to log scores for various WODs, track your performance, and see all kinds of cool charts about it: how you’re progressing, what biases and weaknesses there are, and which movements to prioritize.

Performance levels

In order to measure athlete performance, we need to compare it against a set of “objective” levels. For example, it is commonly accepted that Fran can be done within 3 minutes if you’re an elite CrossFit athlete, with the rest of the bands looking like this:

What is a good score for the “Fran” workout?

- Beginner: 7-9 minutes

- Intermediate: 6-7 minutes

- Advanced: 4-6 minutes

- Elite: <3 minutes

Wodwell is likely the biggest database of WODs, and it shows bands for some of the most common ones but not all of them. I decided to bring these bands to PRzilla and extend them to all workouts.

But how do we measure all of them?

Ideally, we’d have a dedicated panel of experts going over thousands of WODs to figure all of this out. Thankfully, current top-tier AI models are trained on a sufficient volume of CrossFit data and have strong enough reasoning capabilities to do this in a much shorter time.

Subjectivity

Here’s the thing: absolute scores are bound to be subjective and context-dependent!

Even though Fran times are “commonly accepted“ as <3, 4-6, 6-7, 7-9 there can also be a decent variation among them when adjusted for male vs. female, year/decade measured (CF performance is usually trending upwards), in general population vs. experienced CrossFitters, in CrossFitters vs. specialized athletes (runners/weightlifters/calisthenic warriors), based on country/area or a specific gym (Mayhem vs. your typical box), and many more.

This makes calculations tricky but I think it’s still possible to create a range that resembles an averaged-out, close-enough representation. Our “5k run” results are likely more lax than the ones actual runners would use. But for most workouts, it’s possible to use reasoning to tell that 6 minute Fran is roughly a (top of) intermediate or a (bottom of) advanced.

More importantly, as long as our scoring system is consistent across the board, it’s great for measuring relative performance: either against yourself over time, or against others.

CrossFit specific AI analysis

Top-tier AI models are already trained on enough CrossFit data to be able to determine most of these bands. But we need a few extra layers of careful orchestration to create a solid system at large:

-

SOTA thinking model(s)

I used mostly Gemini 2.5 Pro (sometimes Sonnet 3.7) since those were the best reasoning models at the moment. -

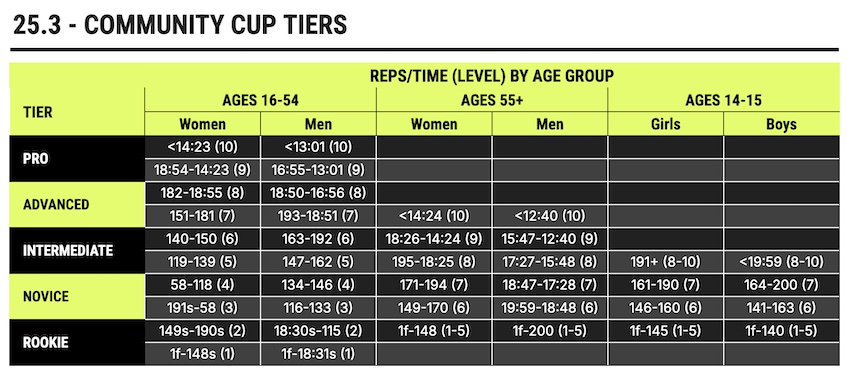

Prompt and context

Make it act like a CrossFit coach / exercise scientist. “Use your extensive CrossFit knowledge”. Give it extra data to consult and base off of to narrow the scope and context, e.g. Community Cup tiers and measurements. -

Memory bank and examples

In practice, models are limited by 100-200K context window so we can’t send our entire JSON consisting of millions of tokens. For relative stability across batches, we need to constantly orient our model to return relatively similar calculations. I used a combination of memory bank + specific detailed documentation for this plan/feature + few examples of already existing calculations and reasoning across varied workouts. Reasoning was performed in batches to prevent hallucinations and tackle cost (this was expensive as is). -

Internal error correction

At the end of calculation, model needs to double-check its own analysis of specific WOD for correctness. -

External error correction

At the end of each batch of calculations, model takes few random existing scores and compares them to a current calculation; this ensures relative stability across many batches.

Percentiles

CrossFit popularized percentile-based scores during the Open, and — as part of Community Cup — they recently rolled out a scoring system that consists of 5 levels that map directly to percentiles — Rookie (<21%), Novice (22-43%), Intermediate (44-65%), Advanced (66-87%), and Pro (>88%).
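As a concrete reading of those tiers, here is a small lookup from percentile to level name. The published ranges leave small gaps (21 to 22, and exactly 88), so this sketch assumes each tier starts at the lower bound of its range (22, 44, 66, 88); the function and constant names are hypothetical.

```typescript
type CupLevel = "Rookie" | "Novice" | "Intermediate" | "Advanced" | "Pro";

// Lower bound of each tier, highest first. Boundary handling is an assumption.
const CUP_LEVEL_FLOORS: Array<[number, CupLevel]> = [
  [88, "Pro"],
  [66, "Advanced"],
  [44, "Intermediate"],
  [22, "Novice"],
  [0, "Rookie"],
];

function communityCupLevel(percentile: number): CupLevel {
  if (!(percentile >= 0 && percentile <= 100)) {
    throw new RangeError("percentile must be between 0 and 100");
  }
  for (const [floor, level] of CUP_LEVEL_FLOORS) {
    if (percentile >= floor) return level;
  }
  return "Rookie";
}
```

With only five buckets, each tier spans roughly 22 percentile points, which is the granularity problem a finer scale is meant to address.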

I initially went with wodwell-inspired 4 tiers of Beginner, Intermediate, Advanced, Elite, then realized that there wasn’t enough granularity. When working on GPP charts, the scale was 1-10 and plotting “Advanced” on it was washing out the result too much. 60% and 80% could both be considered Advanced, but going from one to the other might take you a few years! Similarly with the GPP wheel chart: if your stamina is at 60% and strength is at 80%, you would want to see that unevenness reflected on the chart.

Example: Frelen

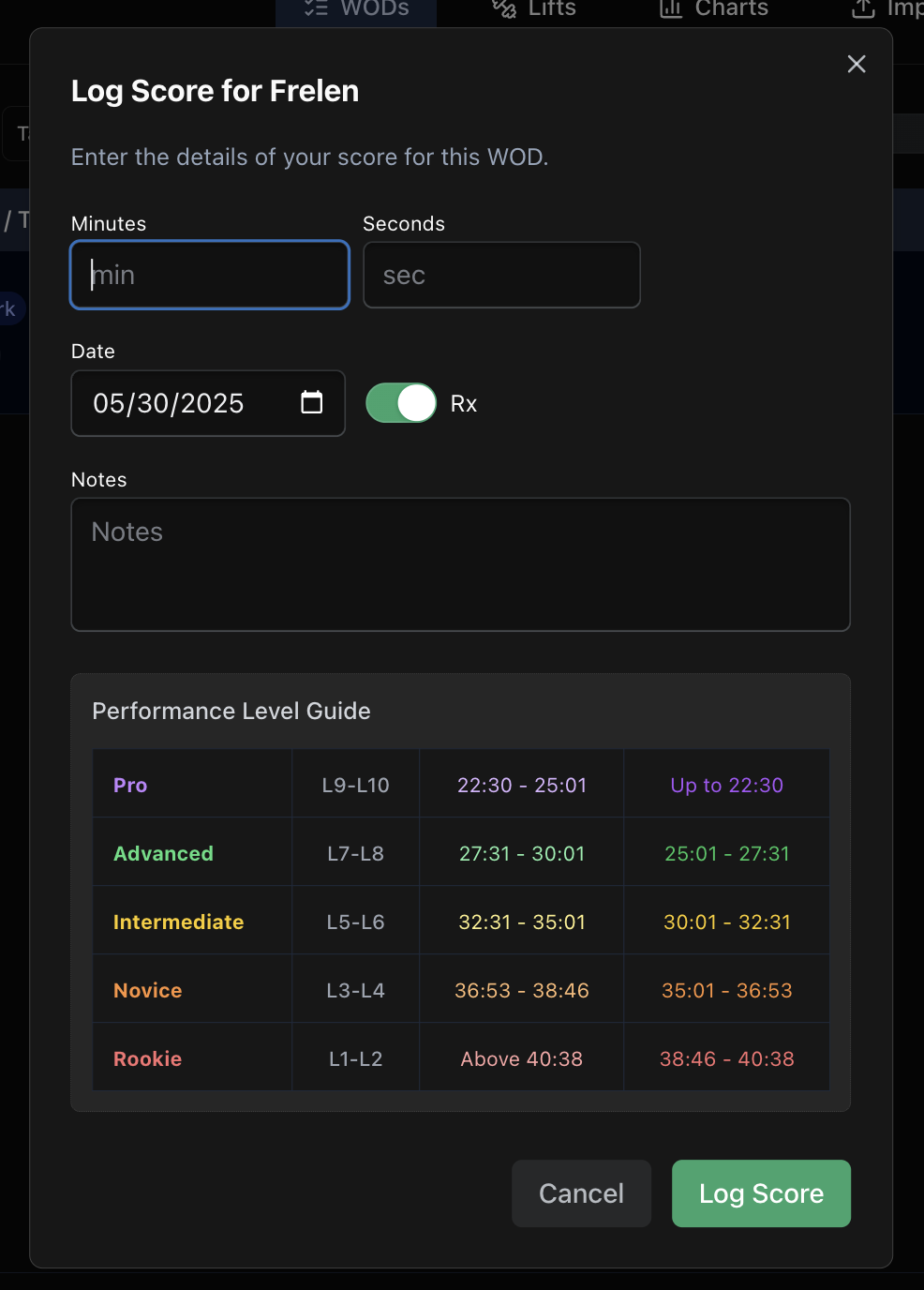

Here is a raw example of one of the calculations and model’s reasoning. I already had a 4 tier system/data that was generated using similar heuristic; each WOD had a difficulty, difficultyExplanation (the one model derived from its reasoning before), type, and levels.

I then used a model to derive 10 levels by giving it the existing framework of how we derived those 4 levels + the new system of 10 levels + examples.

Notice how it analyzes the WOD step by step: it understands that it’s similar to “Helen” and “Eva” since both follow a similar triplet pattern of run, x, pull-ups, with this one being closer to “Eva” in terms of volume; it calculates rough times for the run and thrusters while accounting for fatigue and number of rounds; it makes the edges of the 10-tier scale wider than those of the 4-tier one; and it even considers that, because it’s a “hard” WOD, the beginner level should be extended by 7min.

This now allows us to see where our scores stand for any WOD, such as this L7 that falls within 4:00-5:00 for Diane.

Before doing a workout, you can take a look at the performance guide and have a better idea which time to shoot for to get into a certain percentile.

AI-derived difficulty

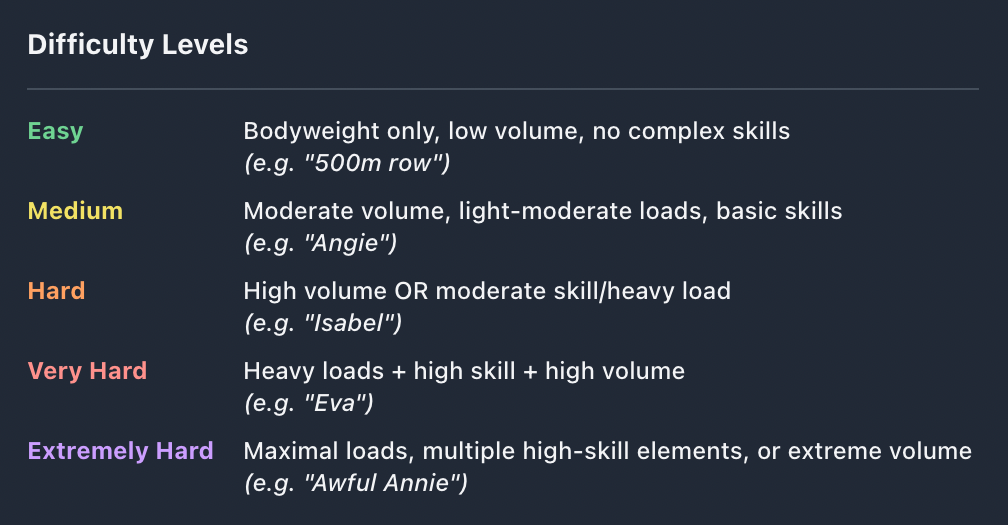

Using similar training and reasoning, I was also able to create difficulty levels for all WODs. You’ve already seen “Frelen” categorized as “Hard” earlier.

Here’s the actual documentation used when orienting AI to work with this data. AI uses this as a framework to understand general structure of a workout, and then adjusts difficulty based on modifiers like volume, skill, and load.

Difficulty examples

This produces strikingly accurate results. Note AI’s explanation for why difficulty is set a certain way:

- 1k row: Easy, “A standard benchmark test of 1000 meter rowing speed.”
- Baseline¹: Easy, “A classic CrossFit introductory benchmark testing basic rowing and bodyweight movement capacity.”
- Annie²: Medium, “Girl WOD (Ladder Couplet). Tests double-under skill proficiency and core endurance in a fast-paced descending rep scheme (50-40-30-20-10).”
- Wittman³: Medium, “Hero WOD (Triplet). 7 rounds combining moderate KB swings, light power cleans, and box jumps. Tests moderate power endurance/conditioning.”
- Dork⁴: Hard, “Hero WOD (Triplet). 6 rounds combining DUs, heavy KB swings (70lb), and burpees. Tests conditioning, skill, and endurance over significant volume.”
- Kelly⁵: Hard, “Girl WOD (Triplet). 5 rounds: run, high-vol box jumps, high-vol wall balls. Tests high-volume conditioning/endurance.”
- Maggie⁶: Very Hard, “Five rounds of high-volume, high-skill gymnastics movements (HSPU, Pull-ups, Pistols). Tests advanced gymnastics capacity and endurance.”
- The Seven⁷: Very Hard, “Hero WOD. 7 rounds of 7 reps: HSPU, heavy thrusters (135lb), KTE, heavy DL (245lb), burpees, heavy KB swings (70lb), pull-ups. Extremely demanding strength/skill/volume across 7 movements.”
- Atalanta⁸: Extremely Hard, “Long Murph-style chipper with vest, high volume gymnastics.”
- 2007 Reload⁹: Extremely Hard, “Long row followed by high-skill gymnastics and heavy shoulder-to-overheads demand elite capacity and strength.”

Fun fact: the “Extremely Hard” category did not exist until I introduced CrossFit Games workouts, at which point AI proactively came up with it, and it made sense as the relative difficulty was objectively higher in those! Only 15 out of 907 are currently categorized as such.

Effort vs Complexity

Some of you will certainly scoff at a “1k row” categorized as easy. A simple movement like that can absolutely be made into a grueling test of strength, grit, endurance and stamina. The difficulty in PRzilla is not about how hard something can be made but how demanding it is on skill/strength/endurance. A 1k row is easy in the sense that it can be performed by almost any person and can be completed with little effort as prescribed. You can’t say the same about Amanda, which will have you do 21 ring muscle-ups together with 21 squat snatches at 135lb — feats that can take you years to master individually, not to mention being able to superset them.

Extreme skills

Speaking of extremely hard tests, it was interesting to see how AI estimates something like “Triple unders: max reps” or “Free standing handstand push-ups: max reps”:

This is a "Very Hard" test of max unbroken triple-unders. This is an extremely high-skill movement. Even a single rep is a significant achievement for many.

High-skill jump rope variation requiring exceptional timing, coordination, and wrist speed.

- L1: Cannot complete 0 reps (effectively)

- L2: 0 reps

- L3: 0 reps

- L4: 0 reps

- L5: 1-4 reps

- L6: 5-10 reps

- L7: 11-15 reps

- L8: 16-21 reps

- L9: 22-36 reps

- L10: >=36 reps

Because we maintain relative difficulty, even an intermediate score on such tests is a great achievement. And the model understands that beginners (up until level 5) are unlikely to complete even 1 rep.

Timeline and adjusted performance

Once we know your performance levels on all the WODs, it’s easy to plot them over time for a chart like this, showing “fitness level” progression and trend. And here’s something even more fun — because we have each WOD’s difficulty, we can adjust your score to be more representative of real-life performance (meaning that getting “Intermediate” in a “Very Hard” WOD is closer to getting “Advanced” in a “Hard” one):

adjustedLevel = cap(scoreLevel + difficultyBonus, 0, 10)

…where difficultyBonus is something simple like:

Easy: -0.5, Medium: +0.0, Hard: +0.5, Very Hard: +1.0, Extremely Hard: +1.5
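As a rough illustration, the adjustment above could be written like this in JavaScript. This is only a sketch under assumptions: the names `DIFFICULTY_BONUS`, `clamp` and `adjustedLevel` are made up here, and the bonus values are simply the ones listed above, not necessarily what PRzilla ends up using.

```javascript
// Bonus per difficulty category, taken from the list above.
var DIFFICULTY_BONUS = {
  'Easy': -0.5,
  'Medium': 0,
  'Hard': 0.5,
  'Very Hard': 1,
  'Extremely Hard': 1.5
};

// Keep a value within [min, max]; this plays the role of cap() in the formula.
function clamp(value, min, max) {
  return Math.min(Math.max(value, min), max);
}

// scoreLevel: performance level on a 0-10 scale; difficulty: category name.
function adjustedLevel(scoreLevel, difficulty) {
  if (!Object.prototype.hasOwnProperty.call(DIFFICULTY_BONUS, difficulty)) {
    throw new Error('Unknown difficulty: ' + difficulty);
  }
  return clamp(scoreLevel + DIFFICULTY_BONUS[difficulty], 0, 10);
}
```

With these numbers, a level 5 score on a “Very Hard” WOD and a level 5.5 score on a “Hard” one both come out as 6, and the clamp keeps results from leaving the 0 to 10 range.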

Work in progress

Give these estimates a try — do they feel right? Could anything be improved? I’m planning to refine these in PRzilla for an even deeper understanding of workout stimulus; similar to community cup, we could be better at gender and age group adjustments. There are also gaps right now with certain WODs that have a timecap and so are a hybrid of time (if completed within timecap) and reps/load (if completed at timecap).

In the future, I’m planning to add an option to input any custom WOD and get an estimate of its difficulty and performance levels.

[1] For Time: 500 meter Row, 40 Air Squats, 30 Sit-Ups, 20 Push-Ups, 10 Pull-Ups

[2] 50-40-30-20-10 Reps For Time: Double-Unders, Sit-Ups

[3] 7 Rounds For Time: 15 Kettlebell Swings (1.5/1 pood), 15 Power Cleans (95/65 lb), 15 Box Jumps (24/20 in)

[4] 6 Rounds For Time: 60 Double-Unders, 30 Kettlebell Swings (1.5/1 pood), 15 Burpees

[5] 5 Rounds For Time: 400 meter Run, 30 Box Jumps (24/20 in), 30 Wall Ball Shots (20/14 lb)

[6] 5 Rounds for Time: 20 Handstand Push-Ups, 40 Pull-Ups, 60 Pistols (Alternating Legs)

[7] 7 Rounds for Time: 7 Handstand Push-Ups, 7 Thrusters (135/95 lb), 7 Knees-to-Elbows, 7 Deadlifts (245/165 lb), 7 Burpees, 7 Kettlebell Swings (2/1.5 pood), 7 Pull-Ups

[8] For Time: 1 mile Run, 100 Handstand Push-Ups, 200 Alternating Pistols, 300 Pull-Ups, 1 mile Run. Wear a Weight Vest (20/14 lb)

[9] For Time: 1,500 meter Row Then, 5 Rounds of: 10 Bar Muscle-Ups, 7 Shoulder-to-Overheads (235/145 lb)

Javascript quiz. ES6 edition. 3 Nov 2015 3:00 PM (10 years ago)

Javascript quiz. ES6 edition.

Remember that crazy Javascript quiz from 6 years ago? Craving to solve another set of mind-bending snippets no sensible developer would ever use in their code? Looking for a new installment of the most ridiculous Javascript interview questions?

Look no further! The "ECMAScript Two Thousand Fifteen" installment of good old Javascript Quiz is finally here.

The rules are as usual:

- Assuming ECMA-262 6th Edition

- Implementation quirks do not count (assuming standard behavior only)

- Every snippet is run as a global code (not in eval, function, or module contexts)

- There are no other variables declared (and host environment is not extended with anything beyond what's defined in a spec)

- Answer should correspond to exact return value of entire expression/statement (or last line)

- "Error" in answer indicates that overall snippet results in a compile or runtime error

- Cheating with Babel doesn't count (and there could even be bugs!)

The quiz goes over such ES6 topics as: classes, computed properties, spread operator, generators, template strings, and shorthand properties. It's relatively easy, but still tricky. It tries to cover various ES6 features — a little bit of this, a little bit of that — but it's certainly still only a tiny subset.

If you can think of other silly riddle ideas to break one's head against, please post them in the comments. For a slightly harder version, feel free to explore some of the tests in our compat table or perhaps something from TC39 official test suite.

Ready? Here we go.

I hope you enjoyed it. I'll try to write up an explanation for these in the near future.

The poor, misunderstood innerText 31 Mar 2015 4:00 PM (10 years ago)

The poor, misunderstood innerText

innerText property.

That quirky, non-standard way of element's text retrieval, [introduced by Internet Explorer](https://msdn.microsoft.com/en-us/library/ie/ms533899%28v=vs.85%29.aspx) and later "copied" by both WebKit/Blink and Opera for web-compatibility reasons. It's usually seen in combination with textContent — as a cross-browser way of using standard property followed by a proprietary one:

Or as the main webcompat offender in [numerous Mozilla tickets](https://bugzilla.mozilla.org/show_bug.cgi?id=264412#c24) — since Mozilla is one of the only major browsers refusing to add this non-standard property — when someone doesn't know what they're doing, skipping textContent "fallback" altogether:
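For reference, the two patterns described above tend to look something like the following. This is a sketch, not the original snippets from this post; the helper names `getText` and `getTextProprietaryOnly` are invented for illustration.

```javascript
// Pattern 1: standard property first, proprietary one as a fallback.
function getText(el) {
  return el.textContent || el.innerText;
}

// Pattern 2: proprietary property only. In a browser without innerText
// this yields undefined, which is what the webcompat tickets were about.
function getTextProprietaryOnly(el) {
  return el.innerText;
}
```

Both helpers only read properties, so they work on any element-like object.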

innerText is pretty much always frowned upon. After all, why would you want to use a non-standard property that does the "same" thing as a standard one? Very few people venture to actually check the differences, and on the surface it certainly appears as if there are none. Those curious enough to investigate further usually do find them, but only slight ones, and only when retrieving text, not setting it.

Back in 2009, I did just that. And I even wrote [this StackOverflow answer](http://stackoverflow.com/a/1359822/130652) on the exact differences — slight whitespace deviations, things like inclusion of <script> contents by textContent (but not innerText), differences in interface (Node vs. HTMLElement), and so on.

All this time I was strongly convinced that there isn't much else to know about textContent vs. innerText. Just steer away from innerText, use this "combo" for cross-browser code, keep in mind slight differences, and you're golden.

Little did I know that I was merely looking at the tip of the iceberg and that my perception of innerText would change drastically. What you're about to hear is the story of Internet Explorer getting something right, the real differences between these properties, and how we probably want to standardize this red-headed stepchild.

The real difference

A little while ago, I was helping someone with the implementation of a text editor in a browser. This is when I realized just how ridiculously important these seemingly insignificant whitespace deviations between textContent and innerText are.

Here's a simple example:

See the Pen gbEWvR by Juriy Zaytsev (@kangax) on CodePen.

Notice how innerText represents almost exactly how text appears on the page. textContent, on the other hand, does something strange — it ignores newlines created by <br> and around styled-as-block elements (<span> in this case). But it preserves spaces as they are defined in the markup. What does it actually do?

Looking at the [spec](http://www.w3.org/TR/2004/REC-DOM-Level-3-Core-20040407/core.html#Node3-textContent), we get this:

This attribute returns the text content of this node and its descendants. [...]

On getting, no serialization is performed, the returned string does not contain any markup. No whitespace normalization is performed and the returned string does not contain the white spaces in element content (see the attribute Text.isElementContentWhitespace). [...]

The string returned is made of the text content of this node depending on its type, as defined below:

For ELEMENT_NODE, ATTRIBUTE_NODE, ENTITY_NODE, ENTITY_REFERENCE_NODE, DOCUMENT_FRAGMENT_NODE:

concatenation of the textContent attribute value of every child node, excluding COMMENT_NODE and PROCESSING_INSTRUCTION_NODE nodes. This is the empty string if the node has no children.

For TEXT_NODE, CDATA_SECTION_NODE, COMMENT_NODE, PROCESSING_INSTRUCTION_NODE

nodeValue

In other words, textContent returns concatenated text of all text nodes. Which is almost like taking markup (i.e. innerHTML) and stripping it of the tags. Notice that no whitespace normalization is performed, the text and whitespace are essentially spit out the same way they're defined in the markup. If you have a giant chunk of newlines in HTML source, you'll have them as part of textContent as well.

While investigating these issues, I came across a [fantastic blog post by Mike Wilcox](http://clubajax.org/plain-text-vs-innertext-vs-textcontent/) from 2010, and pretty much the only place where someone tries to bring attention to this issue. In it, Mike takes a stab at the same things I'm describing here, saying these true-to-the-bone words:

Internet Explorer implemented innerText in version 4.0, and it’s a useful, if misunderstood feature. [...]

The most common usage for these properties is while working on a rich text editor, when you need to “get the plain text” or for other functional reasons. [...]

Because “no whitespace normalization is performed”, what textContent is essentially doing is acting like a PRE element. The markup is stripped, but otherwise what we get is exactly what was in the HTML document — including tabs, spaces, lack of spaces, and line breaks. It’s getting the source code from the HTML! What good this is, I really don’t know.

Knowing these differences, we can see just how potentially misleading (and dangerous) a typical textContent || innerText retrieval is. It's pretty much like saying:

The case for innerText

Coming back to a text editor... Let's say we have a [contenteditable](http://html5demos.com/contenteditable) area in which a user is writing something. And we'd like to have our own spelling correction of a text in that area. In order to do that, we really want to analyze text the way it appears in the browser, not in the markup. We'd like to know if there are newlines or spaces typed by a user, and not those that are in the markup, so that we can correct text accordingly. This is just one use-case of plain text retrieval. Perhaps you might want to convert written text to another format (PDF, SVG, image via canvas, etc.) in which case it has to look exactly as it was typed. Or maybe you need to know the cursor position in a text (or its entire length), so you need to operate on a text the way it's presented. I'm sure there are more scenarios. A good way to think about innerText is as if the text was selected and copied off the page. In fact, this is exactly what WebKit/Blink does — it [uses the same code](http://lists.w3.org/Archives/Public/public-html/2011Jul/0133.html) for Selection#toString serialization and innerText!

Speaking of that — if innerText is essentially the same thing as stringified selection, shouldn't it be possible to emulate it via Selection#toString?

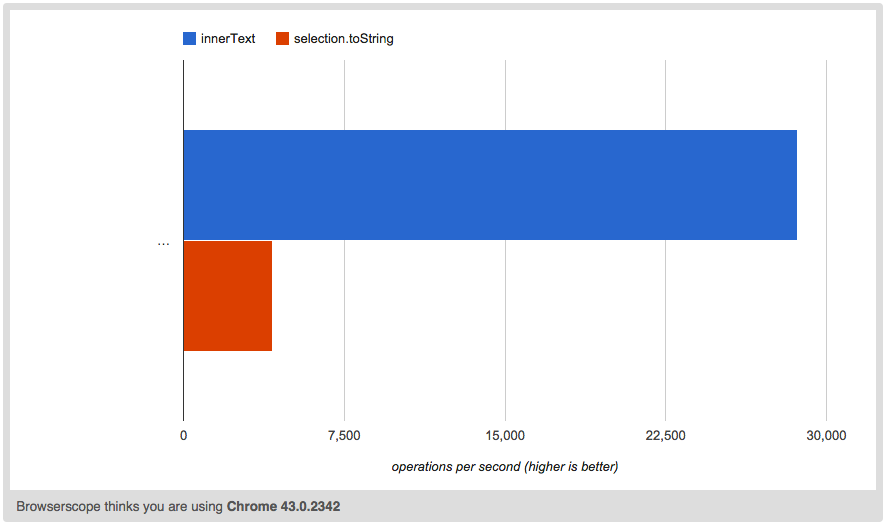

It sure is, but as you can imagine, the performance of such a thing [leaves much to be desired](http://jsperf.com/innertext-vs-selection-tostring/4) — we need to save the current selection, then change the selection to contain the entire element contents, get the string representation, then restore the original selection:
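A minimal sketch of those four steps, using only standard Selection and Range methods. It is not the original snippet from this post, and the function name `getTextViaSelection` is invented here. It also assumes the element is attached to a rendered document, since that is what Selection#toString works from.

```javascript
function getTextViaSelection(el) {
  var doc = el.ownerDocument;
  var selection = doc.defaultView.getSelection();

  // 1. Save the current selection (it may consist of several ranges).
  var savedRanges = [];
  for (var i = 0; i < selection.rangeCount; i++) {
    savedRanges.push(selection.getRangeAt(i));
  }

  // 2. Change the selection to contain the entire element contents.
  var range = doc.createRange();
  range.selectNodeContents(el);
  selection.removeAllRanges();
  selection.addRange(range);

  // 3. Get the string representation.
  var text = selection.toString();

  // 4. Restore the original selection.
  selection.removeAllRanges();
  for (var j = 0; j < savedRanges.length; j++) {
    selection.addRange(savedRanges[j]);
  }

  return text;
}
```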

The problems with this frankenstein of a workaround are performance, complexity, and clarity. It shouldn't be so hard to get "plain text" representation of an element. Especially when there's an already "implemented" property that does just that.

Internet Explorer got this right — textContent and Selection#toString are poor contenders in cases like this; innerText is exactly what we need. Except that it's non-standard, and unsupported by one major browser. Thankfully, at least Chrome (Blink) and Safari (WebKit) were considerate enough to imitate it. One would hope there are no deviations among their implementations. Or are there?

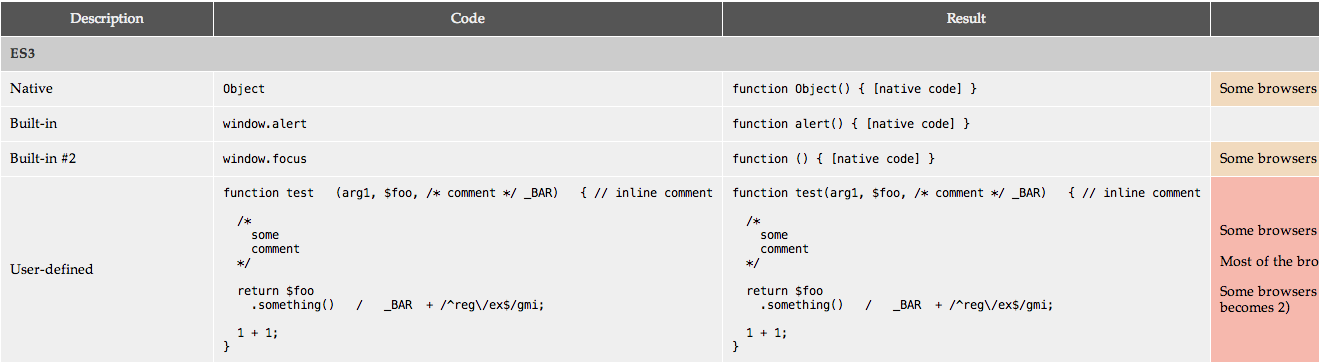

Differences with textContent

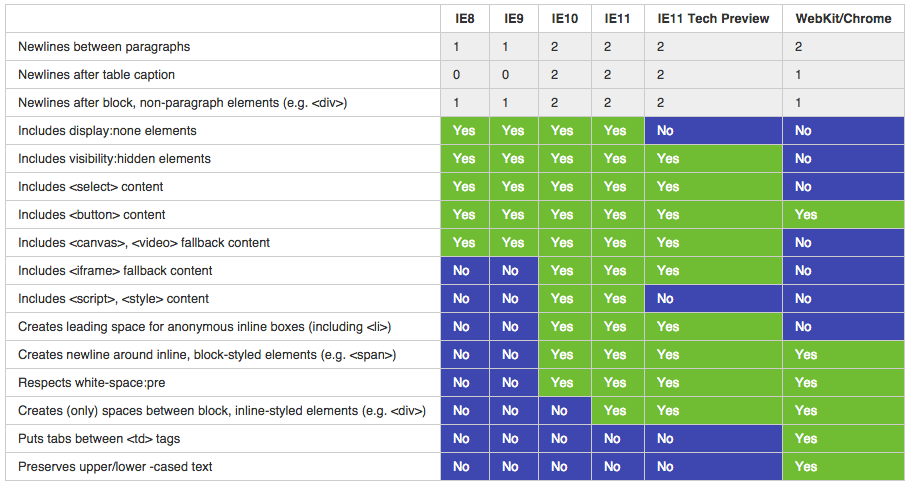

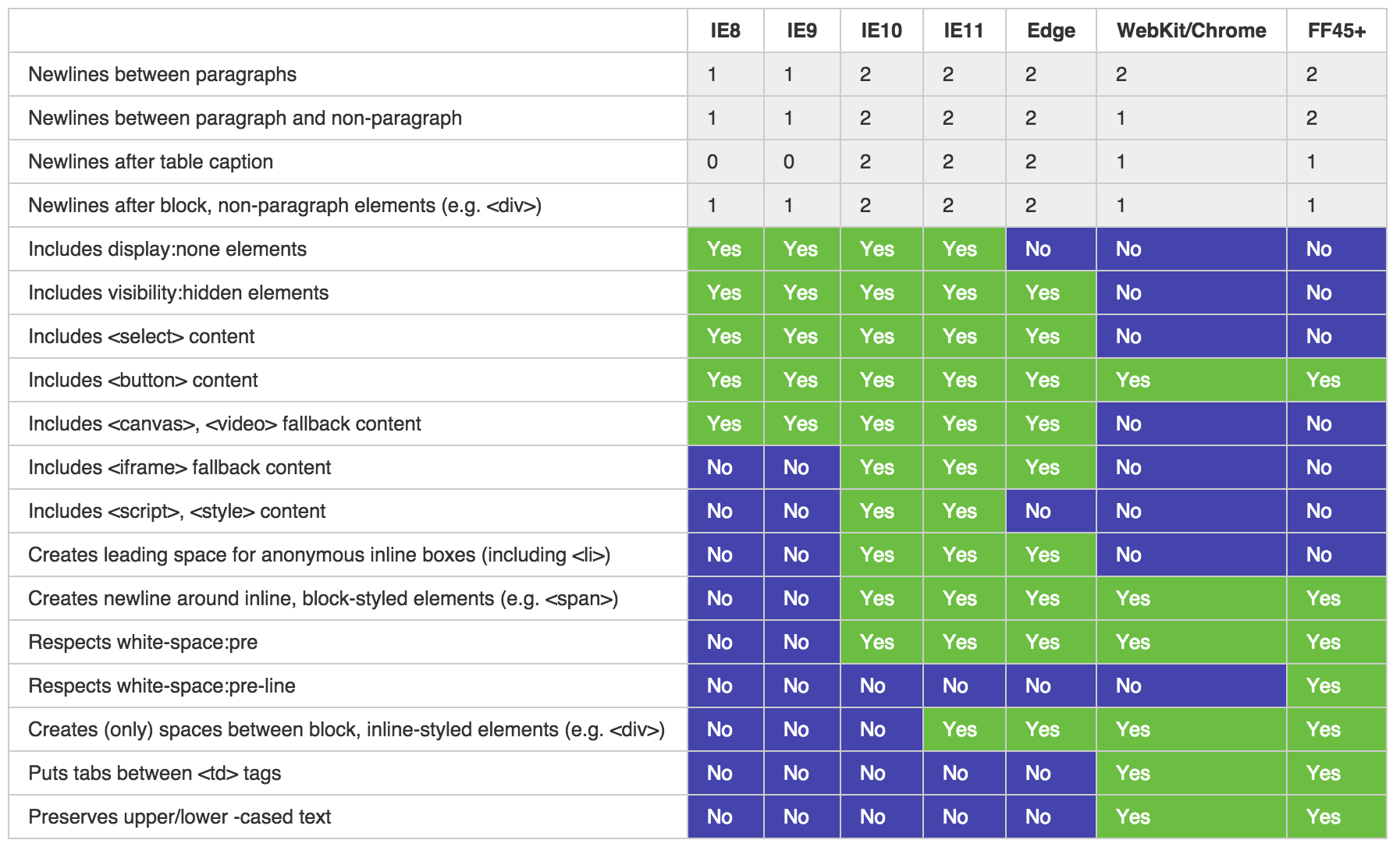

Once I realized the significance of innerText, I wanted to see the differences between the 2 engines. Since there was nothing like this out there, I set out to explore it. In true ["cross-browser madness" traditions](http://unixpapa.com/js/key.html), what I've found was not for the faint of heart.

I started with (now extinct) [test suite by Aryeh Gregor](https://web.archive.org/web/20110205234444/http://aryeh.name/spec/innertext/test/innerText.html) and [added few more things](http://kangax.github.io/jstests/innerText/) to it. I also searched WebKit/Blink bug trackers and included [whatever](https://code.google.com/p/chromium/issues/detail?id=96839) [relevant](https://bugs.webkit.org/show_bug.cgi?id=14805) [things](https://bugs.webkit.org/show_bug.cgi?id=17830) I found there.

The table above (and in the test suite) shows all the gory details, but a few things are worth mentioning. First, the good news — Internet Explorer <=9 are identical in their behavior :) Now the bad — everything else diverges. Even IE changes with each new version — 9, 10, 11, and Tech Preview (the unreleased version of IE that's currently in the making) are all different. What's also interesting is how WebKit copied some of the old-IE traits — such as not including contents of <script> and <style> elements — and then when IE changed, they naturally drifted apart. Currently, some of the WebKit/Blink behavior is like old-IE and some isn't. But even compared to the original versions, WebKit did a poor job copying this feature, or rather, it seems like they've tried to make it better!

Unlike IE, WebKit/Blink insert tabs between table cells — that kind of makes sense! They also preserve upper/lower-cased text, which is arguably better. They don't include hidden elements ("display:none", "visibility:hidden"), which makes sense too. And they don't include contents of <select> elements and <canvas>/<video> fallback — perhaps a questionable aspect — but reasonable as well.

Ok, there's more good news.

Notice that IE Tech Preview (Spartan) is now much closer to WebKit/Blink. There are only 9 aspects they differ in (compared to 10-11 in earlier versions). That's still a lot but there's at least some hope for convergence. Most notably, IE again stopped including <script> and <style> contents, and — for the first time ever — stopped including "display:none" elements (but not "visibility:hidden" ones — more on that later).

Opera mess

You might have caught the lack of Opera in the table. It's not just because Opera is now using the Blink engine (essentially having WebKit behavior). It's also due to the fact that when it wasn't on Blink, it was reaaaally naughty when it came to innerText. To sustain web compatibility, Opera simply went ahead and "aliased" innerText to textContent. That's right, in Opera, innerText would return nothing close to what we see in IE or WebKit. There's simply no point including it in the table; it would diverge in every single aspect, and we can just consider it as never implemented.

Note on performance

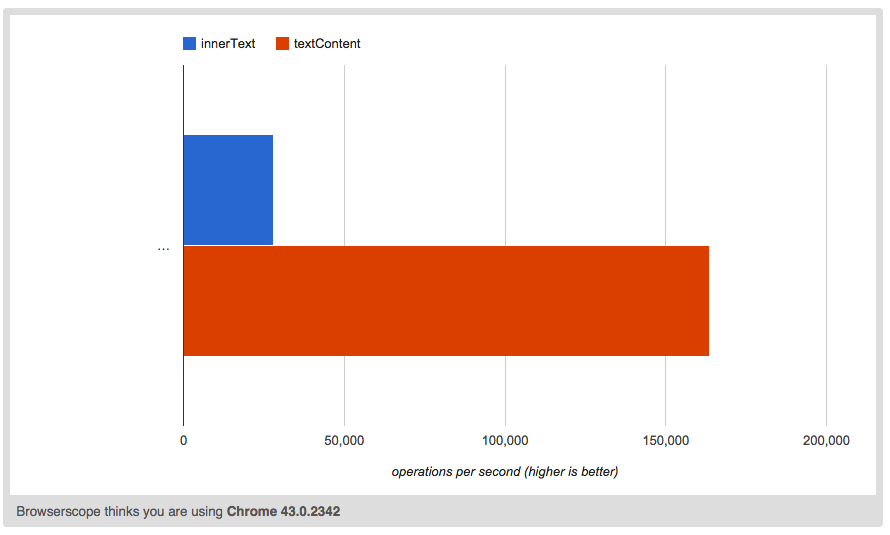

Another difference lurks behind textContent and innerText — performance.

You can find dozens of [tests on jsperf.com comparing innerText and textContent](http://jsperf.com/search?q=innerText) — innerText is often dozens of times slower.

In [this blog post](http://www.kellegous.com/j/2013/02/27/innertext-vs-textcontent/), Kelly Norton is talking about innerText being up to 300x slower (although that seems like a particularly rare case) and advises against using it entirely.

Knowing the underlying concepts of both properties, this shouldn't come as a surprise. After all, innerText requires knowledge of layout and [anything that touches layout is expensive](http://gent.ilcore.com/2011/03/how-not-to-trigger-layout-in-webkit.html).

So for all intents and purposes, innerText is significantly slower than textContent. And if all you need is to retrieve a text of an element without any kind of style awareness, you should — by all means — use textContent instead. However, this style awareness of innerText is exactly what we need when retrieving text "as presented"; and that comes with a price.

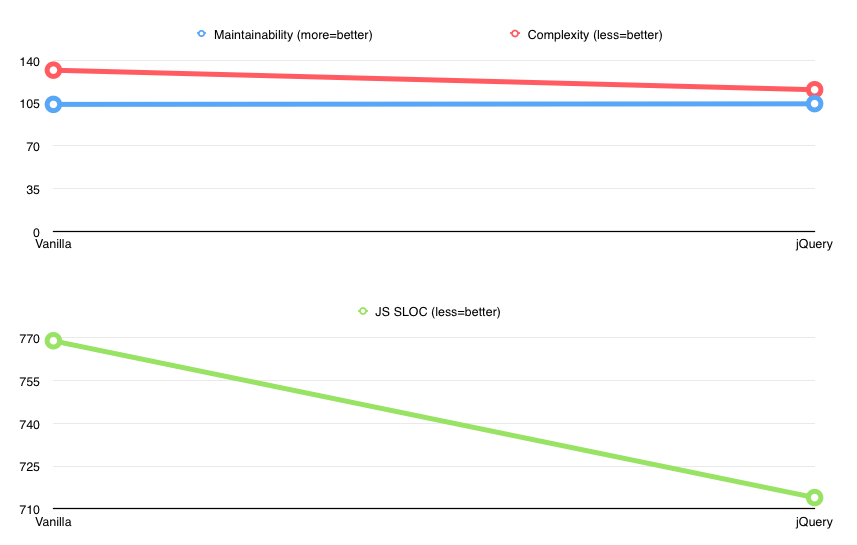

What about jQuery?

You're probably familiar with jQuery's text() method. But how exactly does it work and what does it use — textContent || innerText combo or something else? Turns out, jQuery [takes a safe route](https://github.com/jquery/jquery/blob/7602dc708dc6d9d0ae9982aadb9fa4615a9c49fa/external/sizzle/dist/sizzle.js#L942-L971) — it either returns textContent (if available), or manually does what textContent is supposed to do — iterates over all children and concatenates their nodeValue's. Apparently, at one point jQuery **did** use innerText, but then [ran into good old whitespace differences](http://bugs.jquery.com/ticket/11153) and decided to ditch it altogether.

So if we wanted to use jQuery to get real text representation (à la innerText), we can't use jQuery's text() since it's basically a cross-browser textContent. We would need to roll our own solution.
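To make the "safe route" concrete, here is a simplified sketch of that approach. It is not jQuery's actual code (see the link above for that); the function name `getText` is made up, and it walks `childNodes` to keep the example short.

```javascript
function getText(node) {
  var type = node.nodeType;

  // Element, document, or document fragment.
  if (type === 1 || type === 9 || type === 11) {
    // Use the standard property when the environment provides it.
    if (typeof node.textContent === 'string') {
      return node.textContent;
    }
    // Otherwise do what textContent is supposed to do: concatenate the
    // text of all children, recursively.
    var text = '';
    for (var i = 0; i < node.childNodes.length; i++) {
      text += getText(node.childNodes[i]);
    }
    return text;
  }

  // Text node or CDATA section.
  if (type === 3 || type === 4) {
    return node.nodeValue;
  }

  // Comments, processing instructions, etc. contribute nothing.
  return '';
}
```

Nothing in here looks at styles or layout, so line breaks from <br> or block elements never show up in the result.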

Standardization attempts

Hopefully by now I've convinced you that innerText is pretty damn useful; we went over the underlying concept, browser differences, performance implications and saw how even an all-mighty jQuery is of no help.

You would think that by now this property is standardized or at least making its way into the standard.

Well, not so fast.

Back in 2010, Adam Barth (of Google) [proposes to spec innerText](http://lists.w3.org/Archives/Public/public-whatwg-archive/2010Aug/0455.html) on the WHATWG mailing list. Funny enough, all Adam wants is to set pure text (not markup!) of an element in a secure way. He also doesn't know about textContent, which would certainly be a preferred (standard) way of doing that. Fortunately, Mike Wilcox, whose blog post I mentioned earlier, chimes in with:

In addition to Adam's comments, there is no standard, stable way of *getting* the text from a series of nodes. textContent returns everything, including tabs, white space, and even script content. [...] innerText is one of those things IE got right, just like innerHTML. Let's please consider making that a standard instead of removing it.

In the same thread, Robert O'Callahan (of Mozilla) [doubts usefulness of innerText](http://lists.w3.org/Archives/Public/public-whatwg-archive/2010Aug/0477.html) but also adds:

But if Mike Wilcox or others want to make the case that innerText is actually a useful and needed feature, we should hear it. Or if someone from Webkit or Opera wants to explain why they added it, that would be useful too.

Ian Hixie is open to adding it to a spec if it's needed for web compatibility. While Rob O'Callahan considers this a redundant feature, Maciej Stachowiak (of WebKit/Apple) hits the nail on the head with [this fantastic reply](http://lists.w3.org/Archives/Public/public-whatwg-archive/2010Aug/0480.html):

Is it a genuinely useful feature? Yes, the ability to get plaintext content as rendered is a useful feature and annoying to implement from scratch. To give one very marginal data point, it's used by our regression text framework to output the plaintext version of a page, for tests where layout is irrelevant. A more hypothetical use would be a rich text editor that has a "convert to plaintext" feature. textContent is not as useful for these use cases, since it doesn't handle line breaks and unrendered whitespace properly.

[...]

These factors would tend to weigh against removing it.

To which Rob gives a reasonable reply:

There are lots of ways people might want to do that. For example, "convert to plaintext" features often introduce characters for list bullets (e.g. '*') and item numbers. (E.g., Mac TextEdit does.) Safari 5 doesn't do either. [...] Satisfying more than a small number of potential users with a single attribute may be difficult.

And the conversation dies out.

Is innerText really useful?

As Rob points out, "convert to plaintext" could certainly be an ambiguous task. In fact, we can easily create a test markup that looks nothing like its "plain text" version:

See the Pen emXMKZ by Juriy Zaytsev (@kangax) on CodePen.

Notice that "opacity: 0" elements are not displayed, yet they are part of innerText. Ditto with the infamous "text-indent: -999px" hiding technique. The bullets from the list are not accounted for and neither is dynamically generated content (via the ::after pseudo-element). Paragraphs only create 1 newline, even though in reality they could have gigantic margins.

But I think that's OK.

If you think of innerText as text copied from the page, most of these "artifacts" make perfect sense. Just because a chunk of text is given "opacity: 0" doesn't mean that it shouldn't be part of output. It's a purely presentational concern, just like bullets, space between paragraphs or indented text. What matters is **structural preservation** — block-styled elements should create newlines, inline ones should be inline.

One iffy aspect is probably "text-transform". Should capitalized or uppercased text be preserved? WebKit/Blink think it should; Internet Explorer doesn't. Is it part of a text itself or merely styling?

Another one is "visibility: hidden". Similar to "opacity: 0" (and unlike "display: none"), a text is still part of the flow, it just can't be seen. Common sense would suggest that it should still be part of the output. And while Internet Explorer does just that, WebKit/Blink disagrees (also being curiously inconsistent with their "opacity: 0" behavior).

Elements that aren't known to a browser pose an additional problem. For example, WebKit/Blink recently started supporting <template> element. That element is not displayed, and so it is not part of innerText. To Internet Explorer, however, it's nothing but an unknown inline element, and of course it outputs its contents.

Standardization, take 2

In 2011, another innerText proposal [is posted to WHATWG mailing list](http://lists.w3.org/Archives/Public/public-html/2011Jul/0133.html), this time by Aryeh Gregor. Aryeh proposes to either:

1. Drop innerText entirely

2. Spec innerText to be like textContent

3. Actually spec innerText according to whatever IE/WebKit are doing

The problem with (3) is that it would be very hard to spec; it would be even harder to spec in a way that all browsers can implement; and any spec would probably have to be quite incompatible anyway with the existing implementations that follow the general approach. [...]

Indeed, as we've seen from the tests, compatibility proves to be a serious issue. If we were to standardize innerText, which of the 2 behaviors should we put in a spec?

Another problem is reliance on Selection.toString() (as expressed by Boris Zbarsky):

It's not clear whether the latter is in fact an option; that depends on how Selection.toString gets specified and whether UAs are willing to do the same for innerText as they do for Selection.toString....

So far the only proposal I've seen for Selection.toString is "do what the copy operation does", which is neither well-defined nor acceptable for innerText. In my opinion.

In the end, we're left with [this WHATWG ticket by Aryeh](https://www.w3.org/Bugs/Public/show_bug.cgi?id=13145) on specifying innerText. Things look rather grim, as evidenced in one of the comments:

I've been told in no uncertain terms that it's not practical for non-Gecko browsers to remove. Depending on the rendering tree to the extent WebKit does, on the other hand, is insanely complicated to spec in terms of standard stuff like DOM and CSS. Also, it potentially breaks for detached nodes (WebKit behaves totally differently in that case). [...] But Gecko people seemed pretty unhappy about this kind of complexity and rendering dependence in a DOM property. And on the other hand, I got the impression WebKit is reluctant to rewrite their innerText implementation at all. So I'm figuring that the spec that will be implemented by the most browsers possible is one that's as simple as possible, basically just a compat shim. If multiple implementers actually want to implement something like the innerText spec I started writing, I'd be happy to resume work on it, but that wasn't my impression.

We can't remove it, can't change it, can't spec it to depend on rendering, and spec'ing it would be quite difficult :)

Light at the end of a tunnel?

Could there still be some hope for innerText or will it forever stay an unspecified legacy with 2 different implementations?

My hope is that the test suite and compatibility table are the first step in making things better. We need to know exactly how engines differ, and we need to have a good understanding of what to include in a spec. I'm sure this doesn't cover all cases, but it's a start (other aspects worth exploring: shadow DOM, detached nodes).

I think this test suite should be enough to write a 90%-complete spec of innerText. The biggest issue is deciding which behavior to choose between IE and WebKit/Blink.

The plan could be:

1. Write a spec

2. Try to converge IE and WebKit/Blink behavior

3. Implement spec'd behavior in Firefox

Seeing [how amazing Microsoft has been](https://status.modern.ie/) recently, I really hope we can make this happen.

The naive spec

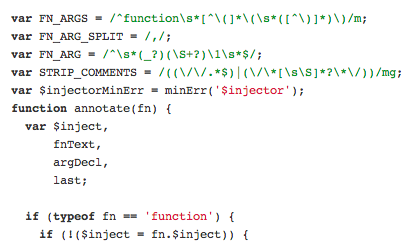

I took a stab at a relatively simple version of innerText:

Couple important tasks here:

1. Checking if a text node is within "formatted" context (i.e. a child of "white-space: pre-*" node), in which case its contents should be concatenated as is; otherwise collapse all whitespaces to 1.

2. Checking if a node is block-styled ("block", "list-item", "table", etc.), in which case it has to be surrounded by newlines; otherwise, it's inline and its contents are output as is.

Then there's things like ignoring <script>, <style>, etc. nodes and inserting tab ("\t") between <td> elements (to follow WebKit/Blink lead).

This is still a very minimal and naive implementation. For one, it doesn't collapse newlines between block elements — a quite important aspect. In order to do that, we need to keep track of more state — to know information about previous node's style. It also doesn't normalize whitespace in "true" manner — a text node with leading and trailing spaces, for example, should have those spaces stripped if it is (the only node?) in a block element.

This needs more work, but it's a decent start.
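The two checks and the special cases described above can be sketched in JavaScript roughly as follows. This is an illustration written for this section, not the original naive spec; `naiveInnerText`, `IGNORED_ELEMENTS`, `BLOCK_DISPLAY` and the `getStyle` callback are all hypothetical names, and the sketch shares the limitations already mentioned (no newline collapsing between blocks, no "true" whitespace normalization).

```javascript
// Elements whose contents are skipped entirely (illustrative, not exhaustive).
var IGNORED_ELEMENTS = { SCRIPT: true, STYLE: true };

// "display" values treated as block-styled (illustrative, not exhaustive).
var BLOCK_DISPLAY = /^(block|list-item|table)$/;

// getStyle: caller-supplied function returning an object with "display" and
// "whiteSpace" for an element (in a browser, a getComputedStyle wrapper).
// isFormatted: whether the node sits in a "white-space: pre*" context.
function naiveInnerText(node, getStyle, isFormatted) {
  if (node.nodeType === 3) {
    // Text node: output as is in formatted context, otherwise collapse
    // every run of whitespace into a single space.
    return isFormatted ? node.nodeValue : node.nodeValue.replace(/\s+/g, ' ');
  }
  if (node.nodeType !== 1 || IGNORED_ELEMENTS[node.nodeName]) {
    return '';
  }

  var style = getStyle(node);
  var childrenFormatted = /^pre/.test(style.whiteSpace);
  var text = '';
  var seenCell = false;

  for (var i = 0; i < node.childNodes.length; i++) {
    var child = node.childNodes[i];
    if (child.nodeName === 'TD') {
      // Tab between table cells, following the WebKit/Blink lead.
      if (seenCell) text += '\t';
      seenCell = true;
    }
    text += naiveInnerText(child, getStyle, childrenFormatted);
  }

  // Block-styled elements are surrounded by newlines; inline ones are not.
  return BLOCK_DISPLAY.test(style.display) ? '\n' + text + '\n' : text;
}
```

In a browser, `getStyle` could simply be `function (el) { return getComputedStyle(el); }`, with `false` passed as the initial `isFormatted` value.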

It would also be a good idea to write an innerText implementation in Javascript, with unit tests for each "feature" in the compat table. Perhaps even supporting 2 modes — IE and WebKit/Blink. An implementation like this could then be simply integrated into non-supporting engines (or used as a proper polyfill).

I'd love to hear your thoughts, ideas, experiences, criticism. I hope (with all of your help) we can make some improvement in this direction. And even if nothing changes, at least some light was shed on this very misunderstood ancient feature.

Update: half a year later

It's been half a year since I wrote this post and a few things have changed for the better! First of all, [Robert O'Callahan](http://robert.ocallahan.org/) of Mozilla made some awesome effort — he decided to [spec out the innerText](https://github.com/rocallahan/innerText-spec) and then implemented it in Firefox. The idea was to create something simple but sensible. The proposed spec — only after about 11 years — is now [implemented in Firefox 45](https://bugzilla.mozilla.org/show_bug.cgi?id=264412) :) I've added FF45 results to [a compat table](http://kangax.github.io/jstests/innerText/) and aside from a couple of differences, FF is pretty close to Chrome's implementation. I'm also planning to add more tests to find any other differences among Chrome, FF, and Edge. The spec already revealed a few bugs in Chrome, which I'm hoping to file tickets for and see resolved. If we can then also get Edge to converge, we'll be very close to having all 3 biggest browsers behave similarly, making `innerText` a viable feature in the near future.

Know thy reference 10 Dec 2014 3:00 PM (11 years ago)

Know thy reference

Abusing leaky abstractions for a better understanding of “this”

It was a sunny Monday morning that I woke up to an article on HackerNews, simply named “This in Javascript”. Curious to see what all the attention is about, I started skimming through. As expected, there were mentions of this in global scope, this in function calls, this in constructor instantiation, and so on. It was a long article. And the more I looked through, the more I realized just how overwhelming this topic might seem to folks unfamiliar with intricacies of this, especially when thrown into a myriad of various examples with seemingly random behavior.

It made me remember a moment from a few years ago when I first read Crockford’s Good Parts. In it, Douglas succinctly laid out a piece of information that immediately made everything much clearer in my head:

The `this` parameter is very important in object oriented programming, and its value is determined by the invocation pattern. There are four patterns of invocation in JavaScript: the method invocation pattern, the function invocation pattern, the constructor invocation pattern, and the apply invocation pattern. The patterns differ in how the bonus parameter this is initialized.

Determined by invocation and only 4 cases? Well, that’s certainly pretty simple.

With this thought in mind, I went back to HackerNews, wondering if anyone else thought the subject was presented as something way too complicated. I wasn’t the only one. Lots of folks chimed in with explanations similar to the one from Good Parts, like this one:

Even more simply, I'd just say:

1) The keyword "this" refers to whatever is left of the dot at call-time.

2) If there's nothing to the left of the dot, then "this" is the root scope (e.g. Window).

3) A few functions change the behavior of "this"—bind, call and apply

4) The keyword "new" binds this to the object just created

Great and simple breakdown. But one point caught my attention — “whatever is left of the dot at call-time”. Seems pretty self-explanatory. For foo.bar(), this would refer to foo; for foo.bar.baz(), this would refer to foo.bar, and so on. But what about something like (f = foo.bar)()? After all, it seems that “whatever is left of the dot at call time” is foo.bar. Would that make this refer to foo?

Eager to save the world from unusual results in obscure cases, I rushed to leave a prompt comment on how the concept of “left of the dot” could be hairy. That for best results, one should understand the concept of references, and their base values.

It is then that I shockingly realized that this concept of references actually hasn’t been covered all that much! In fact, searching for “javascript reference” yielded anything from cheatsheets to “pass-by-reference vs. pass-by-value” discussions, and not at all what I wanted. It had to be fixed.

And so this brings me here.

I’ll try to explain what these mysterious References are in Javascript (by which, of course, I mean ECMAScript) and how fun it is to learn this behavior through them. Once you understand References, you’ll also notice that reading ECMAScript spec is much easier.

But before we continue, quick disclaimer on the excerpt from Good Parts.

Good Parts 2.0

The book was written in the times when ES3 roamed the prairies, and now we’re in a full state of ES5.

What changed? Not much.

There are 2 additions, or rather sub-points, to the list of 4:

- method invocation

- function invocation

- “use strict” mode (new in ES5)

- constructor invocation

- apply invocation

- Function.prototype.bind (new in ES5)

Function invocation that happens in strict mode now has its this value set to undefined. Actually, it would be more correct to say that it does NOT have its this “coerced” to the global object. That’s what was happening in ES3 and what happens in ES5-non-strict. Strict mode simply avoids that extra step, letting undefined propagate through.
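Here’s a small sketch of that difference. The names `sloppy` and `strict` are made up for illustration, and the non-strict function is built with the Function constructor only so that it stays non-strict wherever the snippet is pasted:

```javascript
// Functions created with the Function constructor are non-strict
// unless their own body opts into strict mode.
var sloppy = new Function('return this');

function strict() {
  'use strict';
  return this;
}

// Plain function invocation in both cases: nothing is "left of the dot".
var sloppyThis = sloppy(); // undefined is coerced to the global object
var strictThis = strict(); // undefined propagates through untouched
```

In a browser `sloppyThis` would be `window`; `strictThis` is simply `undefined`.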

And then there’s good old Function.prototype.bind which is hard to even call an addition. It’s essentially call/apply wrapped in a function, permanently binding this value to whatever was passed to bind(). It’s in the same bracket as call and apply, except for its “static” nature.
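A quick sketch of that “static” nature, using made-up objects `foo` and `other` and a hypothetical `whoAmI` function that just returns its this:

```javascript
function whoAmI() {
  'use strict';
  return this;
}

var foo = { name: 'foo' };
var other = { name: 'other' };

// call sets `this` for one invocation only.
var viaCall = whoAmI.call(foo);

// bind returns a new function whose `this` is fixed to foo.
var bound = whoAmI.bind(foo);
var viaBound = bound();

// Neither call() nor method-style invocation can rebind it afterwards.
var viaBoundCall = bound.call(other);
other.method = bound;
var viaBoundMethod = other.method();
```

All four results are `foo`, even the last two, where a plain function would have received `other`.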

Alright, on to the References.

Reference Specification Type

To be honest, I wasn’t that surprised to find very little information on References in Javascript. After all, it’s not part of the language per se. References are only a mechanism, used to describe certain behaviors in ECMAScript. They’re not really “visible” to the outside world. They are vital for engine implementors, and users of the language don’t need to know about them.

Except when understanding them brings a whole new level of clarity.

Coming back to my original “obscure” example:
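As a minimal sketch, assume an illustrative `foo` whose `bar` method is a strict-mode function that just returns its this:

```javascript
var foo = {
  bar: function () {
    'use strict';
    return this;
  }
};
var f;

var first = foo.bar();        // `this` is foo
var second = (f = foo.bar)(); // `this` is undefined (global object in non-strict code)
```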

How do we know that 1st one’s this references foo, but 2nd one — global object (or undefined)?

Astute readers will rightfully notice — “well, the expression to the left of () evaluates to f, right after assignment; and so it’s the same as calling f(), making this function invocation rather than method invocation.”

Alright, and what about this:
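Say, a call through the comma operator (again a sketch, with an illustrative `foo` whose strict-mode `bar` simply returns its this):

```javascript
var foo = {
  bar: function () {
    'use strict';
    return this;
  }
};

// What does `this` refer to inside bar here?
var result = (1, foo.bar)();
```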

“Oh, that’s just grouping operator! It evaluates from left to right so it must be the same as foo.bar(), making this reference foo”
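Running `(1, foo.bar)()` says otherwise. A sketch, assuming `bar` is a strict-mode method that returns its this:

```javascript
var foo = {
  bar: function () {
    'use strict';
    return this;
  }
};

var result = (1, foo.bar)();

var isFoo = result === foo;             // false
var isUndefined = result === undefined; // true (it would be the global object in non-strict code)
```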

“Strange”

And how about this:
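This time with nothing but parentheses around the property access (same kind of illustrative `foo`, with a strict-mode `bar` that returns its this):

```javascript
var foo = {
  bar: function () {
    'use strict';
    return this;
  }
};

// What does `this` refer to inside bar here?
var result = (foo.bar)();
```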

“Well… considering last example, it must be undefined as well then? There must be something about those parentheses”
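Yet running `(foo.bar)()` gives foo back. A sketch, assuming `bar` is a strict-mode method that returns its this:

```javascript
var foo = {
  bar: function () {
    'use strict';
    return this;
  }
};

var result = (foo.bar)();

var isFoo = result === foo;             // true
var isUndefined = result === undefined; // false
```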

“Ok, I’m confused”

Theory

ECMAScript defines Reference as a “resolved name binding”. It’s an abstract entity that consists of three components — base, name, and strict flag. The first 2 are what’s important for us at the moment.

There are 2 cases when Reference is created: in the process of Identifier resolution and during property access. In other words, foo creates a Reference and foo.bar (or foo['bar']) creates a Reference. Neither literals — 1, "foo", /x/, { }, [ 1,2,3 ], etc., nor function expressions — (function(){}) — create references.

Here’s a simple cheat sheet:

Cheat sheet

| Example | Reference? | Notes |
|---|---|---|
| `"foo"` | No | |
| `123` | No | |
| `/x/` | No | |
| `({})` | No | |
| `(function(){})` | No | |
| `foo` | Yes | Could be unresolved reference if `foo` is not defined |
| `foo.bar` | Yes | Property reference |
| `(123).toString` | Yes | Property reference |
| `(function(){}).toString` | Yes | Property reference |
| `(1,foo.bar)` | No | Already evaluated, BUT see grouping operator exception |
| `(f = foo.bar)` | No | Already evaluated, BUT see grouping operator exception |
| `(foo)` | Yes | Grouping operator does not evaluate reference |
| `(foo.bar)` | Yes | Ditto with property reference |

Don’t worry about the last 4 for now; we’ll take a look at those shortly.

Every time a Reference is created, its components — “base”, “name”, “strict” — are set to some values. The strict flag is easy — it’s there to denote if code is in strict mode or not. The “name” component is set to identifier or property name that’s being resolved, and the base is set to either property object or environment record.

It might help to think of References as plain JS objects with a null [[Prototype]] (i.e. with no “prototype chain”), containing only “base”, “name”, and “strict” properties; this is how we can illustrate them below:

When Identifier foo is resolved, a Reference is created like so:
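Sketched as a plain object, that is. `lexicalEnvironment` is a made-up stand-in, since real Environment Records can’t be reached from code:

```javascript
// Hypothetical stand-in for the Environment Record in which `foo` is declared.
var lexicalEnvironment = { foo: {} };

// A prototype-less object holding only the three components.
var identifierReference = Object.create(null);
identifierReference.base = lexicalEnvironment;
identifierReference.name = 'foo';
identifierReference.strict = false;
```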

and this is what’s created for property accessor foo.bar:
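Again as an illustrative plain object (the `foo` here is just an example):

```javascript
var foo = { bar: function () {} };

// The base is the object being accessed, the name is the property name.
var propertyReference = Object.create(null);
propertyReference.base = foo;
propertyReference.name = 'bar';
propertyReference.strict = false;
```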

This is a so-called “Property Reference”.

There’s also a 3rd scenario — Unresolvable Reference. When an Identifier can’t be found anywhere in the scope chain, a Reference is returned with base value set to undefined:
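In the same illustrative plain-object form:

```javascript
// Nothing in the scope chain declares `foo`, so there is no base to point at.
var unresolvableReference = Object.create(null);
unresolvableReference.base = undefined;
unresolvableReference.name = 'foo';
unresolvableReference.strict = false;
```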

As you probably know, Unresolvable References could blow up if not “properly used”, resulting in an infamous ReferenceError (“foo is not defined”).

Essentially, References are a simple mechanism of representing name bindings; it’s a way to abstract both object-property resolution and variable resolution into a unified data structure — base + name — whether that base is a regular JS object (as in property access) or an Environment Record (a link in a “scope chain”, as in identifier resolution).

So what’s the use of all this? Now that we know what ECMAScript does under the hood, how does this apply to this behavior, foo() vs. foo.bar() vs. (f = foo.bar)() and all that?

Function call

What do foo(), foo.bar(), and (f = foo.bar)() all have in common? They’re function calls.

If we take a look at what happens when Function Call takes place, we’ll see something very interesting:
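What follows is a loose JavaScript model of the steps ES5 lists for a function call (section 11.2.3), with the step numbers kept in the comments. `makeReference`, `getValue`, `callExpression`, and the `scope` object are illustrative stand-ins of mine, not anything an engine exposes:

```javascript
// Illustrative model: a Reference as a prototype-less object.
function makeReference(base, name, baseIsEnvironmentRecord) {
  var ref = Object.create(null);
  ref.isReference = true;
  ref.base = base;
  ref.name = name;
  ref.baseIsEnvironmentRecord = baseIsEnvironmentRecord;
  return ref;
}

function isReference(v) {
  return v !== null && typeof v === 'object' && v.isReference === true;
}

// Turns a Reference into the value it points at; plain values pass through.
function getValue(v) {
  if (!isReference(v)) return v;
  if (v.base === undefined) throw new ReferenceError(v.name + ' is not defined');
  return v.base[v.name];
}

function callExpression(ref, argList) {
  // Steps 1 and 3 (evaluating the expression before the parentheses and the
  // arguments) are assumed to have produced `ref` and `argList` already.
  var func = getValue(ref);                 // step 2
  if (typeof func !== 'function') {         // steps 4-5
    throw new TypeError('not a function');
  }
  var thisValue;
  if (isReference(ref)) {                   // step 6
    if (!ref.baseIsEnvironmentRecord) {
      thisValue = ref.base;                 // 6.a: property reference
    } else {
      thisValue = undefined;                // 6.b: ImplicitThisValue of the record
    }
  } else {
    thisValue = undefined;                  // step 7: not a Reference at all
  }
  return func.apply(thisValue, argList);    // step 8
}

function whoAmI() { 'use strict'; return this; }
var foo = { bar: whoAmI };
var scope = { foo: foo, whoAmI: whoAmI };   // stand-in for an Environment Record

var viaProperty = callExpression(makeReference(foo, 'bar', false), []);       // foo
var viaIdentifier = callExpression(makeReference(scope, 'whoAmI', true), []); // undefined
var viaValue = callExpression(whoAmI, []);                                    // undefined
```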

Notice highlighted step 6, which basically explains both #1 and #2 from Crockford’s list of 4.

We take expression before (). Is it a property reference? (foo.bar()) Then use its base value as this. And what’s a base value of foo.bar? We already know that it’s foo. Hence foo.bar() is called with this=foo.

Is it NOT a property reference? Ok, then it must be a regular reference with an Environment Record as its base, as in foo(). In that case, use ImplicitThisValue as this (and the ImplicitThisValue of an Environment Record is undefined, the only exception being the object Environment Record that a with statement creates). Hence foo() is called with this=undefined.

Finally, if it’s NOT a reference at all — (function(){})() — use undefined as this value again.

Are you feeling like this right now?

Assignment, comma, and grouping operators

Armed with this knowledge, let’s see if we can explain this behavior of (f = foo.bar)(), (1,foo.bar)(), and (foo.bar)() in terms more robust than “whatever is left of the dot”.

Let’s start with the first one. The expression in question is known as Simple Assignment (=). foo = 1, g = function(){}, and so on. If we look at the steps taken to evaluate Simple Assignment, we’ll see one important detail:

Notice that the expression on the right is passed through internal GetValue() before assignment. GetValue(), in turn, transforms the foo.bar Reference into an actual function object. And of course then we proceed to the usual Function Call with NOT a reference, which results in this=undefined. As you can see, (f = foo.bar)() only looks similar to foo.bar() but is actually “closer” to (function(){})() in the sense that it’s an (evaluated) expression rather than an (untouched) Reference.

The same story happens with comma operator:

(1,foo.bar)() is evaluated as a function object and Function Call with NOT a reference results in this=undefined.

Finally, what about grouping operator? Does it also evaluate its expression?

And here we’re in for a surprise!

Even though it’s so similar to (1,foo.bar)() and (f = foo.bar)(), grouping operator does NOT evaluate its expression. It even says so plain and simple — it may return a reference; no evaluation happens. This is why foo.bar() and (foo.bar)() are absolutely identical, having this set to foo since a Reference is created and passed to a Function call.
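To put the three operators side by side, here is a rough model of what each one hands over to the function call. Everything in it (`makeReference`, `getValue`, the `evaluate…` helpers) is an illustrative stand-in, and the assignment helper skips the actual storing of the value:

```javascript
// Illustrative model: a Reference as a prototype-less object.
function makeReference(base, name) {
  var ref = Object.create(null);
  ref.isReference = true;
  ref.base = base;
  ref.name = name;
  return ref;
}

function isReference(v) {
  return v !== null && typeof v === 'object' && v.isReference === true;
}

function getValue(v) {
  return isReference(v) ? v.base[v.name] : v;
}

// (f = <right>): the right-hand side goes through GetValue().
function evaluateAssignment(right) {
  return getValue(right);
}

// (<left>, <right>): both sides go through GetValue(), the right one is returned.
function evaluateComma(left, right) {
  getValue(left);
  return getValue(right);
}

// (<expr>): the result is handed back untouched, Reference or not.
function evaluateGrouping(expr) {
  return expr;
}

var foo = { bar: function () {} };
var fooBar = makeReference(foo, 'bar');

var afterAssignment = evaluateAssignment(fooBar); // the function object
var afterComma = evaluateComma(1, fooBar);        // the function object
var afterGrouping = evaluateGrouping(fooBar);     // still the Reference, base intact
```

Only `afterGrouping` still carries a base value, which is why only (foo.bar)() can end up with this set to foo.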

Returning References

It’s worth mentioning that ES5 spec technically allows function calls to return a reference. However, this is only reserved for host objects, and none of the built-in (or user-defined) functions do that.

An example of this (non-existent, but permitted) behavior is something like this:
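A sketch, with a hypothetical `getFooBar` helper and an illustrative `foo`. The comments describe the imagined behavior; the code itself does what engines actually do:

```javascript
var foo = {
  bar: function () {
    'use strict';
    return this;
  }
};

// Hypothetical helper, made up for this illustration.
function getFooBar() {
  return foo.bar; // an ordinary function returns a value, never a Reference
}

// If getFooBar() were able to return the foo.bar Reference itself,
// this call would run with `this` set to foo.
var result = getFooBar()();
```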

Of course, the current behavior is that a non-Reference is passed to a Function call, resulting in this=undefined/global object (unless bar was already bound to foo earlier).

typeof operator

Now that we understand References, we can take a look at a few other places for a better understanding. Take, for example, the typeof operator:

Here is that “secret” for why we can pass unresolvable reference to typeof and not have it blow up.
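For instance, with `iDontExist` standing for any identifier that was never declared:

```javascript
// typeof checks for an unresolvable Reference before touching its value,
// so nothing throws here.
var typeOfMissing = typeof iDontExist;

var declared = 42;
var typeOfDeclared = typeof declared;
```

`typeOfMissing` is the string `'undefined'` and `typeOfDeclared` is `'number'`.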

On the other hand, if we were to use unresolvable reference without typeof, as a plain statement somewhere in code:
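A sketch of that, wrapped in try/catch so the rest of the script keeps running (`iDontExist` is, again, just an identifier nobody declared):

```javascript
var caught = null;

try {
  // An expression statement: the unresolvable Reference goes through GetValue().
  iDontExist;
} catch (e) {
  caught = e;
}

var errorName = caught && caught.name; // 'ReferenceError'
```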

Notice how Reference is passed to GetValue() which is then responsible for stopping execution if Reference is an unresolvable one. It all starts to make sense.

delete operator

Finally, what about good old delete operator?

What might have looked like mumbo jumbo is now pretty nice and clear:

- If it’s not a reference, return true (`delete 1`, `delete /x/`)

- If it’s unresolvable reference (`delete iDontExist`)

    - if in strict mode, throw SyntaxError

    - if not in strict mode, return true

- If it’s a property reference, actually try to delete a property (`delete foo.bar`)

- If it’s a reference with Environment Record as base (`delete foo`)

    - if in strict mode, throw SyntaxError

    - if not in strict mode, attempt to delete it (further algorithm follows)
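The cases above can be tried out with a sketch like this one. The identifier cases depend on strictness, so each is compiled separately with the Function constructor (non-strict unless the body opts in); `foo`, `x`, and `iDontExist` are illustrative names:

```javascript
var foo = { bar: 1 };

// Not a reference at all.
var notAReference = delete 1;

// Property reference: the property is actually removed.
var propertyDeleted = delete foo.bar;
var stillThere = 'bar' in foo;

// Unresolvable reference, non-strict code.
var sloppyUnresolvable = new Function('return delete iDontExist;')();

// Reference with an Environment Record as base, non-strict code:
// the deletion is attempted, but `var` bindings are not deletable.
var sloppyVariable = new Function('var x = 1; return delete x;')();

// The same in strict code never even runs: it fails while being parsed.
var strictErrorName = null;
try {
  new Function('"use strict"; var x = 1; return delete x;');
} catch (e) {
  strictErrorName = e.name;
}
```

Here `notAReference`, `propertyDeleted`, and `sloppyUnresolvable` are true, `stillThere` and `sloppyVariable` are false, and `strictErrorName` is `'SyntaxError'`.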

Summary

And that’s a wrap!

Hopefully you now understand the underlying mechanism of References in Javascript; how they’re used in various places and how we can “utilize” them to explain this behavior even in non-trivial constructs.

Note that everything I mentioned in this post was based on ES5, being the current standard and the most implemented one at the moment. ES6 might have some changes, but that’s a story for another day.

If you’re curious to know more — check out section 8.7 of ES5 spec, including internal methods GetValue(), PutValue(), and more.

P.S. Big thanks to Rick Waldron for review and suggestions!

Refactoring single page app 31 Aug 2014 4:00 PM (11 years ago)

Refactoring single page app

A tale of reducing complexity and exploring client-side MVC

Skip straight to TL;DR.

Kitchensink is your usual behemoth app.

I created it a couple of years ago to showcase everything that Fabric.js (a full-blown <canvas> library) is capable of. We’ve already had some demos, illustrating this and that functionality, but kitchensink was meant to be kind of a general sandbox.

You could quickly try things out: add simple shapes or images or SVGs or text; move them around, scale, rotate, delete, group, change colors, opacity; experiment with locking or z-index properties; serialize canvas into image or JSON or SVG; and so on.

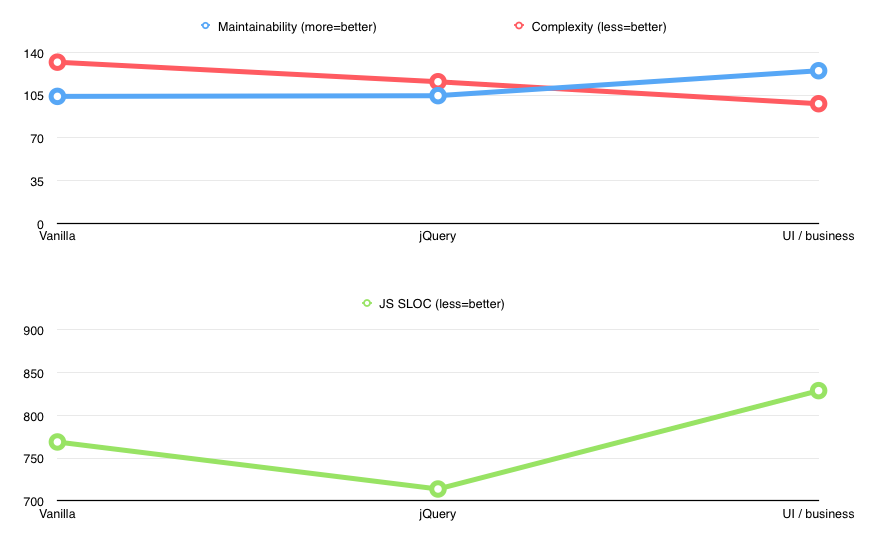

And so there was a good old, single kitchensink.js file (accompanied by kitchensink.html and kitchensink.css) — just a bunch of procedural commands and conditions, really. Pressed that button? Add a rectangle to the canvas. Pressed another one? Load an image. Was object selected on canvas? Enable that button and update its text. You get the idea.