Templafy DevOps setup presentation at DevOpsDays Copenhagen 2023 10 May 2023 4:00 PM (last year)

Last week I did my [first conference talk](https://devopsdays.org/events/2023-copenhagen/program/rasmus-kromann-larsen) in a few years at DevOpsDays Copenhagen 2023. I really enjoyed the conference as well - lots of interesting people and great Open Spaces sessions that served both to connect people and to allow much more in-depth conversations about audience-provided topics.

My talk was a whirlwind tour of the build pipelines we use at Templafy to push our code to production. As part of the presentation I did an actual deployment to production and described what was going on and why we ended up there, along with practical tips on the approaches we took to get to this point.

Original abstract below in case it is removed from the DevOpsDays site at some point.

## The Road to Production: How our build pipelines evolved

All software must go to production to provide value and this road has a lot of different approaches. At Templafy we believe in shipping our code as quickly as possible. Over the last 3 years, we have been evolving our build pipelines to keep up with onboarding many new colleagues while reducing the risk of breakage through more tests and static analysis. Today we ship 10.000 pull requests to production per year with a dynamically scaling fleet of build agents that has more compute (240 cores and nearly 1 TB of RAM) than our actual production environment at peak.

In this talk, we will explore the problems we have faced and the solutions we picked - what worked and what did not work? Along the way there will be practical tips that can be applied at any level of build automation no matter if you are just starting out or already have an advanced setup.

The demos will be based on Azure DevOps but the problems discussed also apply to build services like GitHub Actions and others.

React, Webpack, TypeScript presentation at Vertica 31 May 2017 4:00 PM (7 years ago)

Yesterday at Vertica in collaboration with Aarhus .NET User Group I presented my talk: "ASP.NET without Razor: React, Webpack and TypeScript".

Demos and slides can be found [here](https://github.com/rasmuskl/react-webpack-typescript).

## Abstract

React has been gaining popularity for single page apps but how does it fit into ASP.NET web apps? How can we use it in combination with Visual Studio without turning our regular workflow upside down?

At Templafy we recently migrated our existing Knockout.js frontend to React. As part of this journey, we had to decode all the node.js guides on using React and convert them into a working solution. In the end we settled on the combination of React, Webpack and TypeScript. This talk is a condensed version of our experiences.

In the session we explore what React and Webpack are, how they work and how they differ from the tools we usually use in ASP.NET. We will also have a brief introduction to TypeScript and what benefits it adds. After looking at these technologies individually we will look at how they can work together in an ASP.NET web app. This will also include a closer look at the development workflow with hot reloading and the advantages and disadvantages of the entire setup.

React, Webpack and TypeScript presentation at Microsoft 3 Apr 2017 4:00 PM (8 years ago)

This Friday I gave a [repeat](https://www.meetup.com/Copenhagen-Net-User-Group/events/238615694/) of my "ASP.NET without Razor: React, Webpack and TypeScript" talk at Microsoft in Lyngby in collaboration with Copenhagen .NET User Group.

The event had around 260 attendees and the talk was a bit shorter this time, as I only had an hour (originally the talk was around 2 hours).

It was a two-talk event, with Anders Hejlsberg following my session with a great introduction to TypeScript and where it is headed.

Demos and slides can be found [here](https://github.com/rasmuskl/react-webpack-typescript).

## Abstract

React has been gaining popularity for single page apps but how does it fit into ASP.NET web apps? How can we use it in combination with Visual Studio without turning our regular workflow upside down?

At Templafy we recently migrated our existing Knockout.js frontend to React. As part of this journey, we had to decode all the node.js guides on using React and convert them into a working solution. In the end we settled on the combination of React, Webpack and TypeScript. This talk is a condensed version of our experiences.

In the session we explore what React and Webpack are, how they work and how they differ from the tools we usually use in ASP.NET. We will also have a brief introduction to TypeScript and what benefits it adds. After looking at these technologies individually we will look at how they can work together in an ASP.NET web app. This will also include a closer look at the development workflow with hot reloading and the advantages and disadvantages of the entire setup.

React, Webpack, TypeScript presentation at Templafy 1 Mar 2017 3:00 PM (8 years ago)

Yesterday at Templafy in collaboration with Copenhagen .NET User Group I presented my talk: "ASP.NET without Razor: React, Webpack and TypeScript".

Demos and slides can be found [here](https://github.com/rasmuskl/react-webpack-typescript).

## Abstract

React has been gaining popularity for single page apps but how does it fit into ASP.NET web apps? How can we use it in combination with Visual Studio without turning our regular workflow upside down?

At Templafy we recently migrated our existing Knockout.js frontend to React. As part of this journey, we had to decode all the node.js guides on using React and convert them into a working solution. In the end we settled on the combination of React, Webpack and TypeScript. This talk is a condensed version of our experiences.

In the session we explore what React and Webpack are, how they work and how they differ from the tools we usually use in ASP.NET. We will also have a brief introduction to TypeScript and what benefits it adds. After looking at these technologies individually we will look at how they can work together in an ASP.NET web app. This will also include a closer look at the development workflow with hot reloading and the advantages and disadvantages of the entire setup.

Test Cloud Presentation at Mjølner event 12 Nov 2015 3:00 PM (9 years ago)

Mjølner was kind enough to invite Karl Krukow and me along with our evangelist Mike James to come and talk at their Xamarin seminar.

They have posted a blog post about the event [here](http://mjolner.dk/events/xamarin-videos-how-to-build-and-test-apps-with-xamarin/).

The talk that Karl and I gave was a rehash of our [Evolve 2014 talk](/2015/06/18/xamarin-evolve-presentation/) from last year.

The presentation was recorded and is available on YouTube:

What is Xamarin.UITest? 30 Oct 2015 4:00 PM (9 years ago)

One of the projects I have been working on at Xamarin is leading development on our C# test framework Xamarin.UITest.

I recently wrote a guest post over on the Xamarin blog about some of the background and design that went into creating the framework. The Xamarin blog has been removed so I have moved the post here.

## What is Xamarin.UITest?

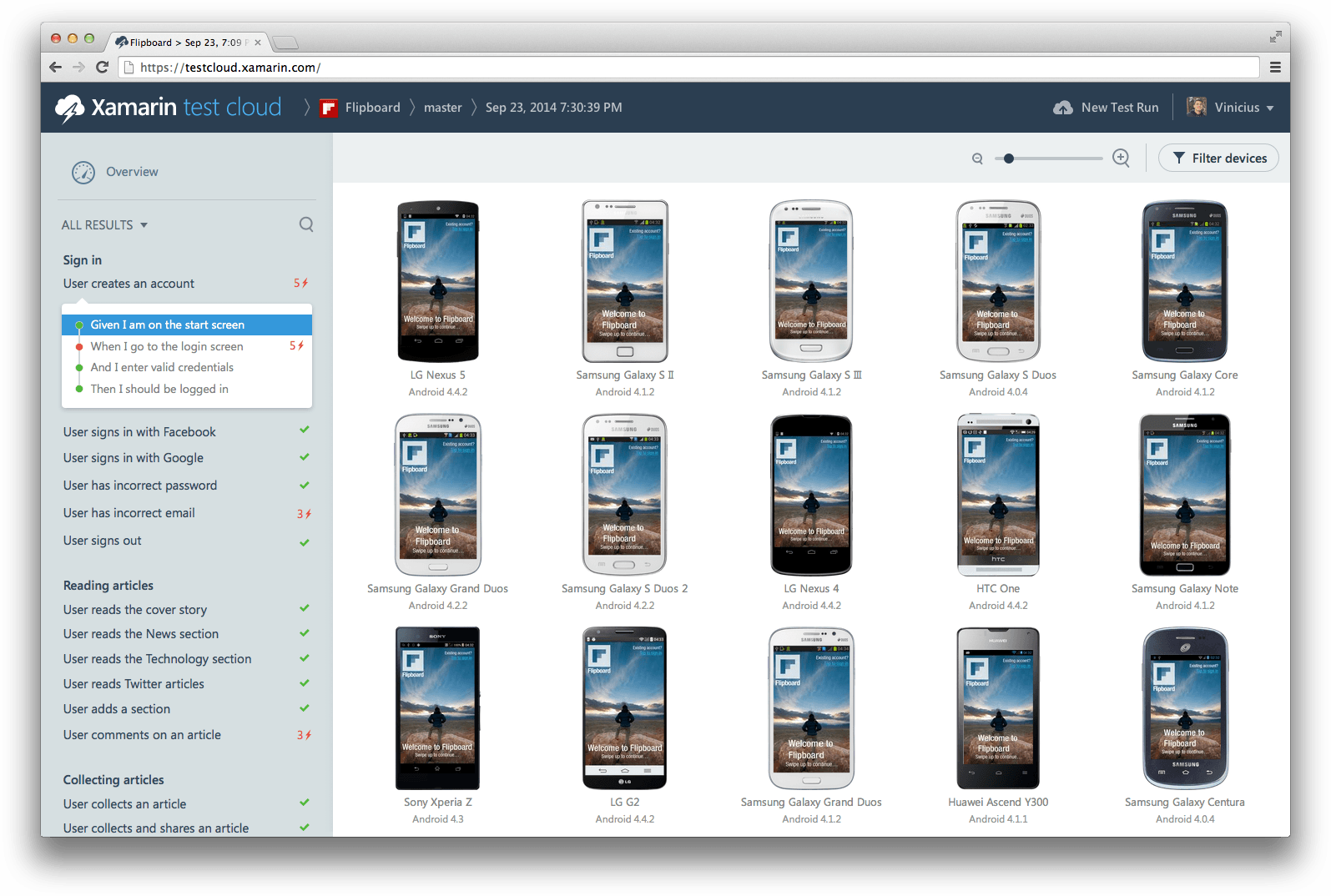

A key component of Xamarin Test Cloud is the development of test scripts to automate mobile UI testing. The Xamarin Test Cloud team started working on Xamarin.UITest in December of 2013 and released a public version at Xamarin Evolve in October, 2014. In this blog post, I'm going to share some thoughts and advice about the framework and our design decisions.

### What is Xamarin.UITest?

Xamarin.UITest is a C# test automation framework that enables testing mobile apps on Android and iOS. Mobile automation is far from an easy endeavor, and Xamarin.UITest aims to provide a suitable abstraction on top of the platform tools to let you focus on what to test. Tests are written locally using either physical devices or simulators/emulators and then submitted to Xamarin Test Cloud for more extensive testing across hundreds of devices.

Xamarin.UITest is available from NuGet, with two restrictions for people who do not use Xamarin Test Cloud: 1) tests can only run on simulators/emulators and 2) the total duration of a single test run cannot exceed 15 minutes.

### A Simple Example

Here is a simple example of a test written with Xamarin.UITest:

```csharp
[Test]
public void MyFirstTest()
{
    var app = ConfigureApp
        .Android
        .ApkFile("MyApkFile.apk")
        .StartApp();

    app.EnterText("NameField", "Rasmus");
    app.Tap("SubmitButton");
}
```

First, we configure the app that we're going to be testing. Then we enter "Rasmus" into a text field and press a button.

The main abstraction in Xamarin.UITest is an app. This is the gateway for communicating with the app running on the device. There is an iOSApp, an AndroidApp, and an IApp interface containing all of the shared functionality between the platforms, allowing cross-platform app tests to be written against the IApp interface.
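As a rough sketch of what a cross-platform test against the `IApp` interface could look like, the fixture below runs the same test once per platform. It assumes NUnit and the Xamarin.UITest NuGet package are referenced; the class name, the file names `MyApkFile.apk` and `MyApp.app`, and the element names are placeholders for illustration only.

```csharp
using NUnit.Framework;
using Xamarin.UITest;

// One fixture instance is created per platform listed here.
[TestFixture(Platform.Android)]
[TestFixture(Platform.iOS)]
public class NameFormTests
{
    private readonly Platform platform;
    private IApp app;

    public NameFormTests(Platform platform)
    {
        this.platform = platform;
    }

    [SetUp]
    public void StartApp()
    {
        // Only the configuration is platform-specific.
        // The file names are placeholders, not real artifacts.
        if (platform == Platform.Android)
        {
            app = ConfigureApp.Android.ApkFile("MyApkFile.apk").StartApp();
        }
        else
        {
            app = ConfigureApp.iOS.AppBundle("MyApp.app").StartApp();
        }
    }

    [Test]
    public void SubmitsName()
    {
        // The test body only touches IApp, so it is shared between platforms.
        app.EnterText("NameField", "Rasmus");
        app.Tap("SubmitButton");
    }
}
```

This only works as written if both apps expose elements that can be matched by the same names; otherwise the queries would need to differ per platform.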

### Design Goals

Xamarin.UITest is built with a few design goals in mind, which help focus our efforts and provide a level of consistency. Some of the goals are inspired by Mogens Heller Grabe and his Rebus project. Goals are only as good as the reasons that back them, so let's take a look at some of the goals for Xamarin.UITest and why we decided that each of them was important.

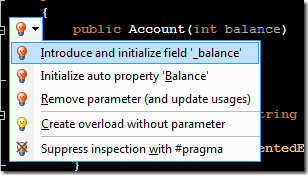

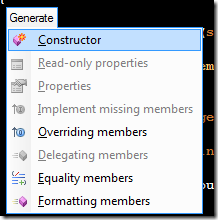

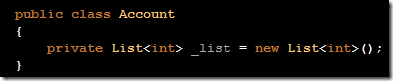

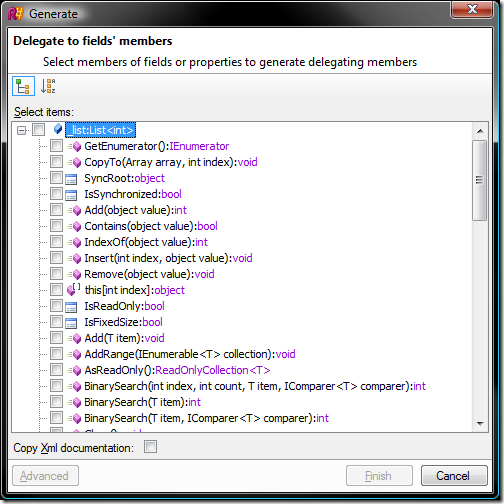

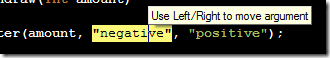

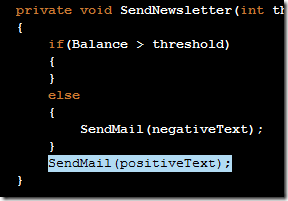

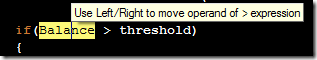

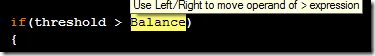

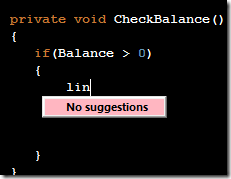

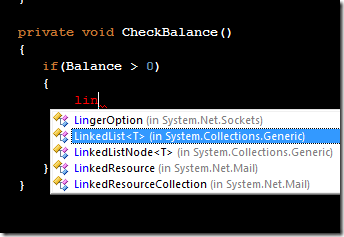

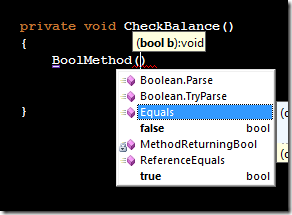

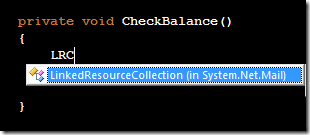

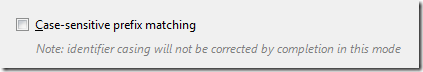

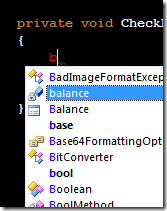

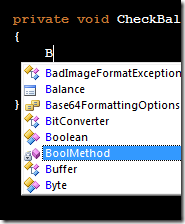

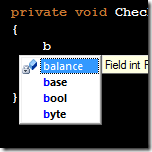

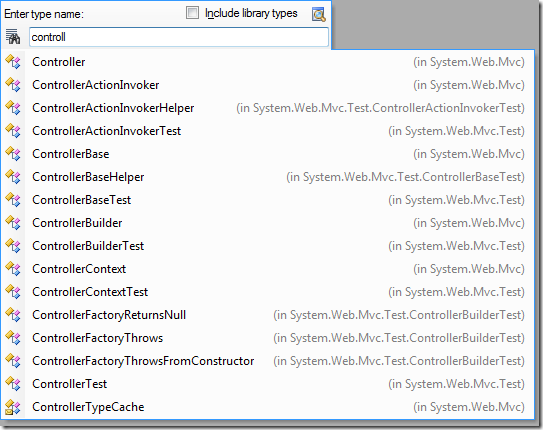

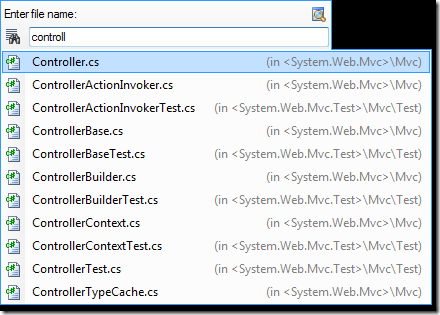

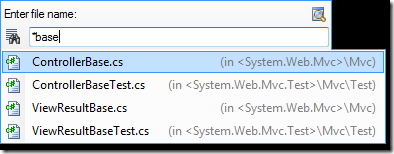

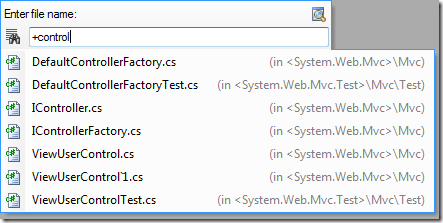

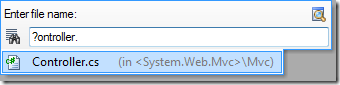

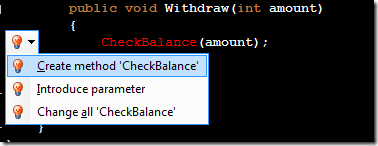

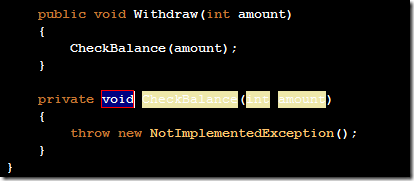

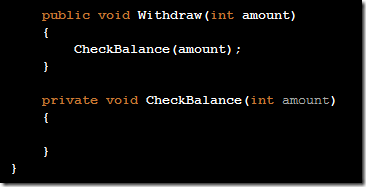

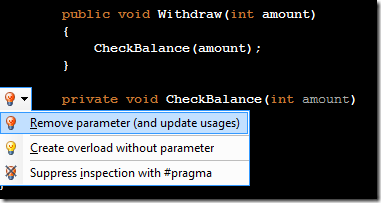

#### Discoverable

Part of the power of C# is amazing tools; for example, we have come to depend heavily on IntelliSense. One goal in designing Xamarin.UITest was to harness these tools and make as much functionality as possible discoverable through IntelliSense. In order to do this, you must minimize the number of entry points that the end user has to know about.

At the time of this writing, the only entry point for writing tests in Xamarin.UITest is the static ConfigureApp fluent interface. Once you have this entry point, everything else in the framework can be discovered through IntelliSense. The only exception is the TestEnvironment static class, which provides a bit of contextual information about the test environment that can be helpful when configuring the app.
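To illustrate the idea of a single discoverable entry point, here is a minimal, self-contained sketch of a fluent configuration API. All type names (`ConfigureDemoApp`, `AndroidDemoConfigurator`, `DemoApp`) are hypothetical and this is not how Xamarin.UITest is implemented; it only shows how each step can return an object whose members IntelliSense will list next.

```csharp
using System;

// Hypothetical names throughout; not Xamarin.UITest's actual code.
public static class ConfigureDemoApp
{
    // The single entry point: typing "ConfigureDemoApp." lists the platforms.
    public static AndroidDemoConfigurator Android => new AndroidDemoConfigurator("");
}

public sealed class AndroidDemoConfigurator
{
    private readonly string apkPath;

    internal AndroidDemoConfigurator(string apkPath)
    {
        this.apkPath = apkPath;
    }

    // Each step returns a configurator, so the next options show up in IntelliSense.
    public AndroidDemoConfigurator ApkFile(string path)
    {
        return new AndroidDemoConfigurator(path);
    }

    // The final step validates the configuration and hands back the app object.
    public DemoApp StartApp()
    {
        if (apkPath.Length == 0)
        {
            throw new InvalidOperationException("Call ApkFile(...) before StartApp().");
        }
        return new DemoApp(apkPath);
    }
}

public sealed class DemoApp
{
    public string ApkPath { get; }

    internal DemoApp(string apkPath)
    {
        ApkPath = apkPath;
    }
}
```

With this shape, a user only needs to remember `ConfigureDemoApp`; the rest of the chain, such as `ConfigureDemoApp.Android.ApkFile("MyApkFile.apk").StartApp()`, can be found by following the completion list.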

#### Declarative

Mobile testing is hard. Platforms and tools are constantly changing and, as a result, the underlying framework often has to adapt. In addition to the rapid pace of change, the test has to perform on a wide range of devices with different sizes and processing power.

We built Xamarin.UITest with this in mind. We strive to provide a succinct interface for describing intent, such as the interactions you want performed or what information you are interested in.

A common issue in testing that is very evident in mobile testing is waiting: you tap a button and have to wait for the screen to change before you can interact with the next control. The easy solution is a Thread.Sleep call that waits just long enough, but how long is “enough”? This leads either to slow tests that wait too long or to brittle tests that push the limits. A better solution is to wait for a change in the app. In Xamarin.UITest, one option is app.WaitForElement, which will continuously poll the app. However, waiting is an artifact of making the test work; the scenario we are actually trying to express is interacting with two controls. Our solution for most gestures is to automatically wait if the element is not already present on the screen. In the best case, this relieves the tester of worrying about details that are not important to the test. The only downside is that a failure will be a bit slower, since the gesture waits for the element before giving up.
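The difference between sleeping for a fixed time and polling for a condition can be sketched with a small helper. This is a generic illustration, not the implementation used by Xamarin.UITest; the `Wait.Until` name and its parameters are made up for this example.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper; not part of Xamarin.UITest.
public static class Wait
{
    // Polls `condition` until it returns true or `timeout` elapses.
    // Returns true if the condition was met, false on timeout.
    public static bool Until(Func<bool> condition, TimeSpan timeout, TimeSpan pollInterval)
    {
        var stopwatch = Stopwatch.StartNew();
        while (true)
        {
            if (condition())
            {
                return true;
            }
            if (stopwatch.Elapsed >= timeout)
            {
                return false;
            }
            // Sleep only for a short interval, then check again.
            Thread.Sleep(pollInterval);
        }
    }
}
```

A fixed `Thread.Sleep` always costs its full duration, whereas a polling wait returns as soon as the condition holds and only pays the full timeout when the condition never becomes true. That matches the trade-off described above: passing tests get faster, failing tests get a bit slower.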

#### No Visible External Dependencies

In recent years, .NET has been greatly enhanced by technology such as NuGet, which allows us to create software that utilizes many other libraries, but it still has a few problems. One of these problems is versioning, and a prime example is depending on a NuGet package that depends on a specific version of a popular package such as Newtonsoft.Json. This then restricts your own choice of Newtonsoft.Json version and could conflict with other NuGet packages you want to use.

For Xamarin.UITest, our aim is to have no visible external dependencies. This doesn't mean that we code everything from the ground up; rather, we take care to not use any types from our dependencies in our public interface, so that we can use ILMerge (or ILRepack in our case) to combine everything into a single assembly with our dependencies internalized. In the case that we need something that is available on our public interface, we could open the framework up and provide a separate integration NuGet package. A nice example of this approach can also be seen in [Rebus](https://github.com/rebus-org/Rebus).

#### Helpful Errors

Errors happen. Mobile testing exercises many components and interacts with quite a few external systems, and there may be prerequisites or other environment settings that are not set up properly. In these cases, we often have no choice but to report the error, so our aim is to provide the best possible information about what went wrong. In addition, if we have any information that might help the user resolve the problem, we attempt to include this information in the error message as well.
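As a small sketch of this idea, the code below separates what went wrong from a hint on how to fix it. The `TestSetupException` and `Prerequisites` types and the message wording are hypothetical and only illustrate the pattern; they are not taken from Xamarin.UITest.

```csharp
using System;
using System.IO;

// Hypothetical exception type that carries both the problem and a hint.
public class TestSetupException : Exception
{
    public TestSetupException(string problem, string hint)
        : base(problem + Environment.NewLine + "Hint: " + hint)
    {
    }
}

public static class Prerequisites
{
    // Hypothetical prerequisite check: fail early, with the full path
    // that was tried and a suggestion for resolving the problem.
    public static void EnsureFileExists(string path)
    {
        if (!File.Exists(path))
        {
            throw new TestSetupException(
                "App file not found: '" + Path.GetFullPath(path) + "'.",
                "Check the path passed to the configuration and that the app has been built.");
        }
    }
}
```

Including the resolved full path in the message saves the user from guessing which working directory a relative path was evaluated against.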

### More Information

For more information, follow the tutorials in our documentation. Karl Krukow and I also did a presentation featuring a general overview of Xamarin Test Cloud, a demo of Xamarin.UITest, and a live stream of one of the Xamarin Test Cloud labs that you can watch [here](https://www.youtube.com/watch?v=PQMBCoVIABI).

Presentation at Xamarin Evolve 2014 17 Jun 2015 4:00 PM (9 years ago)

As part of my work for Xamarin, I was lucky enough to get the chance to present on the main stage at Xamarin Evolve last year with Karl Krukow.

Our talk was about mobile testing with [Xamarin Test Cloud](http://xamarin.com/test-cloud) and my part specifically was about the test framework I have been working on: [Xamarin.UITest](http://developer.xamarin.com/guides/testcloud/uitest/).

The presentation is available on YouTube - my part starts at around 21:30. Also there is our neat demo with live streaming from one of the actual Test Cloud labs at around 57:00.

Env Reboot Diaries - The First Day 29 Sep 2013 4:00 PM (11 years ago)

Today was the first day of my new job. I've always been a Windows user except for when using university computers - and my professional career has mainly consisted of .NET C# development. My new job is in a polyglot environment, the main language is Ruby - but there's also CoffeeScript and Clojure. I'll also be doing it on a MacBook Pro instead of my usual Windows machine. I thought it would be interesting to capture some of my thoughts as I go through learning a new OS and a new development stack.

## The MacBook Pro

I spent most of the day setting up my temporary machine and doing some research for the first feature I'm assisting on.

I was curious about how I'd like the MacBook Pro. I haven't had much luck with Apple products in the past. I've owned both an iPhone and an iPad and ended up selling both, usually due to being annoyed with too few configuration options.

Regarding the MacBook Pro, I think I might survive it. It's a nice piece of hardware, to be sure. I like the crisp display, and the feel of both keyboard and trackpad is very good. The keyboard layout (Danish) will definitely take some getting used to, but I'm hoping it won't be much worse than learning `fn` key combinations on any other new laptop these days.

Likes so far:

* The virtual screens and navigation options are pleasant to work with. I've actually just been working on the MacBook without an external screen today.
* The terminal. Tab completion etc. seems more natural than both `cmd` and PowerShell. Will have to look at terminal replacements though, obviously.
* Stronger package management. I've been using `homebrew` and `homebrew-cask` to install stuff. Installing Spotify with `brew cask install spotify` is a winner in my book. This has improved a bit on the Windows side of things with [Chocolatey](http://chocolatey.org/) though.

Dislikes so far:

* Having to enter my credit card information to install free apps from the App Store.
* Also having to choose 3 security questions to install free apps - with crappy choices.
* I'm not too keen on the dock yet either. But maybe it'll grow on me.

## Text Editor vs IDE

I've been addicted to perfecting IDE use for quite some time. Give me Visual Studio and ReSharper and I'll slice and dice C# code with my hands behind my back. Tools like ReSharper are huge boosters - not just for writing code, but also for molding existing code into new shapes and even more importantly, for navigating, reading and understanding code.

And while I am a huge fan - I've also come to realize that these tools sometimes become a prison. Introducing new technology that depends on some new file type into the Microsoft world more or less requires Visual Studio integration. In my experience, developers will be very reluctant to adopt it (myself included) if it doesn't have IntelliSense, for instance.

So while I've considered starting out with JetBrains' RubyMine, I've decided to try a text editor instead - at least for now. I actually thought I was going to pick Sublime Text, but in the end I decided to give vim a try. I ran through `vimtutor` tonight and plan to do it again tomorrow - and got a basic `.vimrc` config up and running. For now, I'm going to try to keep the number of plugins down - but I have installed [Vundle](https://github.com/gmarik/vundle) for managing plugins, the [solarized](https://github.com/altercation/vim-colors-solarized) theme and [vim-airline](https://github.com/bling/vim-airline) as an improved status bar.

My Git + PowerShell setup for .NET development 22 Sep 2013 4:00 PM (11 years ago)

I've been using git for a couple of years and thought I would document my setup. Git's Linux heritage shows, and while there are not many tools that I use via a shell, using git this way is actually a real breeze. So I've mainly been using it through PowerShell.

## Git

I run the plain [Git for Windows](http://msysgit.github.io/) installation.

My only comment for the installation is that I usually choose the option (not default) to use checkout-as-is, commit-as-is for line endings. I mainly work with .NET projects and prefer to keep my Windows line endings in the repository to avoid any problems.

### .gitattributes

The line endings configuration can give problems in a mixed team - and recently I've been using a `.gitattributes` file in the root of my repositories with the following content:

```
* -text
```

This will instruct git to not mess with any line endings in the repository across the team, regardless of the installation options, which is nice as long as you don't have a mix of platforms.

### .gitignore

I usually build my `.gitignore` file as needed - I always do `git status` before committing, so it's been quite a while since something has slipped by. My minimal `.gitignore` will usually look something like this:

```
bin
obj
*.csproj.user
*.suo
packages
```

Generally I prefer to use NuGet for all possible dependencies and avoid checking the binary files in to keep the overall repository size down.

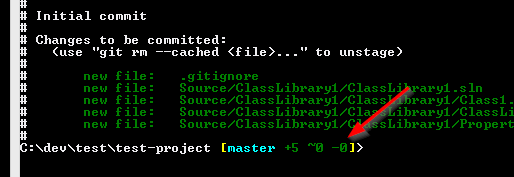

### posh-git

I use posh-git to get a bit of contextual information about my repository and some nice tab completion.

posh-git is rather simple to install by following the instructions in the main [repository](https://github.com/dahlbyk/posh-git).

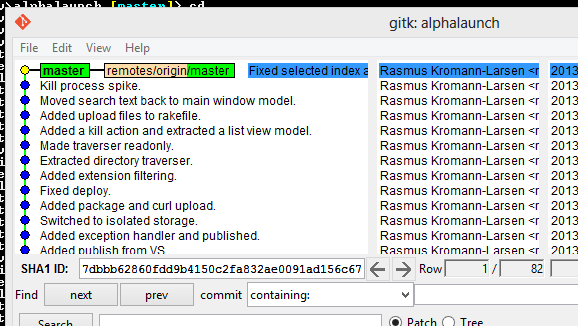

### gitk

Working in a shell environment is fine for many everyday operations, but sometimes a bit of GUI can be nice to get an overview. Git includes `gitk`, which, while a bit basic, usually works just fine. I usually launch it with `gitk --all` to see all branches.

If you want a more advanced GUI for Git, you can either download [SourceTree](http://www.sourcetreeapp.com/) from Atlassian or [GitHub for Windows](http://windows.github.com/).

## PowerShell

My PowerShell setup mainly consists of my profile, which is loaded when PowerShell starts. On my system it's found under:

```

C:\Users\Rasmus\Documents\WindowsPowerShell\Microsoft.PowerShell_profile.ps1

```

You can however access it through PowerShell using the `$PROFILE` variable. So you can easily edit it with:

```

notepad $PROFILE

```

After you've made changes to your profile, you'll have to reload it into the current PowerShell session with:

```

. $PROFILE

```

My full PowerShell profile is available in this [gist](https://gist.github.com/rasmuskl/3786798).

### General purpose aliases

I have two aliases set up that I use often, but are not entirely Git related. First off I have `np`:

```

set-alias -name np -value "C:\Program Files\Sublime Text 3\sublime_text.exe"

```

This is always set up to open my current text editor, whether it's [Sublime Text](http://www.sublimetext.com/) or [Notepad++](http://notepad-plus-plus.org/), and I use it for quick edits.

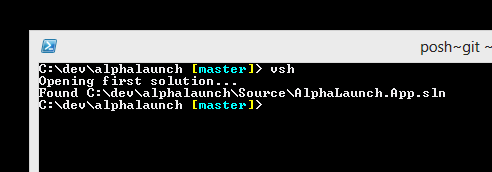

My other alias is `vsh`, which is just short for "Visual Studio here". What it'll do is to search recursively from the current folder and open the first solution it encounters. It'll give you a quick standard way to open your solution from the root of a repository where you generally want your shell most of the time anyway.

```powershell
function vsh() {
    Write-Output "Opening first solution..."
    $sln = (dir -in *.sln -r | Select -first 1)
    Write-Output "Found $($sln.FullName)"
    Invoke-Item $sln.FullName
}
```

### Git aliases

I have two main aliases for interacting with Git, namely `ga` and `gco`.

My alias for adding everything to the staging area is `ga`. For a long time I'd use `git add .` usually and then `git add -A` whenever I also had deletes - but I'm happy with `ga` now. As a bonus it also does a `git status` so I'm forced to review what the heck I'm doing.

``` powershell
function ga() {
    Write-Output "Staging all changes..."
    git add -A
    git status
}
```

After staging files I have to commit, obviously. I got a bit annoyed with typing `git commit -m "blah"` all the time and came up with `gco`. Besides being shorter, it has 2 little twists:

- If you add `-a` or `-amend` it'll do a `git commit --amend` for overwriting the last commit. Useful for fixing typos or unsaved files that didn't make it into the commit.

- Under most circumstances you can leave out the surrounding quotes and it'll work just fine. So you can write `gco message` instead of `gco "message"`. If you're using special chars like apostrophes in your messages however, you still have to add the quotes.

``` powershell
function gco() {
    param([switch]$amend, [switch]$a)

    $argstr = $args -join ' '
    $message = '"', $argstr, '"' -join ''

    if ($amend -or $a) {
        Write-Output "Amending previous commit with message: $message"
        git commit -m $message --amend
    } else {
        Write-Output "Committing with message: $args"
        git commit -m $message
    }
}
```

I also have a `gca` alias, which is basically `gco -a` - but I don't use it often. You can grab it from the [profile gist](https://gist.github.com/rasmuskl/3786798).
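If you spend time in Git Bash rather than PowerShell, roughly the same helpers can be sketched as shell functions. This is not part of my profile, just an approximate port under the assumption that you want the same `ga` / `gco` names and the same `-a` / `-amend` switch.

```shell
# Stage everything (including deletes) and show the result for review.
ga() {
  echo "Staging all changes..."
  git add -A
  git status
}

# Commit with all remaining arguments joined into one message.
# A leading -a or -amend rewrites the previous commit instead.
gco() {
  local amend=""
  if [ "${1:-}" = "-a" ] || [ "${1:-}" = "-amend" ]; then
    amend="yes"
    shift
  fi

  local message="$*"
  if [ -n "$amend" ]; then
    echo "Amending previous commit with message: $message"
    git commit --amend -m "$message"
  else
    echo "Committing with message: $message"
    git commit -m "$message"
  fi
}
```

Usage is the same as in PowerShell: `gco fix the build` commits with that message, and `gco -a fix the build properly` amends the last commit. The shell still interprets apostrophes and other special characters, so those messages need quotes here too.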

Joining Xamarin 13 Aug 2013 4:00 PM (11 years ago)

After some 3 years working as an independent consultant I'm excited to announce that I'm joining [Xamarin](http://www.xamarin.com) in October. Working as a consultant has brought me many interesting experiences and may do so again some day, but for some time I've been looking for a company with the right profile to join. I've mainly been looking for a highly skilled team building exciting stuff without too much corporate overhead, with a great vision, where I could really make an impact. Xamarin seems to fit the bill perfectly.

## Xamarin Test Cloud

More specifically, I will be joining the Xamarin team in Århus responsible for the [Xamarin Test Cloud](http://xamarin.com/test-cloud) - a cloud platform for BDD-style UI automation testing of Android and iOS apps on actual physical devices without having to deal with the devices yourself. The mobile device market has crazy fragmentation due to the number of OS versions, screen sizes, customizations and just the sheer number of different models. [Nat Friedman](http://www.nat.org/) (Xamarin CEO) gave a nice overview of the problem in the Xamarin Evolve 2013 keynote this year ([video: The State of Mobile Testing](http://xamarin.com/evolve/2013#keynote-72:12)) and also proceeded to give an overview of the Xamarin Test Cloud ([video: Xamarin Test Cloud](http://xamarin.com/evolve/2013#keynote-80:44)).

## New challenges

First of all since I'm joining the Xamarin team in Århus and I live in Copenhagen, I will be spending quite a bit more time riding trains back and forth. It's important to be a part of the team and I've also planned to read up on tips for optimizing remote work - [Scott Hanselman](http://www.hanselman.com/) comes to mind, especially his tips on [video portals](http://www.hanselman.com/blog/VirtualCamaraderieAPersistentVideoPortalForTheRemoteWorker.aspx).

I'm currently investigating possible office spaces in Copenhagen for my remote work - suggestions are very welcome.

Besides working remotely my new main programming environment will no longer be .NET and C# in Visual Studio, but rather Ruby in some yet undecided editor on a Mac. It's always refreshing to try something new! But while I'll be writing Ruby, Xamarin does have a heavy investment in C#, so I'm sure my C# knowledge will come in handy anyway.

Exciting times.

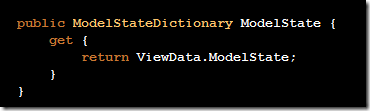

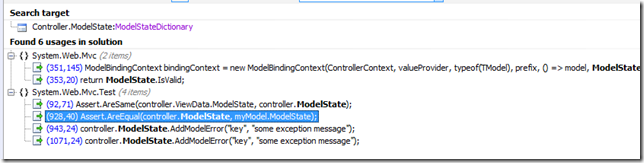

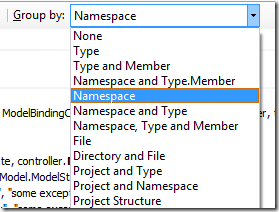

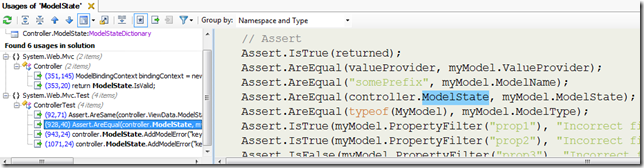

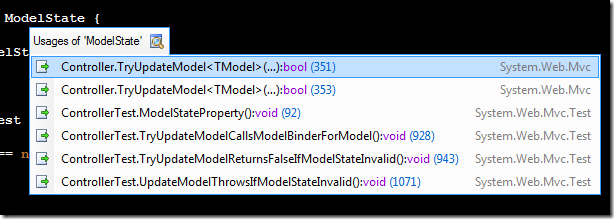

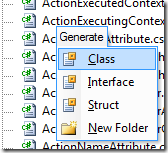

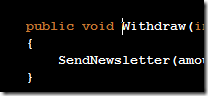

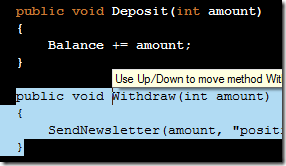

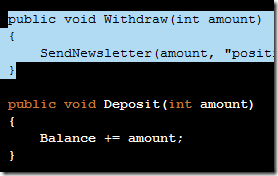

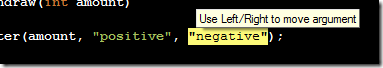

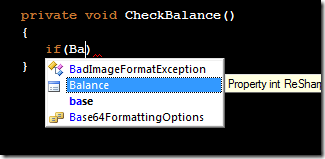

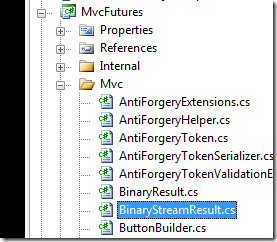

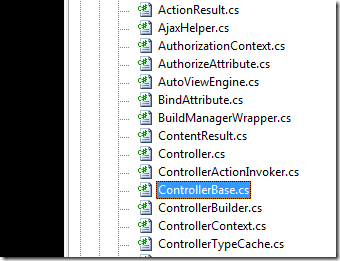

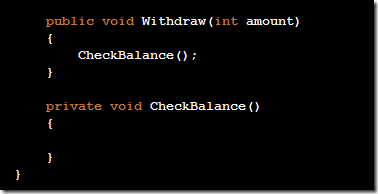

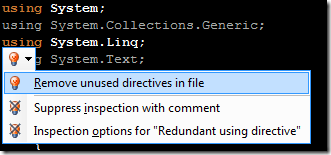

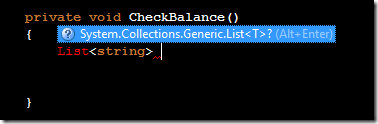

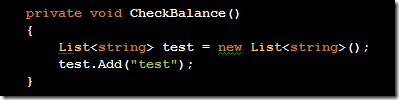

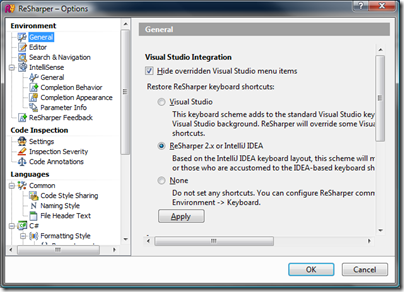

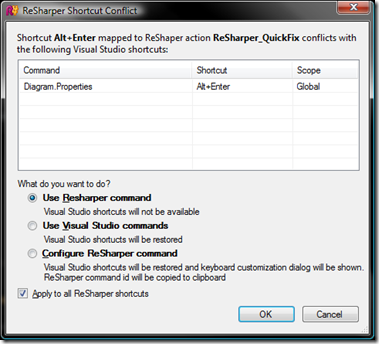

Releasing my ReSharper Course Material 12 Jun 2013 4:00 PM (11 years ago)

I've decided to release my ReSharper course material under the [Creative Commons Attribution 3.0 license](http://creativecommons.org/licenses/by/3.0/).

The material can be found on [GitHub](http://github.com/rasmuskl/ReSharperCourse).

A short description of the course can be found in the git repository README (pasted below).

The precompiled exercises-PDF can be downloaded [here on GitHub](https://github.com/rasmuskl/ReSharperCourse/raw/master/Source/ReSharper%20Exercises.pdf).

# Introduction

This is my basic [ReSharper](http://www.jetbrains.com/resharper/) course material developed in 2012 - based on ReSharper 6.1, although a lot of the material is still relevant.

It should provide enough content for 4 to 6 hours of entertainment. The course focuses on progressively harder exercises and hands-on experience over a lot of talk.

Exercises are generated through the ASP.NET MVC site found in `Source/CourseTasks`.

# Topics

- Why use ReSharper?

- Navigation

- Code Interaction

- Code Analysis

- Code Generation

- Refactoring

- Completion modes

- Refactoring combos

- Usage Inspection

- Solution Refactorings

- Move Code

- Navigating Hierarchies

- Inspect This

# Licensing

Course material is licensed under the Creative Commons Attribution-ShareAlike 3.0 Unported License.

For source code found in the Source folder - please check individual projects for license information (Rebus and BlogEngine.NET).

Microsoft DDC 2013 Reflections 26 Apr 2013 4:00 PM (11 years ago)

A few weeks ago, I attended and spoke at this year's Danish Developer Conference by Microsoft. The conference was run in both Horsens and Copenhagen and both venues were cinemas. I gave a talk with Mads Kristensen on the topic of Visual Studio productivity tips. Mads covered plain Visual Studio and I gave a whirlwind tour of what productivity with ReSharper could look like.

## The venue

I loved both venues, MegaScope in Horsens and Cinemaxx in Copenhagen - presenting in a cinema is just amazing. Forget everything about presentation resolutions and just fire away - Cinemaxx's projectors were 4K (4096 x 2304px). Having 60+ m² of screen estate makes everything much simpler. Combined with comfortable seats it was really enjoyable. We had an entire cinema as a Speakers Lounge as well.

## My talk

As previously mentioned, I gave my ReSharper whirlwind tour. The talk has very few slides and focuses on giving a quick overview of the most basic features in ReSharper. If you are looking specifically for my ReSharper slides, I have some old presentation blog posts containing a richer set.

I think it will be the last time that I am going to give my basic ReSharper talk, unless specifically requested - since I have given it quite a few times now. I might be tempted to create a more advanced ReSharper talk at some point though. Maybe I will actually speak about some C# related stuff next time.

## Other talks

I was generally happy with all the talks I saw, but I want to recommend 2 talks specifically, if you happen to get a chance to see them at a user group or at another conference:

#### Advanced Unit Testing (Danish: Unit testing for viderekommende)

[Mark Seemann](http://blog.ploeh.dk/) is a very experienced speaker and a passionate proponent of automated tests. This talk gives an introduction to some of the patterns to avoid brittle tests, especially in regards to test object construction and equality. In many regards it reflects some of the painful experiences I have gone through over the years.

#### Bigger, Faster, Stronger: Optimizing ASP.NET 4 and 4.5 Applications

[Mads Kristensen](http://madskristensen.net/) has given this talk so many times but it is better every time and it touches on so many helpful things to optimize your web pipeline from the server to the client. The talk is based around the [Web Developer Checklist](http://webdevchecklist.com/) - so if you can't see the talk live, at least take a look at the checklist.

Surviving no media keys on your new keyboard 18 Mar 2013 4:00 PM (12 years ago)

I've recently acquired a new keyboard, after using my trusty old Logitech for many years. I've come to rely on my media keys and the volume wheel for controlling Spotify or other apps.

My solution is to use [AutoHotKey](http://www.autohotkey.com) to bind the following combinations after a short conversation with [Mark](http://www.improve.dk) (although we don't entirely agree on the layout):

- Win + Numpad 4 - Previous track

- Win + Numpad 5 - Play / pause

- Win + Numpad 6 - Next track

- Win + Numpad 8 - Volume up

- Win + Numpad 2 - Volume down

- Win + Numpad 7 - Mute

Here's the script to add to AHK:

``` autohotkey
#Numpad4::Send {Media_Prev}
#Numpad5::Send {Media_Play_Pause}
#Numpad6::Send {Media_Next}
#Numpad2::Send {Volume_Down}
#Numpad7::Send {Volume_Mute}
#Numpad8::Send {Volume_Up}
```

... and on a final semi-unrelated note, I'll recommend my new mechanical keyboard - [Das Keyboard S Ultimate Silent](http://www.daskeyboard.com/model-s-ultimate-silent/). It's far from silent - but it's an awesome keyboard. The keys have a very nice feel as you're typing along and the keyboard itself is rather heavy (almost 2kg) and thus stays completely in place when typing.

Setting up Web Deploy 3.0 / MSDeploy 26 Sep 2012 4:00 PM (12 years ago)

I'm currently on the path of converting one of my sites from SFTP deployments to using [Web Deploy 3.0](http://www.iis.net/downloads/microsoft/web-deploy) and thought it might be interesting to document the process and the pitfalls that I run into. My approach is roughly based on [this guide](http://www.iis.net/learn/publish/using-web-deploy/configure-the-web-deployment-handler), but it wasn't a complete fit for me, so here we go.

## Motivation

So why would you want to use Web Deploy for deploying web sites? Compared to regular file copy or FTP deployments, Web Deploy offers the option of running a dedicated deployment service on your server that is actually aware of IIS and can help you make your deployments as smooth as possible. In my case, my SFTP service had started to lock random assemblies recently, and since I'd been wanting to upgrade to Web Deploy anyway, I thought now might be as good a time as any.

Web Deploy offers a multitude of different providers that can do a bunch of things for you, such as syncing IIS sites (6, 7 and 8), deploying packages and archiving sites. This post is dedicated to deploying a rather simple site that is already bin deployable.

## My setup

My setup is a remote server running Windows Server 2008 R2 with IIS 7.5 and a development environment on a Windows 7 Ultimate machine.

The site is an ASP.NET 4.0 mixed WebForms / MVC project. The application itself manages database migrations, so they're not in scope for the post either.

## Setup steps

1 - Created a dedicated user for deployments. It's nice to know that everything is locked down when you open up remote access.

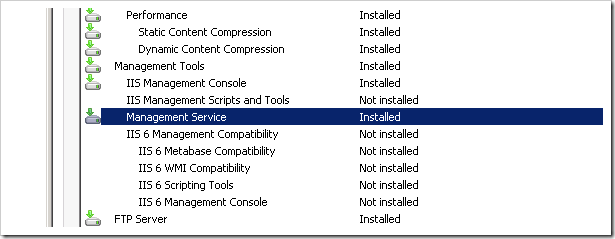

2 - Installed Management Service role for my IIS in Server Manager.

3 - Changed Web Management Service to Start automatically (delayed) and specified a specific deployment user.

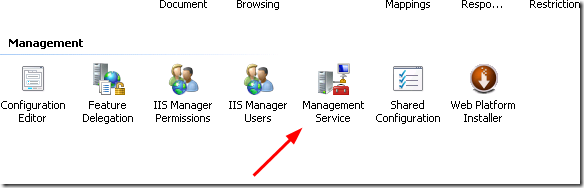

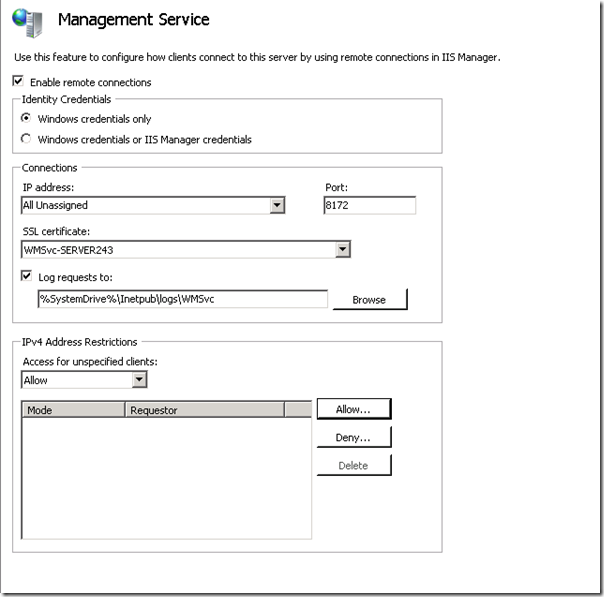

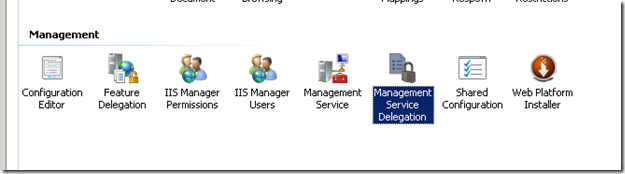

4 - Configured Management Service within IIS.

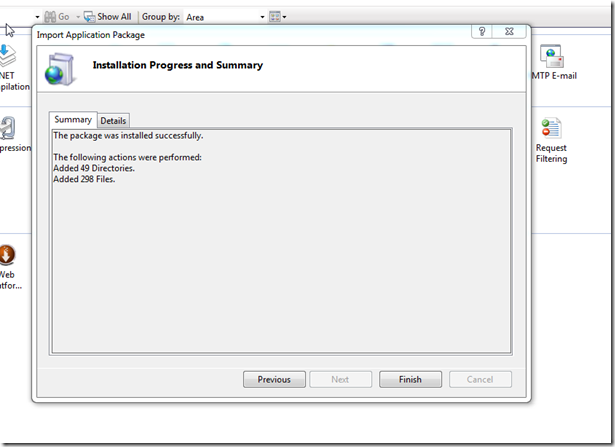

Like this:

5 - Created a new site in IIS. Gave the deployment user access to the site folder on the web server.

6 - Gave the deployment user access to the site through IIS Manager Permissions.

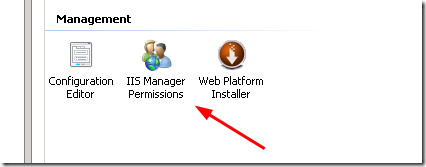

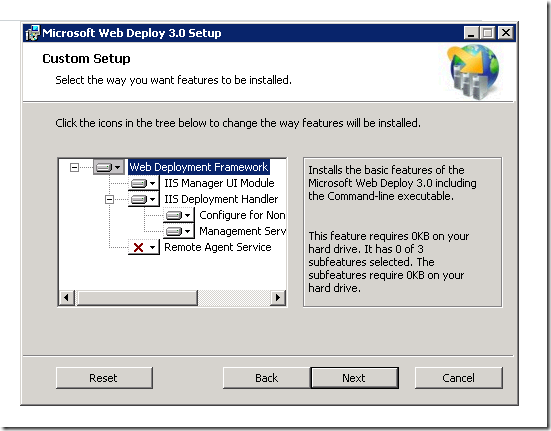

7 - Installed Web Deploy 3.0 including the IIS Deployment Handler, without using the Web Platform Installer. The IIS Deployment Handler option was not visible the first time I tried the custom install, because I hadn't yet installed the Management Service in IIS.

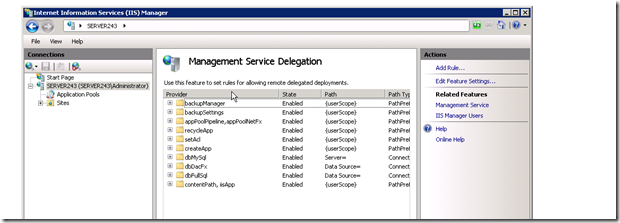

8 - The guide told me to add rules, but rules already existed in Management Service Delegation.

(Already existing rules:)

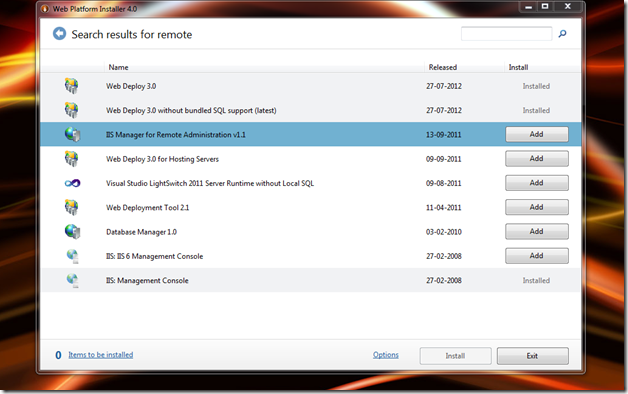

9 - Installed IIS on my local machine. It was rather freshly paved, so I hadn't yet. I'm guessing most of you can skip this step.

10 - Wasn't able to 'Connect to Site' as mentioned in the guide - so I installed IIS Manager for Remote Administration v1.1 using Web Platform Installer.

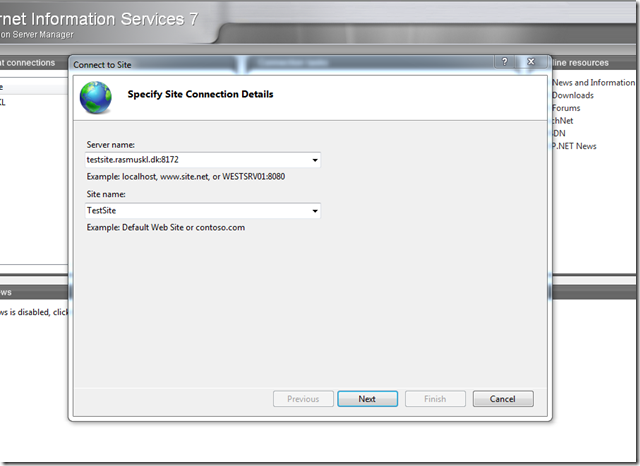

11 - Connected to the Site.

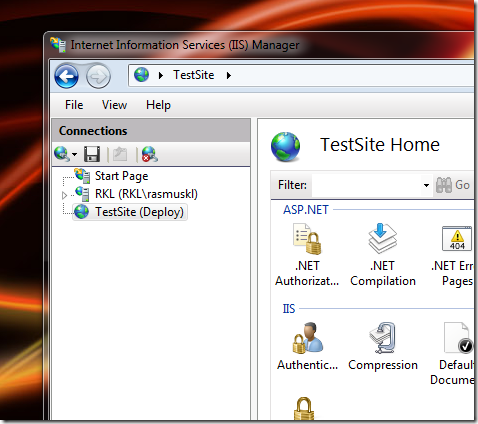

12 - Selected the site.

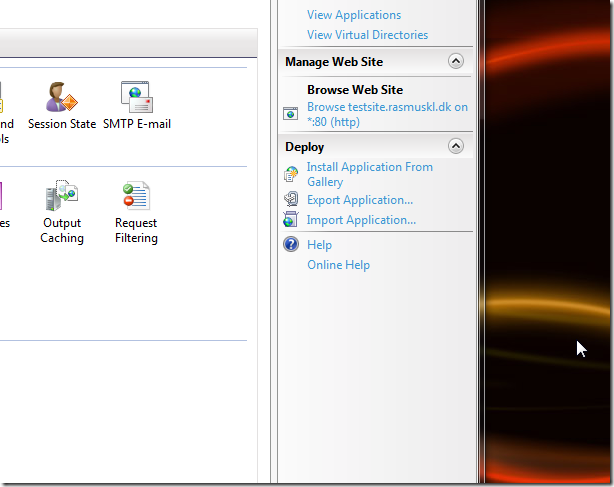

13 - ... aaaaand imported my application package that I'd created through Visual Studio.

14 - Profit!

## Conclusion

Now this is a rather crude picture guide. But hopefully it'll still be useful to some people. I know I'll check it next time I'm setting up Web Deploy.

My next goals are to adapt my rake scripts for the application to create the package on my TeamCity server and add one-click deployments directly from TeamCity.

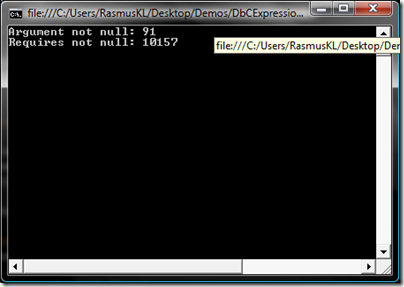

MOW2012: Exploring C# DSLs: LINQ, Fluent Interfaces and Expression Trees 18 Apr 2012 4:00 PM (13 years ago)

I gave my Exploring C# DSLs: LINQ, Fluent Interfaces and Expression Trees talk today at [Miracle Open World 2012](http://mow2012.dk) about C# Domain Specific Languages. The slides are now available [here](http://www.slideshare.net/rasmuskl/exploring-c-dsls-linq) and the demo source is available as a git repository on Bitbucket [here](http://bitbucket.org/rasmuskl/mow2012dsltalk/). Note that some of the source is just mocked implementation; the goal was not really to show production level quality - but rather concepts. The quality of the few tests and commit messages reflects this.

Converting a Mercurial repository to Git (Windows) 11 Mar 2012 4:00 PM (13 years ago)

After going through the pain of (re-)discovering how to convert a Mercurial repository into a Git repository on Windows, I thought I'd share how easy it really is. I've bounced back and forth between Mercurial and Git a few times - my current preference is Git, mainly because I like Git's branching strategy a bit better - but really, they're both excellent choices. I still find the best analogy for comparing them is that [Git is MacGyver and Mercurial is James Bond](http://importantshock.wordpress.com/2008/08/07/git-vs-mercurial/).

You can find quite a few [posts](http://arr.gr/blog/2011/10/bitbucket-converting-hg-repositories-to-git/) [describing](http://candidcode.com/2010/01/12/a-guide-to-converting-from-mercurial-hg-to-git-on-a-windows-client/) how to convert - but many of the steps mentioned in those guides are not needed if you have [TortoiseHg](http://tortoisehg.bitbucket.org/) installed, which most Windows Mercurial users do.

## Prerequisites

As I already mentioned, this guide expects that you have [TortoiseHg](http://tortoisehg.bitbucket.org/) installed on your system.

For the actual conversion, we're going to be using a Mercurial extension called [hggit](https://github.com/schacon/hg-git) that enables Mercurial to push and pull from Git repositories. You can either clone the [hggit](https://github.com/schacon/hg-git) repository on GitHub or grab a zipped version [here](https://github.com/schacon/hg-git/downloads). What we need is the **hggit** folder from the clone or zip file - put this some place handy and remember the path.

## Preparing the Git repository

In this guide we're going to be pushing our repository to a local Git repository - so let's create a bare repository - this way you'll avoid Git complaining about [pushing to a non-bare repository](http://gitready.com/advanced/2009/02/01/push-to-only-bare-repositories.html).

Open a command prompt, create a directory for the new repository and from within the directory execute:

`git init --bare`

That's it - our Git repository is ready. Alternatively you could push directly to a Git repository on either [GitHub](http://www.github.com), [Bitbucket](http://www.bitbucket.org) or another provider.

## Enabling hggit in Mercurial

Now we need to let Mercurial know about the hggit extension. This is done by adding it to the **.hgrc** or **mercurial.ini** file in your home directory (for me that'd be **c:\users\rasmuskl\mercurial.ini**).

In the config file, find the **[extensions]** section - or add it at the bottom if it's not already there. Then add a reference to the hggit extension followed by the path of the hggit folder:

```
hggit = c:\path\to\hggit
```

## Converting the repository

To convert the repository, simply open your command prompt, navigate to your Mercurial repository and do:

`hg push c:\path\to\bare\git\repository`

And you're done. You can now either clone the bare repository to a working directory - or push it to your GitHub or Bitbucket account.
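The Git side of the conversion can be sketched and checked on its own from Git Bash. The target path below is a made-up temporary location, and the `hg push` and `git clone` steps are left as comments since they depend on your own Mercurial repository.

```shell
# Hypothetical location for the bare repository that hg will push to.
bare_repo="$(mktemp -d)/converted.git"
git init --quiet --bare "$bare_repo"

# Confirm that the repository really is bare before pushing to it.
is_bare="$(git -C "$bare_repo" rev-parse --is-bare-repository)"
echo "bare: $is_bare"   # prints "bare: true"

# From inside the Mercurial repository, the push would then be:
#   hg push "$bare_repo"
# If I recall correctly, the hg-git README suggests bookmarking first
# (hg bookmark -r default master) so that a Git branch gets created.
#
# Afterwards a working copy can be cloned from the bare repository:
#   git clone "$bare_repo" working-copy
```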

Tips for decluttering Visual Studio 2010 27 Feb 2012 3:00 PM (13 years ago)

[Mogens Heller Grabe](http://mookid.dk/oncode/) wrote a [nice post](http://mookid.dk/oncode/archives/2725) about reducing the amount of clutter in your Visual Studio the other day - and I thought I'd chime in with a few tips.

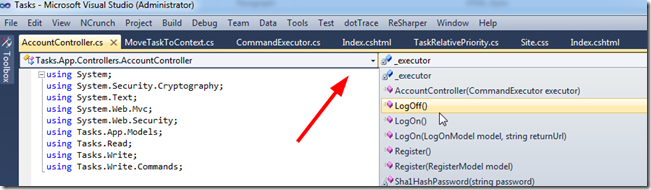

## Hiding the Navigation bar

First up we have the navigation bar - which is taking up a line of your precious screen estate.

To remove it, jump to:

Tools -> Options -> Text Editor -> All Languages

Uncheck 'Navigation bar'. For extra bonus points, check 'Line numbers'.

## Bringing back the Configuration Manager

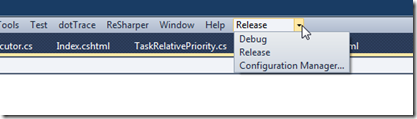

The following tip I got from [Rasmus Wulff Jensen](http://www.rwj.dk/) when I mentioned that the only thing I really like from the standard Visual Studio toolbars is the ‘Configuration Manager' drop down that allows me to switch between Debug and Release builds. He showed me a neat trick to put it in the top toolbar.

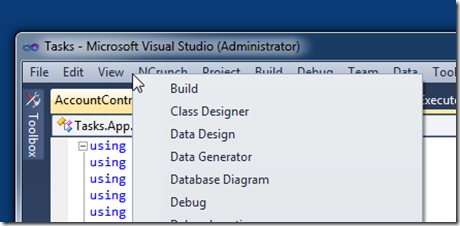

Right click on the tool bar to bring up the tool bar selection.

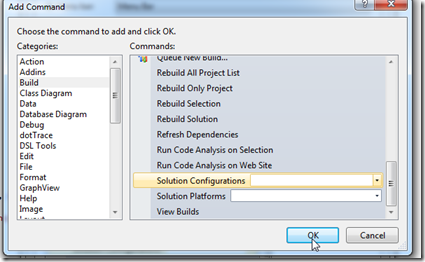

Choose 'Customize'. Change the tab to 'Commands' and move focus to the bottom of the list, like so:

Then hit the 'Add Command' button and go to the 'Build' category. Scrolling to the bottom, you will find a command labeled 'Solution Configurations'. Pick it.

You now have an inline configuration manager on your top toolbar without taking up extra space. The same trick can be applied to any other command.

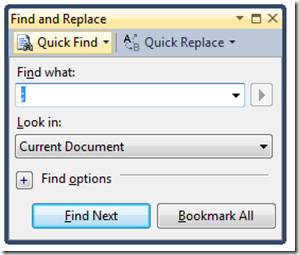

## Docking the Find dialog box

The ‘Find and Replace' dialog is probably one of the most used dialogs in Visual Studio - however with the default settings, you get a floating dialog that doesn't seem to want to go away after you're done using it.

If you dock it - like so:

... and unpin it, it will behave nicely and disappear when you're done searching or press ESC.

## Switch to a dark theme

This is more a matter of taste. Personally I've been using dark themes for Visual Studio forever. My eyes feel way more relaxed after a day of using a dark theme. My theory is that since computer monitors use [additive colors](http://en.wikipedia.org/wiki/Additive_color) (with white being a full blast mix of red, green and blue and black being no light), a dark theme simply emits way less light.

If you want, you can download my personal theme [here](/files/RKL-blue-theme-vs2010-2012-02-28.zip) (ReSharper specific). It's the same as I've previously posted, except I've adjusted it to work properly with Razor views too.

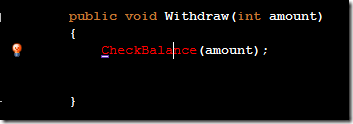

Peer Reviews - Why bother? 2 Jan 2012 3:00 PM (13 years ago)

Working with code is tricky business - the larger and more complex the code base, the trickier it gets. Ingraining micro-processes into your work day can help fix some of the issues, some of the broken windows that grow into almost any code base over time. Peer reviews are a great starting point.

There are many forms of peer review - but this post is mainly about informal check-in reviews. The process is simple: Any commit to the code repository must be signed off by another member of the team. Many argue that small commits are okay to go unchecked - but the size of a “small commit” grows in my experience. My counterargument is that reviewing a small commit will only take 30 seconds or less. Simply bring up the change set, go over the changes, discuss anything needed informally, then add "Review: <initials>" to the commit message and fire away.

## Benefits?

One of the first things you'll notice when you introduce peer reviews is **catching common commit mistakes**. These include small changes in files made while testing or debugging that were not meant to be committed, files that were not related to the current commit and, if your reviewer is alert, files missing from the commit.

Another small side effect is a **subconscious increase in code quality**. Knowing that someone else will review your code closely will increase the mental barrier to introducing hacks and other peculiarities that sometimes sneak into code.

While many developers focus on writing self-documenting, readable code, getting another pair of eyes on the code is great for clarifying its intent - uncovering small scale refactorings such as renames and extractions. The earlier you **uncover and discuss minor design issues** like these, and the further you get in **aligning team coding styles**, the better shape the code base is likely to end up in. Aligning coding styles across multiple teams is a hard task, so any improvement is worth taking. Once in a while a peer review will uncover larger design issues and ultimately lead to discarding the code under review and going for a different solution. This is not always a pleasant experience, but it's **easier to kill your darlings when nudged in the right direction** by a colleague.

In line with the last paragraph, reviews also **often spur discussions about larger things like domain concepts and architecture** - these just seem to come up more when looking at concrete issues in the code base. Likewise, the reviewer is investing some of their time in the code and putting their name on it, thus **increasing shared code ownership**.

Lastly, just seeing how other developers work can **give insight into other developers' IDE and tool tricks**. Being a keyboard-junkie myself, I often find myself exchanging IDE / productivity tips during reviews.

## Conclusion

Informal code reviews are, in my opinion, one of the cheaper ways to directly affect code quality - assuming they're taken seriously of course. You might notice that many of these benefits are the same as with pair programming - and they are. Pair programming is usually harder to get started on and not suited for all assignments, although most teams ought to do way more pair programming than they do. Peer review is broadly applicable. Try it with your team for a week or a month - if I'm wrong and nothing improves, I'll buy you a beer next time we meet :-)

Check your backups - unexpected SQL Server VSS backup 29 Nov 2011 3:00 PM (13 years ago)

Your backup is only as good as your last restore. I recently changed the backup strategy on my SQL Server 2008 from a full nightly backup to incremental nightly backups with a full backup only once a week. SQL Server's incremental backups (differential backups, in SQL Server's own terminology) are based on the last full backup. This is nice when you go to restore them, since you only need the full backup plus the latest incremental backup, not any intermediary backups.

However, a few days back I wanted to check some queries on a larger dataset and decided to check my backups at the same time. I fetched the full and incremental backups from the server and started the local restore:

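``` sql
-- Restore the full backup first; NORECOVERY keeps the database in the
-- restoring state so the incremental backup can be applied on top.
RESTORE DATABASE [testdb]
FROM DISK = N'C:\temp\full.wbak'
WITH FILE = 1, NORECOVERY, REPLACE

-- Apply the nightly incremental backup and bring the database online.
RESTORE DATABASE [testdb]
FROM DISK = N'C:\temp\incremental.bak'
WITH FILE = 1, RECOVERY
```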

The first restore went through fine, but restoring the incremental backup resulted in the following error message:

This differential backup cannot be restored because the database has not been restored to the correct earlier state.

SQL Server refused to restore my incremental backup - this is only supposed to happen if there has been another full backup in between. I double checked the backups I had fetched, checked that I had set up the new backups correctly and that the old backup job was gone. Everything seemed fine.
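To make the "another full backup in between" rule concrete, here is a minimal toy model in Python. It is only a sketch of the rule as described in this post, not T-SQL and not how SQL Server tracks backups internally. The `Backup` class, the helper functions and all the backup names and timestamps are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Backup:
    name: str      # hypothetical label, for illustration only
    kind: str      # "full" or "diff"
    taken_at: int  # simplified timestamp (an hour counter)


def base_of(diff: Backup, history: List[Backup]) -> Optional[Backup]:
    """Return the most recent full backup taken before `diff`, if any."""
    fulls = [b for b in history if b.kind == "full" and b.taken_at < diff.taken_at]
    return max(fulls, key=lambda b: b.taken_at, default=None)


def can_restore(full: Backup, diff: Backup, history: List[Backup]) -> bool:
    """A differential only applies on top of the full backup it is based on."""
    return base_of(diff, history) == full


weekly_full = Backup("weekly-full", "full", taken_at=0)
other_full = Backup("other-full", "full", taken_at=20)  # taken by some other tool
nightly_diff = Backup("nightly-diff", "diff", taken_at=24)

# With only my own backups in the history, the pair restores fine.
print(can_restore(weekly_full, nightly_diff, [weekly_full, nightly_diff]))  # True

# An extra full backup in between silently becomes the new base.
history = [weekly_full, other_full, nightly_diff]
print(can_restore(weekly_full, nightly_diff, history))  # False
print(can_restore(other_full, nightly_diff, history))   # True
```

The point of the model is that the differential itself is fine - it just belongs to a different full backup than the one I was trying to restore it onto.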

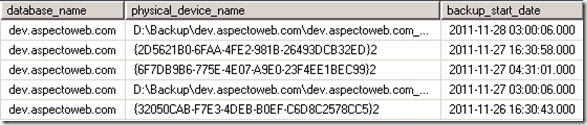

I then explored the backup history a bit further with a query adjusted from the one found in [this](http://blog.sqlauthority.com/2010/11/10/sql-server-get-database-backup-history-for-a-single-database/) post:

``` sql
-- List the ten most recent backups recorded for the current database,
-- including the device each one was written to.
SELECT TOP 10
    s.database_name,
    m.physical_device_name,
    s.backup_start_date
FROM msdb.dbo.backupset s
INNER JOIN msdb.dbo.backupmediafamily m ON s.media_set_id = m.media_set_id
WHERE s.database_name = DB_NAME() -- Remove this line to list all databases
ORDER BY s.backup_start_date DESC
```

The result showed that there had indeed been backups in between my nightly runs:

Further research revealed that backup devices with a GUID name are virtual backup devices and the times of backups matched the daily schedule of our bare metal system backup. Turns out that [R1Soft's backup software](http://www.r1soft.com/windows-cdp/) integrates with SQL Server's VSS writer service to perform backups when it finds databases on disk.

Disabling the VSS writer service returned the backups to a working state (VSS backup + my own incremental would also have worked). I did consider skipping my own nightly backups (since the VSS backup is super fast) and just using the R1Soft one, but decided against it for now - my own management is already set up and if I do need to restore, grabbing the backup from the external backup is much slower and more tedious than having it on disk already.

NHibernate Flushing and You 14 Jun 2011 4:00 PM (13 years ago)

Working with NHibernate, you will eventually have to know something about flushing. Flushing is the process of persisting the current session changes to the database. In this post, I will explain how flushing works in NHibernate, which options you have and what the benefits and disadvantages are.

As you work with the NHibernate session, loading existing entities and attaching new entities, NHibernate will keep track of the objects that are associated with the current session. When a flush is triggered, NHibernate will perform dirty checking: inspect the list of attached entities to determine what needs to be saved and which SQL statements are required to persist the changes. NHibernate offers several different flush modes that determine when a flush is triggered. The flush mode can be set using a property on the session (usually when opening the session).

Out of the box NHibernate defaults to **FlushMode.Auto**, which is a flush mode that offers a minimum of surprises while providing decent performance. Auto will flush changes to the database when a manual flush is performed (using ISession.Flush()), when a transaction is committed and when NHibernate deems that an auto flush is necessary to serve up-to-date results in response to queries.

While the auto flush is convenient, it has a few disadvantages as well. To determine whether an auto flush is required before executing a query, NHibernate has to inspect the entities attached to the session. This is clearly a performance overhead and, unfortunately, as application complexity (and thus likely session length, number of queries and number of attached entities) increases, the cost will be in the ballpark of O(q*e) - growing with the product of the number of **q**ueries and **e**ntities. Furthermore, auto flushes are not always easy to predict, especially in complex systems - this can lead to unexpected exceptions if using things like NHibernate's merge and replicate features (a blog post all by itself).

A better solution for bigger applications is **FlushMode.Commit**. This flush mode will flush on manual flushes and when transactions are committed. Avoiding auto flushes provides quite a few performance opportunities: it will potentially require fewer SQL statements (multiple changes to the same data), and it will cause fewer round trips to the database and thus enable better batching. What you need to understand before changing your flush mode to FlushMode.Commit is that your queries may return stale results until you commit transactions. However, this is generally what people expect when working with transactions, so it is usually not a problem. In some cases, you might have to perform a manual flush, but it makes sense to reduce the number of these (since they defeat the benefit of the flush mode).

NHibernate offers two more (usable) flush modes. **FlushMode.Always** will trigger a flush before every query and is thus generally not useful except for maybe some special edge cases. **FlushMode.Never** will cause the session to only flush when manually flushing - this can be useful to create a read-only session (better performance and more assurance that no flushes are performed). For read-only / bulk needs, it's also practical to look into IStatelessSession (low memory / performant for bulk operations) and ReadOnly on queries and criteria, introduced in NHibernate 3.1.
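The trade-off between the flush modes can be sketched with a small toy model. This is Python, not C#, and it is emphatically not NHibernate's API or implementation: `ToySession`, `run` and the counters are hypothetical names made up for illustration, and the model simplifies by letting the auto mode inspect every attached entity before every query. FlushMode.Always is left out because this toy cannot tell it apart from the simplified auto mode.

```python
from enum import Enum

_MISSING = object()  # marks "not in the database yet"


class FlushMode(Enum):
    AUTO = "auto"      # flush before queries and on commit
    COMMIT = "commit"  # flush on commit only
    NEVER = "never"    # flush only when flush() is called by hand


class ToySession:
    """Toy stand-in for a session. This is NOT how NHibernate is implemented."""

    def __init__(self, flush_mode):
        self.flush_mode = flush_mode
        self.entities = {}     # attached entities: key -> in-memory value
        self.database = {}     # pretend database: key -> persisted value
        self.dirty_checks = 0  # number of entity inspections so far

    def attach(self, key, value):
        self.entities[key] = value

    def flush(self):
        """Dirty-check every attached entity and persist the changed ones."""
        for key, value in self.entities.items():
            self.dirty_checks += 1
            if self.database.get(key, _MISSING) != value:
                self.database[key] = value

    def query(self, key):
        # Simplification: AUTO inspects all entities before every query.
        if self.flush_mode is FlushMode.AUTO:
            self.flush()
        return self.database.get(key)

    def commit(self):
        if self.flush_mode is not FlushMode.NEVER:
            self.flush()


def run(mode, entity_count=100, query_count=10):
    """Attach some entities, run some queries, then commit."""
    session = ToySession(mode)
    for key in range(entity_count):
        session.attach(key, "changed")
    results = [session.query(0) for _ in range(query_count)]
    session.commit()
    return session, results


auto, auto_results = run(FlushMode.AUTO)
commit, commit_results = run(FlushMode.COMMIT)

print(auto.dirty_checks)    # 1100: (10 queries + 1 commit) * 100 entities
print(commit.dirty_checks)  # 100: only the commit inspects the entities
print(auto_results[0])      # changed
print(commit_results[0])    # None: stale read, nothing was flushed yet
```

In the toy, the auto mode pays for dirty checking on every query, while the commit mode pays once but hands back stale results until the commit - the same trade-off as described above, just with made-up numbers.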

Countdown timer 25 May 2011 4:00 PM (13 years ago)

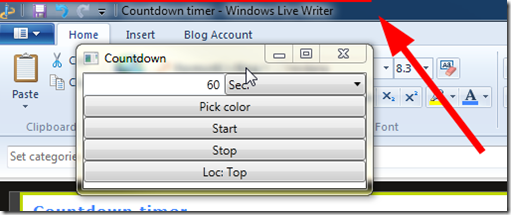

I demoed a small app today at the Demo Dag session at Community Day.

The app was developed at an ANUG Code Dojo - and the purpose is simply to create timers that are a few pixels high either at the bottom or top of your screen - to be used for running Pomodoros or other timing needs - like an informal timer for a presentation.

I got a few requests for the app, so I've uploaded the source to Bitbucket [here](http://bitbucket.org/rasmuskl/countdown/downloads) (there's also a v. 0.1 zip file with an executable - if you don't want to build from source).

Bear in mind that this app was hacked together in a few hours (with the purpose of learning WPF actually - we got sidetracked) - so don't expect quality code or an excellent polished app. It has quirks - you have been warned :-)

Enjoy.

Slides from ANUG VS Launch Event 23 May 2010 4:00 PM (14 years ago)

I spoke last week about ReSharper 5 at [ANUG](http://www.anug.dk)'s Visual Studio 2010 launch event. Here are the slides from my presentation. The slides are in Danish and probably won't make too much sense, as most of my presentation was done demoing stuff - but they should give the gist of it.

[Slides](/files/ReSharper-5-ANUG-VS-Launch.pptx)

If you have any questions on my presentation, feel free to shoot me a mail here on the blog :-)

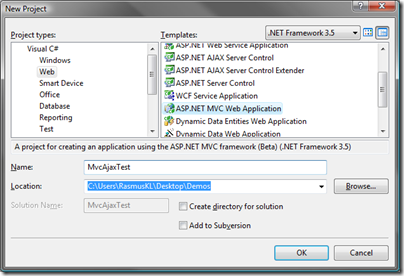

Slides from Miracle Open World 19 Apr 2010 4:00 PM (15 years ago)

Last week I gave two talks at MOW2010. It was an awesome conference and the 80% talks + 80% networking concept really held true. Hope to be going again next year - as a speaker or otherwise. Here are the slides from my two talks.

## Increasing productivity with ReSharper

This talk is about optimizing the mechanical part of your work. See how a keyboard-centric focus can speed up your work and how to navigate codebases easily independent of size. Visual Studio 2010 has introduced more advanced keyboard features, but ReSharper is still king, so it will be the main focus of the talk. While this session will contain a lot of fast-paced flashy keyboard shortcuts, it will also contain basic techniques and advice for you to get started with your own keyboard.

[Slides](/files/Productivity-with-ReSharper.pptx)

## Practical ASP.NET MVC 2

ASP.NET MVC is the new kid on the Microsoft block. This talk will give you a short introduction to the framework and the new features in ASP.NET MVC 2. After the introduction, we will dig into some practical experiences and common situations of actually implementing a system using ASP.NET MVC. Detours will include other alternative open-source web frameworks and maybe even some JavaScript.

[Slides](/files/Practical-ASP.NET-MVC.pptx)

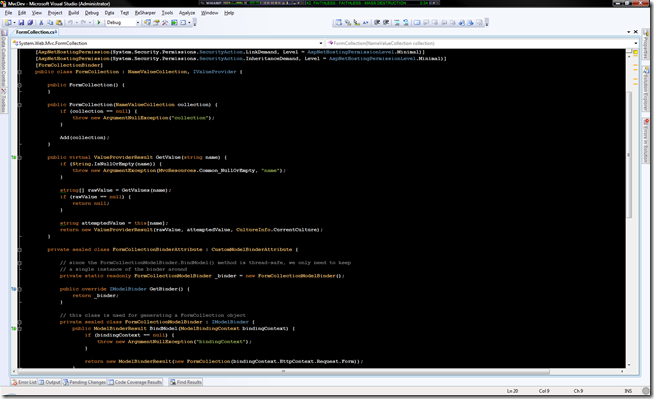

Black / Blue Visual Studio 2010 + ReSharper 5 Theme 19 Apr 2010 4:00 PM (15 years ago)

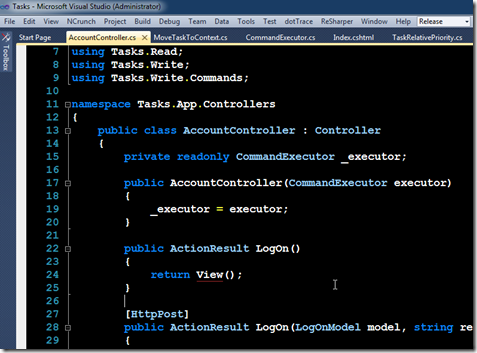

I have been using a black background in Visual Studio for as long as I can remember. I started out using Rob Conery's black / orange TextMate theme, but last year I created my own black theme with a blueish style. Today at the [ANUG](http://www.anug.dk) code dojo we tweaked it to actually look alright with the changes in Visual Studio and especially ReSharper.

You can download the theme [here](/files/RKL-blue-theme-vs2010-2010-04-20.zip) if you want a nice black theme.

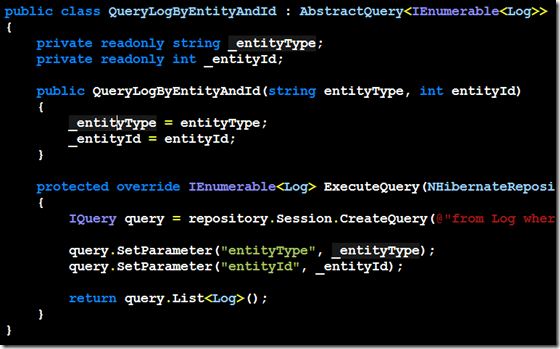

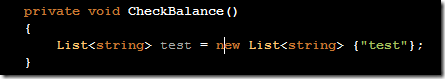

It looks like this:

Selection: