Export and download themes and extensions from Qlik Cloud 27 Jan 2024 3:00 PM (last year)

Third-party themes and extensions cannot be exported via the user interfaces in Qlik Cloud.

They can, however, be downloaded via public APIs, in the following ways:

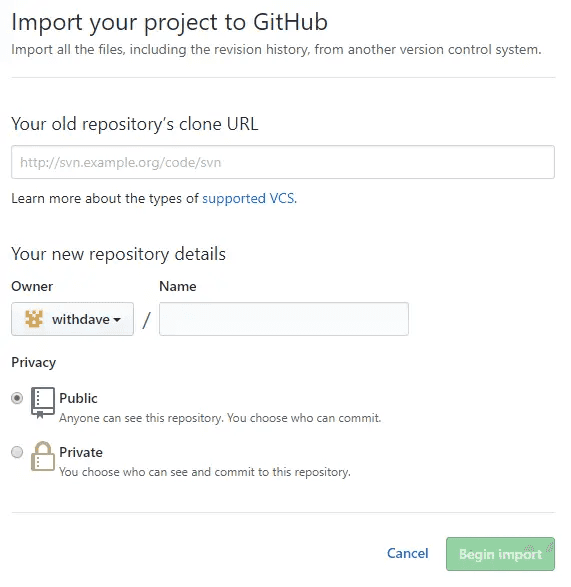

- Using Qlik Application Automation - use the extension blocks from the

Qlik Platform Operationsconnector- For general information on how to use this connector, refer to the getting started guide.

- Add a

List Extensionsblock. - Filter the block output to match the name of your extension.

- Add a

Get Extension Archiveblock to retrieve the base64 encoded archive containing the archive. - Use a file storage connector to store the archive to your service of choice, move it to another tenant, version control it, or base64 decode it to convert it back to an archive (online services are available).

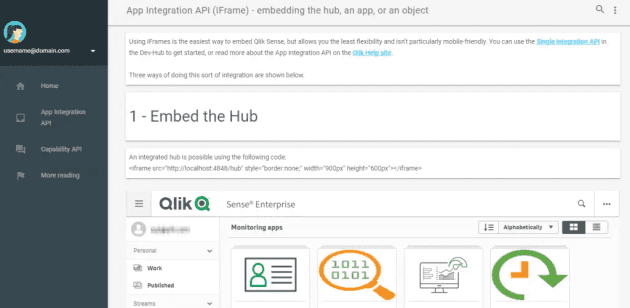

- Using the APIs in an active browser session

- Navigate to the extensions view in the management console of your tenant, to ensure you have an active session.

- Navigate to https://tenant.region.qlikcloud.com/api/v1/extensions - this will produce a list of your extensions. Identify the extension you wish to download and copy the

idvalue of that extension. - Navigate to https://tenant.region.qlikcloud.com/api/v1/extensions/{id}/file - replace

{id}with the ID you copied above. Your extension archive will be downloaded. - Review the full API specifications to learn more about extensions and themes.

- Using qlik-cli - there is no native command for extension management, however you can use the raw commands on the APIs listed above to connect and manage extensions.

Creating a while loop in Qlik Application Automation 13 Jan 2023 3:00 PM (2 years ago)

The lack of for n or while loops in Qlik's Application Automation, and a method

to create a for n loop was previously covered in creating a for n loop.

This post covers emulating a more adaptable while type approach using the label blocks.

Again, if you don't need to check state and want to run only a set number of iterations, then using the custom code block from the earlier post is easiest. It also has the advantage that all input linking works correctly, whereas with labels you will break lookups within the automation between sections.

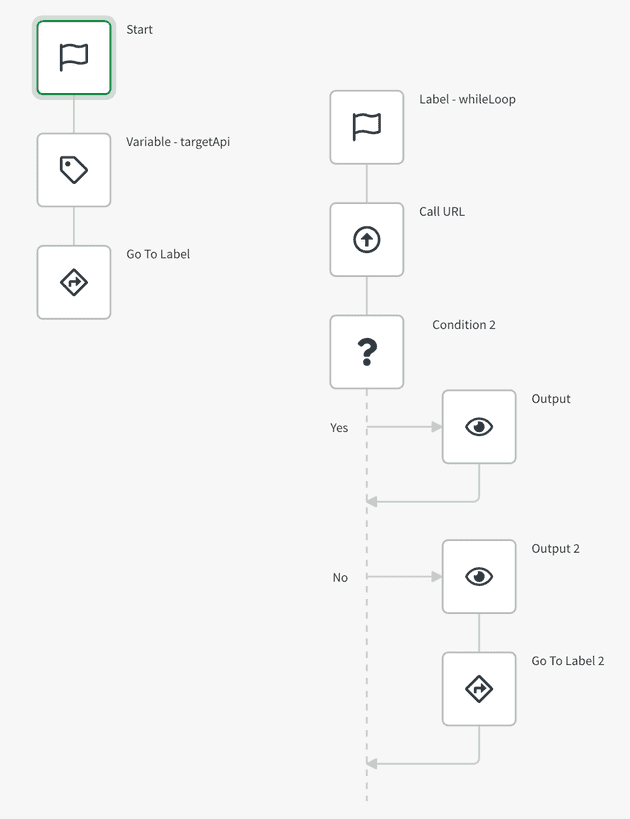

While loop - via label and condition blocks

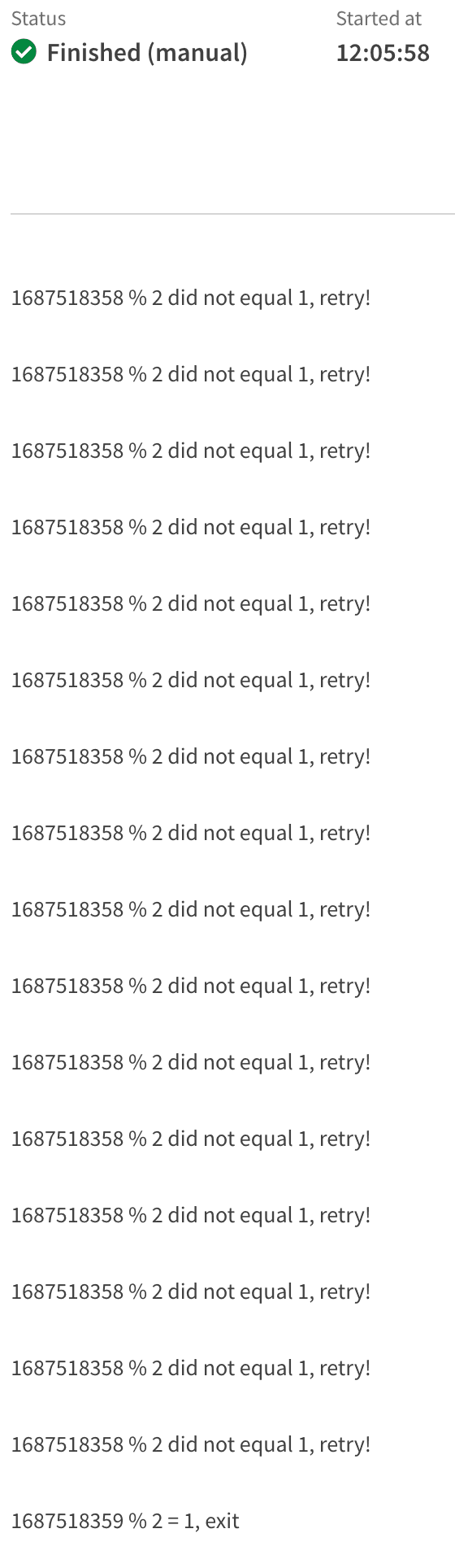

This automation retrieves a value from a public API (current timestamp), and does a simple check on the value.

By using a go to label and a condition block, we can evaluate the result, and either end the automation if true, or retry if false.

This approach is useful when used in conjunction with a sleep block to poll APIs.

To import this example, use the automation snippet below by copying the JSON and pasting it straight into a workspace.

Automation snippet:

{"blocks":[{"id":"A7049C5D-39CE-49CE-9153-278C72ED2748","type":"StartBlock","disabled":false,"name":"Start","displayName":"Start","comment":"","childId":"DD391D04-CE90-4FBD-BEB8-DB7C314675D5","inputs":[{"id":"run_mode","value":"manual","type":"select","structure":{}}],"settings":[],"collapsed":[{"name":"loop","isCollapsed":false}],"x":100,"y":100},{"id":"DCC7B8D4-28D9-4449-A8E8-72ACFA0DF3A3","type":"GotoBlock","disabled":false,"name":"goToLabel","displayName":"Go To Label","comment":"","childId":null,"inputs":[{"id":"label","value":"C75AC931-7BA3-4672-8BBF-2E9A0C0BCC2C","type":"select","displayValue":"whileLoop","structure":[]}],"settings":[],"collapsed":[{"name":"loop","isCollapsed":false}],"x":87.88671875,"y":340.31640625},{"id":"C75AC931-7BA3-4672-8BBF-2E9A0C0BCC2C","type":"LabelBlock","disabled":false,"name":"label","displayName":"Label - whileLoop","comment":"","childId":"8A4533EF-0492-46F0-B70D-EDCD031D26C4","inputs":[{"id":"name","value":"whileLoop","type":"string","structure":[]}],"settings":[],"collapsed":[{"name":"loop","isCollapsed":false}],"x":412.88671875,"y":174.31640625},{"id":"DD391D04-CE90-4FBD-BEB8-DB7C314675D5","type":"VariableBlock","disabled":false,"name":"targetApi","displayName":"Variable - targetApi","comment":"","childId":"DCC7B8D4-28D9-4449-A8E8-72ACFA0DF3A3","inputs":[],"settings":[],"collapsed":[{"name":"loop","isCollapsed":false}],"x":84.05859375,"y":193.79296875,"variableGuid":"56FD86DC-C2C4-43F7-8F46-7CCF22B7FE49","operations":[{"id":"set_value","key":"FF1A6796-D2FF-4C0D-985D-40D434976769","name":"Set value of { variable }","value":"https://worldtimeapi.org/api/timezone/Europe/London"}]},{"id":"3C80AC6A-1D75-42F1-AC9D-FE95E29F51A2","type":"GotoBlock","disabled":false,"name":"goToLabel2","displayName":"Go To Label 2","comment":"","childId":null,"inputs":[{"id":"label","value":"C75AC931-7BA3-4672-8BBF-2E9A0C0BCC2C","type":"select","displayValue":"whileLoop","structure":[]}],"settings":[],"collapsed":[{"name":"loop","isCollapsed":false}],"x":643.88671875,"y":598.31640625},{"id":"B792FCE5-46CA-4D96-8B39-D665599B16A4","type":"IfElseBlock","disabled":false,"name":"condition2","displayName":"Condition 2","comment":"","childId":null,"inputs":[{"id":"conditions","value":{"mode":"all","conditions":[{"input1":"{mod: {$.callURL.unixtime}, 2}","input2":"1","operator":"="}]},"type":"custom","structure":[]}],"settings":[],"collapsed":[{"name":"yes","isCollapsed":false},{"name":"no","isCollapsed":false}],"x":636,"y":400,"childTrueId":"D818C1FB-D5AA-453E-AE65-EBEED1D37FA3","childFalseId":"6346A1DB-527E-4BD0-B447-DC289F7F08FA"},{"id":"8A4533EF-0492-46F0-B70D-EDCD031D26C4","type":"CallUrlBlock","disabled":false,"name":"callURL","displayName":"Call URL","comment":"","childId":"B792FCE5-46CA-4D96-8B39-D665599B16A4","inputs":[{"id":"url","value":"{$.targetApi}","type":"string","structure":[]},{"id":"method","value":"GET","type":"select","displayValue":"GET","structure":[]},{"id":"timeout","value":"","type":"string","structure":[]},{"id":"params","value":null,"type":"object","mode":"keyValue","structure":[]},{"id":"headers","value":null,"type":"object","mode":"keyValue","structure":[]},{"id":"encoding","value":"","type":"string","structure":[]}],"settings":[{"id":"blendr_on_error","value":"stop","type":"select","structure":[]},{"id":"full_response","value":false,"type":"checkbox","structure":[]},{"id":"capitalize_headers","value":false,"type":"checkbox","structure":[]},{"id":"automations_censor_data","value":false,"type":"checkbox","structure":[]}],"collapsed":[{"name":"loop","isCollapsed":false}],"x":487,"y":270},{"id":"D818C1FB-D5AA-453E-AE65-EBEED1D37FA3","type":"ShowBlock","disabled":false,"name":"output","displayName":"Output","comment":"","childId":null,"inputs":[{"id":"input","value":"{$.callURL.unixtime} % 2 = 1, exit","type":"string","structure":[]}],"settings":[{"id":"display_mode","value":"add","type":"select","structure":[]}],"collapsed":[{"name":"loop","isCollapsed":false}],"x":585.80859375,"y":510.4296875},{"id":"6346A1DB-527E-4BD0-B447-DC289F7F08FA","type":"ShowBlock","disabled":false,"name":"output2","displayName":"Output 2","comment":"","childId":"3C80AC6A-1D75-42F1-AC9D-FE95E29F51A2","inputs":[{"id":"input","value":"{$.callURL.unixtime} % 2 did not equal 1, retry!","type":"string","structure":[]}],"settings":[{"id":"display_mode","value":"add","type":"select","structure":[]}],"collapsed":[{"name":"loop","isCollapsed":false}],"x":651.65234375,"y":640.31640625}],"variables":[{"guid":"56FD86DC-C2C4-43F7-8F46-7CCF22B7FE49","name":"targetApi","type":"string"}]}Creating a for n loop in Qlik Application Automation 27 Dec 2022 3:00 PM (2 years ago)

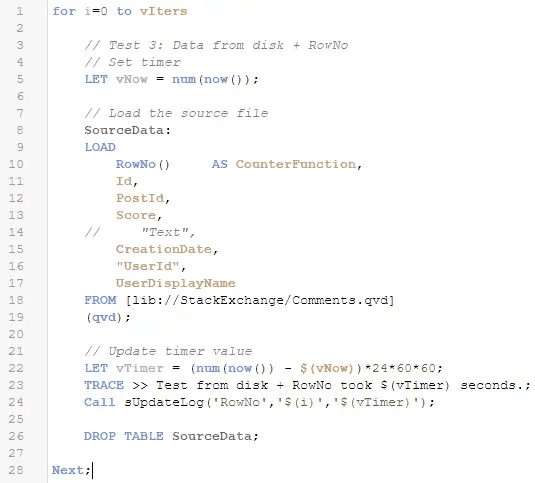

Qlik's Application Automation doesn't support generating a for n loop out with a

block. While there are blocks for looping over existing content (i.e. for each),

you need to use a different approach to run a set number of iterations without a

list or object to iterate over.

There are two common methods:

- Generate a list using a custom code block, and then loop over with a loop block

- Use label and condition blocks to continuously check the state of a variable

If you don't need to check state and want to run only a set number of iterations, then using the custom code block is easiest. It also has the advantage that all input linking works correctly, whereas with labels you will break lookups within the automation between sections.

If you need to check a state/ verify something has completed, then you should use labels and conditions. These are harder to build, but support you being able to modify the state during the run more easily.

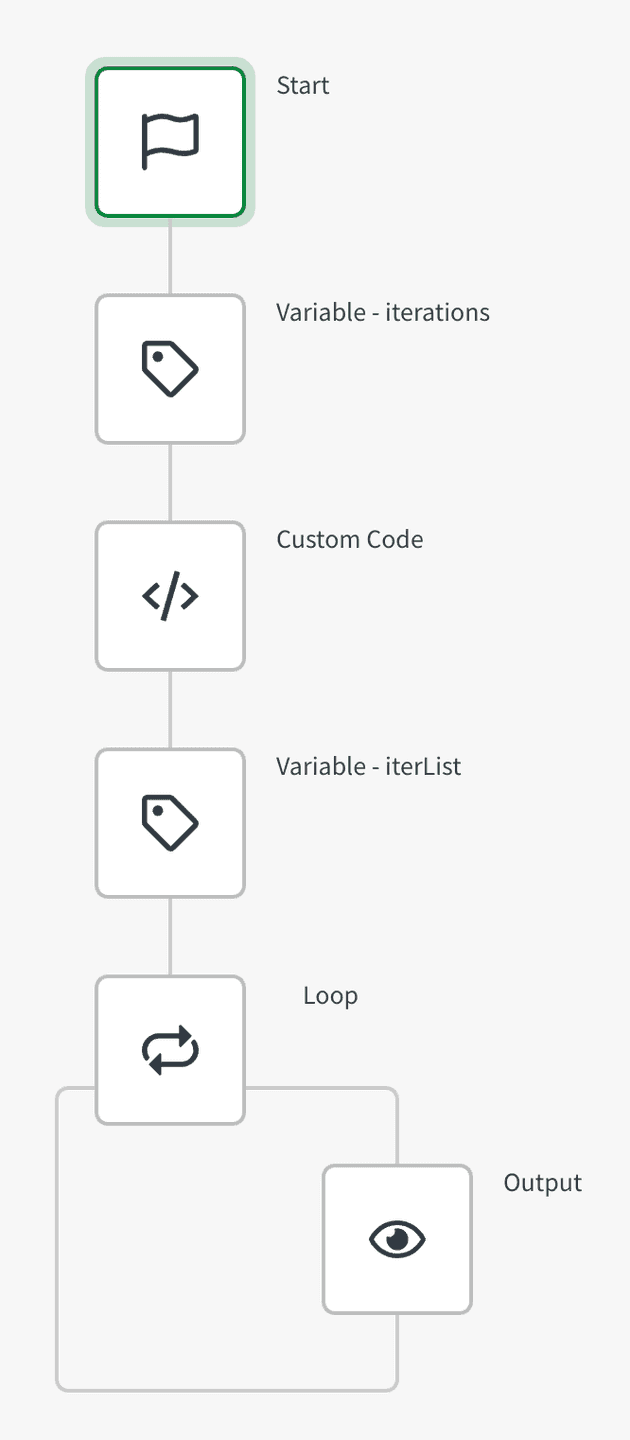

For n loop - via custom code block

This automation snippet accepts an input variable for the number of iterations to

generate, and generates an integer between 1 and that number, which is returned as

iterList.

You can then use a standard loop block to run your iteration.

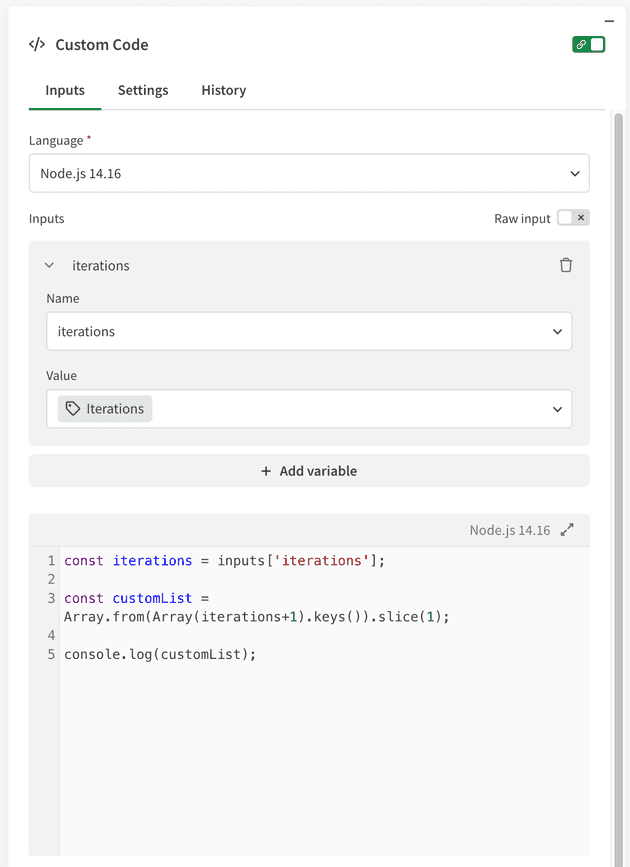

The custom code is javascript, returning the list to the output of the block.

Javascript snippet:

const iterations = inputs['iterations'];

const customList = Array.from(Array(iterations+1).keys()).slice(1);

console.log(customList);If you prefer to copy this whole example, use the automation snippet below by copying the JSON and pasting it straight into a workspace.

Automation snippet:

{"blocks":[{"id":"B9BACC33-529C-483F-8339-A7029E944BE2","type":"VariableBlock","disabled":false,"name":"iterations","displayName":"Variable - iterations","comment":"","childId":"C3E01264-F076-4BB2-9F59-FC236AF1875F","inputs":[],"settings":[],"collapsed":[{"name":"loop","isCollapsed":false}],"x":250,"y":240,"variableGuid":"EB4BB61B-4EB4-41E9-8F11-7476437AF4B5","operations":[{"key":"1D9C49ED-332A-4C07-967E-F8425B4B16BF","id":"set_value","name":"Set value of { variable }","value":"100"}]},{"id":"C3E01264-F076-4BB2-9F59-FC236AF1875F","type":"CustomCodeBlock3","disabled":false,"name":"customCode","displayName":"Custom Code","comment":"","childId":"4F8812D7-AA6B-4C4D-9F7C-86AF7C48E74B","inputs":[{"id":"language","value":"nodejs","type":"select","displayValue":"Node.js 14.16","structure":{}},{"id":"inputs","value":[{"key":"iterations","value":"{$.iterations}"}],"type":"object","mode":"keyValue","structure":{}},{"id":"code","value":"const iterations = inputs['iterations'];\n\nconst customList = Array.from(Array(iterations+1).keys()).slice(1);\n\nconsole.log(customList);","type":"code","structure":{}}],"settings":[{"id":"blendr_on_error","value":"stop","type":"select","structure":{}},{"id":"automations_censor_data","value":false,"type":"checkbox","structure":{}}],"collapsed":[{"name":"loop","isCollapsed":false}],"x":-293,"y":219},{"id":"4F8812D7-AA6B-4C4D-9F7C-86AF7C48E74B","type":"VariableBlock","disabled":false,"name":"iterList","displayName":"Variable - iterList","comment":"","childId":"5CF740A2-EC7E-4B16-911B-8A730F417D9C","inputs":[],"settings":[],"collapsed":[{"name":"loop","isCollapsed":false}],"x":0,"y":240,"variableGuid":"455BB8DB-169E-47BA-9445-335607D122CC","operations":[{"key":"E737BA8D-EB66-4909-A684-0362DED20DC5","id":"merge","name":"Merge other list into { variable }","value":"{$.customCode}","allow_duplicates":"no"}]},{"id":"5CF740A2-EC7E-4B16-911B-8A730F417D9C","type":"ForEachBlock","disabled":false,"name":"loop","displayName":"Loop","comment":"","childId":null,"inputs":[{"id":"input","value":"{$.iterList}","type":"string","structure":{}}],"settings":[{"id":"automations_censor_data","value":false,"type":"checkbox","structure":{}}],"collapsed":[{"name":"loop","isCollapsed":false}],"x":-417,"y":202,"loopBlockId":"43758FA3-912E-4230-822D-22C12B895487"},{"id":"43758FA3-912E-4230-822D-22C12B895487","type":"ShowBlock","disabled":false,"name":"output","displayName":"Output","comment":"","childId":null,"inputs":[{"id":"input","value":"{$.loop.item}/{count: {$.iterList}}","type":"string","structure":{}}],"settings":[{"id":"display_mode","value":"add","type":"select","structure":{}}],"collapsed":[{"name":"loop","isCollapsed":false}],"x":-391,"y":209}],"variables":[{"guid":"455BB8DB-169E-47BA-9445-335607D122CC","name":"iterList","type":"list"},{"guid":"EB4BB61B-4EB4-41E9-8F11-7476437AF4B5","name":"iterations","type":"number"}]}Creating a while loop via label blocks is discussed in creating a while loop.

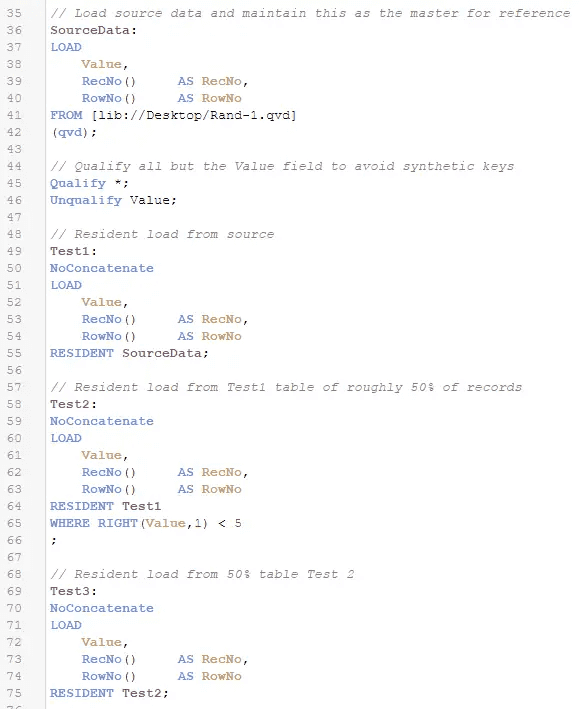

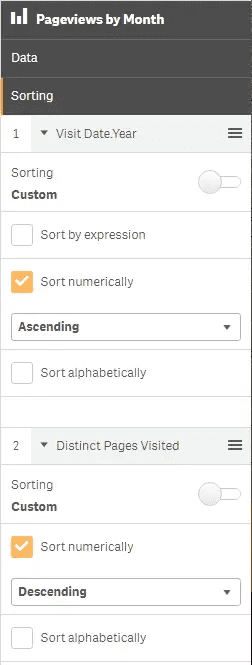

Group by performance in Qlik Sense & QlikView with order by sorting 8 Jan 2021 3:00 PM (4 years ago)

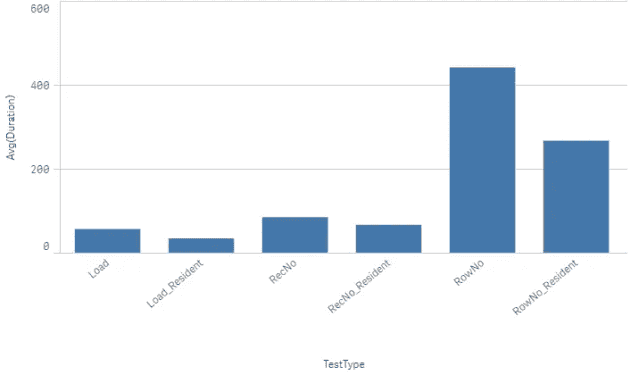

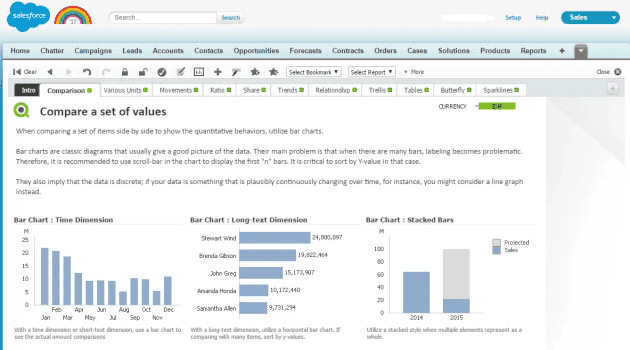

When asked how to aggregate data in Qlik products in the quickest way, the answer is "it depends". While the key factor is the uniqueness/ cardinality of the aggregating dimensions, there are other elements at play.

In general, though, the fastest way to aggregate in the data load script (after loading the data into memory) is:

- When aggregating by a low cardinality dimension in a small data set, resident load and run a group by immediately (this is also the fewest lines of script)

- When aggregating by a higher cardinality dimension, or on one that requires a lot of sorting prior to aggregation, resident load and sort the table by the high cardinality dimension as the first step. Then resident load this table and run your group by as a second step.

The short version: use approach 2 as the default, unless your data is very simple.

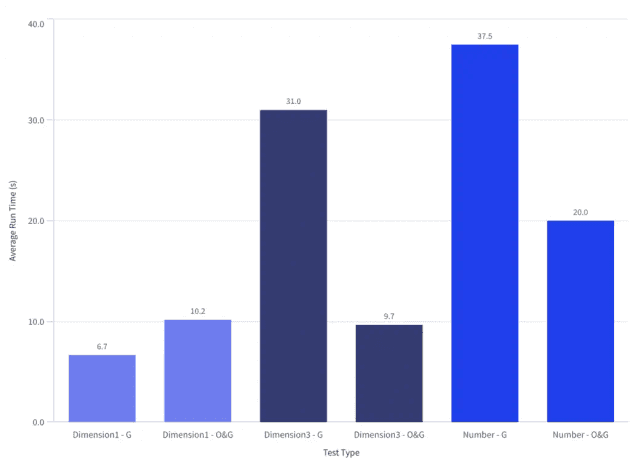

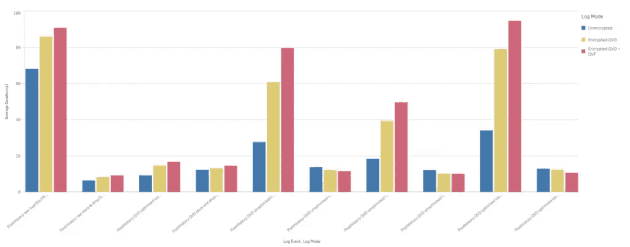

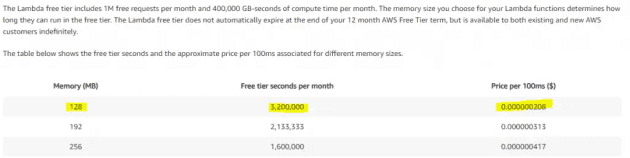

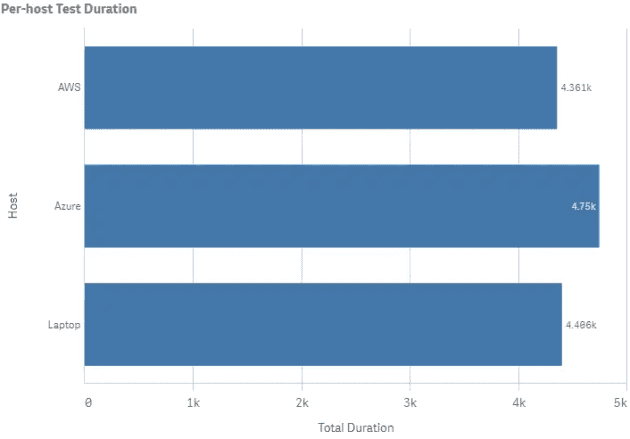

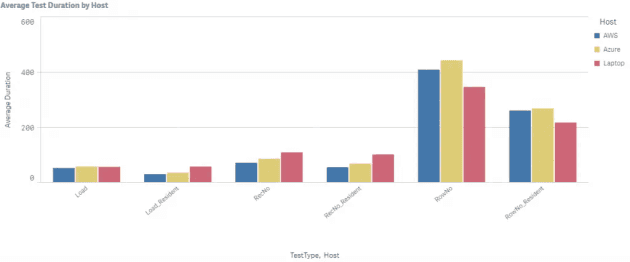

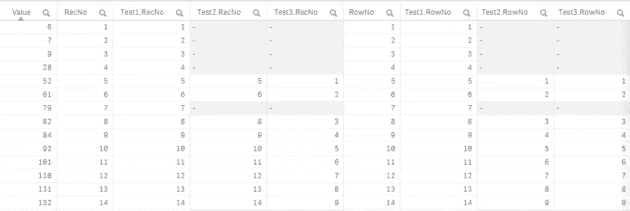

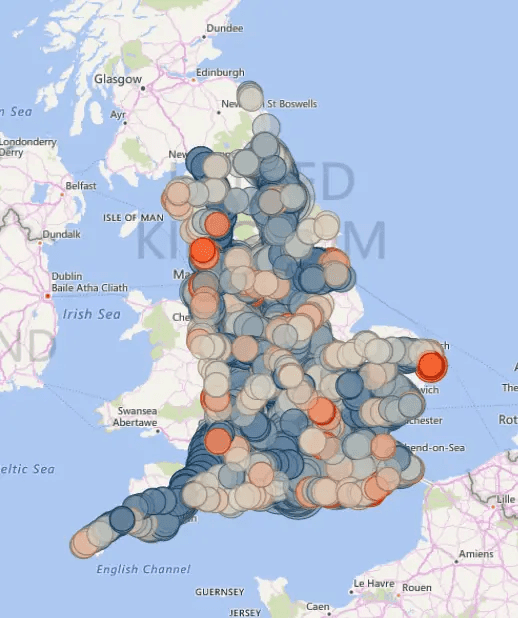

For Dimension1 (low cardinality), a direct group by (G) was fastest. For Dimension3 (high cardinality) and Number (low cardinality) an order by, then group by (O&G) was fastest by a large margin

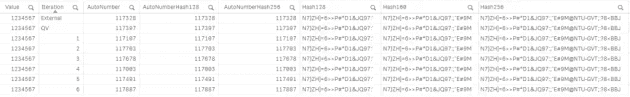

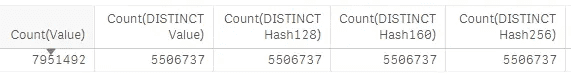

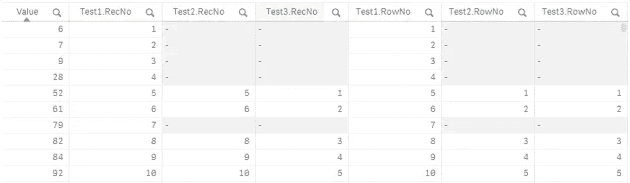

The Test

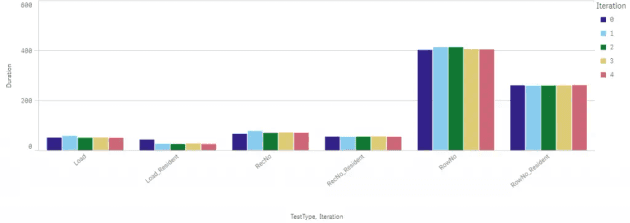

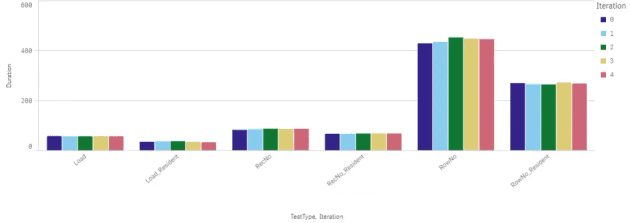

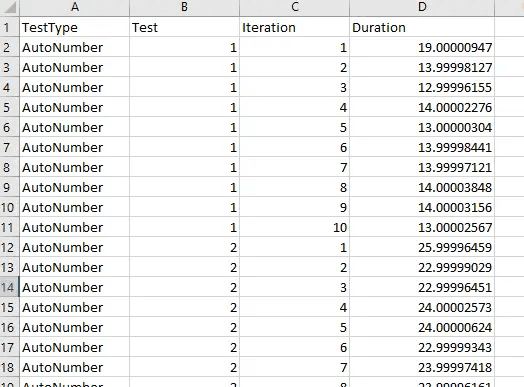

Using a 2 core 8GB RAM virtual machine in Azure, we loaded 5 million rows of randomly generated data from a QVD (which was then loaded using an optimised load). The test is run 5 times per test, with the average used for analysis.

This data came from the random data generator script posted earlier this year. The script used to run the test can be found on Github.

The aggregations were tested on Dimension1 (low cardinality), Dimension3 (high cardinality) and Number (low cardinality)

The tests below explores the statement that it can be significantly faster to load data, sort it, then group it (three steps) rather than just loading and grouping it (two steps).

Tests:

- "G" - Group by (load data from QVD, then group in resident load)

- "OG" - Order by, group by (load data from QVD, then sort in first resident load, then group in second resident load)

Test cases:

- Dimension1 - this is a single upper case letter field (26 possible values). The values are in a random order

- Dimension 3 - this is a four letter letter, both upper and lower case. The values are in a random order

- Number - this is an integer between 1 and 26, which increments to 26 before resetting to 1 and repeating the increment across the data set

The Results

Order by, then group by is fastest in more complex data sets

With the exception of the simple alphabetic field (Dimension1), it is significantly faster to first sort the data, then aggregate it when doing so on a single dimension field.

This means more script in your app - but a faster overall load.

Future questions

To explore at a later date:

- Was it the sort order or the data type that resulted in such a large difference between Dimension1 and Number, as both had 26 unique values?

- Where is the crossover in terms of dimension complexity between the O and O&G approaches?

- How does performance change when grouping by a number of dimensions rather than just one?

- How does peak RAM and CPU differ between the tests?

- Does a preceding load offer similar performance benefits (in which case you'd also benefit from less code)

Task Chaining in Qlik Sense Enterprise SaaS 15 Dec 2020 3:00 PM (4 years ago)

Today, QSE SaaS doesn't do task chaining. Instead you have two options:

- Use a scheduled reload task for every app, and try to make sure the start time for task 2 will always be later than task 1

- Use Qlik SaaS REST APIs to trigger the reload

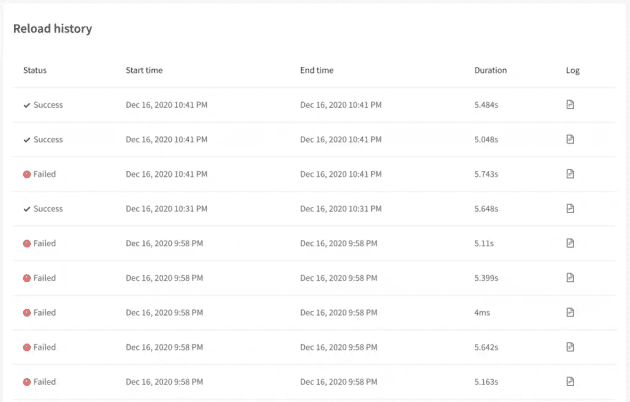

This post covers a simple approach (i.e. Qlik script only) for triggering reloads of downstream apps on completion of an app reload in a way that doesn't require much maintenance or any external systems or integrations.

With the APIs, we can reload as frequently as we wish - to some disastrous results. Be careful.

For those who haven't looked into the QCS APIs in the past, there's a lot of information on the Qlik.dev site; and specifically this video on the topic of task chaining with Qlik-CLI.

The theory

Using the reloads API, we need just one thing to trigger a reload - an app ID. So, all we need to do after a reload is make a call to this API to trigger the next app. This means we only need to put a scheduled reload task on the first app in the chain, with every following app triggered by the proceeding application.

To make this work, we need:

- A REST connection to the /reloads endpoint

- A data file connection to store the logs in

- A parent application (app 1 - this will be triggered by QSE SaaS and a scheduled task)

- A child application (app 2 - this will be triggered by the load script of app 1)

The reason for doing this in the load script of a normal app (and not as a separate "task manager" app) is because scheduling and running a series of task manager apps is tedious, and setting up a large number of these apps may not be supported by Qlik in the QCS environment.

Note: Fair warning, the reloads API is experimental and may change.

If your reloads suddenly stop working, it could be the API.

The REST connection

We are going to be using the /api/v1/reloads endpoint and hitting it with a POST request. To set this connection up, you'll need the following details:

- URL: https://

. .qlikcloud.com/api/v1/reloads - Type: POST

- Body: {"appId":"

"} - Header: Authorization: Bearer

The subroutine

The inputs and outputs of this sub are listed in the script. You should put this as the last script to execute in your load script.

Sub sTriggerReload(sub_appID,sub_connAPI,sub_connLog)

/*

This subroutine triggers the reload of a QCS application (directly, not using scheduled tasks)

INPUTS:

* sub_appID = the GUID for the app to be reloaded

* sub_connAPI = a REST data connection that can access the tenant APIs with appropriate privileges

* sub_connLog = a folder data connection for storing reload trigger log files (these will be stored as "ReloadLog_<AppID>_<ReloadID>.qvd")

OUTPUTS:

* Send a POST message the task API to trigger the relevant app reload

* Store a log file to record the reload trigger to assist with finding this event in audit logs if needed

REST CONNECTION CONFIG

* URL: https://<tenantname>.<region>.qlikcloud.com/api/v1/reloads

* Type: POST

* Body: {"appId":"<valid app GUID>"}

* Header: Authorization: Bearer <API key>

*/

// Connect to the REST connection

LIB CONNECT TO '$(sub_connAPI)';

LET sub_QueryBody = '{""appId"":""$(sub_appID)""}';

// Collect data from the response for logging

// Configure app ID for reload

RestConnectorMasterTable:

SQL SELECT

"id",

"appId",

"tenantId",

"userId",

"type",

"status",

"creationTime",

"__KEY_root"

FROM JSON (wrap on) "root" PK "__KEY_root"

WITH CONNECTION (BODY "$(sub_QueryBody)");

ReloadLog:

LOAD DISTINCT

[id] AS [Reload ID],

[appId] AS [Reload App ID],

[tenantId] AS [Reload Tenant ID],

[userId] AS [Reload User ID],

[type] AS [Reload Type],

[status] AS [Reload Status],

[creationTime] AS [Reload Creation Time],

DocumentName() AS [Reload Trigger App ID],

DocumentTitle() AS [Reload Trigger App Name]

RESIDENT RestConnectorMasterTable

WHERE NOT IsNull([__KEY_root]);

// Set variables to produce log filenames

LET sub_ReloadTime = Timestamp(Peek('Reload Creation Time',0),'YYYYMMDDhhmmss');

LET sub_ReloadID = Peek('Reload ID',0);

// Check to see if the reload request returned rows, and the variables carry data. If not, fail this reload

If (NoOfRows('ReloadLog') <> 1) OR ('$(sub_ReloadTime)' = '') OR ('$(sub_ReloadID)' = '') THEN

// Fail with an error for the log

Call Error('An unexpected number of rows was returned by the reloads API, or invalid data was found.');

END IF;

TRACE >>> Returned reload $(sub_ReloadID) at $(sub_ReloadTime);

// Store logs and clear model

STORE ReloadLog INTO [lib://$(sub_connLog)/ReloadLog_$(sub_appID)_$(sub_ReloadID)_$(sub_ReloadTime).qvd] (qvd);

DROP TABLE ReloadLog;

DROP TABLE RestConnectorMasterTable;

End Sub;

// Call - pass in the app ID, the REST connection name, the folder connection name

Call sTriggerReload('ab77b40d-4a30-46d9-9d2b-2943c6b82902','<rest connection name>','DataFiles');The latest version of this script can be found in this GitHub repo.

What's missing from this?

If you want to take this forward, you'll want a few things:

- Error handling - for when you get a weird response from the API

- An app to blend the log files this produces with the reload event logs to track duration and errors

- To store this script as a text file and load it as an include so it doesn't exist in a million different apps

- To consider whether you want to trigger reloads by app ID or by app and space name

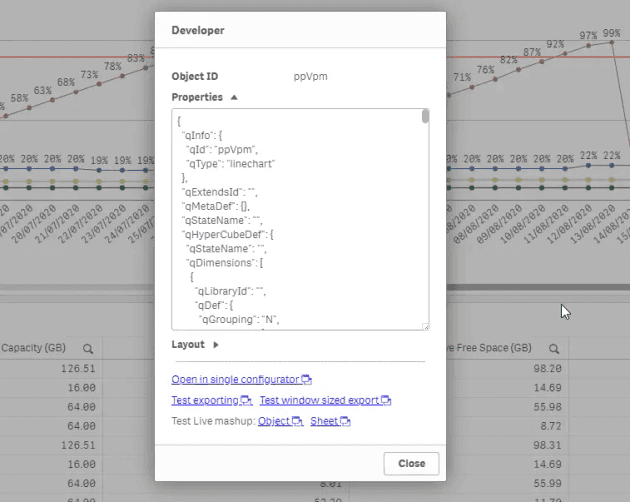

Qlik Sense Object Type Mapping 31 Aug 2020 4:00 PM (4 years ago)

When you're troubleshooting or diving into logs, it's useful to have a mapping of object types. The name of an object is defined by the developer of that object, so there tends to be little convention - other some basic syntax rules and that Qlik Sense only allows one instance of the name per site.

Each object definition references the object type (qType)

Knowing what each type means is useful when inventorying a site for upgrade or migration.

Here's a list put together at the time of writing. The latest version of the list is available on GitHub, and includes descriptions for each object.

| Object Type | Object Name (UI) | Object Origin |

| qlik-date-picker | Date picker | Qlik Dashboard Bundle |

| qlik-share-button | Share button | Qlik Dashboard Bundle |

| qlik-variable-input | Variable input | Qlik Dashboard Bundle |

| idevioanimator | GeoAnalytics Animator | Qlik GeoAnalytics |

| idevioarealayer | GeoAnalytics Area Layer | Qlik GeoAnalytics |

| ideviobubblelayer | GeoAnalytics Bubble Layer | Qlik GeoAnalytics |

| ideviochartlayer | GeoAnalytics Chart Layer | Qlik GeoAnalytics |

| ideviogeodatalayer | GeoAnalytics Geodata Layer | Qlik GeoAnalytics |

| idevioheatmaplayer | GeoAnalytics Heatmap Layer | Qlik GeoAnalytics |

| ideviolinelayer | GeoAnalytics Line Layer | Qlik GeoAnalytics |

| ideviomap | GeoAnalytics Map | Qlik GeoAnalytics |

| auto-chart | Auto Chart | Qlik Native |

| barchart | Bar chart | Qlik Native |

| boxplot | Box Plot | Qlik Native |

| bulletchart | Bullet chart | Qlik Native |

| action-button | Button | Qlik Native |

| combochart | Combo chart | Qlik Native |

| container | Container | Qlik Native |

| distributionplot | Distribution plot | Qlik Native |

| filterpane | Filter pane | Qlik Native |

| gauge | Gauge | Qlik Native |

| histogram | Histogram | Qlik Native |

| kpi | KPI | Qlik Native |

| linechart | Line chart | Qlik Native |

| map | Map | Qlik Native |

| mekkochart | Mekko chart | Qlik Native |

| piechart | Pie chart | Qlik Native |

| pivot-table | Pivot table | Qlik Native |

| scatterplot | Scatter plot | Qlik Native |

| table | Table | Qlik Native |

| text-image | Text & Image | Qlik Native |

| treemap | Treemap | Qlik Native |

| waterfallchart | Waterfall chart | Qlik Native |

| qlik-barplus-chart | Bar & area chart | Qlik Visualization Bundle |

| qlik-bullet-chart | Bullet chart | Qlik Visualization Bundle |

| qlik-funnel-chart-ext | Funnel chart | Qlik Visualization Bundle |

| qlik-heatmap-chart | Heatmap chart | Qlik Visualization Bundle |

| qlik-multi-kpi | Multi KPI | Qlik Visualization Bundle |

| qlik-network-chart | Network chart | Qlik Visualization Bundle |

| sn-org-chart | Org chart | Qlik Visualization Bundle |

| qlik-smart-pivot | P&L pivot | Qlik Visualization Bundle |

| qlik-radar-chart | Radar chart | Qlik Visualization Bundle |

| qlik-sankey-chart-ext | Sankey chart | Qlik Visualization Bundle |

| qlik-trellis-container | Trellis container | Qlik Visualization Bundle |

| qlik-variance-waterfall | Variance Waterfall | Qlik Visualization Bundle |

| qlik-word-cloud | Word cloud chart | Qlik Visualization Bundle |

| backgroundimg | Background Image | Unknown |

| variable | Variable | Unknown |

| qsSimpleKPI | Simple KPI | Unknown |

| qlik-venn | qlik-venn | Unknown |

| cl-finance-report | Vizlib Finance Report | Vizlib |

| VizlibActivityGauge | Vizlib Activity Gauge | Vizlib |

| VizlibAdvancedTextObject | Vizlib Advanced Text Object | Vizlib |

| VizlibBarChart | Vizlib Bar Chart | Vizlib |

| VizlibCalendar | Vizlib Calendar | Vizlib |

| cl-kpi | Vizlib Simple KPI | Vizlib |

| cl-horizontalselectionbar | Vizlib Selection Bar | Vizlib |

| cl-cards | Vizlib Cards | Vizlib |

| VizlibContainer | Vizlib Container | Vizlib |

| VizlibFilter | Vizlib Filter | Vizlib |

| VizlibHeatmap | Vizlib Heatmap | Vizlib |

| VizlibKPI | Vizlib KPI Designer | Vizlib |

| VizlibLineChart | Vizlib Line Chart | Vizlib |

| VizlibPieChart | Vizlib Pie Chart | Vizlib |

| VizlibPivotTable | Vizlib Pivot Table | Vizlib |

| VizlibSankeyChart | Vizlib Sankey Chart | Vizlib |

| vizlibstorytimeline | Vizlib Story Timeline | Vizlib |

| VizlibTable | Vizlib Table | Vizlib |

| VizlibVennDiagram | Vizlib Venn Diagram | Vizlib |

| VizlibGantt | Vizlib Gantt | Vizlib |

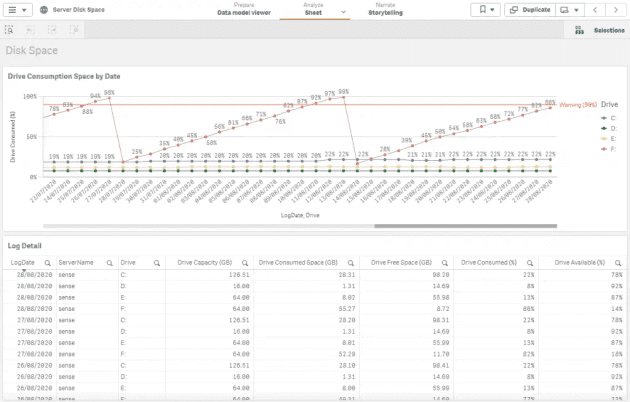

Qlik Sense app for disk space monitoring 27 Aug 2020 4:00 PM (4 years ago)

I previously posted a bat file that logs disk capacity and utilisation. I thought I'd share the QVF file that loads those simple logs.

Some simple visualisation of drive consumption by date

The file is available on GitHub here.

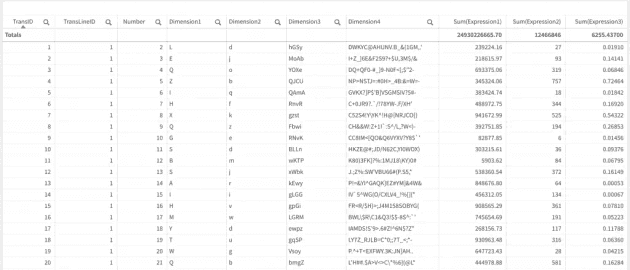

Generate random data in Qlik Sense & QlikView 28 Jun 2020 4:00 PM (4 years ago)

This simple snippet provides a randomised data set for testing purposes.

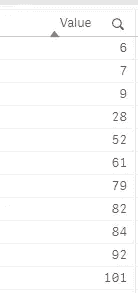

The Data

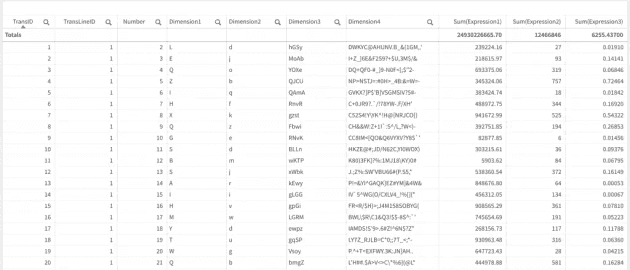

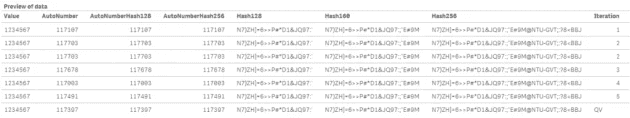

The field definitions, based on a data set of 5 million rows:

| Field Name | Unique Values | Description |

| TransID | 5m | Incrementing integer |

| TransLineID | 1 | Single integer |

| Number | Up to 26 | Integer between 1 and 26 |

| Dimension1 | Up to 26 | Random upper-case letter |

| Dimension2 | Up to 26 | Random lower-case letter |

| Dimension3 | Up to 5m | Random four letter string |

| Dimension4 | Up to 5m | Random string (hash128) |

| Expression1 | Up to 5m | Random value between 0 and 1000000 (2dp) |

| Expression2 | Up to ~1k | Random value between 0 and 1000 (0dp) |

| Expression3 | Up to ~100k | Random value between 0 and 1 (5dp) |

Sample based on using the script to generate 5 million rows

The Script

// Generate some random data

// This is roughly based off of the Qlik Ctrl+O+O default script, but with a bit more variety

SET vRecordCount = 50000;

Transactions:

Load

IterNo(). as TransLineID,

RecNo() as TransID,

mod(RecNo(),26)+1 as Number,

chr(Floor(26*Rand())+65) as Dimension1,

chr(Floor(26*Rand())+97) as Dimension2,

chr(Floor(26*Rand())+pick(round(rand())+1,65,97))

&chr(Floor(26*Rand())+pick(round(rand())+1,65,97))

&chr(Floor(26*Rand())+pick(round(rand())+1,65,97))

&chr(Floor(26*Rand())+pick(round(rand())+1,65,97)) as Dimension3,

Hash128(Rand()) as Dimension4,

round(1000000*Rand(),0.01) as Expression1,

Round(1000*Rand()*Rand()) as Expression2,

Round(Rand()*Rand()*Rand(),0.00001). as Expression3

Autogenerate $(vRecordCount);

// Add comments to describe each field

Comment Field Dimension1 With "Random upper-case letter";

Comment Field Dimension2 With "Random lower-case letter";

Comment Field Dimension3 With "Random four letter string";

Comment Field Dimension4 With "Random string (hash128)";

Comment Field Expression1 With "Random value between 0 and 1000000 (2dp)";

Comment Field Expression2 With "Random value between 0 and 1000 (0dp)";

Comment Field Expression3 With "Random value between 0 and 1 (5dp)";This snippet is also available on GitHub here.

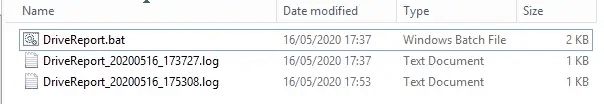

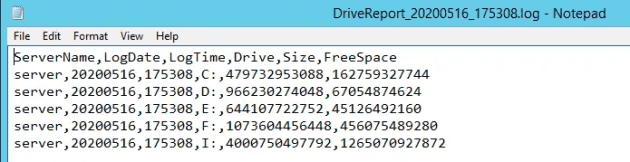

Windows Server Reporting - multi-server total and free space logging via batch script 15 May 2020 4:00 PM (4 years ago)

To help track disk space on a cluster, here's a simple batch script that works on Windows. It accepts a list of hostnames, and outputs the size and free space on each server to a log file.

This is a test server, aptly named "server". 5 drives in Windows Explorer.

The script is available on GitHub; the version at time of writing is here:

@echo off

:: Batch file to log out a local drive report to a .log file in the same directory

:: Log files are named based on the date and time

:: Specify which servers to attempt to return disk space for (delimit with spaces)

:: Enter hostname, and ensure the account running this script has local or domain admin rights

set SERVER_LIST=server

:: Set output date time (YYYYMMDD_hhmmss)

set LOG_TIMESTAMP=%date:~-4,4%%date:~-7,2%%date:~0,2%_%time:~0,2%%time:~3,2%%time:~6,2%

set LOG_DATE=%date:~-4,4%%date:~-7,2%%date:~0,2%

set LOG_TIME=%time:~0,2%%time:~3,2%%time:~6,2%

:: Specify output prefix - this uses an absolute path, if you prefer to use relative then ensure the scheduler includes a "start in" path

set LOG_LOCATION=C:\DriveReport\DriveReport_%LOG_TIMESTAMP%.log

:: Create empty output file

>nul copy nul %LOG_LOCATION%

:: Loop over each server to return stats and append to log file

echo ServerName,LogDate,LogTime,Drive,Size,FreeSpace>>%LOG_LOCATION%

for %%i in (%SERVER_LIST%) do (

for /f "tokens=1,2,3" %%a in ('wmic /node:"%%i" LogicalDisk Where DriveType^="3" Get DeviceID^,Size^,FreeSpace^|find ":"') do @echo %%i,%LOG_DATE%,%LOG_TIME%,%%a,%%c,%%b>>%LOG_LOCATION%

)This outputs one log file per run, containing a row per drive.

One .log file is produced per run of the batch script

Inside, there's a row per drive

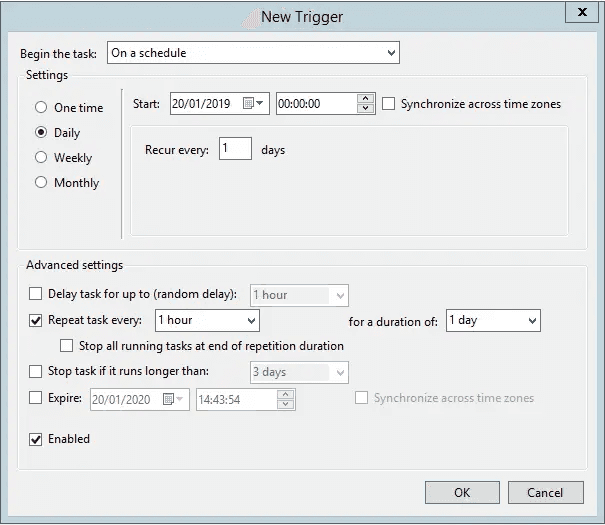

This can be scheduled using Windows Task Scheduler and has been tested on Windows 10, Windows Server 2012R2, Windows Server 2016.

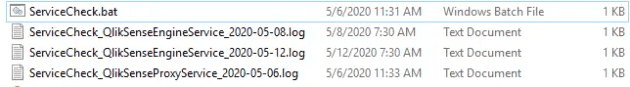

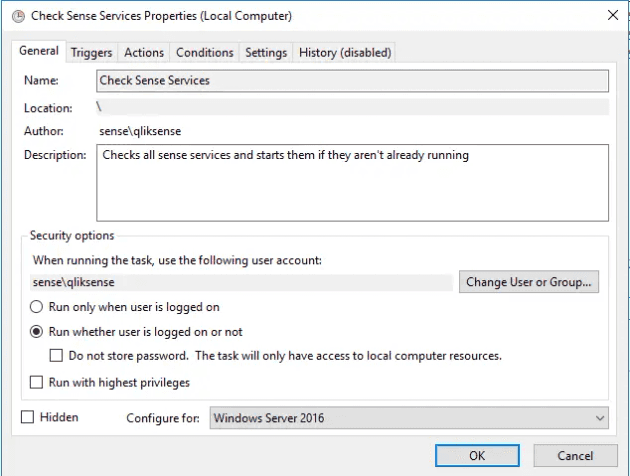

Check a Windows Service, if it's not running, start it via batch script 29 Apr 2020 4:00 PM (4 years ago)

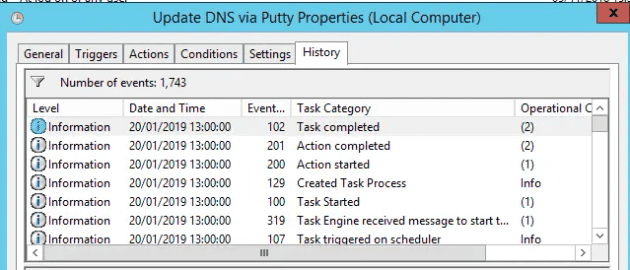

Following a system restart, an overnight shutdown or a system update, some Windows services don't always come up successfully. This script runs via Windows Task Scheduler and accepts one or more service names.

Log files are produced when a service is found to not be running (updated 16/05)

If the service isn't running, it attempts to start it - and produces a log file to capture the incident.

The script is available on GitHub; the version at time of writing is here:

@Echo Off

:: ServiceCheck.bat

:: Accepts a service name, if it's running, exits. If it's not running, attempts to start it and creates a log file.

:: Uplift to check and retry?

Set ServiceName=%~1

::Set ServiceName=QlikSenseProxyService

:: Get date in yyyyMMdd_HHmm format to use with file name.

FOR /f "usebackq" %%i IN (`PowerShell ^(Get-Date^).ToString^('yyyy-MM-dd'^)`) DO SET LogDate=%%i

SC queryex "%ServiceName%"|Find "STATE"|Find /v "RUNNING">Nul&&(

echo %ServiceName% not running

echo Start %ServiceName%

Net start "%ServiceName%">nul||(

Echo "%ServiceName%" wont start

exit /b 1

)

echo "%ServiceName%" started

:: Now log out to a file so we have some sort of history

echo ### Service [%ServiceName%] not running on %LogDate% & echo %Time% Attempting to start service.>>"%~dp0ServiceCheck_%ServiceName%_%LogDate%.log"

exit /b 0

)||(

:: All OK, let's just write to console and exit

echo "%ServiceName%" working

exit /b 0

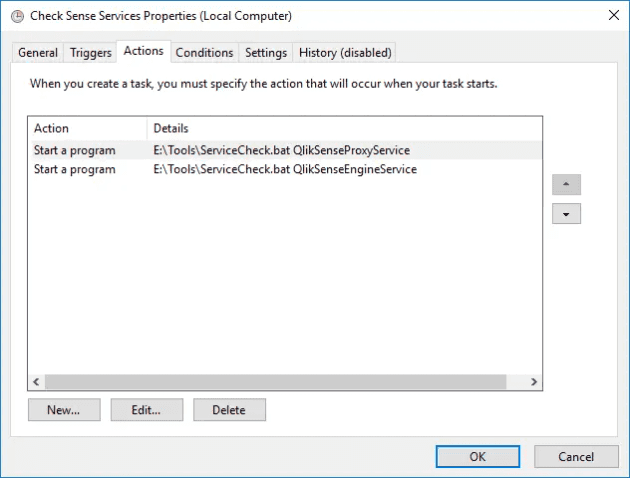

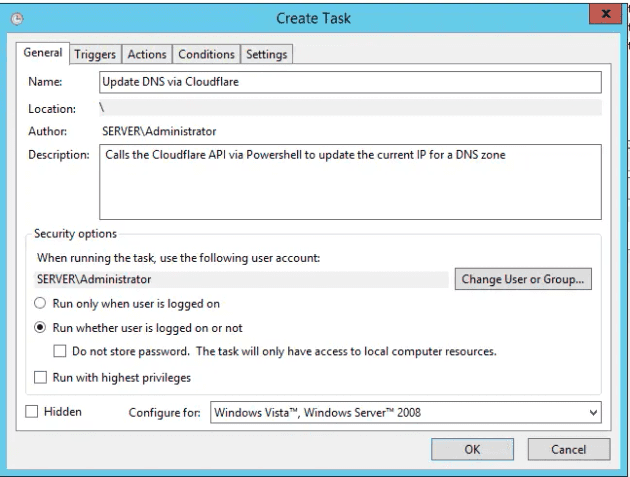

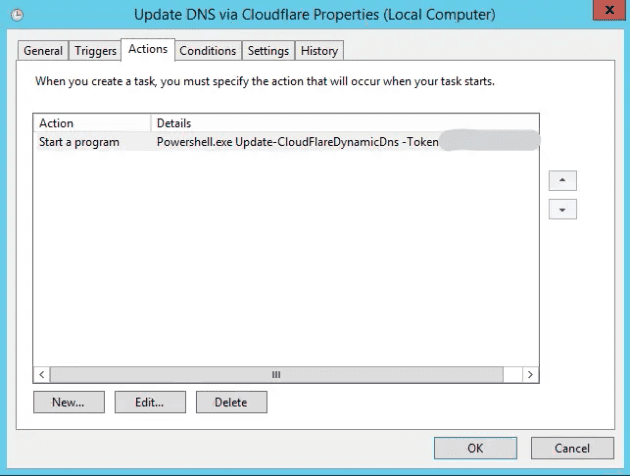

)Configure the task (this example checks two Qlik Sense services) to accept one or more actions.

Checked "Run whether user is logged on or not"

Add one action per service

An updated Version Control tab for Qlik Sense & QlikView 28 Apr 2020 4:00 PM (4 years ago)

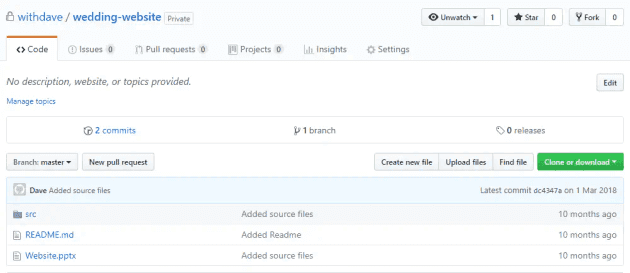

This updated snippet (see original post from 2018) is available on GitHub, and included in this post.

The main benefits of this script are that it requires a single row addition per log, with no manual increment of the version number, and the version control information can be surfaced by the engine either to the UI or via the APIs.

This assumes a HidePrefix of %.

/*

********************* INFORMATION *********************

CREATED BY: withdave

CREATED DATE: 19/03/2020

PURPOSE: RAW Extractor for Teradata T3 datamarts

********************* CHANGE LOG *********************

*/

// Update this version control table after each edit. Avoid using the ; delimiter in your change log

Island_VersionControl:

LOAD

*

INLINE [

%Ver_Version; %Ver_Date; %Ver_Author; %Ver_Change

1; 19/03/2020; withdave; Initial version

2; 21/04/2020; withdave; Changed store file names and added mapping for region from contact

3; 28/04/2020; withdave; Added transformed contact file

] (delimiter is ';');

// Do not edit the section below as this loads and sets the version variable in the app using the table above

// Identify the maximum version

Temp_Version:

LOAD

Max(%Ver_Version) AS Temp_Max_Version

RESIDENT Island_VersionControl;

// Set the version variable

LET vVersion = Peek('Temp_Max_Version',0,'Temp_Version');

// Drop the temporary table

DROP TABLE Temp_Version;Recursive QVD index script snippet for Qlik Sense & QlikView 15 Feb 2020 3:00 PM (5 years ago)

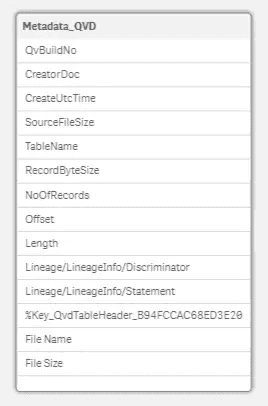

This simple snippet works with Qlik Sense and QlikView 12+ to recursively collect metadata from QVD files.

The simple table produced includes only basic QVD metadata

The snippet is available below, or the latest version is on GitHub.

// Sub to recursively load QVD file metadata from a directory

SUB sLoadQVDMetadata(vSub_Path)

TRACE >> Loading files in path [$(vSub_Path)].;

// Iterate over each QVD file in the directory and load metadata

// Use backslash for compatibility with QlikView 12

FOR EACH vSub_File in FileList('$(vSub_Path)\*.qvd')

// For use with QlikView 12, comment out the two lineage rows

Metadata_QVD:

LOAD

QvBuildNo,

CreatorDoc,

CreateUtcTime,

SourceFileSize,

"TableName",

RecordByteSize,

NoOfRecords,

Offset,

"Length",

"Lineage/LineageInfo/Discriminator",

"Lineage/LineageInfo/Statement",

%Key_QvdTableHeader_B94FCCAC68ED3E20,

FileName() AS [File Name],

FileSize() AS [File Size]

FROM [$(vSub_File)]

(XmlSimple, table is [QvdTableHeader]);

// Set a count and print to console

LET vLoad_Rows = NoOfRows('Metadata_QVD');

TRACE >>> Loaded $(vLoad_Rows) rows, last file found: [$(vSub_File)].;

NEXT vSub_File;

// Now recursively call the function for each directory found in this path

// Use backslash for compatibility with QlikView 12

FOR EACH vSub_Directory in DirList('$(vSub_Path)\*')

// Resursively call sub

CALL sLoadQVDMetadata('$(vSub_Directory)');

NEXT vSub_Directory;

END SUB;

// Qlik Sense - i.e. lib, do not include trailing slash

Call sLoadQVDMetadata('lib://Dir_QlikFiles');

// QlikView - i.e. path (do not include trailing slash)

// Call sLoadQVDMetadata('D:\QlikFiles');Qlik Sense Release Version & Support List 29 Jan 2020 3:00 PM (5 years ago)

As of February 2022, the information shown below is now available on the new Qlik download site at https://community.qlik.com/t5/Downloads/tkb-p/Downloads, and the releases can also be found directly on GitHub at https://github.com/qlik-download/qlik-sense-server/releases

Qlik Sense log files usually contain a reference to a build or engine number, and I've not yet found a complete list where you can see all version numbers at the same time - so I've created one.

This list contains the current (at time of writing) list of Qlik Sense external version names to build number, release dates and end of support dates.

For the latest version of this list (and in CSV format), visit https://github.com/withdave/qlik-releases

Qlik Sense Release List

| Major Version | Minor Version | Build Number | Release date | End of support |

|---|---|---|---|---|

| Qlik Sense February 2022 | Patch 1 | 14.54.4 | 23/02/2022 | As per initial release |

| Qlik Sense February 2022 | Initial release | 14.54.2 | 15/02/2022 | |

| Qlik Sense November 2021 | Patch 4 | 14.44.9 | 27/01/2022 | As per initial release |

| Qlik Sense November 2021 | Patch 3 | 14.44.8 | 05/01/2022 | As per initial release |

| Qlik Sense November 2021 | Patch 2 | 14.44.7 | 17/12/2021 | As per initial release |

| Qlik Sense November 2021 | Patch 1 | 14.44.6 | 06/12/2021 | As per initial release |

| Qlik Sense November 2021 | Initial release | 14.44.5 | 08/11/2021 | 08/11/2023 |

| Qlik Sense August 2021 | Patch 8 | 14.28.15 | 23/02/2022 | As per initial release |

| Qlik Sense August 2021 | Patch 7 | 14.28.14 | 27/01/2022 | As per initial release |

| Qlik Sense August 2021 | Patch 6 | 14.28.13 | 05/01/2022 | As per initial release |

| Qlik Sense August 2021 | Patch 5 | 14.28.12 | As per initial release | |

| Qlik Sense August 2021 | Patch 4 | 14.28.10 | As per initial release | |

| Qlik Sense August 2021 | Patch 3 | 14.28.9 | As per initial release | |

| Qlik Sense August 2021 | Patch 2 | 14.28.7 | As per initial release | |

| Qlik Sense August 2021 | Patch 1 | 14.28.5 | As per initial release | |

| Qlik Sense August 2021 | Initial release | 14.28.3 | 23/08/2021 | 23/08/2023 |

| Qlik Sense May 2021 | Patch 12 | 14.20.20 | 27/01/2022 | As per initial release |

| Qlik Sense May 2021 | Patch 11 | 14.20.19 | 05/01/2022 | As per initial release |

| Qlik Sense May 2021 | Patch 10 | 14.20.18 | As per initial release | |

| Qlik Sense May 2021 | Patch 9 | 14.20.17 | As per initial release | |

| Qlik Sense May 2021 | Patch 8 | 14.20.16 | As per initial release | |

| Qlik Sense May 2021 | Patch 7 | 14.20.15 | As per initial release | |

| Qlik Sense May 2021 | Patch 6 | 14.20.14 | As per initial release | |

| Qlik Sense May 2021 | Patch 5 | 14.20.13 | As per initial release | |

| Qlik Sense May 2021 | Patch 4 | 14.20.10 | As per initial release | |

| Qlik Sense May 2021 | Patch 3 | 14.20.9 | As per initial release | |

| Qlik Sense May 2021 | Patch 2 | 14.20.8 | As per initial release | |

| Qlik Sense May 2021 | Patch 1 | 14.20.6 | As per initial release | |

| Qlik Sense May 2021 | Initial release | 14.20.5 | 10/05/2021 | 10/05/2023 |

| Qlik Sense February 2021 | Patch 16 | 14.5.30 | As per initial release | |

| Qlik Sense February 2021 | Patch 15 | 14.5.29 | As per initial release | |

| Qlik Sense February 2021 | Patch 14 | 14.5.27 | As per initial release | |

| Qlik Sense February 2021 | Patch 13 | 14.5.26 | As per initial release | |

| Qlik Sense February 2021 | Patch 12 | 14.5.25 | As per initial release | |

| Qlik Sense February 2021 | Patch 11 | 14.5.24 | As per initial release | |

| Qlik Sense February 2021 | Patch 10 | 14.5.22 | As per initial release | |

| Qlik Sense February 2021 | Patch 9 | 14.5.21 | As per initial release | |

| Qlik Sense February 2021 | Patch 8 | 14.5.20 | As per initial release | |

| Qlik Sense February 2021 | Patch 7 | 14.5.19 | As per initial release | |

| Qlik Sense February 2021 | Patch 6 | 14.5.18 | As per initial release | |

| Qlik Sense February 2021 | Patch 5 | 14.5.16 | As per initial release | |

| Qlik Sense February 2021 | Patch 4 | 14.5.10 | As per initial release | |

| Qlik Sense February 2021 | Patch 3 | 14.5.9 | As per initial release | |

| Qlik Sense February 2021 | Patch 2 | 14.5.7 | As per initial release | |

| Qlik Sense February 2021 | Patch 1 | 14.5.6 | As per initial release | |

| Qlik Sense February 2021 | Initial release | 14.5.4 | 09/02/2021 | 09/02/2023 |

| Qlik Sense November 2020 | Patch 19 | 13.102.27 | As per initial release | |

| Qlik Sense November 2020 | Patch 18 | 13.102.26 | As per initial release | |

| Qlik Sense November 2020 | Patch 17 | 13.102.25 | As per initial release | |

| Qlik Sense November 2020 | Patch 16 | 13.102.24 | As per initial release | |

| Qlik Sense November 2020 | Patch 15 | 13.102.23 | As per initial release | |

| Qlik Sense November 2020 | Patch 14 | 13.102.22 | As per initial release | |

| Qlik Sense November 2020 | Patch 13 | 13.102.21 | As per initial release | |

| Qlik Sense November 2020 | Patch 12 | 13.102.20 | As per initial release | |

| Qlik Sense November 2020 | Patch 11 | 13.102.19 | As per initial release | |

| Qlik Sense November 2020 | Patch 10 | 13.102.17 | As per initial release | |

| Qlik Sense November 2020 | Patch 9 | 13.102.16 | As per initial release | |

| Qlik Sense November 2020 | Patch 8 | 13.102.15 | As per initial release | |

| Qlik Sense November 2020 | Patch 7 | 13.102.14 | As per initial release | |

| Qlik Sense November 2020 | Patch 6 | 13.102.13 | As per initial release | |

| Qlik Sense November 2020 | Patch 5 | 13.102.12 | As per initial release | |

| Qlik Sense November 2020 | Patch 4 | 13.102.11 | As per initial release | |

| Qlik Sense November 2020 | Patch 3 | 13.102.10 | As per initial release | |

| Qlik Sense November 2020 | Patch 2 | 13.102.8 | As per initial release | |

| Qlik Sense November 2020 | Patch 1 | 13.102.6 | As per initial release | |

| Qlik Sense November 2020 | Initial release | 13.102.5 | 10/11/2020 | 10/11/2022 |

| Qlik Sense September 2020 | Patch 15 | 13.95.23 | As per initial release | |

| Qlik Sense September 2020 | Patch 14 | 13.95.22 | As per initial release | |

| Qlik Sense September 2020 | Patch 13 | 13.95.21 | As per initial release | |

| Qlik Sense September 2020 | Patch 12 | 13.95.19 | As per initial release | |

| Qlik Sense September 2020 | Patch 11 | 13.95.15 | As per initial release | |

| Qlik Sense September 2020 | Patch 10 | 13.95.14 | As per initial release | |

| Qlik Sense September 2020 | Patch 9 | 13.95.13 | As per initial release | |

| Qlik Sense September 2020 | Patch 8 | 13.95.12 | As per initial release | |

| Qlik Sense September 2020 | Patch 7 | 13.95.10 | As per initial release | |

| Qlik Sense September 2020 | Patch 6 | 13.95.9 | As per initial release | |

| Qlik Sense September 2020 | Patch 5 | 13.95.8 | As per initial release | |

| Qlik Sense September 2020 | Patch 4 | 13.95.7 | As per initial release | |

| Qlik Sense September 2020 | Patch 3 | 13.95.6 | As per initial release | |

| Qlik Sense September 2020 | Patch 2 | 13.95.5 | As per initial release | |

| Qlik Sense September 2020 | Patch 1 | 13.95.4 | As per initial release | |

| Qlik Sense September 2020 | Initial release | 13.95.3 | 09/09/2020 | 09/09/2022 |

| Qlik Sense June 2020 | Patch 18 | 13.82.29 | As per initial release | |

| Qlik Sense June 2020 | Patch 17 | 13.82.28 | As per initial release | |

| Qlik Sense June 2020 | Patch 16 | 13.82.26 | As per initial release | |

| Qlik Sense June 2020 | Patch 15 | 13.82.23 | As per initial release | |

| Qlik Sense June 2020 | Patch 14 | 13.82.22 | As per initial release | |

| Qlik Sense June 2020 | Patch 13 | 13.82.21 | As per initial release | |

| Qlik Sense June 2020 | Patch 12 | 13.82.20 | As per initial release | |

| Qlik Sense June 2020 | Patch 11 | 13.82.19 | As per initial release | |

| Qlik Sense June 2020 | Patch 10 | 13.82.18 | As per initial release | |

| Qlik Sense June 2020 | Patch 9 | 13.82.17 | As per initial release | |

| Qlik Sense June 2020 | Patch 8 | 13.82.16 | As per initial release | |

| Qlik Sense June 2020 | Patch 7 | 13.82.15 | As per initial release | |

| Qlik Sense June 2020 | Patch 6 | 13.82.14 | As per initial release | |

| Qlik Sense June 2020 | Patch 5 | 13.82.13 | As per initial release | |

| Qlik Sense June 2020 | Patch 4 | 13.82.12 | As per initial release | |

| Qlik Sense June 2020 | Patch 3 | 13.82.11 | As per initial release | |

| Qlik Sense June 2020 | Patch 2 | 13.82.8 | As per initial release | |

| Qlik Sense June 2020 | Patch 1 | 13.82.6 | As per initial release | |

| Qlik Sense June 2020 | Initial release | 13.82.4 | 10/06/2020 | 10/06/2022 |

| Qlik Sense April 2020 | Patch 17 | 13.72.26 | As per initial release | |

| Qlik Sense April 2020 | Patch 16 | 13.72.25 | As per initial release | |

| Qlik Sense April 2020 | Patch 15 | 13.72.22 | As per initial release | |

| Qlik Sense April 2020 | Patch 14 | 13.72.21 | As per initial release | |

| Qlik Sense April 2020 | Patch 13 | 13.72.20 | As per initial release | |

| Qlik Sense April 2020 | Patch 12 | 13.72.19 | As per initial release | |

| Qlik Sense April 2020 | Patch 11 | 13.72.18 | As per initial release | |

| Qlik Sense April 2020 | Patch 10 | 13.72.17 | As per initial release | |

| Qlik Sense April 2020 | Patch 9 | 13.72.16 | As per initial release | |

| Qlik Sense April 2020 | Patch 8 | 13.72.15 | As per initial release | |

| Qlik Sense April 2020 | Patch 7 | 13.72.14 | As per initial release | |

| Qlik Sense April 2020 | Patch 6 | 13.72.13 | As per initial release | |

| Qlik Sense April 2020 | Patch 5 | 13.72.12 | As per initial release | |

| Qlik Sense April 2020 | Patch 4 | 13.72.9 | As per initial release | |

| Qlik Sense April 2020 | Patch 3 | 13.72.8 | 03/06/2020 | As per initial release |

| Qlik Sense April 2020 | Patch 2 | 13.72.5 | 20/05/2020 | As per initial release |

| Qlik Sense April 2020 | Patch 1 | 13.72.4 | 06/05/2020 | As per initial release |

| Qlik Sense April 2020 | Initial release | 13.72.3 | 14/04/2020 | 14/04/2022 |

| Qlik Sense February 2020 | Patch 13 | 13.62.26 | As per initial release | |

| Qlik Sense February 2020 | Patch 12 | 13.62.25 | As per initial release | |

| Qlik Sense February 2020 | Patch 11 | 13.62.22 | As per initial release | |

| Qlik Sense February 2020 | Patch 10 | 13.62.21 | As per initial release | |

| Qlik Sense February 2020 | Patch 9 | 13.62.20 | As per initial release | |

| Qlik Sense February 2020 | Patch 8 | 13.62.18 | As per initial release | |

| Qlik Sense February 2020 | Patch 7 | 13.62.17 | As per initial release | |

| Qlik Sense February 2020 | Patch 6 | 13.62.16 | As per initial release | |

| Qlik Sense February 2020 | Patch 5 | 13.62.14 | 03/06/2020 | As per initial release |

| Qlik Sense February 2020 | Patch 4 | 13.62.12 | 20/05/2020 | As per initial release |

| Qlik Sense February 2020 | Patch 3 | 13.62.11 | 06/05/2020 | As per initial release |

| Qlik Sense February 2020 | Patch 2 | 13.62.9 | 08/04/2020 | As per initial release |

| Qlik Sense February 2020 | Patch 1 | 13.62.7 | 11/03/2020 | As per initial release |

| Qlik Sense February 2020 | Initial release | 13.62.6 | 25/02/2020 | 25/02/2022 |

| Qlik Sense November 2019 | Patch 18 | 13.51.31 | As per initial release | |

| Qlik Sense November 2019 | Patch 17 | 13.51.30 | As per initial release | |

| Qlik Sense November 2019 | Patch 16 | 13.51.27 | As per initial release | |

| Qlik Sense November 2019 | Patch 15 | 13.51.26 | As per initial release | |

| Qlik Sense November 2019 | Patch 14 | 13.51.25 | As per initial release | |

| Qlik Sense November 2019 | Patch 13 | 13.51.22 | As per initial release | |

| Qlik Sense November 2019 | Patch 12 | 13.51.21 | As per initial release | |

| Qlik Sense November 2019 | Patch 11 | 13.51.19 | 20/05/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 10 | 13.51.18 | 06/05/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 9 | 13.51.15 | 22/04/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 8 | 13.51.14 | 08/04/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 7 | 13.51.13 | 25/03/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 6 | 13.51.12 | 04/03/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 5 | 13.51.10 | 12/02/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 4 | 13.51.8 | 29/01/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 3 | 13.51.7 | 15/01/2020 | As per initial release |

| Qlik Sense November 2019 | Patch 2 | 13.51.6 | 18/12/2019 | As per initial release |

| Qlik Sense November 2019 | Patch 1 | 13.51.5 | 04/12/2019 | As per initial release |

| Qlik Sense November 2019 | Initial release | 13.51.4 | 11/11/2019 | 11/11/2021 |

| Qlik Sense September 2019 | Patch 12 | 13.42.19 | As per initial release | |

| Qlik Sense September 2019 | Patch 11 | 13.42.18 | 03/06/2020 | As per initial release |

| Qlik Sense September 2019 | Patch 10 | 13.42.17 | 06/05/2020 | As per initial release |

| Qlik Sense September 2019 | Patch 9 | 13.42.16 | 08/04/2020 | As per initial release |

| Qlik Sense September 2019 | Patch 8 | 13.42.15 | 25/03/2020 | As per initial release |

| Qlik Sense September 2019 | Patch 7 | 13.42.14 | 04/03/2020 | As per initial release |

| Qlik Sense September 2019 | Patch 6 | 13.42.12 | 12/02/2020 | As per initial release |

| Qlik Sense September 2019 | Patch 5 | 13.42.10 | 29/01/2020 | As per initial release |

| Qlik Sense September 2019 | Patch 4 | 13.42.9 | 15/01/2020 | As per initial release |

| Qlik Sense September 2019 | Patch 3 | 13.42.8 | 18/12/2019 | As per initial release |

| Qlik Sense September 2019 | Patch 2 | 13.42.6 | 04/12/2019 | As per initial release |

| Qlik Sense September 2019 | Patch 1 | 13.42.5 | 08/11/2019 | As per initial release |

| Qlik Sense September 2019 | Initial release | 13.42.1 | 30/09/2019 | 30/09/2021 |

| Qlik Sense June 2019 | Patch 13 | 13.32.19 | 08/04/2020 | As per initial release |

| Qlik Sense June 2019 | Patch 12 | 13.32.18 | 25/03/2020 | As per initial release |

| Qlik Sense June 2019 | Patch 11 | 13.32.16 | 05/03/2020 | As per initial release |

| Qlik Sense June 2019 | Patch 10 | 13.32.15 | 12/02/2020 | As per initial release |

| Qlik Sense June 2019 | Patch 9 | 13.32.12 | 29/01/2020 | As per initial release |

| Qlik Sense June 2019 | Patch 8 | 13.32.11 | 15/01/2020 | As per initial release |

| Qlik Sense June 2019 | Patch 7 | 13.32.10 | 18/12/2019 | As per initial release |

| Qlik Sense June 2019 | Patch 6 | 13.32.9 | 04/12/2019 | As per initial release |

| Qlik Sense June 2019 | Patch 5 | 13.32.8 | 20/11/2019 | As per initial release |

| Qlik Sense June 2019 | Patch 4 | 13.32.7 | 06/11/2019 | As per initial release |

| Qlik Sense June 2019 | Patch 3 | 13.32.6 | 11/10/2019 | As per initial release |

| Qlik Sense June 2019 | Patch 2 | 13.32.4 | 10/09/2019 | As per initial release |

| Qlik Sense June 2019 | Patch 1 | 13.32.3 | 29/08/2019 | As per initial release |

| Qlik Sense June 2019 | Initial release | 13.32.2 | 28/06/2019 | 28/06/2021 |

| Qlik Sense April 2019 | Patch 9 | 13.21.20 | 25/03/2020 | As per initial release |

| Qlik Sense April 2019 | Patch 8 | 13.21.19 | 05/03/2020 | As per initial release |

| Qlik Sense April 2019 | Patch 7 | 13.21.18 | 12/02/2020 | As per initial release |

| Qlik Sense April 2019 | Patch 6 | 13.21.14 | 15/01/2020 | As per initial release |

| Qlik Sense April 2019 | Patch 5 | 13.21.13 | 04/12/2019 | As per initial release |

| Qlik Sense April 2019 | Patch 4 | 13.21.12 | 20/11/2019 | As per initial release |

| Qlik Sense April 2019 | Patch 3 | 13.21.10 | 30/08/2019 | As per initial release |

| Qlik Sense April 2019 | Patch 2 | 13.21.9 | 12/07/2019 | As per initial release |

| Qlik Sense April 2019 | Patch 1 | 13.21.2 | 14/05/2019 | As per initial release |

| Qlik Sense April 2019 | Initial release | 13.21.1 | 25/04/2019 | 25/04/2021 |

| Qlik Sense February 2019 | Patch 9 | 13.9.20 | 25/03/2020 | As per initial release |

| Qlik Sense February 2019 | Patch 8 | 13.9.19 | 04/03/2020 | As per initial release |

| Qlik Sense February 2019 | Patch 7 | 13.9.18 | 12/02/2020 | As per initial release |

| Qlik Sense February 2019 | Patch 6 | 13.9.16 | 18/12/2019 | As per initial release |

| Qlik Sense February 2019 | Patch 5 | 13.9.14 | 20/11/2019 | As per initial release |

| Qlik Sense February 2019 | Patch 4 | 13.9.8 | 09/07/2019 | As per initial release |

| Qlik Sense February 2019 | Patch 3 | 13.9.6 | 23/05/2019 | As per initial release |

| Qlik Sense February 2019 | Patch 2 | 13.9.3 | 16/04/2019 | As per initial release |

| Qlik Sense February 2019 | Patch 1 | 13.9.2 | 26/03/2019 | As per initial release |

| Qlik Sense February 2019 | Initial release | 13.9.1 | 12/02/2019 | 12/02/2021 |

| Qlik Sense November 2018 | Patch 8 | 12.44.17 | 04/12/2019 | As per initial release |

| Qlik Sense November 2018 | Patch 7 | 12.44.16 | 06/11/2019 | As per initial release |

| Qlik Sense November 2018 | Patch 6 | 12.44.11 | 27/06/2019 | As per initial release |

| Qlik Sense November 2018 | Patch 5 | 12.44.9 | 23/05/2019 | As per initial release |

| Qlik Sense November 2018 | Patch 4 | 12.44.7 | 16/04/2019 | As per initial release |

| Qlik Sense November 2018 | Patch 3 | 12.44.5 | 28/02/2019 | As per initial release |

| Qlik Sense November 2018 | Patch 2 | 12.44.3 | 13/12/2018 | As per initial release |

| Qlik Sense November 2018 | Patch 1 | 12.44.2 | 15/11/2018 | As per initial release |

| Qlik Sense November 2018 | Initial release | 12.44.1 | 13/11/2018 | 12/11/2020 |

| Qlik Sense September 2018 | Patch 4 | 12.36.10 | 16/04/2019 | As per initial release |

| Qlik Sense September 2018 | Patch 3 | 12.36.9 | 18/03/2019 | As per initial release |

| Qlik Sense September 2018 | Patch 2 | 12.36.6 | 12/11/2018 | As per initial release |

| Qlik Sense September 2018 | Patch 1 | 12.36.3 | 16/10/2018 | As per initial release |

| Qlik Sense September 2018 | Initial release | 12.36.1 | 11/09/2018 | 11/09/2020 |

| Qlik Sense June 2018 | Patch 3 | 12.26.9 | 16/04/2019 | As per initial release |

| Qlik Sense June 2018 | Patch 2 | 12.26.4 | 12/11/2018 | As per initial release |

| Qlik Sense June 2018 | Patch 1 | 12.26.3 | 30/08/2018 | As per initial release |

| Qlik Sense June 2018 | Initial release | 12.26.1 | 26/06/2018 | 26/06/2020 |

| Qlik Sense April 2018 | Patch 3 | 12.16.7 | 16/04/2019 | As per initial release |

| Qlik Sense April 2018 | Patch 2 | 12.16.4 | 21/11/2018 | As per initial release |

| Qlik Sense April 2018 | Patch 1 | 12.16.3 | 20/06/2018 | As per initial release |

| Qlik Sense April 2018 | Initial release | 12.16.2 | 19/04/2018 | 19/04/2020 |

| Qlik Sense February 2018 | Patch 4 | 12.5.10 | 16/04/2019 | As per initial release |

| Qlik Sense February 2018 | Patch 3 | 12.5.8 | 29/10/2018 | As per initial release |

| Qlik Sense February 2018 | Patch 2 | 12.5.5 | 05/06/2018 | As per initial release |

| Qlik Sense February 2018 | Patch 1 | 12.5.3 | 27/02/2018 | As per initial release |

| Qlik Sense February 2018 | Initial release | 12.5.2 | 13/02/2018 | 13/02/2020 |

| Qlik Sense November 2017 | Patch 4 | 11.24.14 | 29/05/2019 | As per initial release |

| Qlik Sense November 2017 | Patch 3 | 11.24.5 | 13/02/2018 | As per initial release |

| Qlik Sense November 2017 | Patch 2 | 11.24.4 | 18/01/2018 | As per initial release |

| Qlik Sense November 2017 | Patch 1 | 11.24.3 | 01/12/2017 | As per initial release |

| Qlik Sense November 2017 | Initial release | 11.24.1 | 14/11/2017 | 14/11/2019 |

| Qlik Sense September 2017 | Patch 4 | 11.14.8 | 19/09/2017 | As per initial release |

| Qlik Sense September 2017 | Patch 3 | 11.14.7 | 15/02/2018 | As per initial release |

| Qlik Sense September 2017 | Patch 2 | 11.14.5 | 08/12/2017 | As per initial release |

| Qlik Sense September 2017 | Patch 1 | 11.14.4 | 31/10/2017 | As per initial release |

| Qlik Sense September 2017 | Initial release | 11.14.3 | 19/09/2017 | 19/09/2019 |

| Qlik Sense June 2017 | Patch 3 | 11.11.4 | 17/11/2017 | As per initial release |

| Qlik Sense June 2017 | Patch 2 | 11.11.3 | 28/08/2017 | As per initial release |

| Qlik Sense June 2017 | Patch 1 | 11.11.2 | 25/07/2017 | As per initial release |

| Qlik Sense June 2017 | Initial release | 11.11.1 | 29/06/2017 | 29/06/2019 |

QVD read/write performance comparison in Qlik Sense Enterprise with QVD and QVF encryption 30 Dec 2019 3:00 PM (5 years ago)

Following on my previous post, I had a look at the performance impact of enabling QVD and QVF encryption in Qlik Sense.

In this test, I'm using Qlik Sense Enterprise November 2019 release on an Azure B4ms (4 vCPU, 16GB RAM) VM running Windows Server 2019. Qlik Sense encryption settings were left at default.

A sneak peak of the results for the largest data set tested

The questions

I'll prepare a follow up post running through the questions and findings, this post summarises the test structure and high level findings.

The tests & source data

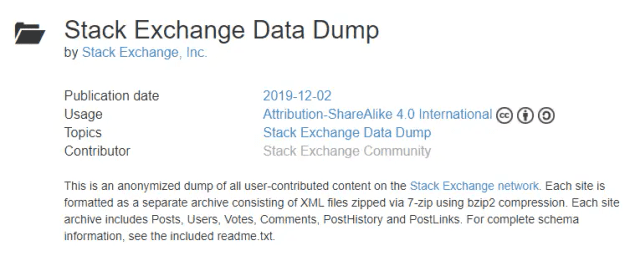

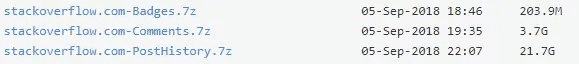

The data I'm loading is one of the freely available data sets on archive.org from StackExchange (in this case, a 618MB serverfault 7z archive).

Stack Exchange makes a huge amount of anonymized data available via Archive.org

Uncompressed, it's 3.13GB, or 2.5GB for just the XML files I'm running tests against.

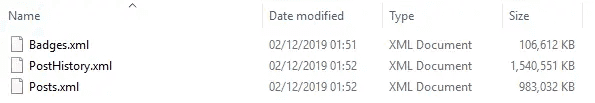

The three test subjects totalled around 2.5GB uncompressed

Each of the tests below was run a minimum of three times, on XML based data sets of three different sizes (PostHistory, Posts and Badges - in order of decreasing size).

The following tests were run:

- Load from XML (no transformation)

- Store loaded XML data into QVD (no transformation)

- Load from QVD using optimised load

- Store loaded QVD data into a second QVD (no transformation)

- Load from QVD using unoptimised load and perform transformations (using a wide range of functions)

- Store transformed QVD data into a third QVD

- Load from QVD using unoptimised load and perform transformation/where reduction (only two functions)

- Store transformed QVD data into a fourth QVD

- Load from QVD using optimised load, then resident to perform matching transformation to #5

- Store transformed QVD data into a fifth QVD

The QVF file and load scripts to run these tests are available on GitHub.

Test results

The results (when assessing ONLY PostHistory - the largest input file), with the exception of tests 6, 8 and 10 (all store operations on data originally loaded from a QVD), show that enabling encryption for QVDs increases load time, and enabling both QVD and QVF encryption increases this further.

No surprises there.

Average test duration grouped by test and test mode

I'll look into this in more depth in a follow up post.

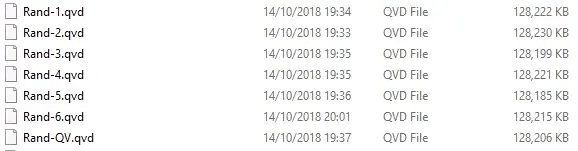

Observation on QVD file size

There was no noticeable increase in QVD file size following encryption - see screenshots of before and after below.

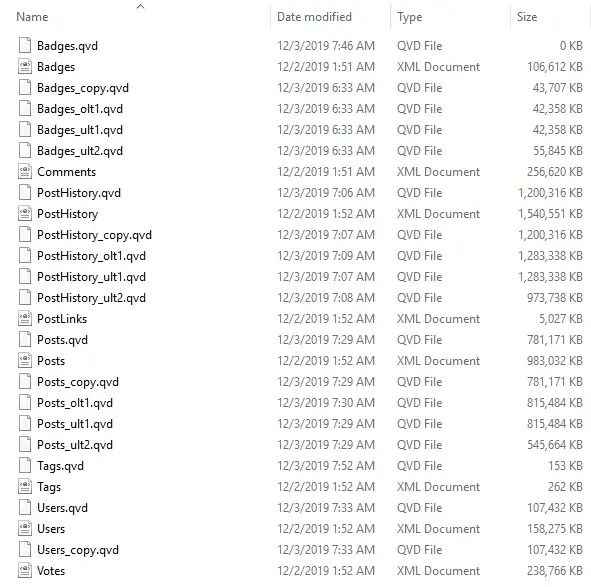

File sizes without QVD encryption (excluding the 0 value for the first file as this was being written while the screenshot was taken)

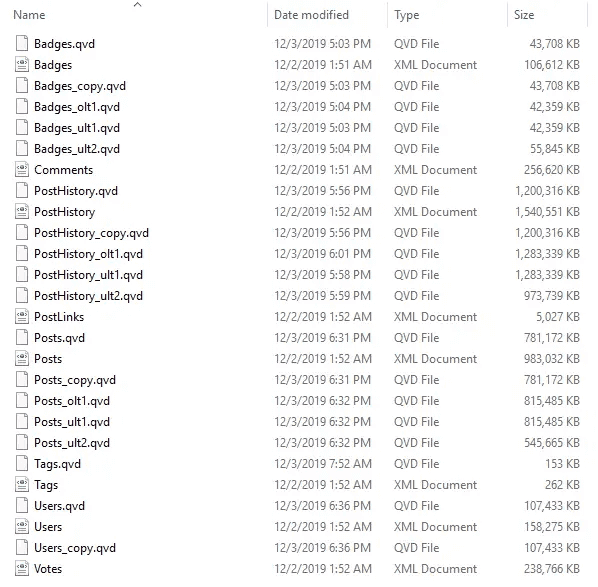

File sizes with encryption - at most a few KB out

Considerations for next time

- Instead of using a burstable instance (B4ms), I should have used a general instance such as a DS3 to ensure a baseline level of performance

- The server size was likely too large for the smallest data set I used, meaning that operations completed too quickly for any variation to be meaningful, while Posts and PostHistory were more suitable

- This time, I used Azure files for the primary read/write location. While we should assume performance remains consistent over time, testing with a provisioned disk attached to the VM would be a better test to remove any potential variability

- Services were not restarted between every test, only between test modes (i.e. encrypted, unencrypted) - it would be a better control to begin all tests following a restart of at least the engine service

- The initial test created the QVD files which were then overwritten by all following tests - ideally these would have been deleted between tests (incidentally, no obvious variation appeared between tests 1 and 2)

- There was no system monitoring set up - this would provide insights as to CPU and IO utilisation throughout and would be a useful addition to the time statistics

Qlik Sense QVD / QVF Encryption (Qlik Sense Enterprise on Windows) 29 Nov 2019 3:00 PM (5 years ago)

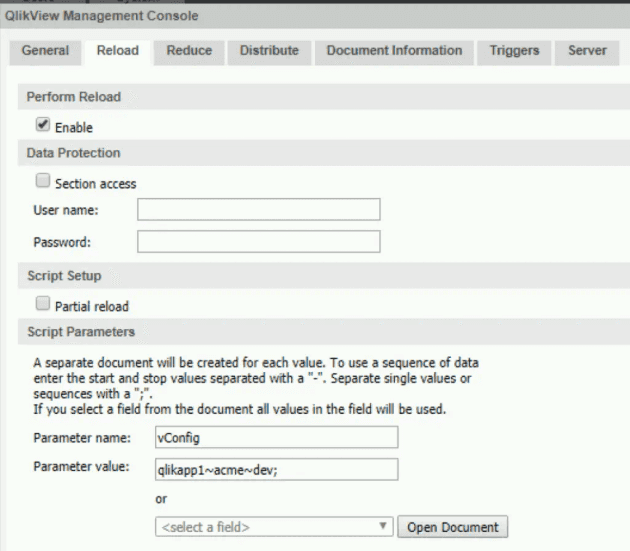

From Qlik Sense Enterprise on Windows September 2019 (the option became configurable via the QMC in November 2019), users have the option of enabling one or both of QVD and QVF encryption - adding at-rest protection from people who shouldn't have access to your valuable files. This is enabled server-wide and applies to all content on the environment.

The big issue that I see is that any security office is going to require that any and all encryption is enabled - without understanding the issues this could cause.

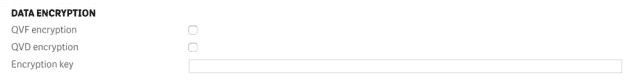

Here's the new DATA ENCRYPTION section under Service cluster in the QMC

What can I set in the QMC?

Although the Qlik Help site offers plenty of detail, it's surprising that there's no validation on the encryption key you enter in the QMC itself - you can enter anything you want and apply it, without Qlik Sense verifying that a corresponding certificate exists.

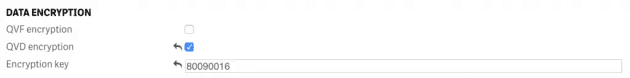

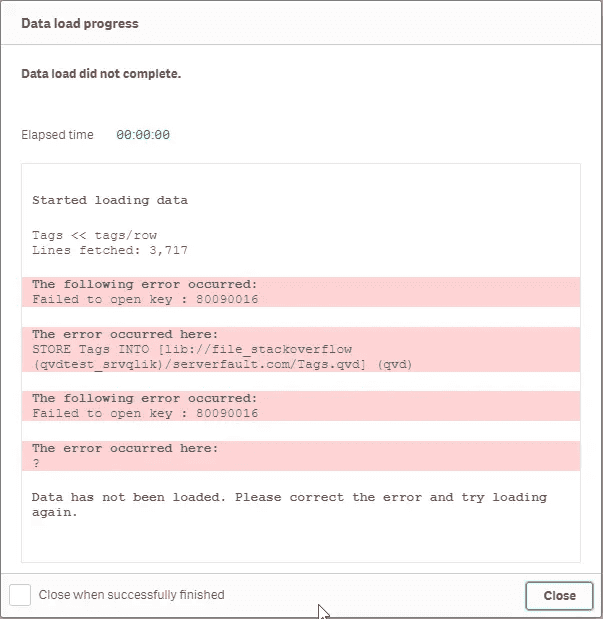

Let's say, in my wisdom, I do not read the help pages, and enable QVD encryption with an encryption key of say, 80090016. What happens?

Rather than a certificate thumbprint, I'll enter my own "key"

Well, everything looks OK, until you actually try to store a QVD and you get the message "Failed to open key : 80090016".

As the person who configured said key, I'd eventually work out that it's because I didn't put in a certificate thumbprint from the system (it would be better if Qlik Sense actually validated this).

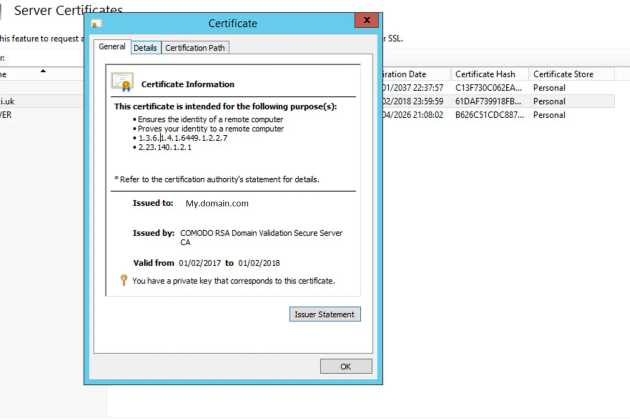

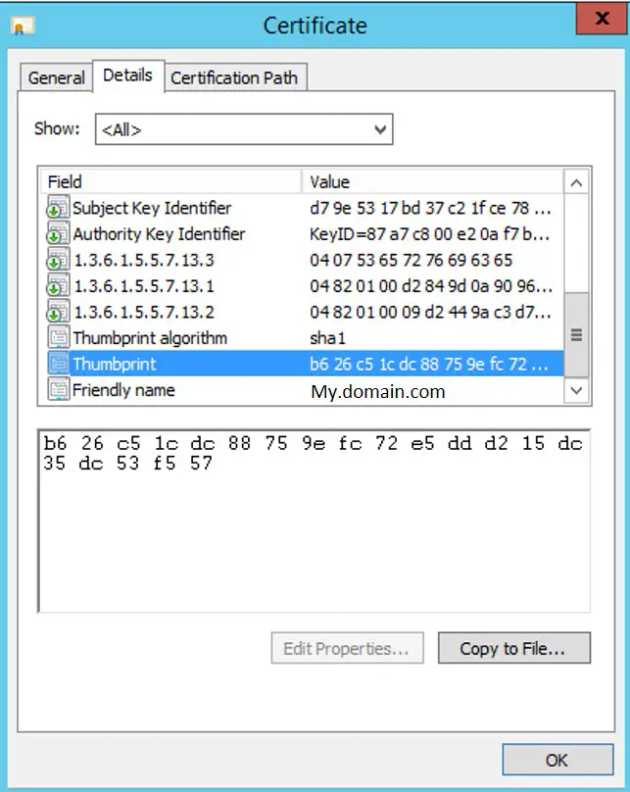

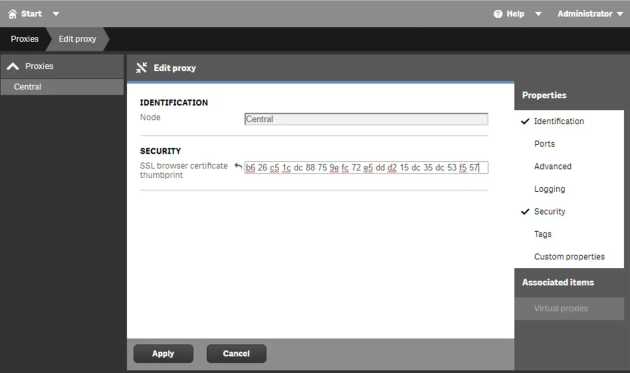

The actual process is to create or import a certificate and add the correct thumbprint:

New-SelfSignedCertificate -Subject QlikSenseEncryption -KeyAlgorithm RSA -KeyLength 4096 -Provider "Microsoft Software Key Storage Provider" -KeyExportPolicy ExportableEncrypted -CertStoreLocation "cert:\CurrentUser\My"The resulting thumbprint can be bussed into the QMC

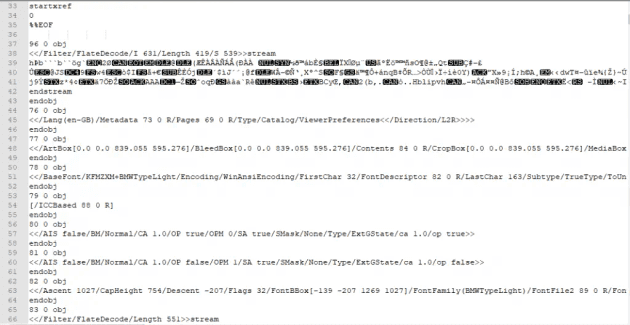

Do QVDs look any different?

We know that QVDs are formed of an XML header containing metadata related to the QVD and it's content, followed by the data itself in a proprietary Qlik format.

Once encrypted, a QVD retains the same XML header plus one additional node - EncryptionInfo. You can inspect the header in a text editor such as notepad - but if you try to use the Qlik Sense load data or load editor to open the file in XML format you'll get the error listed above.

Example EncryptionInfo from a QVD generated on a test server running Qlik Sense (Qlik Sense Enterprise on Windows, November 2019) with QVD encryption enabled:

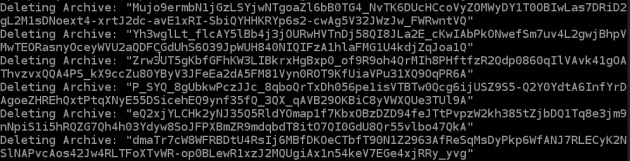

<EncryptionInfo>{"ciphertext":"CG9pOkmubU1+3pYtB4ui4/mwAR6y7C5SCm8HV112JKvh432LxjVAgZosXIoL+sMLf34uDgdzv7viSLeRm4ny3gAMGMTJin3HdUnx5pwfQ6Ynp+OjgIW2isVo0h1H4zllzLtqTBT9cIw736dfCDCvASyb3f5/ELfPIegaRYmThAHIrsgFQXV3VW3JRw7009Nu19oSwDqEGmt2puTPh6FJD7DaTMiQQo+nHWD3MjNQKw9Be0cbTi2/agtO0J8dsrHgP+N5C45V8W/gLXWhAQEBm0pNQssM08YK4fnoFX+VqEVpL5NMjiw9QzZDfwqhi45Cb7bECKJXKyPxPU6wC+fCtelASzKzaJTaSrWHs7OCqiE6dKhcQBHh9Y1eBYK+1Q2l44UJmcDhYVpzjDg84lVJvIwSNgayMgJGNWwC9AUo0+JdUHr0dAHYDkEmUgsXEkBAIvVgsuAdHiO+JWeJZrxorbr/mMyhDikKULO7KNlA+XoTxlxcomwEt4oGsNEU2jVMF67f2TEQ7eE3fUAs3f4MpVoYwgxgwHuDzDrOps+ChYInen8hJmzi+mUU3sAt6u+81/XhIXUuO8sQ1Xbp7upS7x97FXxgMeZxS4FSIMPI7ckR0Wri4q8xPmnN0BzgGRUaTcHxOfTTmasUkFHZMbhAZcPKUjW1AjGFNcjRwSuhy4M=","thumbprint":"7FD06E9A238256F70EECA4BEBD0652AB82F4848F","store":"MY"}</EncryptionInfo>This appears to contain the following information:

- cipertext - presumably used for encryption/decryption on another system against the cert

- thumbprint - a hexadecimal key that uniquely identified a certificate

- store - which certificate store the cert is located in

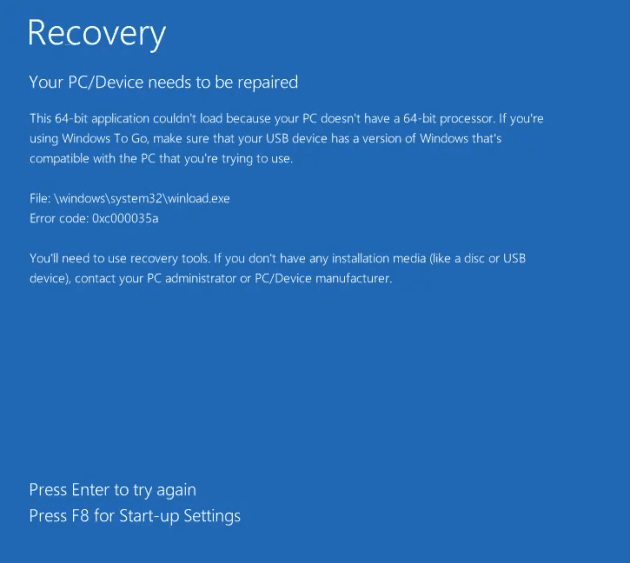

What happens if I try to open an encrypted QVD on a system without the encryption certificate?

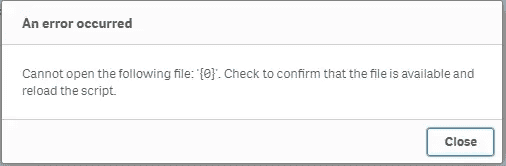

You get a rather ungraceful "Cannot open the following file: '{0}'." error.

Enter the cryptic "Cannot open the following file: '{0}'. Check to confirm that the file is available and reload the script."

Note that to read encrypted QVDs on a system, you just need to import the correct certificate onto the Qlik Sense server - you do not need to enable QVD encryption on the QMC unless you wish to write encrypted QVDs.

There are a few topics I'll look to follow up on:

- LOAD / STORE performance

- File size impact

- QVD migration pains (to QlikView, to Qlik Sense environments with many certs)

- QVF performance

- QVF import / export

AWS Lightsail snapshots - now automatic for Linux 10 Nov 2019 3:00 PM (5 years ago)

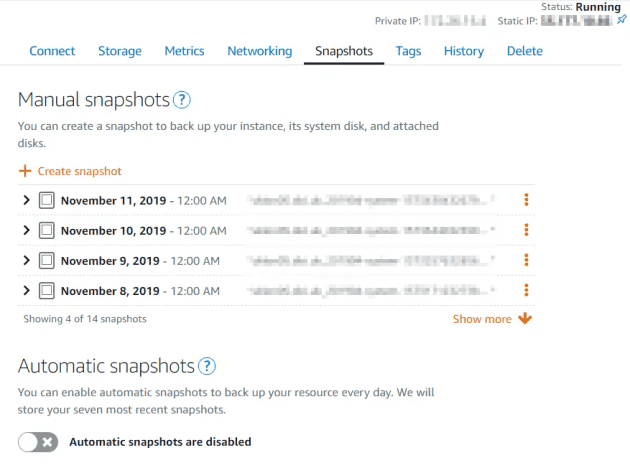

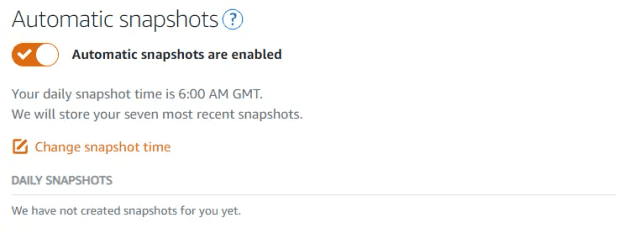

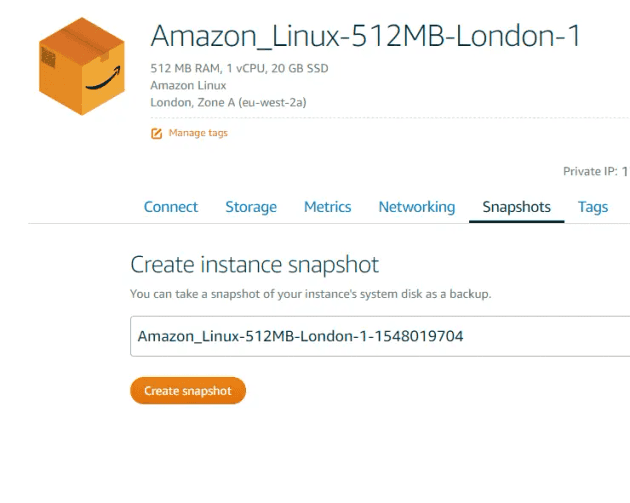

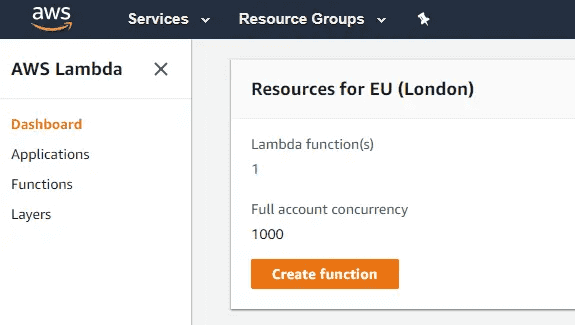

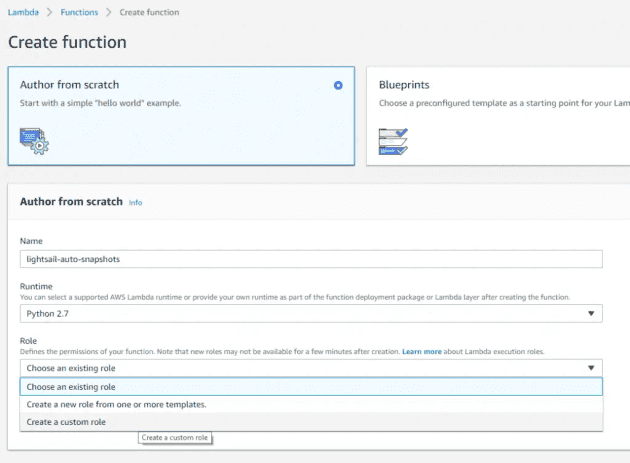

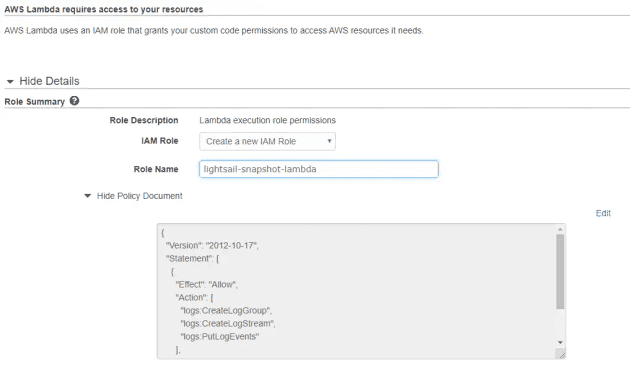

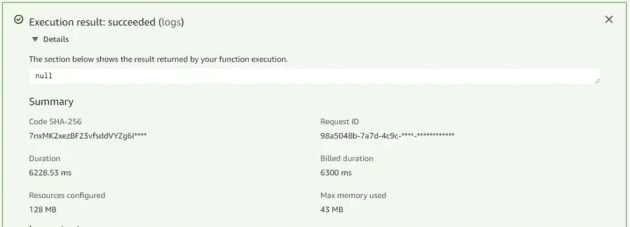

As an update to my previous post on using lambda to automate snaphots, I've finally logged back into the Lightsail console and seen they have added a new feature - automatic snapshots.

There's now an automatic snapshot section - note that the manual snapshots above are the 14 days dutifully maintained by the lambda script

It looks like the feature was added in October, based on the help site.

You're able to change the time the snapshot is taken, but not the amount of history maintained

I won't be switching from the lambda function as I require 14 days on Lightsail boxes, but if you're happy with the following constraints, then it's a great option:

- Pre-set to 7 days of snapshot retention (no more, no less)

- Must be set to run at a specific time (no triggers)

- Available on Linux boxes only (no Windows as yet)

Remove (destroy) all variables in a Qlik Sense application 5 Nov 2019 3:00 PM (5 years ago)

In QlikView, you had the ability to select multiple variables and delete them en-mass. In Qlik Sense, the UI permits you to undertake this activity one-by-one, and each takes two or three clicks.

This bookmarklet (Chrome only this time, not tried elsewhere) removes ALL variables from a Qlik Sense application by cheating the APIs used by the Qlik Sense client.

Demo showing addition of bookmark and destruction of variables in a Qlik Sense app

The best principle to follow when building apps is to ensure that all variables are recoverable - this means that the definition is set during the reload process in the load script.

How do you use it?

- Navigate to a Qlik Sense sheet and enter edit mode

- Click on the variables menu to bring up the list of user-facing variables

- Click the bookmarklet in your browser, and confirm you wish to proceed

- qsvd will destroy your variables, and print them out to the console just in case you made a mistake

Click here to get the bookmarklet, or go to the qlik-sense-variable-destroy repository for the code.

.gitignore for Qlik Sense and QlikView projects 19 Oct 2019 4:00 PM (5 years ago)

This .gitignore is based on experience of using various Windows, Mac and Linux OS - and a few unexpected commits. It is my go-to for Qlik projects, and was built using the GitHub gitignore repo.

Script below, or latest version on GitHub.

## GitIgnore built from own experience and using samples from https://github.com/github/gitignore

## DC - 20190920

## Qlik exclusions

# Ignore all Qlik data files

*.qvd

## Windows exclusions

# Windows thumbnail cache files

Thumbs.db

Thumbs.db:encryptable

ehthumbs.db

ehthumbs_vista.db

# Dump file

*.stackdump

# Folder config file

[Dd]esktop.ini

# Recycle Bin used on file shares

$RECYCLE.BIN/

# Windows Installer files

*.cab

*.msi

*.msix

*.msm

*.msp

# Windows shortcuts

*.lnk

## Dropbox exclusions

# Dropbox settings and caches

.dropbox

.dropbox.attr

.dropbox.cache

## MacOS exclusions

# General

.DS_Store

.AppleDouble

.LSOverride

# Icon must end with two \r

Icon

# Thumbnails

._*

# Files that might appear in the root of a volume

.DocumentRevisions-V100

.fseventsd

.Spotlight-V100

.TemporaryItems

.Trashes

.VolumeIcon.icns

.com.apple.timemachine.donotpresent

# Directories potentially created on remote AFP share

.AppleDB

.AppleDesktop

Network Trash Folder

Temporary Items

.apdisk

# Finally I want to exclude any packages I might make

*.zipGenerating consistent Qlik Sense app icons 20 Sep 2019 4:00 PM (5 years ago)

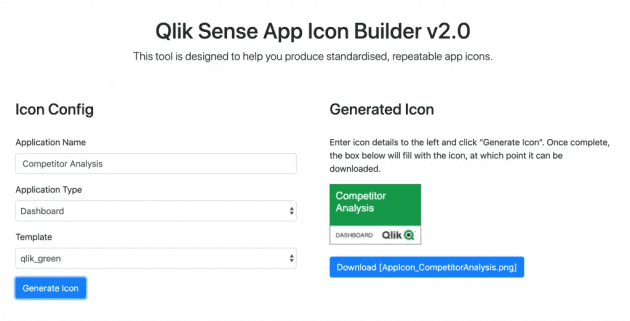

Application icons are prominently displayed throughout the Qlik Sense hub, and they are usually either the default blue, or some horrendously stretched icon that has no business being on an enterprise system.

This simple tool (packaged as an extension and accessible as a mashup) helps users generate consistent, appropriate app icons for use in their apps.

Without peering at the text, the default icons are rather generic

The App Icon Builder prints an application name (and optional application type) onto a background image. The tool allows you to set various templates to allow customisation by business function, app purpose, data source, governance level, etc.

The mashup allows users to generate consistent .png app icons which are styled and sized correctly

Each icon is provided as a png and is sized appropriately for the Qlik Sense Hub. Incidentally, the aspect ratio of the these images is not as per the documentation, nor does it match the aspect ratio of the app icon in the app overview screen (update - see here for more).

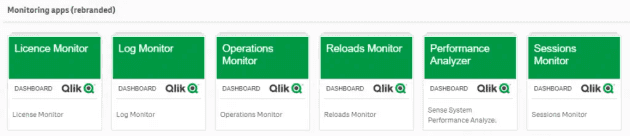

Here's the default monitoring apps rebranded with a consistent theme

How do you use it?

- Download the package and customise by editing the .json config file and adding any additional backgrounds

- Re-zip and upload to the QMC

- Navigate to /extensions/qs-icon-generator/index.html to create icons

Click here to go to the qlik-sense-icon-generator repository - you can download a zip ready for import from the releases section.

Qlik Sense Repository Explorer (PostgreSQL extractor) 30 Aug 2019 4:00 PM (5 years ago)

Forewarning - loading data directly from the repository is not recommended. Most requirements can be met through the QRS APIs.

There's a lot of tables, all qualified - plus an index table. Smart search and insights are recommended!

This script loads all QRS data from the repository into an app. The code is below, or the latest is available on GitHub.

// Qlik SenseRepository Explorer Script

// Version date: 27/06/2019

// This script loads each table from the repository to give you the ability to find field values and identify issues without downloading PgAdmin

// Even in big deployments this app shouldn't be too large, as we're avoiding the logs database

// Config steps:

// 1) Create a data connection to the repository and note the name. For a simple single node install:

// Connection Type: PostgreSQL

// Host name: localhost

// Port: 4432

// Database: QSR

// User name: postgres

// Password: defined during Qlik Sense install (superuser password)

// 2) Update the connection name in the script below, then paste it into an app and reload

// -- Script start

// Create a connection to localhost:4432 with postgres user and the superuser password

LIB CONNECT TO 'PostgreSQL_QSR';

// Load the table listing from the default Postgres directory

TableList:

LOAD

RowNo() AS %KeyTableList,

table_catalog AS [Table Catalog],

table_schema AS [Table Schema],

table_name AS [Table Name],

table_type AS [Table Type];

SELECT

"table_catalog",

"table_schema",

"table_name",

"table_type"

FROM "information_schema"."tables"

WHERE "table_catalog" = 'QSR' // Only load from repository tables

AND "table_schema" = 'public' // Only load public tables

;

// Set a variable with the table count and print this to the console

LET vCount_Tables = NoOfRows('TableList');

TRACE >> Found $(vCount_Tables) tables in QSR.Public.;

// Create an empty table to concatenate table rows to

TableRows:

LOAD

null() AS %KeyTableList

AutoGenerate 0;

// Now loop over these tables and load their contents!

FOR i=0 to vCount_Tables - 1

LET vLoop_TableKey = Peek('%KeyTableList',i,'TableList');

LET vLoop_TableSchema = Peek('Table Schema',i,'TableList');

LET vLoop_TableName = Peek('Table Name',i,'TableList');

TRACE >>> Loading from $(vLoop_TableSchema).$(vLoop_TableName).;

// Set qualify statement for all Qlik data tables

QUALIFY *;

// Get the data from the table

[$(vLoop_TableName)]:

LOAD

*;

SELECT

*

FROM "$(vLoop_TableSchema)"."$(vLoop_TableName)";

// Set unqualify statement now that we've done the data load

UNQUALIFY *;

// Get a row count from the table and join back to the table listing

Concatenate(TableRows)

LOAD

'$(vLoop_TableKey)' AS %KeyTableList,

num(tablerows,'#,##0') AS [Table Row Count];

SELECT

COUNT(*) as tablerows

FROM "$(vLoop_TableSchema)"."$(vLoop_TableName)";

Next i;

// -- Script endGet Qlik Sense Object IDs quickly 5 Jul 2019 4:00 PM (5 years ago)

If you're doing anything but vanilla Qlik Sense development, it's likely you'll need to get to the object IDs at some point. You can do this by appending /options/developer to the end of the page URL and clicking on each object in turn, or using dev tools - but that's slow.

This bookmarklet makes getting the object IDs fast in Chrome (and, begrudgingly, IE11).

Animated demo showing adding of bookmarklet to chrome and displaying of object IDs

If you're using NPrinting, creating mashups or poking around in the repository you'll need to get to Objects IDs eventually. Each object in an app has a unique ID or GUID, and if you duplicate the app they won't change (unlike the app ID).

How do you use it?

- First click on the bookmarklet pops up the object ID for every object on the sheet

- User can click on an object ID to copy it to the clipboard (in chrome)

- Second click on the bookmarklet will hide the object IDs

This requires nothing installed on the server, no extensions, no mashups, just a client side bookmarket. Click here to get the bookmarket, or click here to see the qlik-sense-object-id-grabber repository and code.

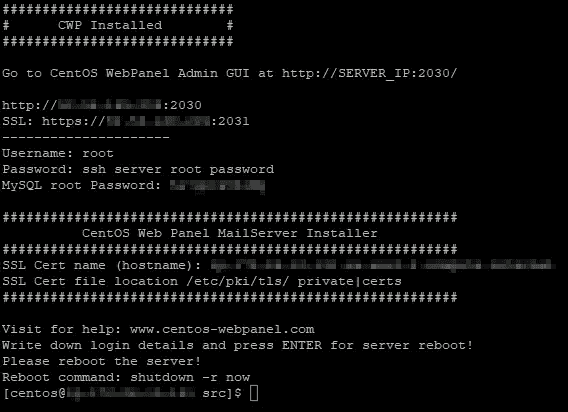

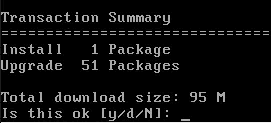

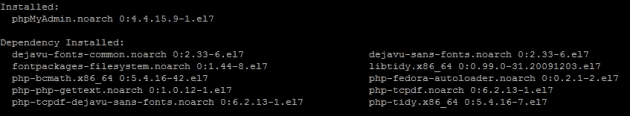

Adding CentOS Web Panel to a fresh CentOS 7 box 14 Jun 2019 4:00 PM (5 years ago)

Revisiting an earlier post on configuring a LAMP server, and on a recent post on installing Webmin, this time I'm dropping CentOS Web Panel (CWP) onto a new CentOS 7 box.

So, on a fresh CentOS 7 1901-01 box on AWS, let’s begin.

# Set the hostname correctly before we start off, otherwise CWP will pick up the wrong name

hostname sub.host.com

# Update existing packages

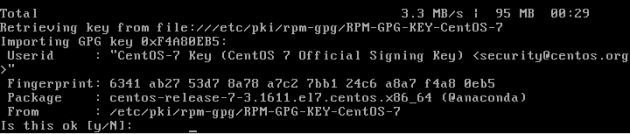

sudo yum -y update

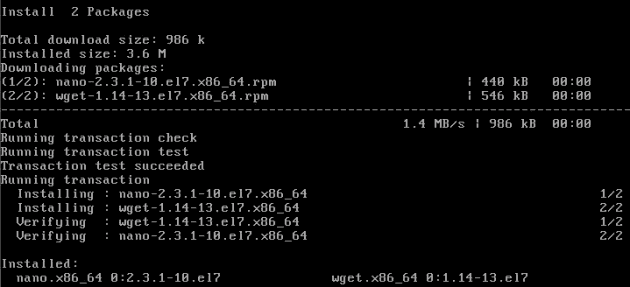

# Install wget and nano (as I like nano and wget is needed for the CWP install)

sudo yum -y install wget nano

# Reboot the box to sort out any updates requiring reboot

sudo rebootReconnect, then continue - this step will take a little longer.

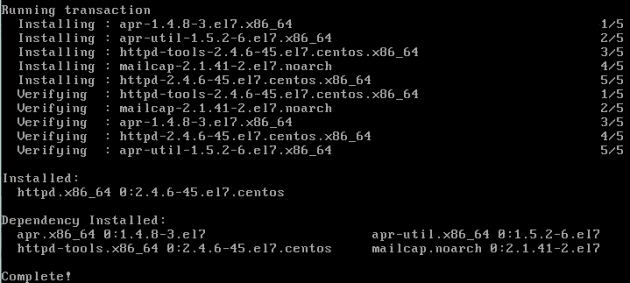

# Change directory, grab a copy of the latest CWP and run the script

cd /usr/local/src

sudo wget http://centos-webpanel.com/cwp-el7-latest

sudo sh cwp-el7-latestOn a 1 core, 1GB RAM box this took 3 minutes until the system prompted for a restart. You can add a flag to the last command to auto-restart.

#############################

# CWP Installed #

#############################

Go to CentOS WebPanel Admin GUI at http://SERVER_IP:2030/

http://YOURIP:2030

SSL: https://YOURIP:2031

---------------------

Username: root

Password: ssh server root password

MySQL root Password: YOURPASS

#########################################################

CentOS Web Panel MailServer Installer

#########################################################

SSL Cert name (hostname): YOURHOSTNAME

SSL Cert file location /etc/pki/tls/ private|certs

#########################################################

Visit for help: www.centos-webpanel.com

Write down login details and press ENTER for server reboot!

Please reboot the server!

Reboot command: shutdown -r nowCWP requesting restart

After opening any required ports (2031 for SSL), you're good to go.

Fresh CWP login screen

If you're using private key auth, you might need to reset the root password before logging in.

# Reset root password

sudo passwdOnce reset, you can start dealing with alerts and issues in the panel.

Logged in and ready to configure

As CWP installs many supporting modules (e.g. MySQL, PHP, etc) automatically, the install takes longer and results in a build that uses more RAM and CPU out of the box.

Adding Webmin to a fresh CentOS 7 Box 10 May 2019 4:00 PM (5 years ago)

As a quick refresh from an earlier post, I'm going to use a new CentOS image with Webmin as the control panel of choice.

Happily, the only thing that's changed in the documentation is the way the gpg key is imported - documentation is here: https://doxfer.webmin.com/Webmin/Installation