Modeling the COVID-19 / Coronavirus pandemic – 4. Modeling with a time-variable infection rate. 17 Apr 2020 10:31 AM

A more realistic assumption than the constant infection rate of the simple models in the previous posts is to allow the infection rate to depend on the time since infection. This time dependence was derived in the first post, using the results of Cereda et al. 2020, who used a $\Gamma$-distribution to fit the interval between the appearance of symptoms in infectors and infectees. After removing the widening caused by the incubation period, we derived the serial interval of infections, which is the normalized infection probability, namely

\begin{equation}

\beta(t) = R_0 {b^a t^{a-1} \exp(-b t) \over \Gamma(a)},

\end{equation}

with $a = 3.1 \pm 0.8 $ and $b = 0.47 \pm 0.12$ days$^{-1}$.

Given this infection rate, can we derive the relation between the exponential growth rate $r$ and the basic reproduction number $R_0 = \int_0^\infty \beta(t) dt$? Can we predict by how much the growth will slow down if we quarantine at a given rate, or decrease the infection rate (e.g., through social distancing)?

To find $r$, we assume that the cumulative number of infected at a given time is $I = I_0 \exp(rt)$. This means that the rate at which people are infected is its derivative, $\dot{I} \equiv dI/dt = r I_0 \exp(rt)$. The basic equation for the infection rate follows from a simple argument: at each instant $t$, people who were infected at an earlier time $t-\tau$ now infect others at a rate $\beta(\tau)$. We therefore have the equation

\begin{equation}

\dot{I}(t) = \int_{0}^\infty \beta(\tau) \dot{I}(t-\tau) d\tau .

\end{equation}

We now insert our "guess", the exponential growth, and find that

\begin{equation}

r I_0 \exp(rt) = \int_{0}^\infty \beta(\tau) r I_0 \exp\left(r(t-\tau)\right) d\tau ,

\end{equation}

which after cancellation of $r I_0\exp(rt)$ gives

\begin{equation}

1 = \int_{0}^\infty \beta(\tau) \exp \left( -r \tau \right) d\tau .

\end{equation}

This is the basic equation relating the growth rate $r$ to the infection rate function $\beta(t)$, which itself depends on the basic reproduction number $R_0$.
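This renewal equation (often called the Euler-Lotka equation) can also be solved numerically for any non-negative $\beta(t)$, which is handy once $\beta(t)$ no longer has a closed-form Laplace transform. Below is a minimal sketch in plain Python, assuming the integral can be cut off at 100 days and that the root lies in the bracket $-0.2 < r < 2$ day$^{-1}$ (which requires $r > -b$ for the $\Gamma$ case). The helper names `gamma_pdf`, `laplace_integral` and `growth_rate` are hypothetical. Applied to the $\Gamma$-shaped $\beta(t)$ of this post, it should recover the growth rate that the closed-form relation for the $\Gamma$ distribution assigns to a given $R_0$.

```python
import math


def gamma_pdf(t: float, a: float, b: float) -> float:
    """Gamma density with shape a and rate b (day^-1); zero for t <= 0."""
    if t <= 0.0:
        return 0.0
    return b**a * t ** (a - 1.0) * math.exp(-b * t) / math.gamma(a)


def laplace_integral(beta, r: float, t_max: float = 100.0, n: int = 4000) -> float:
    """Composite Simpson estimate of int_0^t_max beta(tau) * exp(-r * tau) dtau."""
    h = t_max / n  # n must be even for Simpson's rule
    total = beta(0.0) + beta(t_max) * math.exp(-r * t_max)
    for i in range(1, n):
        tau = i * h
        total += (4.0 if i % 2 else 2.0) * beta(tau) * math.exp(-r * tau)
    return total * h / 3.0


def growth_rate(beta, r_lo: float = -0.2, r_hi: float = 2.0, tol: float = 1e-7) -> float:
    """Solve 1 = int beta(tau) exp(-r tau) dtau for r by bisection.

    For beta >= 0 the integral decreases monotonically with r, so a sign
    change between r_lo and r_hi brackets the single root.
    """
    lo, hi = r_lo, r_hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if laplace_integral(beta, mid) > 1.0:
            lo = mid  # integral still above 1: the root lies at larger r
        else:
            hi = mid
    return 0.5 * (lo + hi)


a, b = 3.1, 0.47                        # Gamma shape and rate (day^-1)
r0_basic = ((b + 0.3) / b) ** a         # R0 that the closed form assigns to r = 0.3
beta_t = lambda tau: r0_basic * gamma_pdf(tau, a, b)
print(f"numerical r = {growth_rate(beta_t):.4f} day^-1")
```

Bisection is slow but robust here, because the integral is monotonic in $r$; any bracketing root finder would do.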

For the $\Gamma$ distribution given above, the equation becomes:

\begin{equation}

{1\over R_0} = \int_{0}^\infty {b^a \tau^{a-1} \exp\left(-(b+r) \tau \right) \over \Gamma(a)} d\tau = {b^{a} \over (b+r)^a}.

\label{eq:R0timedependent}

\end{equation}

With the above values of $a$ and $b$, and a typical observed growth rate of $r_0 \approx 0.3$ day$^{-1}$, this implies that the basic reproduction number is high, $R_0 = 4.6 \pm 2.7$.

If we take the growth rate observed in Japan, $r_{0,J} \approx 0.075$ day$^{-1}$, we find $R_{0,J} = 1.6 \pm 0.3$.

Since the errors on $R_0$ and $R_{0,J}$ are correlated (both depend on the same $a$ and $b$), it is also worthwhile looking directly at the ratio:

\begin{equation}

{R_{0,J} \over R_0} = \left(b+r_{0,J} \over b+r_0\right)^a = 0.34 \pm 0.15

\end{equation}

Namely, the Japanese social norms imply that people there are about 3 times less infectious than in typical societies.
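As a quick arithmetic check of these numbers, the sketch below inverts the $\Gamma$-distribution relation $1/R_0 = b^a/(b+r)^a$ for central values only. It assumes $r_0 = 0.3$ day$^{-1}$ for a typical country and $r_{0,J} = 0.075$ day$^{-1}$ for Japan (the growth rates quoted in the background data post) and does not propagate the uncertainties; the function name is hypothetical.

```python
A = 3.1            # shape of the Gamma-shaped infection rate
B = 0.47           # rate of the Gamma-shaped infection rate, day^-1
R_TYPICAL = 0.3    # typical early growth rate, day^-1 (central value)
R_JAPAN = 0.075    # growth rate observed in Japan, day^-1 (central value)


def r0_from_growth(r: float, a: float = A, b: float = B) -> float:
    """Invert 1/R0 = (b / (b + r))**a for the basic reproduction number."""
    return ((b + r) / b) ** a


r0 = r0_from_growth(R_TYPICAL)
r0_japan = r0_from_growth(R_JAPAN)
ratio = r0_japan / r0   # equals ((b + r_J) / (b + r_0))**a
print(f"R0 = {r0:.2f}, R0(Japan) = {r0_japan:.2f}, ratio = {ratio:.2f}")
# prints: R0 = 4.62, R0(Japan) = 1.58, ratio = 0.34
```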

The effect of quarantining and "social distancing" can also be included in the calculation by modifying the infection rate. For example, suppose that there is a quarantining rate $\kappa$, and that we reduce the reproduction number to a fraction $\epsilon$, namely that $R = \epsilon R_0$. We then get a modified infection rate of

\begin{equation}

\beta_\mathrm{mod}(t) = \epsilon R_0 {b^a t^{a-1} \exp \left(-b t\right) \over \Gamma(a)} \exp(-\kappa t).

\end{equation}

Since a $\Gamma$-distribution times an exponential is another (but not normalized) $\Gamma$-distribution, we can easily integrate and find that

\begin{equation}

{1\over \epsilon R_0} = {b^{a} \over (b+r +\kappa)^a}.

\end{equation}

The solution is

\begin{equation}

r = b \left[ (\epsilon R_0)^{1/a} -1\right] -\kappa = (r_0 + b) \epsilon^{1/a} - b - \kappa.

\end{equation}

For the second equality we substituted the value of $R_0$ obtained from the observed $r_0$, the growth rate under natural conditions.

Clearly, for no social distancing ($\epsilon=1$), we need $\kappa$ to be at least as large as the growth rate $r_0$ of the base case (without any social modifications). Namely, we need to quarantine people within $1/r_0 = 3.3 \pm 0.7$ days. A place like Iran or Bnei Brak requires quarantining within $2.25 \pm 0.25$ days of infection, while for Japan or Sweden it is more like $13.5 \pm 5$ days.

We can also look at it the other way around. Without quarantining ($\kappa = 0$), we need to reduce the social interactions to a fraction $\epsilon = \left(b/(r_0+b)\right)^a = 1/R_0$, consistent with the expression for $R_0$ above.
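The closed-form growth rate with quarantining and social distancing is easy to tabulate, which is essentially what a plot of $r$ versus $1/\kappa$ and $1/\epsilon$ needs. A minimal sketch, assuming the central values $a = 3.1$, $b = 0.47$ day$^{-1}$ and $r_0 = 0.3$ day$^{-1}$; the function names are hypothetical:

```python
import math

A, B = 3.1, 0.47   # shape and rate (day^-1) of the Gamma-shaped infection rate
R_NATURAL = 0.3    # growth rate without any measures, day^-1 (central value)


def growth_rate(kappa, eps, r0=R_NATURAL, a=A, b=B):
    """r = (r0 + b) * eps**(1/a) - b - kappa, for quarantining rate kappa
    (day^-1) and residual contact fraction eps = R / R0."""
    return (r0 + b) * eps ** (1.0 / a) - b - kappa


def critical_quarantine_time(eps, r0=R_NATURAL, a=A, b=B):
    """Longest time 1/kappa (days) that still gives r <= 0 for a given eps.

    Returns math.inf when distancing alone already gives r <= 0 (eps <= 1/R0)."""
    kappa = growth_rate(0.0, eps, r0, a, b)   # the kappa that makes r exactly zero
    return math.inf if kappa <= 0.0 else 1.0 / kappa


for eps in (1.0, 0.7, 0.5, 0.3):
    print(f"eps = {eps:.1f}: quarantine within {critical_quarantine_time(eps):.1f} days")
```

For $\epsilon = 1$ this returns $1/r_0 \approx 3.3$ days; the allowed time grows as $\epsilon$ drops and becomes unlimited once $\epsilon \le 1/R_0$.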

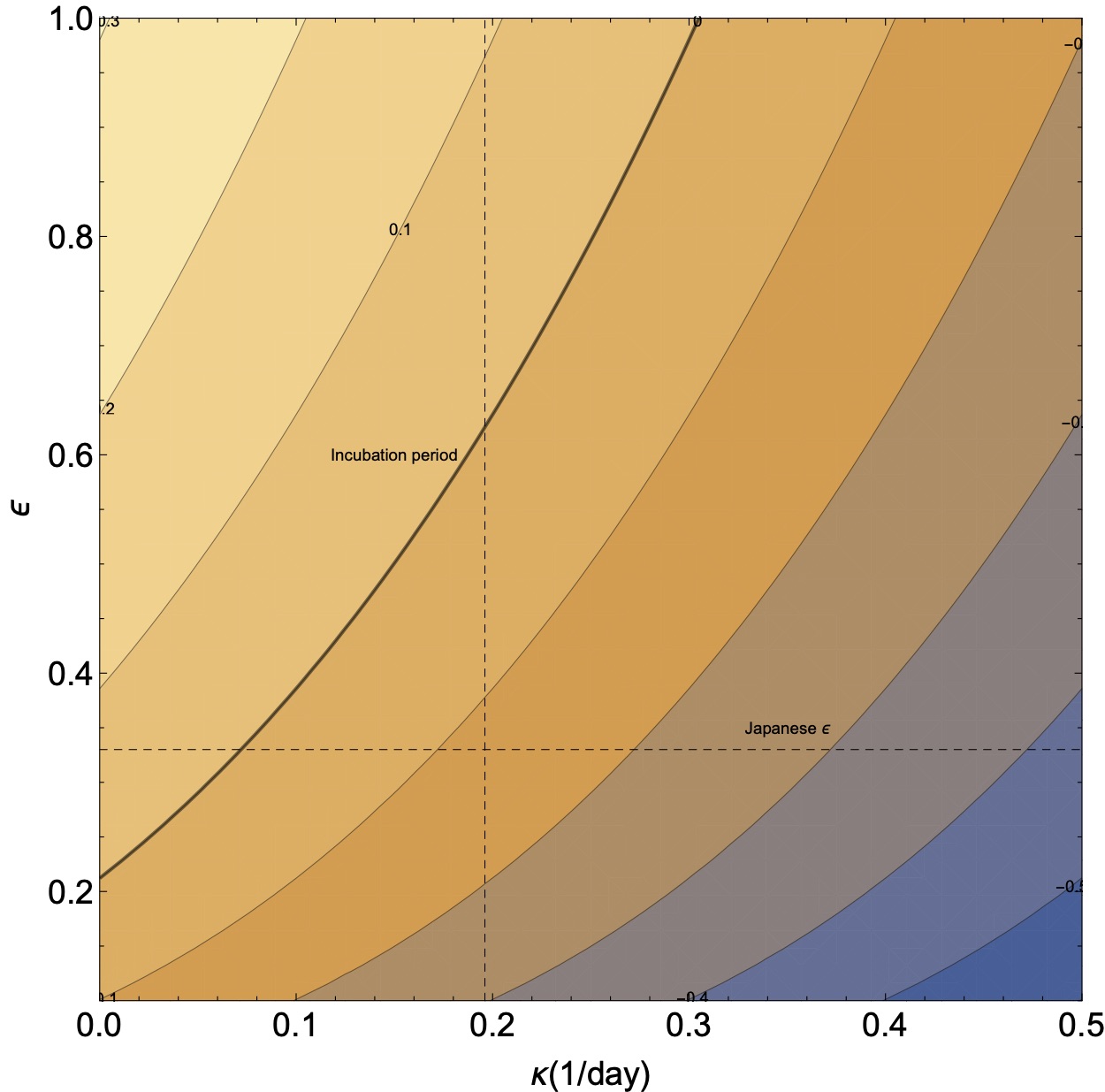

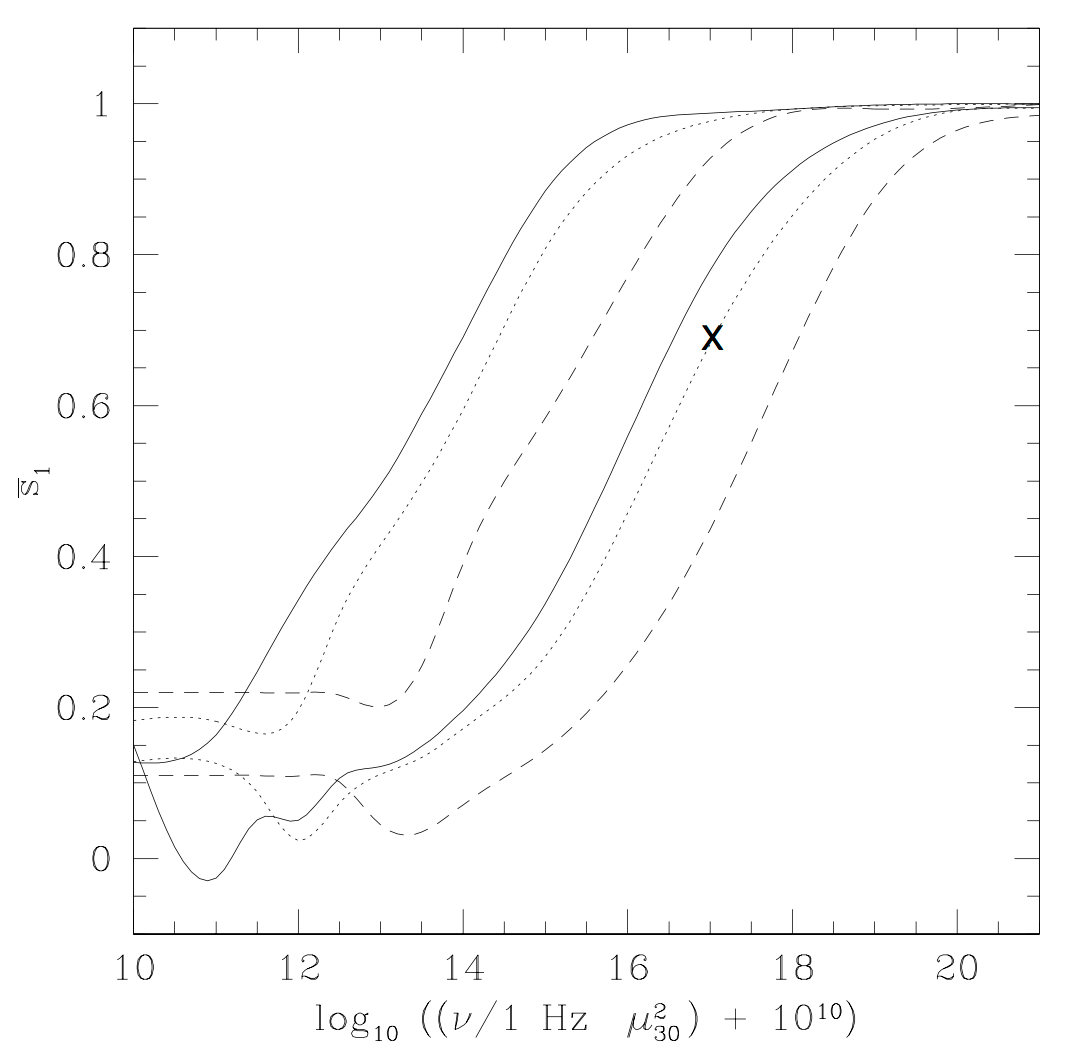

In fig. 1 we plot the growth rate as a function of the quarantining time $1/\kappa$ and social distancing factor $1/\epsilon$.

One apparent conclusion is that under normal conditions (i.e., for $R=R_0$), asking anyone with coronavirus-like symptoms to quarantine himself is insufficient to stop outbreaks. This is because the typical incubation period is 5 days, which is longer than the necessary quarantining time. The exception might be a society like Japan, in which the required quarantining time is longer than the incubation time. However, this still does not take the asymptomatic coronavirus carriers into account.

Effect of asymptomatic carriers

If we have asymptomatic carriers, then we will not be able to detect and quarantine them (unless they are discovered by a more sophisticated protocol, such as checking everyone who was in contact with a sick person). This means that the fraction of infected people who are not yet quarantined does not decay as $\exp(-\kappa t)$ but as $f + (1-f) \exp(-\kappa t)$, where $f$ is the fraction of asymptomatic carriers. Once we plug this factor into $\beta_\mathrm{mod}(t)$ and integrate, we obtain

\begin{equation}
{1\over \epsilon R_0} = f {b^{a} \over (b+r)^a} + (1-f){b^{a} \over (b+r +\kappa)^a}.
\end{equation}

This equation does not have a closed-form solution for $r$. We can, however, solve it for $r=0$ and find the quarantining rate necessary to stop the outbreak. It is

\begin{eqnarray}
\nonumber \kappa_\mathrm{crit} &=& \left[\left(\frac{(1-f) R_0 \epsilon }{1-f R_0 \epsilon }\right)^{{1}/{a}}-1\right] b \\
& = & \left[\left(\frac{(1-f) \epsilon (b+r_0)^a}{b^a - f \epsilon (b+r_0)^a}\right)^{{1}/{a}}-1\right] b.
\end{eqnarray}

A real solution exists only if $f \epsilon R_0 < 1$; otherwise the asymptomatic carriers alone sustain the growth, whatever the value of $\kappa$.

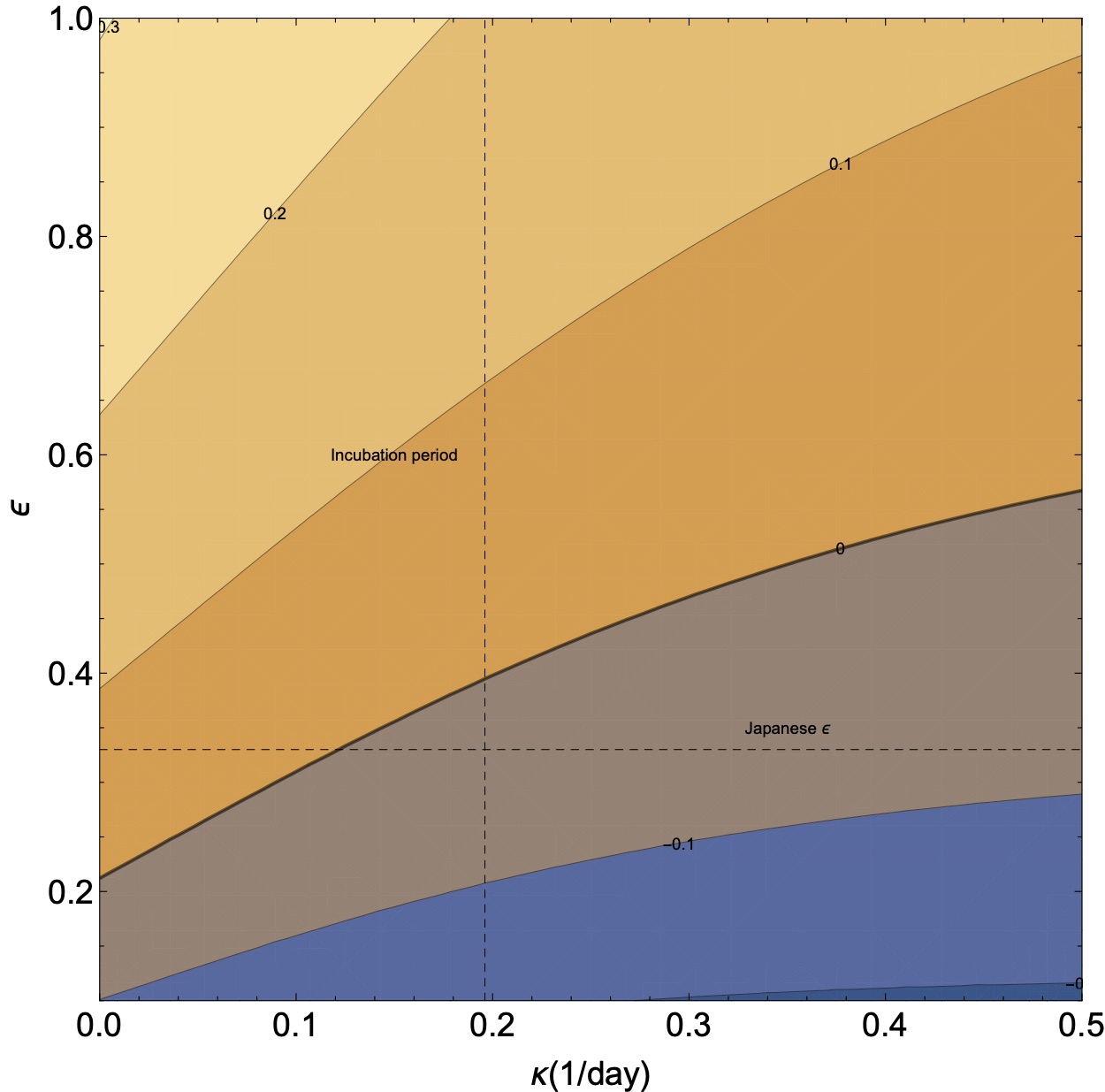

In fig. 2 we plot the growth rate $r$ as a function of the quarantining and social distancing. For our canonical asymptomatic fraction, $f = 0.3$, we find that there is no $\kappa$ that gives $r=0$, because $f R_0 \approx 1.4 > 1$. Namely, the growth driven by the asymptomatic carriers alone is sufficient to cause an epidemic if the social interaction stays the same. If we use the growth rate observed in Japan, $r_J$, we find that $\kappa_\mathrm{crit} = 0.13 \pm 0.06$ day$^{-1}$, which corresponds to a critical quarantining time of about 8 days.
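Because the equation with asymptomatic carriers has no closed-form solution for $r$, a simple root finder is needed away from $r = 0$. The sketch below (hypothetical helper names, central values of $a$ and $b$ only, bisection chosen for robustness rather than speed) evaluates $\kappa_\mathrm{crit}$ from the formula above and solves for $r$ numerically at any $\kappa$; `r_eff` stands for $\epsilon R_0$.

```python
A, B = 3.1, 0.47  # Gamma shape and rate (day^-1)


def kappa_crit(f: float, r_eff: float, a: float = A, b: float = B):
    """Quarantine rate (day^-1) that gives r = 0.

    f is the asymptomatic (never-quarantined) fraction and r_eff = eps * R0.
    Returns None when f * r_eff >= 1: the asymptomatic carriers alone then
    sustain growth and no finite kappa stops it.
    """
    if f * r_eff >= 1.0:
        return None
    return b * (((1.0 - f) * r_eff / (1.0 - f * r_eff)) ** (1.0 / a) - 1.0)


def growth_rate(kappa: float, f: float, r_eff: float, a: float = A, b: float = B) -> float:
    """Solve 1/r_eff = f (b/(b+r))^a + (1-f) (b/(b+r+kappa))^a for r by bisection."""
    def excess(r: float) -> float:
        return (f * (b / (b + r)) ** a
                + (1.0 - f) * (b / (b + r + kappa)) ** a
                - 1.0 / r_eff)

    # excess() decreases monotonically in r; it is positive just above r = -b
    # and negative at r = 10 day^-1 for any realistic r_eff.
    lo, hi = -b + 1e-9, 10.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if excess(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


R0 = ((B + 0.3) / B) ** A      # typical country, r0 = 0.3 day^-1
R0_J = ((B + 0.075) / B) ** A  # Japan, r0 = 0.075 day^-1
print(kappa_crit(0.3, R0))     # None: no quarantining rate suffices
kc = kappa_crit(0.3, R0_J)
print(f"kappa_crit = {kc:.3f} day^-1, i.e. {1 / kc:.1f} days")
```

With the Japanese growth rate this gives $\kappa_\mathrm{crit}$ of about 0.13 day$^{-1}$, roughly 8 days, and `growth_rate(kc, 0.3, R0_J)` returns $r \approx 0$ as a cross-check of the closed form.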

Let us now switch gears and simulate the pandemic numerically. This will allow us to easily incorporate more complex scenarios, such as different conditions for quarantining.

Additional posts in the series include

- Background data

- Simple Modeling

- Effects of several populations with a variable infection rate

- Modeling with a time-variable infection rate (this page)

- Numerical Model (coming soon!)

- Discussion and Conclusions (coming soon!)

Modeling the COVID-19 / Coronavirus pandemic – 3. The effects of several populations 12 Apr 2020 11:39 PM

The next interesting question to ask is what effect a mixed population with different infection rates has, that is, a population in which some individuals are more infectious than others (e.g., a cashier in the supermarket vs. a farmer). For simplicity, we return to the simpler case without a latent period. Let us suppose we have $n$ populations that can interact with (i.e., infect) one another. The equations describing their temporal behavior will then be

\begin{eqnarray}

{d I_1 \over dt} &=& \beta_{11} I_1 + \beta_{12} I_2 + \ldots + \beta_{1n} I_n - \gamma I_1 \nonumber \\

{d I_2 \over dt} &=& \beta_{21} I_1 + \beta_{22} I_2 + \ldots + \beta_{2n} I_n- \gamma I_2 \nonumber \\

&\vdots & \nonumber \\

{d I_n \over dt} &=& \beta_{n1} I_1 + \beta_{n2} I_2 + \ldots + \beta_{nn} I_n- \gamma I_n

\end{eqnarray}

If we now guess exponential behavior for the solution, namely, $I_i \propto \exp(r t)$, we get

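\begin{equation}

\newcommand{\matr}[1]{\mathbf{#1}}

\left[\boldsymbol\beta - (r + \gamma) \matr{1}\right] \matr{I} = 0,

\end{equation}

where $\mathbf{I} = (I_1, \ldots, I_n)$ is the vector of infected in the different populations and $\mathbf{1}$ is the identity matrix. This means that the values of $r + \gamma$ are the eigenvalues of the interaction (infection coefficient) matrix $\boldsymbol\beta$.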

This boils down to the question of what the eigenvalues of random matrices are. The easiest way to study their behavior is simply to run numerical "experiments".

As a sanity check, the first case to consider is one in which all the infection coefficients are equal. This amounts to taking the simple case we studied before and partitioning the population, which should not change anything. Suppose the groups are equally sized. In such a case, $\beta_{ij} = \beta_0 /n$. The eigenvalues one obtains numerically are $n-1$ zeros and one $\beta_0$, as expected, so that $r = \beta_0 - \gamma$ as in the simple model.
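The sanity check with equal coefficients takes only a few lines. A sketch using NumPy, with an illustrative $n = 50$ groups and $\beta_0 = 0.45$ day$^{-1}$ (both arbitrary choices):

```python
import numpy as np

n, beta0 = 50, 0.45                 # number of groups and total infection rate (illustrative)
beta = np.full((n, n), beta0 / n)   # equally sized groups with identical coefficients
eigs = np.linalg.eigvalsh(beta)     # symmetric matrix: real eigenvalues, ascending order
print(eigs[-1])                     # the single non-zero eigenvalue, equal to beta0
print(np.abs(eigs[:-1]).max())      # the other n - 1 eigenvalues vanish up to round-off
```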

For the next cases, we consider $\beta_{ij}$'s that are random. Since the $\beta_{ij}$ have to be non-negative (no one can "uninfect" an infected patient), we can draw them from a log-normal distribution.

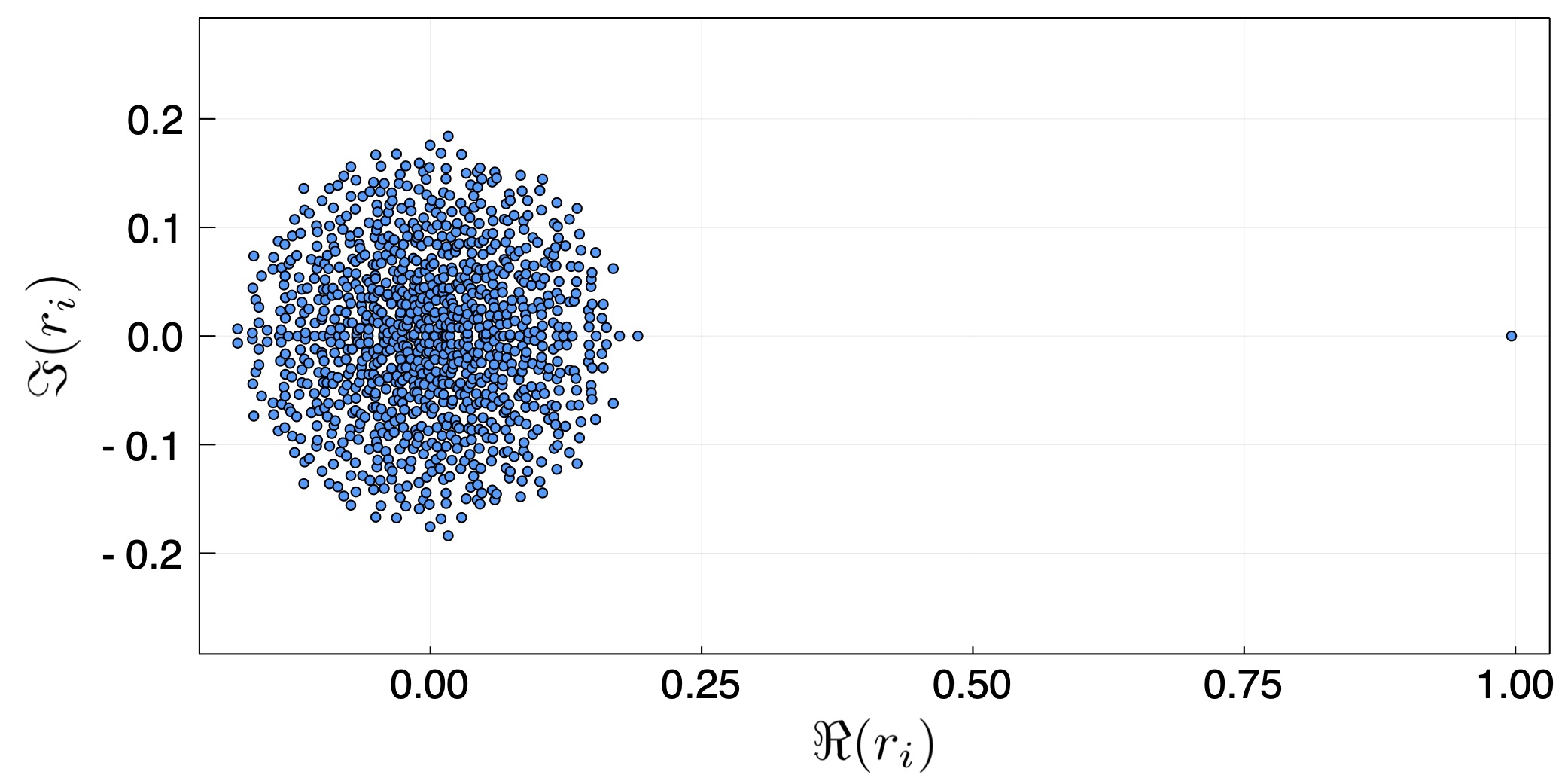

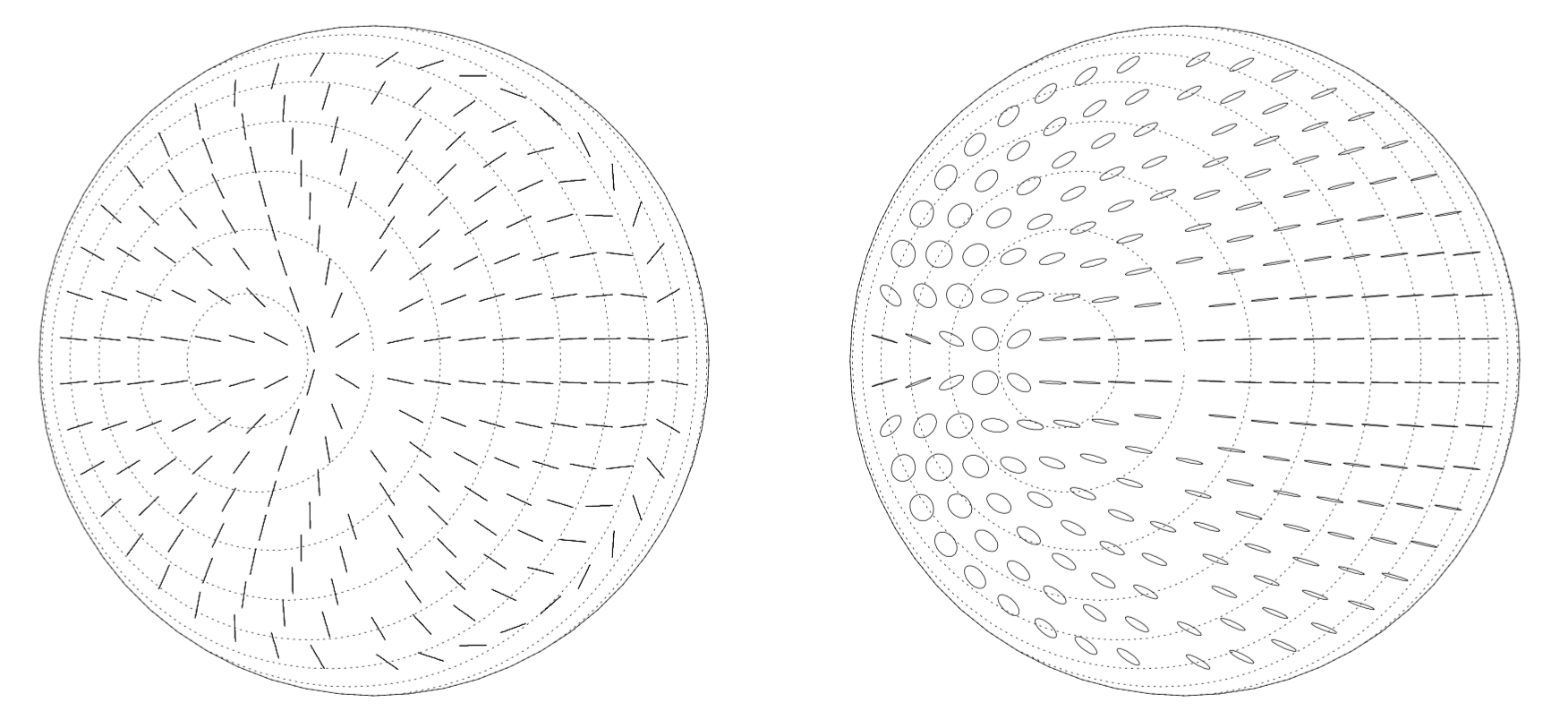

The first random case we take is a general, non-symmetric matrix. In principle, there is no reason why the coefficients should be symmetric, that is, $\beta_{ij}=\beta_{ji}$. When two people interact, the probability that one will infect the other is not necessarily the same in both directions, either because of different habits (one washes her hands while the other doesn't), or because of an asymmetric interaction (e.g., a person providing food vs. a person eating it). Fig. 1 depicts the eigenvalues of a 1000 by 1000 interaction matrix $\boldsymbol\beta$, normalized such that $n \overline{\beta_{ij}} = 1$. We see that all eigenvalues but one fill a disk around the origin, while the remaining eigenvalue is unity. In fact, in some realizations it can be larger than unity.

The first interesting takeaway point is that even if the interactions are random, the average interaction sets the maximal growth rate, and it dominates the solution very early in the evolution. Namely, the initial conditions will cause oscillatory behavior (because the eigenvalues have imaginary components); however, after a few e-folds at most, the largest eigenmode, with an eigenvalue of unity (as the average was normalized), will dominate the growth. Without normalization, the largest eigenvalue is $n \overline{\beta_{ij}}$, i.e., $r_{max} = n \overline{\beta_{ij}} - \gamma$.
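A sketch of one such numerical experiment, assuming NumPy, log-normal entries with $\sigma = 1$, a $500 \times 500$ matrix (smaller than in fig. 1, to keep it fast) and a fixed random seed; all of these are arbitrary choices. It compares the leading eigenvalue with the normalized average, and the radius of the remaining disk with the circular-law estimate $\sqrt{n}\,\mathrm{std}(\beta_{ij})$:

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n, sigma = 500, 1.0                           # matrix size and log-normal width (assumed)
x = rng.lognormal(mean=0.0, sigma=sigma, size=(n, n))
beta = x / (n * x.mean())                     # normalize so that n * mean(beta_ij) = 1
eigs = np.linalg.eigvals(beta)                # general (non-symmetric) eigenproblem
order = np.argsort(-np.abs(eigs))
leading = eigs[order[0]]                      # Perron eigenvalue: real and close to 1
bulk_radius = np.abs(eigs[order[1:]]).max()   # radius of the disk holding all the others
expected_radius = np.sqrt(n) * beta.std()     # circular-law estimate of that radius
print(leading, bulk_radius, expected_radius)
```

Because the bulk radius shrinks like $1/\sqrt{n}$ while the leading eigenvalue stays at the average, the leading mode separates ever more clearly from the rest as $n$ grows.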

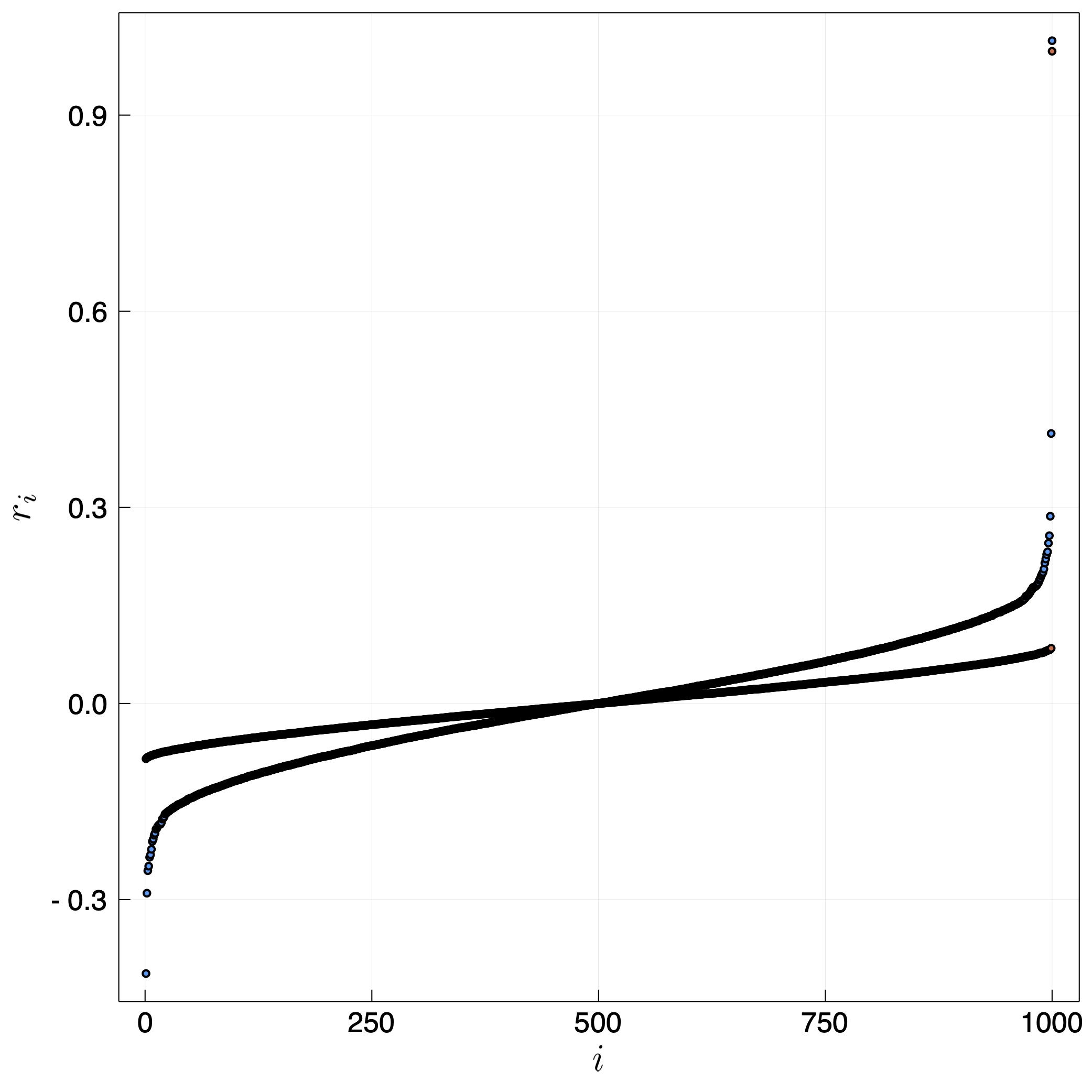

The second random case is a symmetric $\boldsymbol\beta$, which gives rise to real eigenvalues (in epidemiological terms, persons A and B have the same probability of infecting each other if one of them is sick).

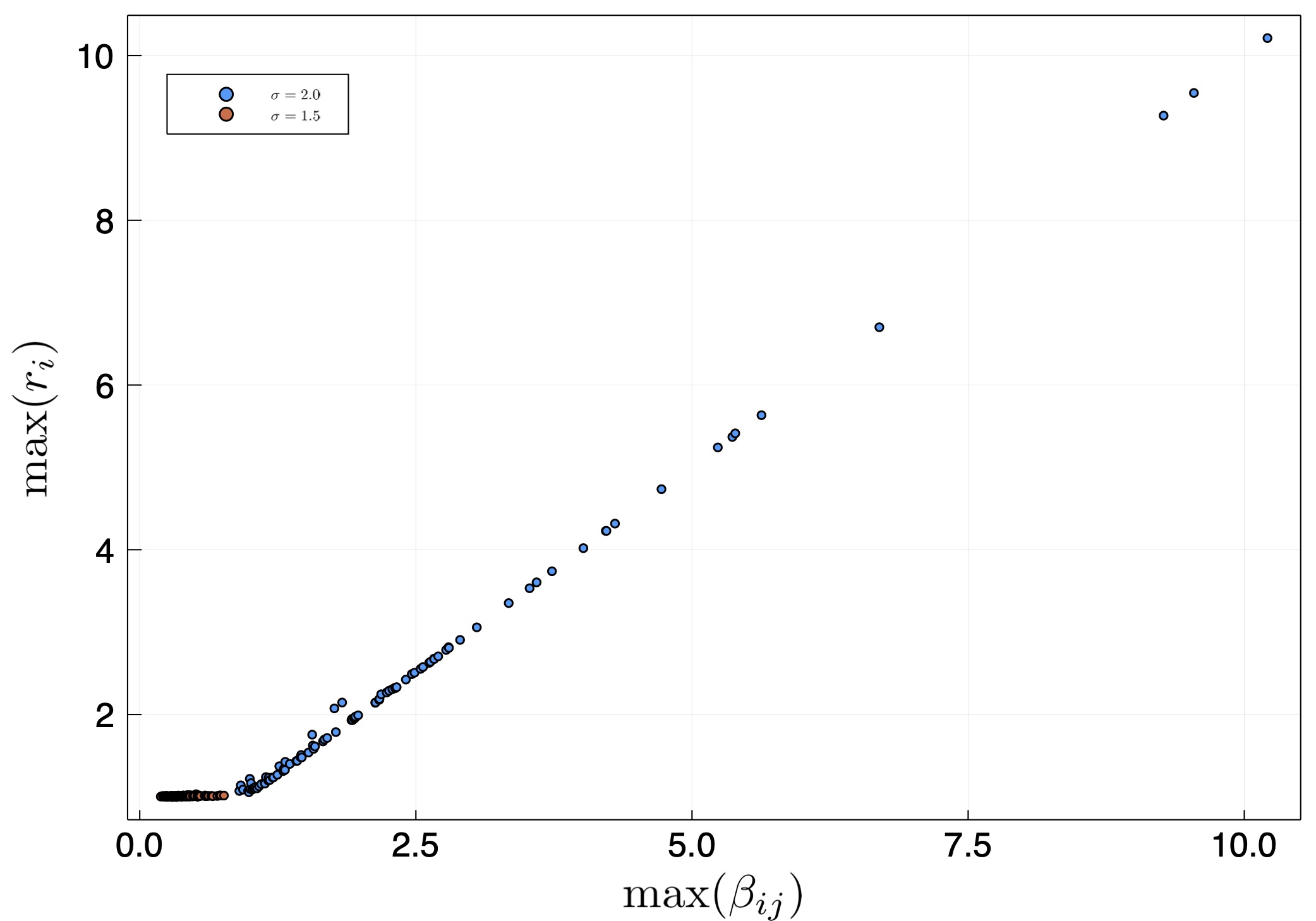

The most interesting aspect is that in some realizations we find that the largest eigenvalue can be larger than unity. We therefore plot in fig. 3 the largest eigenvalue in many random realizations. We find that if the largest element in $\beta_{ij}$ is larger than unity, then the largest eigenvalue is roughly the largest element. Otherwise, it is unity. Interestingly, the distribution width doesn't change this, it only changes the probability that there will be a realization with a very large $\beta_{ij}$.
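The super-spreader effect can be reproduced by planting one large coefficient in an otherwise random symmetric matrix. In this sketch (NumPy; a log-normal background normalized to $n\overline{\beta_{ij}} = 1$; the planted pair $\beta_{01} = \beta_{10}$ is a hypothetical stand-in for a super-spreading pair), the largest eigenvalue should stay close to unity when the planted value is below one and track the planted value when it exceeds one:

```python
import numpy as np


def largest_eigenvalue(n=500, sigma=1.0, spike=None, seed=0):
    """Largest eigenvalue of a symmetric log-normal infection matrix.

    The matrix is normalized so that n * mean(beta_ij) = 1. If `spike` is
    given, the symmetric pair beta_01 = beta_10 is overwritten with it.
    """
    rng = np.random.default_rng(seed)
    x = rng.lognormal(mean=0.0, sigma=sigma, size=(n, n))
    x = 0.5 * (x + x.T)                     # symmetric: beta_ij == beta_ji
    beta = x / (n * x.mean())
    if spike is not None:
        beta[0, 1] = beta[1, 0] = spike     # hypothetical super-spreading pair
    return np.linalg.eigvalsh(beta)[-1]     # real eigenvalues in ascending order


for spike in (None, 0.5, 5.0):
    print(spike, round(float(largest_eigenvalue(spike=spike)), 3))
```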

This result implies that the internal interaction structure is not critical unless there is a super-spreader, namely, someone or some group whose probability of infecting others is larger than the reciprocal of its size in the relevant population. For example, suppose a town has 1000 people and 10 delivery guys. If the probability that a single delivery guy will infect someone is larger than the probability that a random person will infect another random person by a factor of more than 100, then the growth exponent will be larger than the exponent obtained from the average infection coefficient. It will correspond to delivery guys infecting average people who infect other delivery guys, and so on.


Modeling the COVID-19 / Coronavirus pandemic – 2. Simple Models 9 Apr 2020 10:47 AM

Armed with the data on the coronavirus such as the serial interval, incubation period, and the base growth rate, we are now in a position to start modeling the pandemic. Note that as the title suggests, these are simple models. Any conclusions drawn from this specific page should be taken with a grain of salt. More realistic modeling will be carried out in subsequent posts.

SIR - A very simple model

Using the above numbers, we are pretty much ready to start modeling the pandemic. We start with the simplest model that can encapsulate the exponential growth.

The simplest model for the pandemic growth is the well-known SIR model, which includes the number of susceptible (uninfected) people $S$, the total population $N$, the number of infected and contagious individuals $I$, and the number of recovered individuals $R$. The set of ordinary differential equations (ODEs) describing the behavior is:

\begin{eqnarray}

{dS \over dt} &=& - \beta \left(S \over N \right) I , \\

{dI \over dt} &=& + \beta \left(S \over N \right) I - \gamma I, \\

{dR \over dt} &=& + \gamma I .

\end{eqnarray}

Here $\beta$ is the transmission coefficient, which depends on social behavior and, of course, on some inherent characteristics of the virus. $\gamma$ is the recovery rate, or more generally the rate at which a contagious person leaves the contagious state (e.g., gets hospitalized or quarantined), in units of one over time.

These equations are nonlinear because when a large fraction of the population gets infected, $S/N$ starts decreasing, quenching the epidemic. We want (at least at first) to better understand the behavior when only a small fraction of the population is infected.

Thus, the equation of interest, assuming $S/N \approx 1$, is

\begin{equation}

{dI \over dt} = + \beta I - \gamma I.

\end{equation}

If we guess an exponential behavior (since it is a homogeneous linear ODE) of the form $X \propto \exp(r t)$ (where $X$ is any variable), we find:

\begin{equation}

r I = (\beta - \gamma)I ~~~\rightarrow~~~ r = \beta - \gamma.

\end{equation}

This immediately tells us that the infection can grow and become an epidemic if $\beta$ is larger than $\gamma$.

In fact, we can relate $r$ to the basic reproduction number $R_0$, which is the average number of people infected by a single infectious individual at the start of the outbreak (before any measures are taken). It is

\begin{equation}

R_0 = \int_0^{\infty} \beta \exp(-\gamma t) dt = {\beta \over \gamma} = {r + \gamma \over \gamma}.

\end{equation}

This is because the probability that an infected individual remains contagious at time $t$ is proportional to $\exp(-\gamma t)$.

If we compare our results to the nominal growth rate of 0.3 ± 0.07 day$^{-1}$ and take $\gamma$ to be the reciprocal of the serial interval, i.e., 1 / (6.6 ± 1.3) day$^{-1}$ (assuming the errors on the fit for the distribution are uncorrelated), we obtain that $R_0$ = 3.0 ± 0.6. This is the average number of infections from a contagious person. We also find $\beta$ = 0.46 ± 0.07 day$^{-1}$.
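To check that the linearized rate $r = \beta - \gamma$ is what the full nonlinear SIR equations produce early on, one can integrate them directly. A minimal sketch with a hand-written fourth-order Runge-Kutta step, assuming a population of $10^7$, 10 initial infected, $\gamma = 1/6.6$ day$^{-1}$ and $\beta = r + \gamma$ with $r = 0.3$ day$^{-1}$; the helper name `simulate_sir` is hypothetical:

```python
import math


def simulate_sir(beta, gamma, n_pop, i0, days, dt=0.01):
    """Integrate dS/dt = -beta S I / N, dI/dt = beta S I / N - gamma I with RK4.

    Returns a list of I values sampled once per day (index = day).
    R is not needed because it does not feed back on S or I.
    """
    def deriv(s, i):
        new_infections = beta * (s / n_pop) * i
        return -new_infections, new_infections - gamma * i

    s, i = n_pop - i0, float(i0)
    steps_per_day = round(1.0 / dt)
    daily = [i]
    for step in range(1, days * steps_per_day + 1):
        k1 = deriv(s, i)
        k2 = deriv(s + 0.5 * dt * k1[0], i + 0.5 * dt * k1[1])
        k3 = deriv(s + 0.5 * dt * k2[0], i + 0.5 * dt * k2[1])
        k4 = deriv(s + dt * k3[0], i + dt * k3[1])
        s += dt / 6.0 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        i += dt / 6.0 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        if step % steps_per_day == 0:
            daily.append(i)
    return daily


r_obs = 0.3               # observed early growth rate, day^-1
gamma = 1.0 / 6.6         # recovery rate = 1 / serial interval, day^-1
beta = r_obs + gamma      # from r = beta - gamma
daily = simulate_sir(beta, gamma, n_pop=1e7, i0=10, days=20)
r_fit = math.log(daily[20] / daily[10]) / 10.0   # slope of ln I between days 10 and 20
print(f"R0 = {beta / gamma:.2f}, fitted r = {r_fit:.4f} day^-1")
```

Over the first 20 days $S/N$ stays within about $10^{-3}$ of unity, so the fitted slope agrees with $\beta - \gamma$, and $R_0 = \beta/\gamma \approx 3.0$.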

Based on this simple model, we see that in order to guarantee overcoming the pandemic growth, we need to make $\beta - \gamma$ negative. This requires either reducing $R_0$ (i.e., $\beta$) by a factor of 3 or even 4 (effectively making the infected people less contagious), which is not really reasonable, or increasing $\gamma$, which implies shortening the time that an infected person is contagious (by quarantining him), or a combination of both. Let us see how this changes if we introduce a latent period during which the person is non-contagious.

Adding a non-contagious latent period

One generalization of the simplest model is to include a period during which the infected person is not yet contagious, namely, a latent period. (This isn't the clinical incubation period, which is the time until the onset of symptoms, as people can be contagious even before symptoms develop, if they develop at all.) Thus, our model now includes the number of uninfected people $S$, the number $L$ of infected people in the "latent period" who are still noncontagious, the number $C$ of contagious infected people, and the number of recovered individuals $R$. The equations describing the behavior here will be

\begin{eqnarray}

{dS \over dt} &=& - \beta \left(S \over N \right) C , \\

{dL \over dt} &=& + \beta \left(S \over N \right) C - \lambda L, \\

{dC \over dt} &=& + \lambda L - \gamma C, \\

{dR \over dt} &=& + \gamma C .

\end{eqnarray}

Here, $\lambda$ is the rate at which infected people become contagious.

We again guess exponential behavior for the linear case (for which $\left(S / N \right) \rightarrow 1$), and get

\begin{eqnarray}

r L &=& + \beta C - \lambda L, \\

r C &=& + \lambda L - \gamma C.

\end{eqnarray}

Because this is a homogeneous set of equations, it is an eigenvalue problem. The solution is obtained when the determinant vanishes:

\begin{equation}

\left | \begin{array}{c c}

\lambda + r & - \beta \\

- \lambda & \gamma +r \\

\end{array} \right| = 0

\end{equation}

This gives two solutions. The positive one (describing the pandemic) is:

\begin{equation}

r = {1\over 2} \left( -(\lambda + \gamma) + \sqrt{(\lambda - \gamma)^2 + 4 \lambda \beta } \right)

\end{equation}

We can invert this relation to find $\beta$ given the growth rate $r$ which we measure:

\begin{equation}

\beta = { (\lambda + r)(r+\gamma)\over \lambda}.

\end{equation}

For a very short latent period, the rate at which noncontagious become contagious, $\lambda$, is very large and we recover the equation from the previous section.
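Both the growth-rate formula and its inverse are one-liners. A sketch with hypothetical function names and illustrative rates, which also checks the $\lambda \to \infty$ limit and the threshold $\beta = \gamma$ at which $r$ vanishes:

```python
import math


def growth_rate_latent(beta, lam, gamma):
    """Larger root of (lam + r)(gamma + r) = lam * beta:
    r = (-(lam + gamma) + sqrt((lam - gamma)^2 + 4 lam beta)) / 2."""
    return 0.5 * (-(lam + gamma) + math.sqrt((lam - gamma) ** 2 + 4.0 * lam * beta))


def beta_from_growth(r, lam, gamma):
    """Inverse relation: beta = (lam + r)(r + gamma) / lam."""
    return (lam + r) * (r + gamma) / lam


lam, gamma = 0.5, 0.2                                  # illustrative rates, day^-1
beta = beta_from_growth(0.3, lam, gamma)
print(beta, growth_rate_latent(beta, lam, gamma))      # round trip gives back r = 0.3
print(growth_rate_latent(0.45, 1e4, 0.15))             # very short latency: close to beta - gamma
print(growth_rate_latent(gamma, lam, gamma))           # beta = gamma: r = 0
```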

We can also see that we still obtain $r=0$ for $\beta = \gamma$. However, for other values of $\beta$ we get $|r(\lambda)| < |r(\lambda \rightarrow \infty)|$. This is because the latent period slows things down without affecting the overall behavior of the system. Once a person becomes contagious, it is a race between the infection rate $\beta C$ and the recovery rate $\gamma C$. For this reason, the basic reproduction number $R_0$ is still

\begin{equation}

R_0 = \int_0^{\infty} \beta \exp(-\gamma t) dt = {\beta \over \gamma}.

\end{equation}

If we consider the serial interval distribution we derived in the background data post, we see that taking $1/\lambda \sim 2 \pm 1$ day is reasonable. If we now take $1/\lambda + 1/\gamma = 6.6\pm 1.3$ days, we get

\begin{eqnarray}

\beta &=& 0.75 \pm 0.22 \mathrm{~day}^{-1}\\

R_0 & = & 4.6 \pm 1.6.

\end{eqnarray}

Namely, we obtain a higher basic reproduction number. This is because the introduction of a latent period (of order 2 days) implies that for the same infection and recovery rates, the overall growth rate is slower. To compensate for it, the infection rate and the basic reproduction number have to be higher in order to produce the same growth rate $r$. In the next post we will consider a distribution of infection coefficients $\beta$, and in the one after that we will calculate the infection with a more appropriate time-dependent infection rate.

Adding Quarantining

The next step is to add the effects of quarantining sick people. If we want to stay within the framework of linear equations, the easiest way to incorporate quarantining is to add a rate $\kappa$ at which an infected person is quarantined. In fact, this rate can differ between the latent period (when the person hasn't developed symptoms) and the contagious period (when he could have). Thus, we introduce $\kappa_{L}$ and $\kappa_{C}$ and now consider the equations:

\begin{eqnarray}

{dL \over dt} &=& - \lambda L - \kappa_{L} L + \beta C , \\

{dC \over dt} &=& + \lambda L - \gamma C - \kappa_{C} C.

\end{eqnarray}

If we now guess ${L,C \propto \exp(r t)}$, we again find ourselves with an eigenvalue problem, of which the solution is:

\begin{eqnarray}

r &=& {1\over 2}\left[-(\lambda + \kappa_{L}) - (\gamma +\kappa_{C}) \right. \nonumber \\
&& \left. + \sqrt{\left((\lambda+\kappa_{L}) - (\gamma +\kappa_{C})\right)^2 + 4 \lambda \beta}\,\right].

\end{eqnarray}

This gives $r=0$ for

\begin{equation}

\beta_{crit} = {(\lambda + \kappa_{L}) (\gamma + \kappa_{C}) \over \lambda}.

\end{equation}
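The same result follows from treating the linearized $(L, C)$ system as a $2\times2$ eigenvalue problem directly. In this sketch (pure Python; hypothetical function names; the parameter values in the usage are illustrative, not the fitted ones) the largest eigenvalue is computed from the trace and determinant, and it vanishes at $\beta_{crit}$:

```python
import math


def growth_rate_matrix(beta, lam, gamma, kappa_l=0.0, kappa_c=0.0):
    """Largest eigenvalue of d/dt (L, C) = M (L, C), where
    M = [[-(lam + kappa_l), beta], [lam, -(gamma + kappa_c)]]."""
    m11, m12 = -(lam + kappa_l), beta
    m21, m22 = lam, -(gamma + kappa_c)
    trace = m11 + m22
    det = m11 * m22 - m12 * m21
    # trace^2 - 4 det = (m11 - m22)^2 + 4 m12 m21 >= 0, so both eigenvalues are real
    return 0.5 * (trace + math.sqrt(trace * trace - 4.0 * det))


def beta_crit(lam, gamma, kappa_l=0.0, kappa_c=0.0):
    """Infection coefficient at which the largest eigenvalue (growth rate) is zero."""
    return (lam + kappa_l) * (gamma + kappa_c) / lam


lam, gamma, kappa_c = 0.5, 0.2, 0.5    # illustrative values only, day^-1
bc = beta_crit(lam, gamma, kappa_c=kappa_c)
for beta in (0.9 * bc, bc, 1.1 * bc):
    r = growth_rate_matrix(beta, lam, gamma, kappa_c=kappa_c)
    print(f"beta = {beta:.3f}: r = {r:+.4f}")
```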

If, for example, we cannot detect people in the latent phase ($\kappa_L = 0$), and it takes 2 days to discover that people might be infected with the coronavirus, then $\kappa_C = 1/2$ day$^{-1}$. We also have $1/\lambda = 2 \pm 1$ day and $1/\lambda + 1/\gamma = 6.6 \pm 1.3 $ days, which leads to $\beta_{crit} = 0.664 \pm 0.036$ day$^{-1}$. However, the value of $\beta$ without social distancing and other such measures is $\beta \approx 0.75$ day$^{-1}$ (in the simple model with a latent / contagious period). In other words, quarantining 2 days after a person becomes infectious, which is 4 days after he is infected, falls just short of raising $\beta_{crit}$ to the base value, and is therefore probably not enough to stop the pandemic without additional means (e.g., social distancing). We will return to this calculation once we have a better description of $\beta$, allowing it to be a function of the time since infection.


Modeling the COVID-19 / Coronavirus pandemic – 1. Background Data 7 Apr 2020 1:40 PM

This is the first in a series of posts in which I study the COVID-19 (coronavirus) pandemic. My original goal was to understand the behavior of the pandemic. As a scientist, my curiosity doesn't let me leave such problems untouched. I wanted to know what the possible outcome scenarios are and what steps are required to reach them. Is there a reasonable solution in which we avoid the collapse of health systems and/or economies? Although I am sure (well, I hope) that professional epidemiologists know all of this, I decided to share my insights with whoever is interested. A note of caution: a first-year college education in the harder sciences or engineering is needed to appreciate everything.

Just as background: I am a professor of physics at the Hebrew University. My bread and butter are problems in astrophysics (massive stars, cosmic rays), as well as understanding how the sun has a large effect on climate (through modulation of the cosmic ray flux) and its repercussions on our understanding of 20th century climate change, and climate in general.

As I write this text (early April), the pandemic is raging. It has infected over 1.5 million people worldwide and killed over 80,000. In many places it is still growing exponentially. In Israel (where I live), the situation appears to be getting under control, with around 10,000 infected, a daily infection growth of order a few percent (and decreasing), and 70 or so dead, i.e., just over half a percent (which is actually good compared with other countries, as can be seen here, for example).

Anyway, the goal of these notes is to model the pandemic, understand it, and hopefully reach positive, constructive conclusions. These are especially important if we are to understand how to leave the lockdowns most of us are now in. In order to do so, we need some useful data. So, the rest of this post is dedicated to summarizing various useful results I found in various preprints, as well as the pandemic growth data from different countries that I plotted using available data. The subsequent posts will be dedicated to understanding the pandemic with models of various complexity.

Number of Infected and its growth rate in different countries.

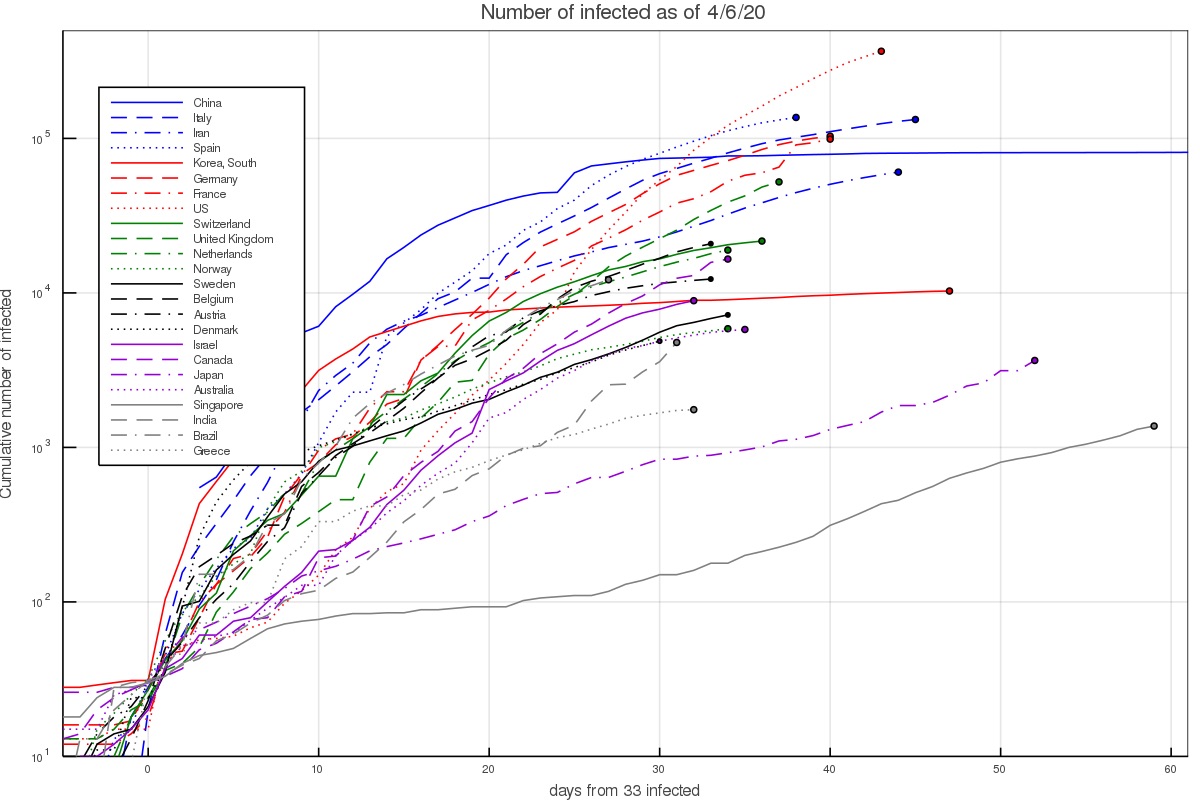

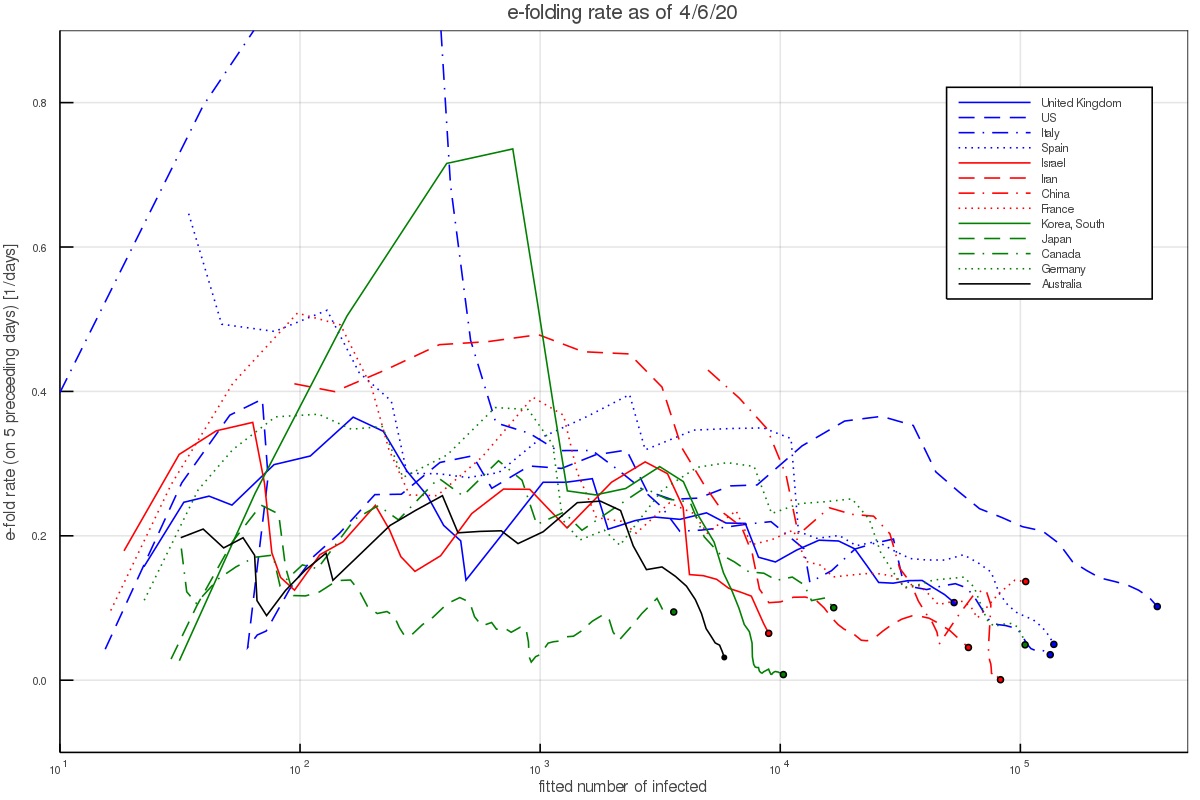

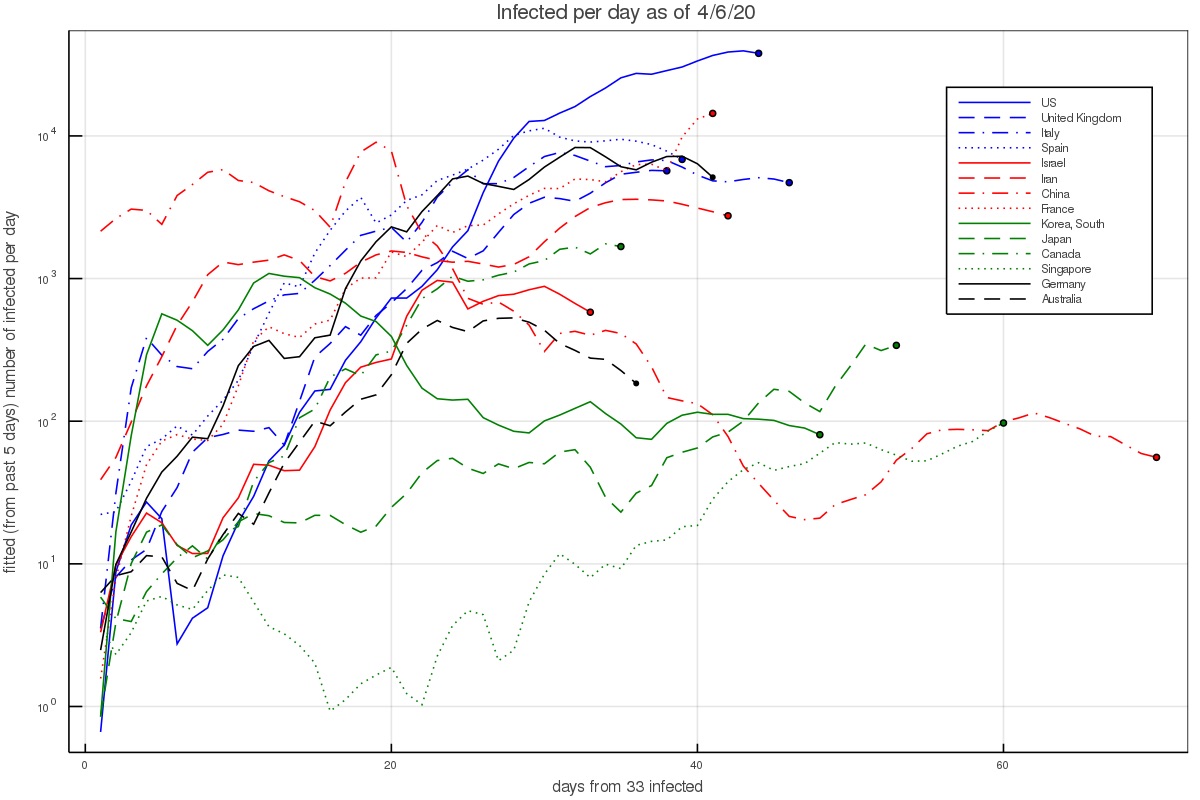

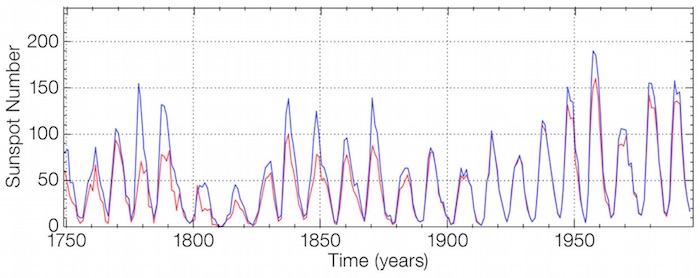

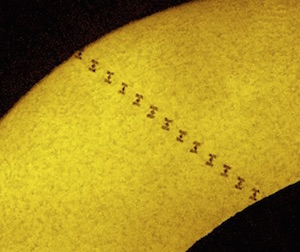

Data on the infection in different countries is collected by the Johns Hopkins University Center for Systems Science and Engineering and kept in a data repository on GitHub. This data can then be used to plot the number of infected as a function of time, as done in fig. 1. The majority of Western countries appear to have grown from 100 to 1000 infected in 7.5 ± 2 days, or a rate of about 0.305 ± 0.065 e-folds per day.
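The conversion from a tenfold-growth time to an e-folding rate is $r = \ln(10)/\Delta t$. A short sketch for the central value; the doubling time $\ln 2 / r$ is added only for intuition:

```python
import math

days_100_to_1000 = 7.5                            # central value quoted above
r = math.log(1000 / 100) / days_100_to_1000       # e-folds per day
doubling_time = math.log(2) / r                   # days
print(f"r = {r:.3f} per day, doubling time = {doubling_time:.2f} days")
# prints: r = 0.307 per day, doubling time = 2.26 days
```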

There are several interesting exceptions. First, there are countries in which the growth was notably faster. In Iran this can be explained by the dense environment and the significant interaction at religious sites. The infection growth started in the city of Qom, which is an important Shia center. In South Korea it started with a super-spreader in the city of Daegu (at a Christian religious center). In Italy and Spain, the fast initial growth is attributed to the Champions League match between Atalanta of Bergamo and Valencia, which took place in Milan.

On the other hand, there are several countries with slower growth. These include Israel, which already had quarantine measures in place before the first community infections took place, as well as Australia and Canada. The growth could be slower in the latter countries either because the weather is notably hotter/colder, or perhaps because the typical interaction in those societies is lower. The lower infection in Japan and Singapore can probably be attributed to social norms requiring, for example, that anyone with cold or flu-like symptoms wear a face mask. In Japan, without any quarantining or lockdowns, the growth rate was around 0.075 ± 0.025 e-folds per day.

Another way of depicting the growth is to calculate the growth rate on each day, based on the preceding 5 days. This results in figs. 2 and 3, which depict the growth rate and the number of new infections per day based on the 5-day fit (and thus average out some of the day-to-day variations). During the pandemic, the figures are updated almost every day and published on Twitter under my handle @nirshaviv.
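A minimal sketch of such a rolling fit in pure Python, assuming the rate on a given day is the least-squares slope of $\ln I$ over a 5-day window ending on that day (whether the window includes the current day is an assumption); the function name is hypothetical:

```python
import math


def rolling_growth_rate(counts, window=5):
    """Least-squares slope of ln(counts) over a `window`-day window ending on each day.

    counts: positive daily cumulative numbers of infected.
    Returns a list aligned with counts; None where the window is still incomplete.
    """
    xs = range(window)
    x_mean = (window - 1) / 2.0
    sxx = sum((x - x_mean) ** 2 for x in xs)
    rates = [None] * len(counts)
    for end in range(window - 1, len(counts)):
        ys = [math.log(c) for c in counts[end - window + 1 : end + 1]]
        y_mean = sum(ys) / window
        sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
        rates[end] = sxy / sxx   # e-folds per day
    return rates


series = [100 * math.exp(0.3 * day) for day in range(10)]   # synthetic, exactly exponential
print(rolling_growth_rate(series)[4:])
```

On an exactly exponential series every complete window returns the input rate (0.3 here); on real data the window averages out some of the day-to-day noise.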

Fig. 2 shows that when there are a few hundred to a few thousand people, which is after the pandemic took hold in a country but before countries took measures or have them affect the growth, the aforementioned rate consistently describes the data (except for the outlier countries mentioned above).

Incubation period

The incubation period is the time between infection (exposure to an infected person) and the appearance of the first symptoms. Given that self-isolation can take place after the first symptoms show up, the incubation period is crucial for estimating whether a corona outbreak can be reined in naturally.

For the incubation period we take the probability distribution function fitted by Lauer et al. 2020. They fitted several functional forms, which give similar fits. We shall work with the Γ-distribution for consistency with the serial interval fits we use below. Their best fit is a gamma distribution with a shape parameter of 5.807 (95% CI of 3.585-13.865) and a scale parameter of 0.948 days (95% CI of 0.368-1.696 days). This gives a mean (shape times scale) of about 5.5 days; the median incubation period reported by Lauer et al. is 5.1 days.

These results are consistent with Li et al. 2020, who find a mean incubation period (time between primary infection and the appearance of symptoms) of 5.2 days (95% CI of 4.1 to 7.0 days).

Serial Interval and infection as a function of time

Another extremely important piece of information is how infectious people with the coronavirus are, and how this depends on time.

By limiting themselves to the most reliable infector-infectee pairs, Nishiura et al. 2020a find a mean serial interval of 4.6 days (95% CI of 3.5 to 5.9 days). This is somewhat shorter than Li et al. 2020, who find a mean serial interval (time between primary and secondary infection) of 7.5 days (95% CI of 5.3 to 19.0 days).

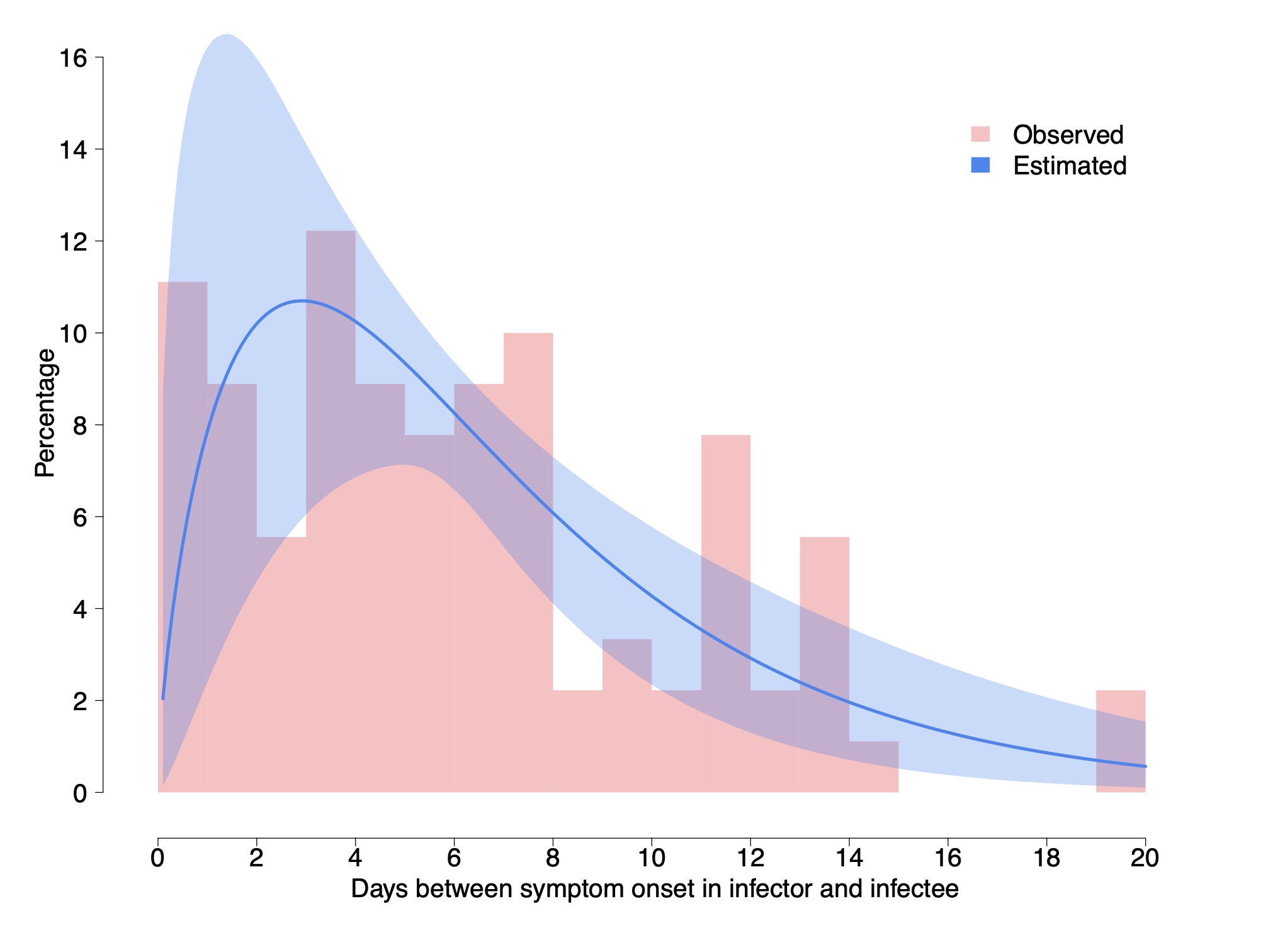

Cereda et al. 2020 carry out an analysis of over 5000 cases from the early outbreak in Lombardy, Italy, from which they derive 90 pairs of cases with a known infector-infectee relationship, a much larger sample than those above. They derive a distribution of intervals and fit it with a Γ distribution having a shape parameter of 1.87 ± 0.26 and a rate of 0.28 ± 0.04 day$^{-1}$. This gives a mean of 6.7 days and a median of 5.5 days. In what follows, we work with this distribution and these values.

We do note, however, that although the authors call it the serial interval, it is the interval between the appearance of symptoms and not the interval between the infections. Although the two have the same mean, the interval between the appearance of symptoms should have a somewhat wider distribution because the incubation periods in the infector and infectee are not the same. Using the data on the incubation period, we can correct for this.

The variance of the Γ distribution describing the interval between the appearance of symptoms, with the above shape and rate, is 23.9 day$^2$, while that of the incubation period is 6.46 day$^2$. The variance of the serial interval should be $\sigma_{serial-int}^2 \approx \sigma_{sympt-int}^2 - (1~\mathrm{to}~2)~\sigma_{incub}^2 = 11.0~\mathrm{to}~ 17.4$ day$^2$. The factor is 1 if the infected person being infectious is correlated with developing symptoms, and 2 if there is no correlation. We therefore take the middle ground, a variance of around 14.2 ± 3.2 day$^2$. If we keep the mean at 6.7 days, we need a shape parameter of 3.1 ± 0.8 and a rate of 0.47 ± 0.12 day$^{-1}$.
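The correction above is a moment-matching exercise: for a Γ distribution with shape $a$ and rate $b$, the mean is $a/b$ and the variance $a/b^2$, so $a = \mathrm{mean}^2/\mathrm{variance}$ and $b = \mathrm{mean}/\mathrm{variance}$. A sketch of the arithmetic for central values only, taking the 6.46 day$^2$ incubation variance as quoted and no error propagation:

```python
SHAPE_SYMPT, RATE_SYMPT = 1.87, 0.28  # Cereda et al. fit to the symptom-onset interval
VAR_INCUB = 6.46                      # incubation-period variance quoted above, day^2
MEAN = 6.7                            # mean interval kept fixed, days

var_sympt = SHAPE_SYMPT / RATE_SYMPT**2                 # Gamma variance = shape / rate^2
var_correlated = var_sympt - 1 * VAR_INCUB              # factor 1: correlated case
var_uncorrelated = var_sympt - 2 * VAR_INCUB            # factor 2: uncorrelated case
var_serial = 0.5 * (var_correlated + var_uncorrelated)  # middle ground

shape = MEAN**2 / var_serial   # moment matching: a = mean^2 / variance
rate = MEAN / var_serial       # moment matching: b = mean / variance
print(f"var_sympt = {var_sympt:.1f}, var_serial = {var_serial:.1f}, "
      f"shape = {shape:.2f}, rate = {rate:.3f} day^-1")
```

The result is close to the quoted shape of 3.1 ± 0.8 and rate of 0.47 ± 0.12 day$^{-1}$; the small difference in the shape comes from rounding of the intermediate values.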

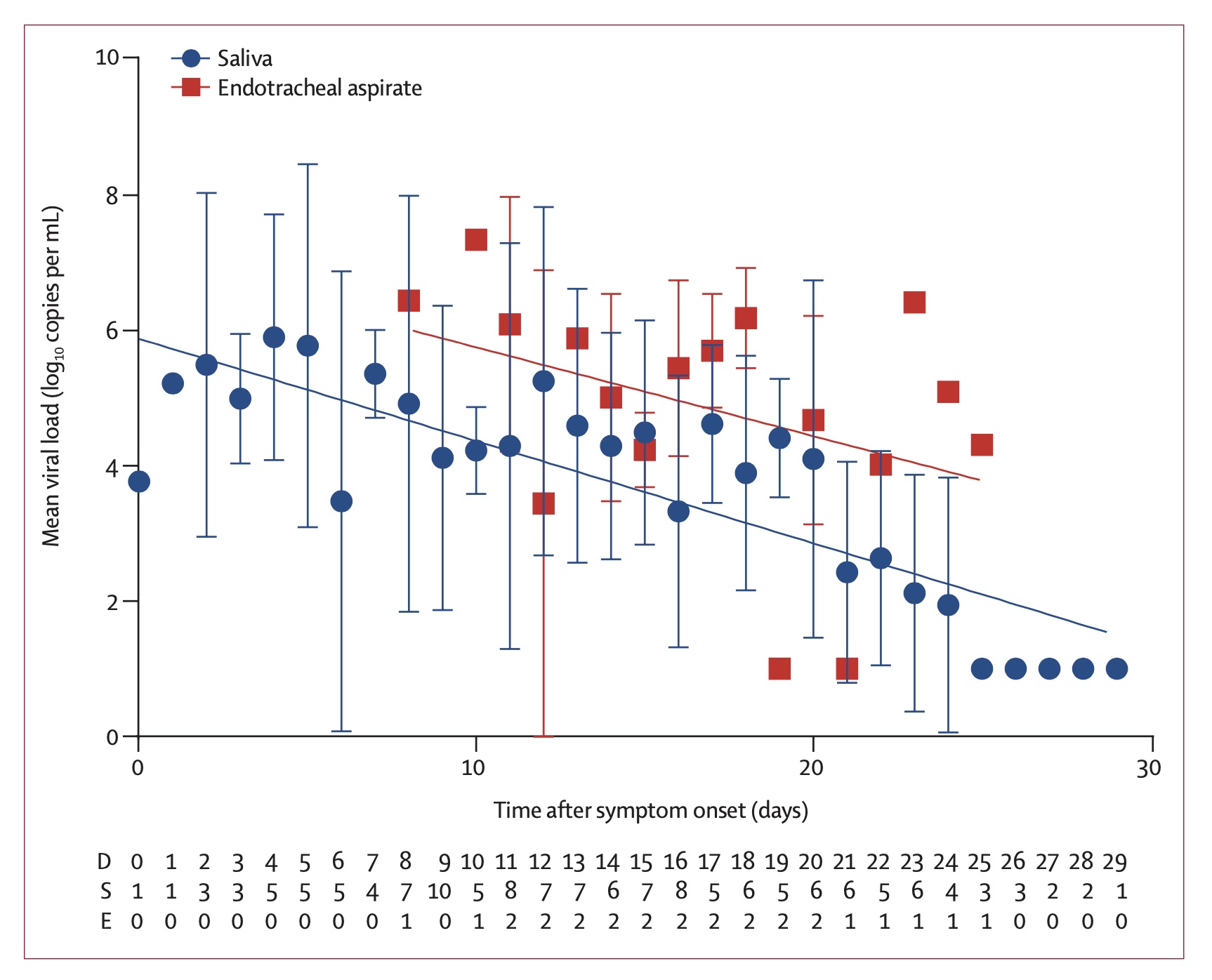

As a consistency check, this rate, which describes the exponential decay of how contagious the infected person is, can be compared with the decay of the viral load measured in infected people (To et al. 2020). It was found to be 0.15 ± 0.02 decades per day, which corresponds to a rate of 0.345 ± 0.046 e-folds per day. Note, however, that the fit there is to a simple exponential decay. To compare the above fit with the viral load decay, we fit an exponential decay to the Γ distribution (say between days 7 and 24, the range over which the viral load decay was measured and fitted) and find an offset of about 0.11. That is, the Γ distribution looks like an exponential with a rate of 0.36 ± 0.12 day$^{-1}$ over this period. It is therefore consistent with the decay of the viral load.

Fraction of asymptomatic infections

Another characteristic of the coronavirus infection which is crucial for modeling the pandemic growth is the fraction of asymptomatic infections, as these are individuals who can spread the infection without realizing that they are doing so.

The Diamond Princess cruise ship quarantined in Japan offers the possibility of analyzing a relatively complete sample for the appearance of symptoms in infected individuals. Although it requires some modeling (to account for infected passengers who would develop symptoms only after leaving the ship, given the incubation period), the value found was 17.5% (95% confidence of 15.5–20.2%, Mizumoto et al. 2020). Although the sample is relatively complete and the uncertainty small, the problem here is that the age distribution of the infected people is heavily skewed toward older ages. It is not unreasonable that younger populations (of which fewer become critically ill) are also more likely to be asymptomatic. Namely, this number could suffer from a large systematic bias.

Another, less biased estimate, based on the Japanese citizens evacuated from Wuhan, gives a fraction of 41.6% (95% confidence interval 16.7-66.7%, Nishiura et al. 2020b). This estimate, however, has a relatively large uncertainty.

Although not officially published, it has recently been circulating that the Chinese authorities estimate that one quarter don't develop symptoms. According to the head of the CDC, Dr. Robert Redfield, this number has been "pretty much confirmed" (e.g., see quote on NPR).

In what follows, we take the fraction of asymptomatic coronavirus infections to be f = 0.3 ± 0.1.

Additional posts in the series include

- Background data (this page)

- Simple Modeling

- Effects of a population with a variable infection rate

- Modeling with a time variable infection rate

- Numerical Model (coming soon!)

- Discussion and Conclusions (coming soon!)

References

- Cereda, D., Tirani, M., Rovida, F., et al. 2020, The early phase of the COVID-19 outbreak in Lombardy, Italy. https://arxiv.org/abs/2003.09320

- Lauer, S. A., Grantz, K. H., Bi, Q., et al. 2020, Annals of Internal Medicine, doi: 10.7326/M20-0504

- Li, Q., Guan, X., Wu, P., et al. 2020, New England Journal of Medicine, 382, 1199, doi: 10.1056/NEJMoa2001316

- Mizumoto, K., Kagaya, K., Zarebski, A., & Chowell, G. 2020, Eurosurveillance, 25, doi: 10.2807/1560-7917.es.2020.25.10.2000180

- Nishiura, H., Linton, N. M., & Akhmetzhanov, A. R. 2020a, International Journal of Infectious Diseases, 93, 284, doi: 10.1016/j.ijid.2020.02.060

- Nishiura, H., Kobayashi, T., Miyama, T., et al. 2020b, doi: 10.1101/2020.02.03.20020248

- To, K. K.-W., Tsang, O. T.-Y., Leung, W.-S., et al. 2020, The Lancet Infectious Diseases, doi: 10.1016/s1473-3099(20)30196-1

How Climate Change Pseudoscience Became Publicly Accepted 24 Sep 2019 12:07 PM (6 years ago)

I recently wrote an op-ed for the Epoch Times that tries to succinctly capture my main grievances with the global warming scare. Here it is again, with a few comments (and references) added at its end.

––––––––––

The climate week that is being held in New York City has urged significant action to fight global warming. Given the high costs of the suggested solutions, could it be that the suggested cure is worse than the disease?

As a liberal who grew up in a solar house, I have always been energy conscious and inclined towards activist solutions to environmental issues. I was therefore extremely surprised when my research as an astrophysicist led me to the conclusion that climate change is more complicated than we are led to believe. The disease is much more benign, and a simple palliative solution lies in front of our eyes.

To begin with, the story we hear in the media, that most of the 20th century warming is anthropogenic, that the climate is very sensitive to changes in CO2, and that future warming will therefore be large and will happen very soon, is simply not supported by any direct evidence, only a shaky line of circular reasoning. We “know” that humans must have caused some warming, we see warming, we don’t know of anything else that could have caused the warming, so it adds up.

However, there is no calculation based on first principles that leads to a large warming by CO2, none. Mind you, the IPCC (Intergovernmental Panel on Climate Change) reports state that doubling CO2 will increase the temperatures by anywhere from 1.5 to 4.5°C, a huge range of uncertainty that dates back to the Charney committee from 1979.

In fact, there is no evidence on any time scale showing that CO2 variations or other changes to the energy budget cause large temperature variations. There is, however, evidence to the contrary. Ten-fold variations in CO2 over the past half billion years have no correlation whatsoever with temperature; likewise, the climate response to large volcanic eruptions such as Krakatoa points to a low sensitivity.

Both examples lead to the inescapable upper limit of 1.5°C per CO2 doubling—much more modest than the sensitive IPCC climate models predict. However, the large sensitivity of the latter is required in order to explain 20th century warming, or so it is erroneously thought.

In 2008 I showed, using various data sets that span as much as a century, that the amount of heat going into the oceans in sync with the 11-year solar cycle is an order of magnitude larger than the relatively small effect expected from changes in the total solar output alone. Namely, solar activity variations translate into large changes in the so-called radiative forcing on the climate.

Since solar activity significantly increased over the 20th century, a significant fraction of the warming should then be attributed to the sun, and because the overall change in the radiative forcing due to CO2 and solar activity combined is then much larger, climate sensitivity should be on the low side (about 1 to 1.5°C per CO2 doubling).

In the decade following its publication, not only did the paper go uncontested, but more data, this time from satellites, confirmed the large variations associated with solar activity. In light of this hard data, it should be evident by now that a large part of the warming is not human, and that future warming from any given emission scenario will be much smaller.

Alas, because the climate community developed a blind spot to any evidence that should raise a red flag, such as the aforementioned examples or the tropospheric warming over the past two decades, which has been much smaller than models predicted, the public at large sees a very distorted view of climate change: a shaky scientific picture full of inconsistencies has become one of certain calamity.

With this public mindset, phenomena such as that of child activist Greta Thunberg are no surprise. Most bothersome however is that this mindset has compromised the ability to convey the science to the public.

One example from the past month is an interview I gave to Forbes. A few hours after the article was posted online, it was removed by the editors “for failing to meet our editorial standards”. The fact that it has become politically incorrect to have any scientific discussion has led the public to accept the pseudo-argumentation supporting the catastrophic scenarios.

Evidence for warming doesn’t tell us what caused the warming, and anyone who has to appeal to the so-called 97 percent consensus is doing so because his or her scientific arguments are not strong enough. Science is not a democracy.

Whether or not the Western world overcomes this ongoing hysteria in the near future, it is clear that on a time scale of a decade or two it will be a thing of the past. Not only will there be growing inconsistencies between model and data, but a much stronger force will change the rules of the game.

Once China realizes it cannot rely on coal anymore, it will start investing heavily in nuclear power to supply its rapidly increasing energy needs, at which point the West will not fall behind. We will then have cheap and clean energy producing carbon neutral fuel, and even cheap fertilizers that will make the recently troubling slash-and-burn agriculture redundant.

The West would then realize that global warming never was and never will be a serious problem. In the meantime, the extra CO2 in the atmosphere would even increase agriculture yields, as it has been found to do in arid regions in particular. It is plant food after all.

Comments and links:

- More about the problems with the standard picture in my summary for a debate held at the Cambridge Union.

- Specifically, more about climate sensitivity on this blog, as well as comments on the IPCC range of sensitivity.

- On Forbes removing an interview with me.

- More on the 2008 paper on how the oceans can be used to quantify the solar forcing and prove that it is large, as well as a recent discussion on its implications.

- This is an example presentation of the dual fluid reactor, a 4th generation concept of nuclear power plant producing cheap, clean and safe energy, which I learned about from Dr. Götz Ruprecht when I visited the Bundestag last year.

Critique of “Discrepancy in scientific authority and media visibility of climate change scientists and contrarians” 17 Aug 2019 1:21 PM (6 years ago)

A paper that recently received some media attention is the “Discrepancy in scientific authority and media visibility of climate change scientists and contrarians” by Alexander Michael Petersen, Emmanuel M. Vincent & Anthony LeRoy Westerling, Nature Communications, volume 10, Article number: 3502 (2019). Here is what I think of it.

The critique of this paper is going to be very short, because it has a MAJOR flaw that renders all the results totally meaningless (even as an anecdotal curiosity). The underlying problem with the whole analysis is the way that the lists were composed. Here is how they composed each list:

“Selection of contrarians (CCC). We compiled a list of 386 contrarians by merging three overlapping name lists obtained from three public sources. The first source is the list of former speakers at The Heartland Institute ICCC conference (http://climateconferences.heartland.org/speakers/) over the period 2008–present, providing a representative sample across time; the second source is the list of individuals profiled by the DeSmogblog project; and the third source is drawn from the list of lead authors of the most recent 2015 NIPCC report (the principal summary of CC denial argumentation produced in conjunction with The Heartland Institute, http://climatechangereconsidered.org/).”

“Selection of scientists (CCS). We ranked individuals’ publication profiles according to the net citations $C_i = \sum_{i \in p} c_p$ calculated by summing individual article citation totals ($c_p$) for only the individual articles (indexed by p) included within our WOS CC dataset. In this way, the CCS group is comprised of the 386 most-cited CC scientists, based solely on their CC research.”

As you can see, the selection criteria are completely different. While the list of alarmists, acronymed CCS (climate change shouters, I think), is selected by citations, the list of anti-alarmists, acronymed CCC (Climate Change Comforters, I think ;-), was selected from those who already have more exposure to the media. Then they compare the groups, and what do you know, the group that was selected according to bibliometric impact has a higher bibliometric impact, and those selected through public exposure, namely, because they were active in the media, have more public exposure. Duh! (https://www.youtube.com/watch?v=nE7J5zLaefs). This is one of the most obvious selection biases I have seen in my scientific life. It's not a compliment.

Because of this distorted selection, the top CCC is Marc Morano. He isn’t a scientist, nor does he pretend to be one, so why do the authors of this “research” compare his ratio of (null) scientific citations to media appearances with that of scientists? I don’t see that they put Al Gore at the top of the CCS list! He too has a very poor bibliometric impact.

A correct methodology would have been to compile lists of similar length of the top CCC and CCS based on citations alone, and then compare them. But I guess it was a little too hard. Let me quote Mark Twain, who said that there are “Lies, damned lies, and statistics”. In this case, it is statistics based on highly biased data.
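The net-citation criterion quoted above, $C_i = \sum_{i \in p} c_p$ (summing $c_p$ over all papers $p$ that include author $i$), can be applied to any pool of names, which is what the methodology proposed here amounts to: rank both pools by the same bibliometric measure and compare lists of equal length. A minimal sketch follows; the input format (author tuples with per-paper citation counts) and all names and numbers in it are hypothetical, and it ignores practical issues such as author-name disambiguation.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple

Paper = Tuple[Sequence[str], int]  # (authors, citation count c_p); hypothetical format


def net_citations(papers: Iterable[Paper]) -> Dict[str, int]:
    """C_i: sum of c_p over all papers p that list author i."""
    totals: Dict[str, int] = defaultdict(int)
    for authors, citations in papers:
        for author in set(authors):  # count each paper once per author
            totals[author] += citations
    return dict(totals)


def top_n(papers: Iterable[Paper], pool: Iterable[str], n: int) -> List[str]:
    """Rank a pool of names by C_i alone (ties broken alphabetically) and keep n."""
    totals = net_citations(papers)
    ranked = sorted(pool, key=lambda name: (-totals.get(name, 0), name))
    return ranked[:n]


if __name__ == "__main__":
    # Toy data, purely illustrative.
    papers = [(("A", "B"), 100), (("A",), 50), (("C",), 30), (("D", "C"), 5)]
    pool_1 = ["A", "C"]
    pool_2 = ["B", "D", "E"]
    # Same criterion and same list length for both pools.
    print(top_n(papers, pool_1, 2))  # ['A', 'C']
    print(top_n(papers, pool_2, 2))  # ['B', 'D']
```

The essential design choice is that one function ranks both pools, so any difference between the resulting lists reflects the data rather than the selection rule.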

I said before and I’ll say it again. Alarmists should use scientific arguments to bolster their case. The more they use chaff arguments, the more it reflects badly on their ability to defend their scientific case, perhaps because they can’t (e.g., see this).

Solar Debunking Arguments are Defunct 11 Aug 2019 5:58 AM (6 years ago)

An article interviewing me was removed yesterday from Forbes. Instead, they published an article by meteorologist Prof. Marshall Shepherd that claims that the sun has no effect on climate. That article, however, falls into the same pitfalls that I pointed out on my blog yesterday.

Specifically, why are Shepherd’s arguments faulty? Although I addressed them yesterday, here they are again, more explicitly and with figures.

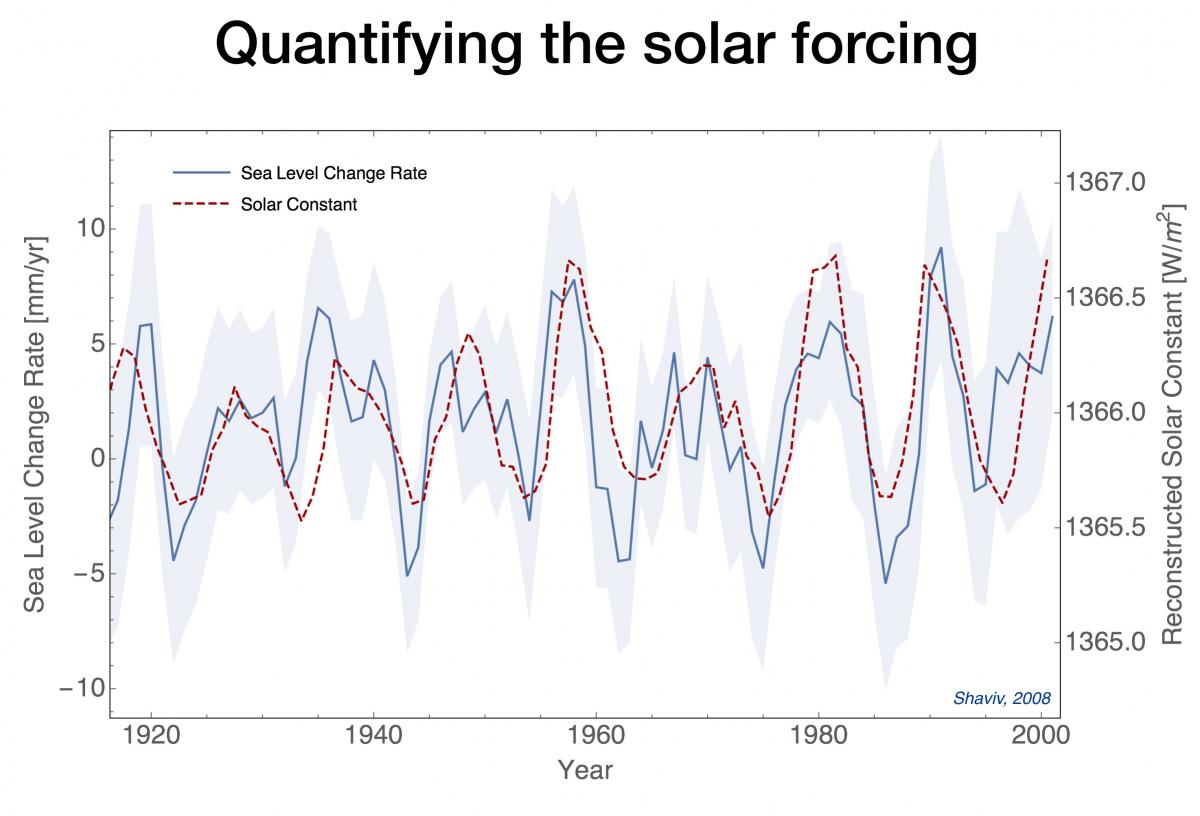

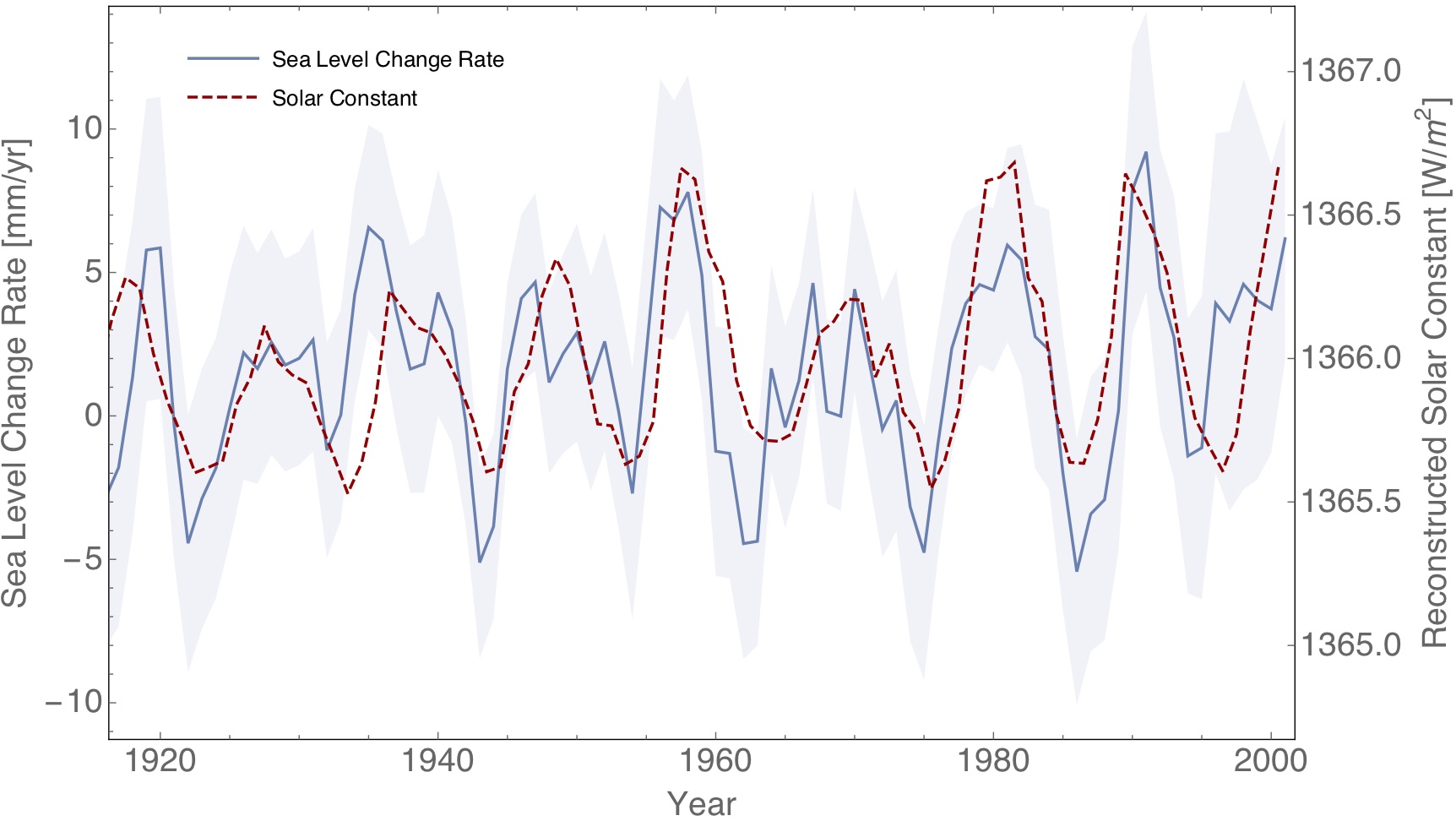

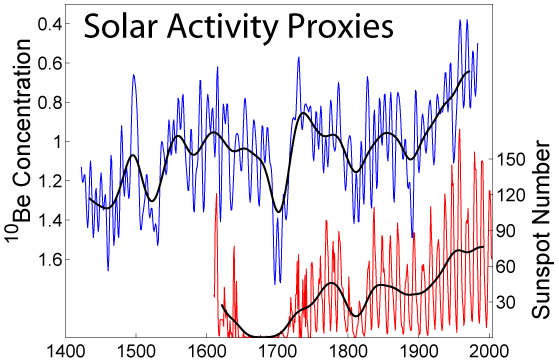

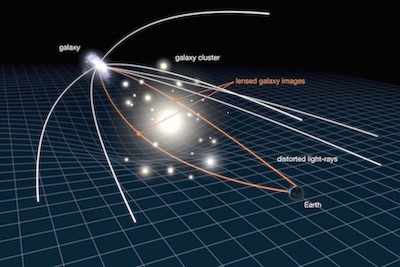

First and foremost, Shepherd ignores the clear evidence that shows that the sun has a large effect on climate, and that quantifies it. This graph is from Shaviv 2008 (ref. #1 in the list below):

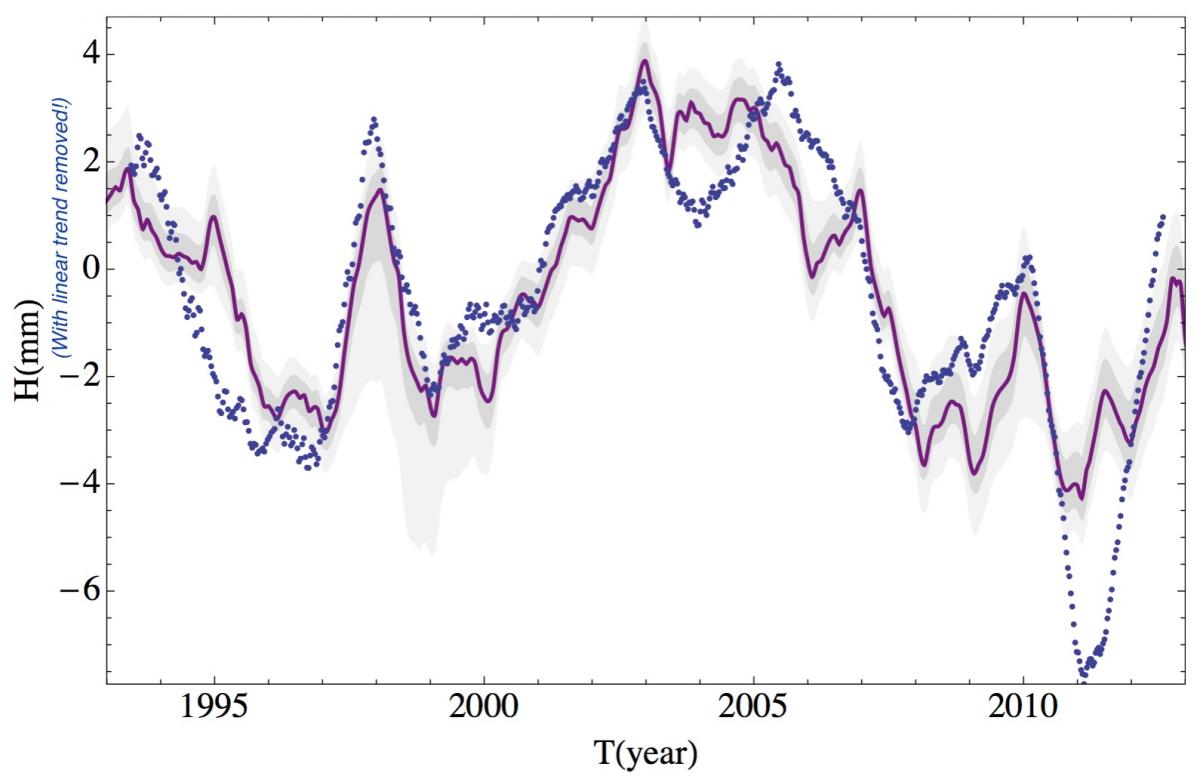

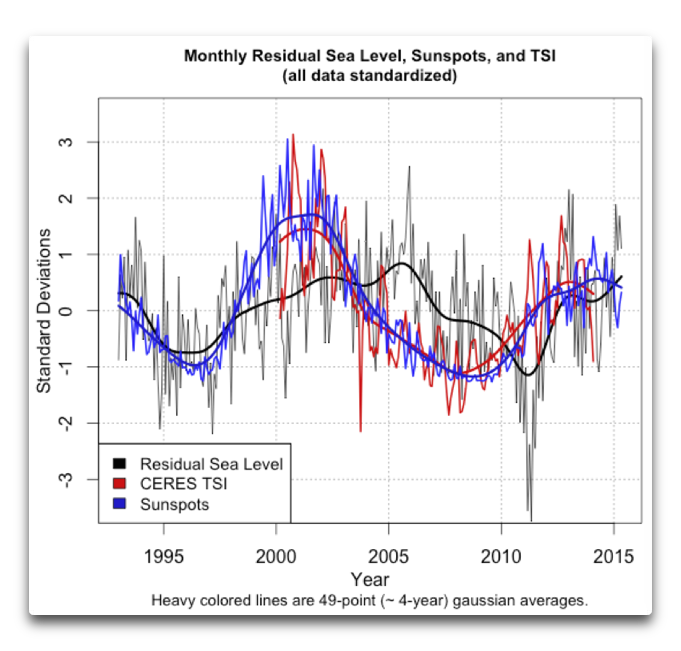

Figure 1: Reconstructed Solar constant (dashed red line) and sea level change rate based on Tide Gauge records as a function of time (solid blue line with 1 sigma error region in gray).

As you can see, there is a very clear correlation between solar activity and the rate of change of the sea level. On short time scales, most of the sea level change is due to changes in the heat going into the oceans, so we can quantify the solar radiative forcing this way. It is found to be an order of magnitude larger than the changes in the irradiance, which is what the IPCC claims is the solar contribution.
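The calorimeter argument rests on thermal expansion: heat $Q$ deposited per unit ocean area raises the sea level by $\alpha Q / (\rho c_p)$, independently of how deep it is mixed, as long as the expansion coefficient $\alpha$ is uniform. The sketch below converts between a net ocean heat flux and a thermosteric sea level rise rate. The constants are typical values, and $\alpha = 2\times10^{-4}$ K$^{-1}$ in particular is an assumption, since the real coefficient varies strongly with temperature and depth; this is an order-of-magnitude illustration, not the analysis of Shaviv 2008.

```python
RHO = 1025.0       # sea water density, kg/m^3 (typical value)
CP = 3990.0        # specific heat of sea water, J/(kg K) (typical value)
ALPHA = 2.0e-4     # thermal expansion coefficient, 1/K (ASSUMED; depends on T and depth)
SECONDS_PER_YEAR = 365.25 * 86400.0


def sea_level_rate_mm_per_yr(flux_w_m2: float, alpha: float = ALPHA) -> float:
    """Thermosteric sea level rise rate (mm/yr) for a net heat flux into the ocean (W per m^2 of ocean)."""
    rate_m_per_s = alpha * flux_w_m2 / (RHO * CP)
    return rate_m_per_s * SECONDS_PER_YEAR * 1000.0


def heat_flux_w_m2(rate_mm_per_yr: float, alpha: float = ALPHA) -> float:
    """Inverse: net ocean heat flux (W/m^2) implied by a thermosteric sea level rise rate."""
    rate_m_per_s = rate_mm_per_yr / 1000.0 / SECONDS_PER_YEAR
    return rate_m_per_s * RHO * CP / alpha


if __name__ == "__main__":
    print(f"1 W/m^2 -> {sea_level_rate_mm_per_yr(1.0):.2f} mm/yr")
    print(f"1 mm/yr -> {heat_flux_w_m2(1.0):.2f} W/m^2")
```

With these assumed constants, a sustained 1 W/m² imbalance corresponds to roughly 1.5 mm/yr of thermal expansion, which is why tide gauges can act as a sensitive calorimeter.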

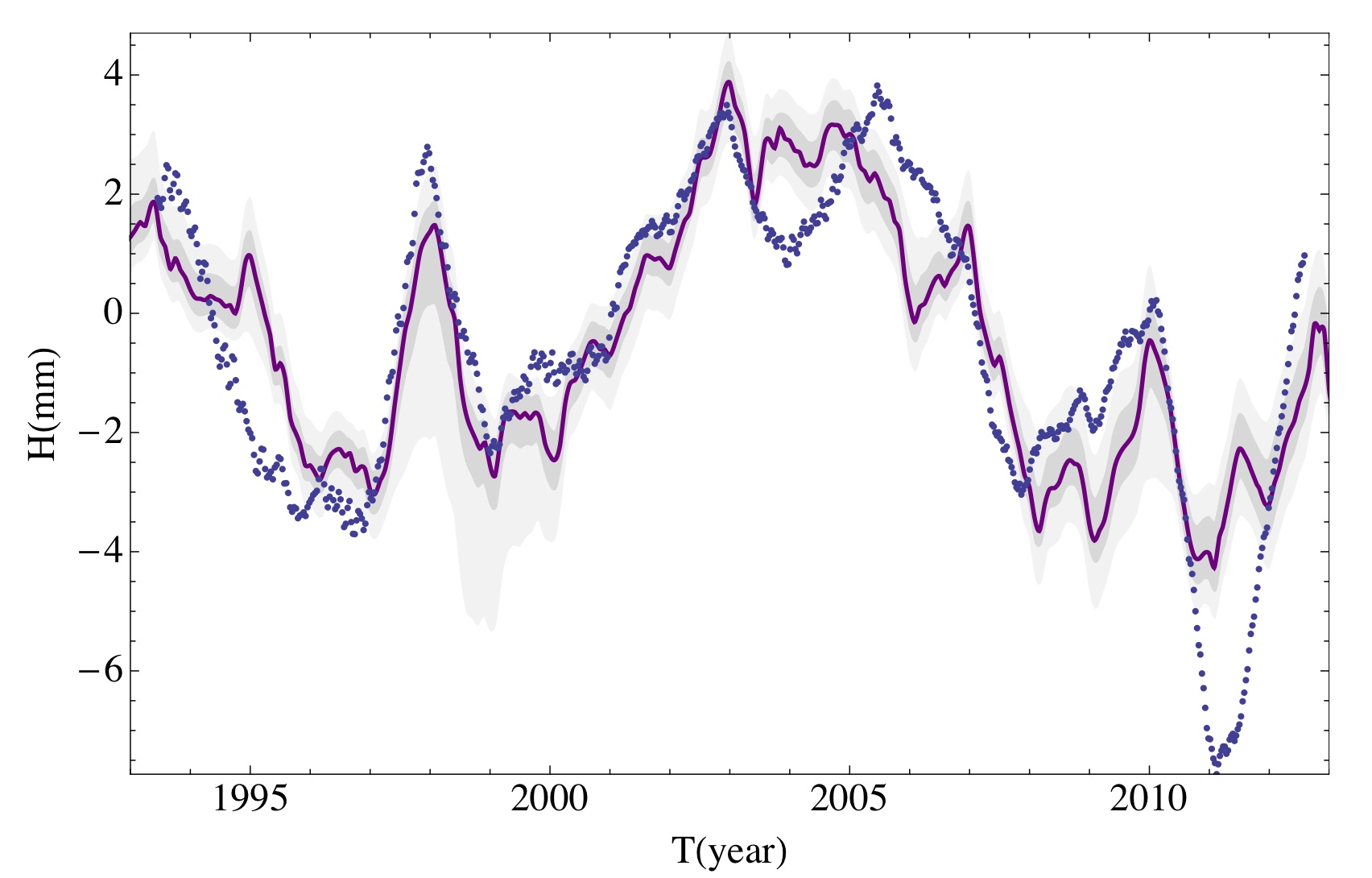

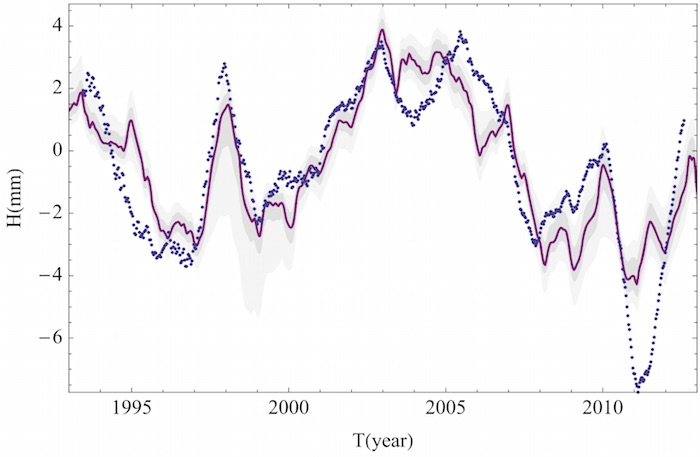

After that work was published, not a single paper tried to refute it. Instead, additional satellite altimetry data covering two more solar cycles revealed the same. In fact, the sun + El Niño Southern Oscillation can explain almost all the sea level variations minus the long term linear trend (caused by ice caps melting). This is from Howard et al. 2015 (see ref. #2 at the end):

Figure 2: Satellite altimetry based sea level (minus linear trend) in dashed blue points. Red is the best fit model, which includes the solar cycle + El Niño Southern Oscillation.

Clearly, the sun continues to have a clear effect on the climate. Note that it is impossible to explain the large variations through a feedback in the system because that would give the wrong phase in the heat content response.

What does that imply?

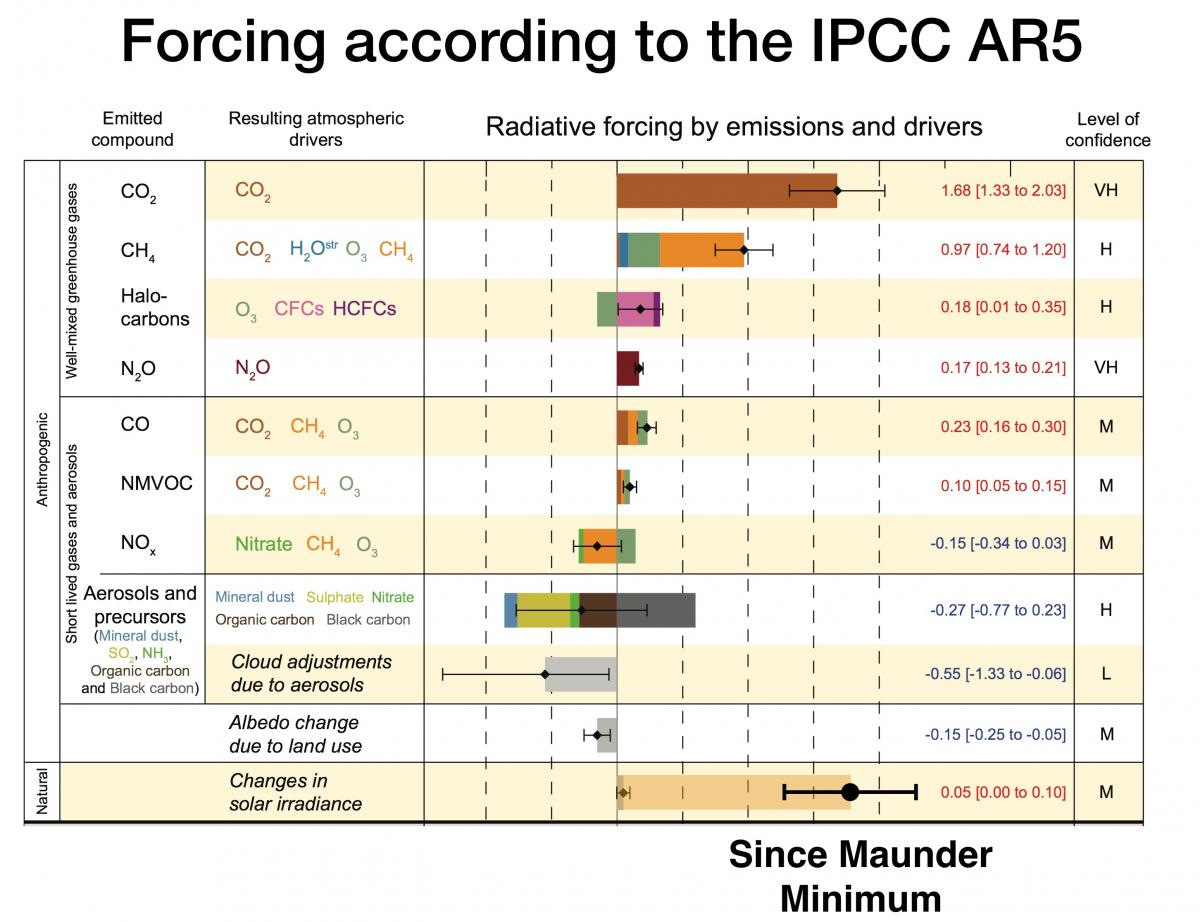

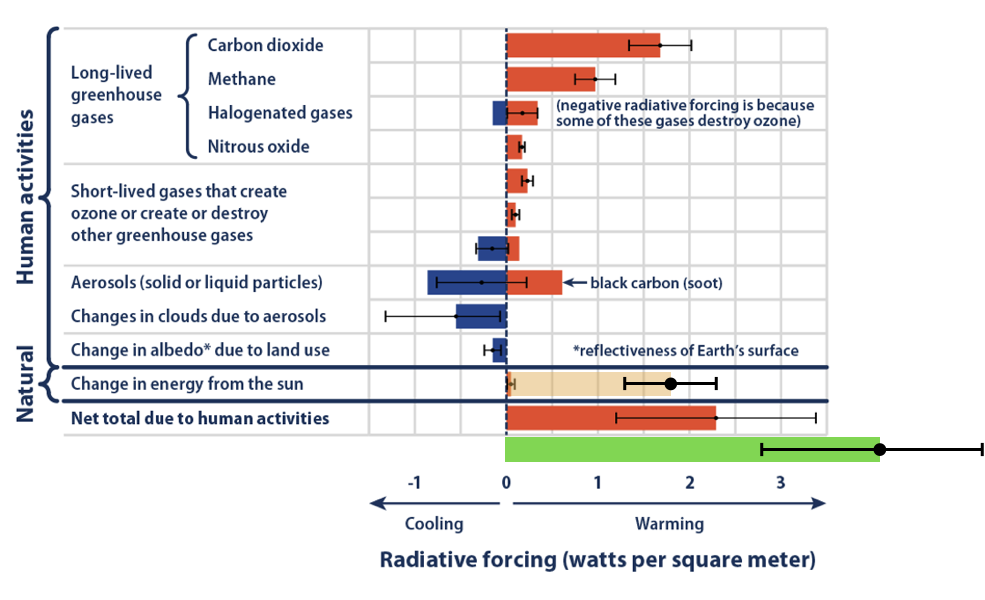

First, since solar activity increased over the 20th century, it should be taken into account. Shepherd’s radiative forcing graph should be modified to be:

Figure 3: Radiative forcing contributions (graph from Shepherd's article) with the following added. The beige is the real solar contribution over the 20th century. The green is the total forcing (natural + anthropogenic) we get once we include the real solar effect.

The next point to note is that Shepherd claimed that because solar activity stopped increasing in the 1990’s, it cannot explain any further warming. This is plain wrong. Consider this example of false logic: the sun cannot be warming us, because between noon and 2pm (or so) the solar flux decreases while the temperature increases. As a professor of meteorology, Prof. Shepherd should know that the heat capacity of the oceans makes it far too simplistic to assume that the global temperature is some factor times the CO2 forcing plus another factor times the solar forcing.
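Why a decreasing forcing can coexist with a rising temperature is captured by even the simplest system with heat capacity. The sketch below integrates a one-box energy balance model, $\tau\, dT/dt = F(t) - T$ (temperature in units of the equilibrium response), driven by a forcing that peaks at "noon". The relaxation time $\tau = 3$ h is a hypothetical value chosen only to mimic a daily cycle; this is a textbook toy model, not the climate model of Ziskin & Shaviv (ref. #3). The temperature maximum lags the forcing maximum by $\arctan(\omega\tau)/\omega$.

```python
import math


def analytic_lag(period: float, tau: float) -> float:
    """Steady-state lag of the temperature peak behind the forcing peak."""
    omega = 2.0 * math.pi / period
    return math.atan(omega * tau) / omega


def simulated_lag(period: float, tau: float, n_cycles: int = 10, steps_per_cycle: int = 2000) -> float:
    """Forward-Euler integration of tau*dT/dt = F(t) - T with F(t) = cos(2*pi*t/period).

    The forcing peaks at t = 0, period, 2*period, ...; returns how long after the
    last forcing peak the temperature reaches its maximum in the final cycle.
    Assumes tau is much shorter than the integration time, so the start-up
    transient has decayed by then.
    """
    dt = period / steps_per_cycle
    omega = 2.0 * math.pi / period
    temp = 0.0
    last_cycle_start = (n_cycles - 1) * steps_per_cycle
    best_temp, best_time = -math.inf, 0.0
    for step in range(n_cycles * steps_per_cycle):
        forcing = math.cos(omega * step * dt)
        temp += dt * (forcing - temp) / tau  # temperature at time (step + 1) * dt
        if step >= last_cycle_start and temp > best_temp:
            best_temp, best_time = temp, (step + 1) * dt
    return best_time - (n_cycles - 1) * period


if __name__ == "__main__":
    period_h, tau_h = 24.0, 3.0  # hypothetical daily cycle and relaxation time
    print(f"analytic lag:  {analytic_lag(period_h, tau_h):.2f} h after noon")
    print(f"simulated lag: {simulated_lag(period_h, tau_h):.2f} h after noon")
```

With these hypothetical values the temperature peaks about 2.5 hours after the forcing, so for a while after noon the forcing falls while the temperature still rises.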

Instead, one can and should simulate the 20th century and beyond, and see that when the sun is taken into account, it explains about 1/2 to 2/3 of the 20th century warming, and that the best estimate for climate sensitivity is around 1 to 1.5°C per CO2 doubling (compared with the 1.5 to 4.5°C of the IPCC). Two points to note here. First, although the best estimate of the solar radiative forcing is a bit less than the combined anthropogenic forcing, it is spread more evenly over the 20th century, so its contribution is larger than the anthropogenic contribution, the bulk of which took place more recently. That's why the best fit gives a solar contribution of 1/2 to 2/3 of the warming. Second, the best fit requires a smaller climate sensitivity because the total net radiative forcing is about twice as large, so a smaller sensitivity suffices to fit the same observed temperature increase.
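The second point is arithmetic that holds for any model whose temperature response is linear in the forcing: if the same observed warming must be reproduced with a total forcing twice as large, the sensitivity has to be halved. A minimal sketch in the crudest equilibrium form, $\Delta T = \lambda \Delta F$, with sensitivity per CO2 doubling $S = \lambda F_{2\times}$. The value $F_{2\times} \approx 3.7$ W/m$^2$ is the commonly used CO2-doubling forcing, and the warming and forcing numbers are hypothetical placeholders, not the values fitted in Ziskin & Shaviv (ref. #3). Because this equilibrium form ignores the ocean heat capacity emphasized above, only the ratio of the two sensitivities is meaningful here.

```python
F_2X = 3.7  # W/m^2, commonly quoted radiative forcing for doubled CO2 (assumed value)


def sensitivity_per_doubling(delta_t: float, total_forcing: float, f_2x: float = F_2X) -> float:
    """Sensitivity (°C per CO2 doubling) that turns total_forcing (W/m^2) into delta_t (°C),
    assuming a linear equilibrium response delta_t = lambda * forcing."""
    lam = delta_t / total_forcing  # °C per W/m^2
    return lam * f_2x


if __name__ == "__main__":
    observed_warming = 0.8     # °C, hypothetical placeholder
    forcing_small = 1.5        # W/m^2, hypothetical: solar forcing from irradiance only
    forcing_large = 3.0        # W/m^2, hypothetical: total forcing twice as large
    s_small = sensitivity_per_doubling(observed_warming, forcing_small)
    s_large = sensitivity_per_doubling(observed_warming, forcing_large)
    print(f"{s_small:.2f} vs {s_large:.2f} °C per doubling (ratio {s_small / s_large:.1f})")
```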

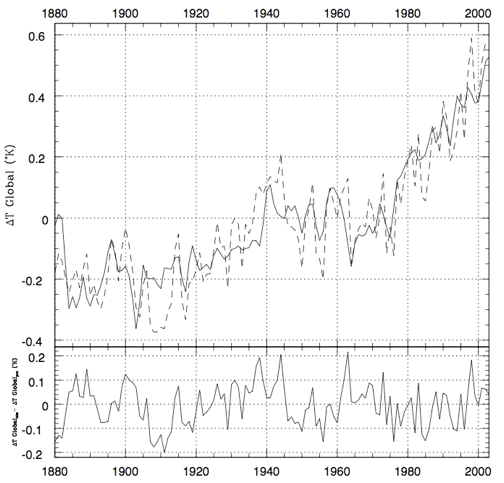

Here is my best fit to the 20th century. The solid line is the model and the dashed line is the observed global temperature (see Ziskin & Shaviv, ref. #3 below).

Figure 4: Best fit for a model which allows for a larger solar forcing and a smaller climate sensitivity than the IPCC is willing to admit is there. Top: model (solid line) and NCDC observations (dashed line). Bottom: the difference between the two.

As you can see, the residual of the fit is typically 0.1°C, which is half that of typical fits by CMIP5 models.

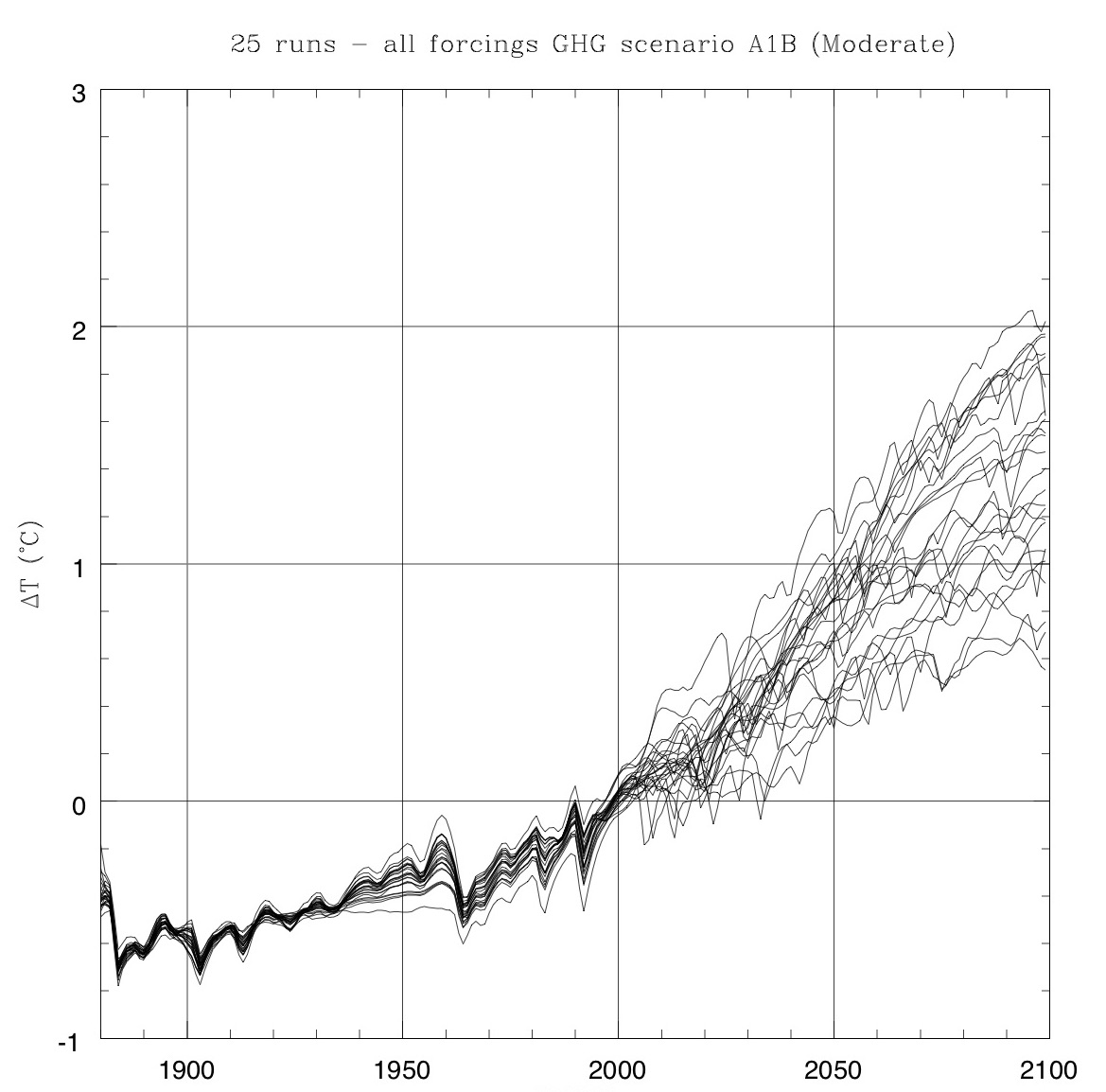

Once we fit the 20th century, we can integrate forward in time. Here I plot the expected warming for many realizations assuming a vanilla flavored emission scenario:

Figure 5: Using best fit models for the 20th century, we can integrate forward in time while making random realizations for volcanoes, solar activity etc.

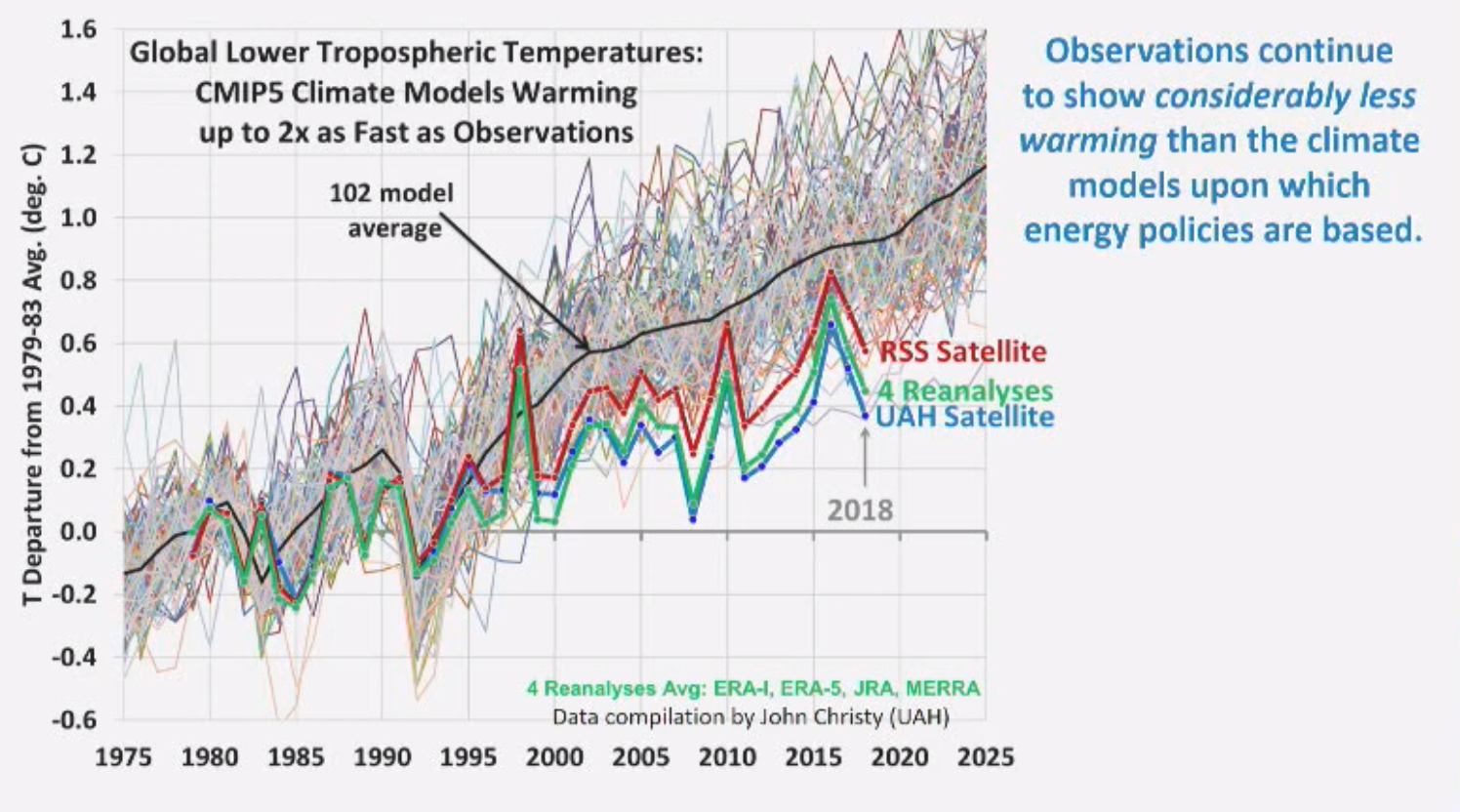

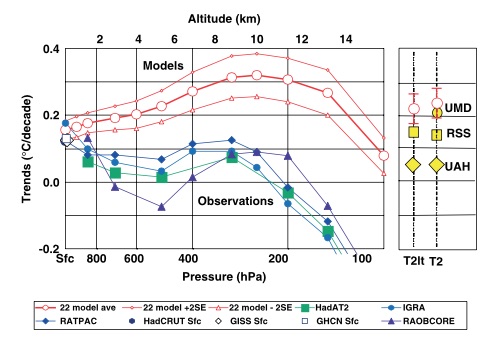

The temperature increase actually witnessed is totally consistent with these predictions. It is much smaller than that predicted by the CMIP5 models which the IPCC is using. See the screen capture from Roy Spencer’s ICCC13 talk:

Figure 6: CMIP5 models vs. actual temperature change based on satellite (RSS/UAH) or reanalyses datasets.

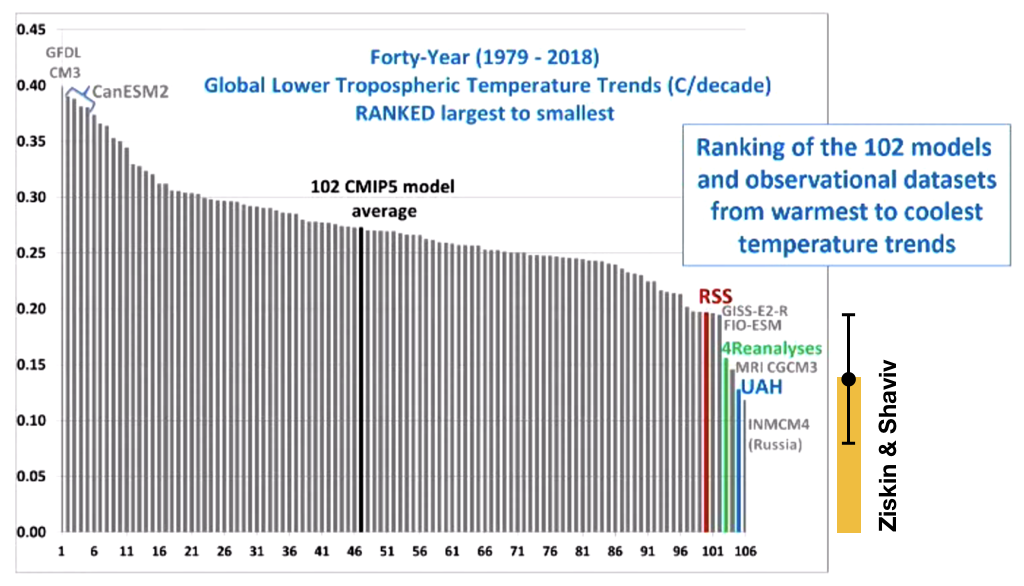

And average warming slopes, together with my predictions:

Figure 7: Warming trends in CMIP5 models vs. actual warming trends based on satellite (RSS/UAH) or reanalyses datasets. The orange bar is our predicted warming trend. Error is from the range of realizations.

Namely, our predictions are totally consistent with the satellite (RSS / UAH, whichever you prefer) and the Reanalyses datasets. Remember, this was obtained for a model which included the real solar contribution which requires a small climate sensitivity.

Shepherd also mentions that the link through cosmic ray flux variations has been debunked. I point the reader to a summary of why those attacks don’t hold any water, which I wrote yesterday.

To summarize, Shepherd did not debunk the solar forcing. His arguments are defunct. Unless he comes up with a very good explanation for the first graph above, he should instead advocate taking solar forcing into account. The fact that Forbes hushes up any possibility of a scientific debate should be considered truly bothersome by anyone who values free speech and scientific debate. Truth will prevail regardless.

References:

- Shaviv, N. J. Using the oceans as a calorimeter to quantify the solar radiative forcing. J. Geophys. Res. (Space Phys.) 113, 11101 (2008) local version (not paywalled)

- Howard, D., Shaviv, N. J., Svensmark, H., The solar and Southern Oscillation components in the satellite altimetry data, J. Geophys. Res. Space Physics, 120, 3297–3306 (2015)

- Ziskin, S., Shaviv, N. J., Quantifying the role of solar radiative forcing over the 20th century, Advances in Space Research 50, 762–776, (2012). local version (not paywalled)

Forbes censored an interview with me 10 Aug 2019 8:13 AM (6 years ago)

A few days ago I was interviewed by Doron Levin for an article to appear online on forbes.com. After having seen a draft (to make sure that I am quoted correctly), I told him good luck with getting it published, as I doubted it would be. Why? Because a year ago I was interviewed by a reporter working for Bloomberg, while the cities of San Francisco and Oakland were deliberating a climate change lawsuit against Exxon-Mobil (which the latter won!), only to find out that their editorial board had decided that it was inappropriate to publish an interview with a heretic like me. Doron’s reply was to assure me that Forbes’ current model of online publication allows relative freedom, with “relatively little interference from editors”. Yeah, sure.

After the article went online yesterday and Doron e-mailed me about it, I saw how much exposure it was receiving. It already had more than 40000 impressions within a couple of hours. Impressive. All that took place while I was relaxing with my family on a Tel-Aviv beach. But it didn't last long. Although I continued to relax at the beach, the article was taken down for “failing to meet our editorial standards”, which apparently means conforming to whatever is considered politically correct about climate change.

The piece itself is (or was, or will be?) found here. A copy was posted here.

In any case, the main goal of this post is to provide the scientific backing for the main points I raised in the interview. Here it comes.

First and foremost, I claim that the sun has a large effect on climate and that the IPCC is ignoring this effect. This I showed when I studied the heat going into the oceans using 3 independent datasets: ocean heat content, sea surface temperature, and, most impressively, tide gauge records (see reference #1 below). I found the same thing in a subsequent study based on another data set, that of satellite altimetry (see reference #2 below). Note that both are refereed publications in the Journal of Geophysical Research, which is the bread and butter journal of geophysics. So no one can claim they were published in obscure journals. Yet, even though the first paper was published already in 2008, it has been totally ignored by the climate community. In fact, there is no paper (right or wrong) that tried to invalidate it. Clearly then, the community has to take it into consideration. Moreover, when one considers that the sun has a large effect on climate, the 20th century warming is much better explained, with a much smaller residual (see reference #3 below, again refereed).

I should add that there are a few claims that the sun cannot affect the climate, for various reasons, none of which holds water. Here is why:

- The first claim is that “the sun cannot have a large effect on climate because changes in the irradiance are too small to do so, and we don’t know of a mechanism that can”. This is irrelevant, because given that the oceans prove that the sun has a large effect on climate, we must consider it even if we don’t know how it comes about. Often in science we are forced to accept a theory we don’t fully understand because the empirical evidence suggests so. Mendelian genetics explained reality pretty well (though we now know it is a bit more complicated) a century before Watson and Crick showed what the underlying mechanism is. Does it mean that we should have discarded Mendelian genetics for a century for lack of a known mechanism? Pauli postulated the existence of the neutrino a quarter of a century before it was actually detected. Similarly, almost all cosmologists and particle physicists assume that dark matter exists, because an overwhelming amount of evidence suggests so, and because the alternatives simply don’t work (mainly MOND, as a post-doc and I have shown in a paper, as have many others). We don’t really know what dark matter is (there are many suggestions), but its existence has to be considered. Having said that, we actually do see very clear empirical evidence pointing to the link, as I describe below.

- The second claim is that “solar activity decreased from the 1990’s but the temperature continued to increase, so the sun cannot be the reason for the heating”. This is wrong on several levels. First, one has to realize that the temperature anomaly at a given time is not some fixed factor times the forcing at that time. This is because the system has a finite heat capacity and various interesting feedbacks. Without properly modeling it, erroneous conclusions can be reached. A simple example is ruling out the solar flux as the major source of heat because between noon and, say, 2pm, the solar flux is decreasing but the temperature is increasing! (Similarly, the average solar flux is decreasing during the month of July in the northern hemisphere, but the temperature is increasing.) Solar activity was high over the latter half of the 20th century, such that even after it started to decrease, the temperature should have continued increasing for a decade or so, albeit at a lower pace. Second, the above argument is extremely simplistic. Proper modeling has to consider that humans have contributed to the net positive forcing as well. And indeed, when one considers both the large effect of the sun and the anthropogenic forcing, one can explain 20th century climate change if climate sensitivity is on the low side, much better than the IPCC models that exclude the large effect of the sun but assume a large climate sensitivity instead. See reference #3 below, as well as Roy Spencer’s short talk showing that climate models generally give a much larger temperature increase than has been observed over the past 2 decades.

- The third claim is that when 20th century temperature changes are compared with solar activity and anthropogenic forcing, one doesn’t see the 11-year solar cycle in the temperature data, which can then be used to place an upper limit on the solar effect. This faulty argument is related to the previous one. It too assumes that the temperature should be proportional to the radiative forcing at any instant: because the temperature variations over the 11-year solar cycle are only of order 0.1°C, the solar contribution to 20th century warming should be similar, since the secular increase in the solar forcing is comparable to the variations over the 11-year cycle. However, the large heat capacity of the oceans damps any temperature variations on short time scales. Proper modeling reveals that a 0.1°C variation over the solar cycle should actually correspond to a much larger variation on the centennial time scale, in fact, about half to two thirds of the warming (see reference #3 below and my comments about the BEST analysis from Berkeley, which “proved” that the sun cannot have a large climate effect based on the above argument).
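The damping argument in the last point can be made quantitative with a simple toy model. For a one-box model with relaxation time $\tau$, a sinusoidal forcing of period $P$ produces a temperature oscillation whose amplitude is reduced, relative to the equilibrium response, by the factor $1/\sqrt{1 + (2\pi\tau/P)^2}$. The sketch applies this to an 11-year cycle versus slow, centennial forcing, assuming (as the text states) that the secular solar forcing change is comparable to the cycle amplitude. The values of $\tau$ are hypothetical, and a single box is a much cruder description of the ocean than the model of reference #3, so the numbers only illustrate the direction and rough size of the effect.

```python
import math


def amplitude_factor(period_yr: float, tau_yr: float) -> float:
    """Ratio of the oscillation amplitude to the equilibrium response, one-box model."""
    omega = 2.0 * math.pi / period_yr
    return 1.0 / math.sqrt(1.0 + (omega * tau_yr) ** 2)


def implied_slow_response(cycle_amplitude_c: float, tau_yr: float, cycle_period_yr: float = 11.0) -> float:
    """Equilibrium (very slow) response to the same forcing amplitude, inferred from
    the damped temperature amplitude observed over a cycle of the given period."""
    return cycle_amplitude_c / amplitude_factor(cycle_period_yr, tau_yr)


if __name__ == "__main__":
    for tau in (1.0, 3.0, 10.0):  # hypothetical relaxation times in years
        print(
            f"tau = {tau:4.1f} yr: 11-yr damping {amplitude_factor(11.0, tau):.2f}, "
            f"200-yr damping {amplitude_factor(200.0, tau):.2f}, "
            f"0.1 °C cycle -> {implied_slow_response(0.1, tau):.2f} °C slow response"
        )
```

The longer the effective relaxation time, the more an 11-year signal is suppressed relative to a slow one, so a small cycle amplitude alone does not bound the centennial response.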

[Edit: See my more detailed rebuttal of the attack on solar forcing that appeared a day later on Forbes]

As I said above, we now know from significant empirical data where the solar climate link comes from. It is through solar wind modulation of the galactic cosmic ray flux which governs the amount of atmospheric ionization, and which in turn affects the formation of cloud condensation nuclei and therefore cloud properties (e.g., lifetime and reflectivity). How do we know that?

- When the sun has gusts in the solar wind, it causes several day long reductions in the flux of cosmic rays reaching Earth, called Forbush decreases. We see as a response changes in the aerosols and in cloud properties, just as expected. See references 4 & 5 below.

- There are large cosmic ray flux variations over geological time scales that are not related to solar activity but instead to our location in the Milky Way and the changing galactic environment. You can reconstruct the cosmic ray flux using meteorites and find that the 7 ice-age epochs over the past 1 billion years all appeared when the cosmic ray flux was high (see references 6 & 7 below). On somewhat shorter time scales, the vertical motion of the solar system clearly manifests itself as a 32-million-year oscillation in the temperature (15 periods over the past half billion years! See reference 8 below). Namely, there are very clear indications that independent variations in the cosmic ray flux affect the climate.

- Cloud cover varies over the 11-year solar cycle (e.g., reference 9 below). This by itself is not proof that the link is through cosmic rays, since there are several things that change with the solar cycle. However, one particularly interesting aspect is that the cloud cover variations are asymmetric between odd and even cycles, just as cosmic rays are, and unlike other solar-related variables that are blind to the fact that the real cycle is 22 years (the polarity returns to the same state after two switches, hence 22 years. The asymmetry arises from the fact that cosmic rays are primarily positive particles, and the sun is rotating such that there is a clear helicity to the field configuration).

- There are several experimental results showing that ions increase the nucleation and formation of a few nm sized aerosols and increase the survival of those aerosols as they grow to become 50 nm sized cloud condensation nuclei. A few examples are given in references 10-13.

One should be aware that we are still missing the last piece of the puzzle, which is to take the various mechanisms, plug them into a global aerosol model and see that there is a sufficiently large variation in the cloud condensation nuclei. This takes time, but compared with the aforementioned examples of genetics, neutrinos or dark matter, it will definitely take us much less time to provide this last piece. In any case, the evidence should have already forced the community to consider it seriously.

Nonetheless, even with the above large body of empirical evidence, the link has been attacked left and right. A really small number has been valid and interesting, but not to the extent to invalidate the existence of a cosmic ray climate link, just to modify our understanding of it. The rest has been mostly bad science, as I exemplify below.

- One of the main critiques arises when people look for the cosmic ray climate link but find none. In all those cases where no effect is seen, the authors didn’t estimate the size of the effect they expected and compare it with the noise level in the data. For example, if one considers only a small patch of the atmosphere above the oceans, then the day-to-day fluctuations in the cloud cover are large compared with the Forbush decrease signal. Similarly, not seeing an effect over tens of thousands of years from changes in Earth’s magnetic field is not surprising, because switching off Earth’s magnetic field altogether is expected to give rise to a 1°C effect, which is notably smaller than the climate variations seen over these time scales (presumably because of the Milankovitch cycles).

- The cosmic ray climate link over geological time scales was attacked by several papers. Only one raised a valid scientific point, which is that the original analysis by Jan Veizer and me didn’t consider the effect that the ocean pH (affected by atmospheric CO2) has on the oxygen-18 data. When that was taken into account, we modified our best estimate for climate sensitivity to be 1 to 1.5°C per CO2 doubling. Other analyses are blatantly wrong, with faulty statistical analysis or data handling (see summaries here and here), or even simple arithmetic mistakes! (see here).

- The last set of critiques is actually part of a healthy scientific discourse about the mechanism responsible for linking atmospheric ionization with cloud condensation nuclei. Papers like this discuss the possibility that ion-induced nucleation could be the physical mechanism linking ionization changes with variations in the cloud condensation nuclei number density. However, even if we don’t fully understand the underlying mechanism, ruling out a particular suggested mechanism doesn’t mean that other possibilities do not exist (in fact, they do, see ref #13 below). When Pauling and Corey suggested the triple helix model for DNA in 1953, they were off, but it wasn’t a reason to discard the whole idea of genetics.
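The first point above, that a null result only means something if the expected signal is compared with the noise, can be illustrated with a back-of-the-envelope estimate. Averaging $N$ independent samples reduces the noise of the mean by $\sqrt{N}$, so a signal that is invisible in a single small patch may become detectable over a large area or many events. The sketch assumes independent, Gaussian noise; real cloud fields are spatially and temporally correlated, so the effective $N$ is smaller than the raw count. All numbers are hypothetical.

```python
import math


def signal_to_noise(signal: float, sigma_single: float, n_independent: int) -> float:
    """SNR of a mean over n independent samples, each with noise sigma_single."""
    noise_of_mean = sigma_single / math.sqrt(n_independent)
    return abs(signal) / noise_of_mean


def min_samples_for_detection(signal: float, sigma_single: float, snr_threshold: float = 3.0) -> int:
    """Smallest number of independent samples giving SNR >= snr_threshold."""
    return math.ceil((snr_threshold * sigma_single / abs(signal)) ** 2)


if __name__ == "__main__":
    # Hypothetical: a 0.5 percentage-point cloud change vs 5 points of single-sample scatter.
    signal, sigma = 0.5, 5.0
    print(f"one patch: SNR = {signal_to_noise(signal, sigma, 1):.2f}")
    print(f"1000 independent samples: SNR = {signal_to_noise(signal, sigma, 1000):.2f}")
    print(f"needed for 3 sigma: {min_samples_for_detection(signal, sigma)} samples")
```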

References:

- Shaviv, N. J. Using the oceans as a calorimeter to quantify the solar radiative forcing. J. Geophys. Res. (Space Phys.) 113, 11101 (2008) local version (not paywalled)

- Howard, D., Shaviv, N. J., Svensmark, H., The solar and Southern Oscillation components in the satellite altimetry data, J. Geophys. Res. Space Physics, 120, 3297–3306 (2015)

- Ziskin, S., Shaviv, N. J., Quantifying the role of solar radiative forcing over the 20th century, Advances in Space Research 50, 762–776, (2012). local version (not paywalled)

- Svensmark, H., Bondo, T. & Svensmark, J. Cosmic ray decreases affect atmospheric aerosols and clouds. Geophys. Res. Lett. 36, 15101–1510 (2009)

- Svensmark, J., Enghoff, M. B., Shaviv, N. J. & Svensmark, H. The response of clouds and aerosols to cosmic ray decreases. J. Geophys. Res.: Space Phys. 121, 8152–8181 (2016).

- Shaviv, N. J. Cosmic ray diffusion from the galactic spiral arms, iron meteorites, and a possible climatic connection. Phys. Rev. Lett. 89, 051102–05110 (2002)

- Shaviv, N. J. The spiral structure of the Milky Way, cosmic rays, and ice age epochs on Earth. New Astron. 8, 39–77 (2003)

- Shaviv, N. J., Prokoph, A., Veizer, J., Is the Solar System's Galactic Motion Imprinted in the Phanerozoic Climate? Scientific Reports volume 4, Article number: 6150 (2014)

- Svensmark, H. & Friis-Christensen, E. Variation of cosmic ray flux and global cloud coverage—a missing link in solar-climate relationships. J. Atmos. Sol. -Terr. Phys. 59, 1225–1232 (1997).

- Svensmark, H., Pedersen, J. O. P., Marsh, N. D., Enghoff, M. B. & Uggerhøj, U. I. Experimental evidence for the role of ions in particle nucleation under atmospheric conditions. Proc. R. Soc. A 463, 385–396 (2007).

- Kirkby, J. et al. Role of sulphuric acid, ammonia and galactic cosmic rays in atmospheric aerosol nucleation. Nature 476, 429–433 (2011).

- Svensmark, H., Enghoff, M. B. & Pedersen, J. O. P. Response of cloud condensation nuclei (>50 nm) to changes in ion-nucleation. Phys. Lett. A 377, 2343–2347 (2013).

- Svensmark, H., Enghoff, M. B., Shaviv, N. J. & Svensmark, J. Increased ionization supports growth of aerosols into cloud condensation nuclei. Nat. Commun. 8, 2199 (2017).

22-minute talk summarizing my views on global warming 4 Aug 2019 12:46 PM

My experience at the German Bundestag's Environment Committee in a pre-COP24 discussion 7 Dec 2018 5:17 AM

Last week I had the opportunity to talk in front of the Environment committee of the German Bundestag. It was quite an interesting experience, and frankly, something I would have considered unlikely before receiving the invitation. It was in fact the first time a climate "skeptic" like myself appeared behind those doors in many years.

As I understand it, the committee was used to inviting Prof. Schellnhuber, formerly the director of the Potsdam Institute for Climate Impact Research (PIK). However, as he recently retired, some voices suggested that the committee should freshen up and invite someone else, and the name that came up was that of Prof. Anders Levermann, also from PIK. That, however, prompted some of the parties to request other people as well, and the committee ended up inviting 6 specialists. Two were bona fide scientists (Levermann and myself), while the other four were experts on other topics. My name was put forward by the right-wing AfD party, whose climate agenda is consistent with my climate findings: that global warming is a highly exaggerated scare.

The earliest flight from Israel that day would have brought me to the Bundestag awfully close to the beginning of the discussion, so I flew in the day before. I landed in a freezing cold (-3°C) but sunny Berlin, actually exhilarating weather that I quite like.

The next day I showed up at the committee. I was interviewed by someone from a local news outlet that, I was told, tends to distort interviews with people like myself (if anyone knows about it, I'm curious, so leave a comment if you have seen it).

As I entered the committee room and sat down, Levermann passed by and told me in Hebrew, אתה יודע שאתה טועה (You know that you're wrong), which of course caught me a bit by surprise. It turns out that Levermann did his PhD at the Weizmann Institute with Prof. Itamar Procaccia, a world expert in turbulence, nonlinear phenomena and statistical mechanics. Anyway, my German can be described as somewhere between nonexistent and really awful (I studied it for a year in high school in the US but forgot most of it), but it was enough to say, Ich glaube ich bin recht (I believe I am right).

The discussion started with each of the experts being allowed to talk for 3 minutes. That is actually quite a problem. People have been brainwashed to think of global warming as mostly anthropogenic and almost unavoidably catastrophic. How do you prove to people that they are all wrong (or more precisely, that they were told highly exaggerated tales) in such a short time? To make things worse, I was told at the last minute that the committee's TV was broken, so the PowerPoint slides I had prepared were printed out and handed to the committee members.

Given that, I felt I had no choice but to concentrate on what I think is the biggest error you find in the IPCC reports, one that clearly overturns the standard polemic: the sun has a large effect on climate.

Here is what I prepared (what I said was pretty close but not all verbatim):

Three minutes is not a lot of time, so let me be brief. I’ll start with something that might shock you. There is no evidence that CO2 has a large effect on climate. The two arguments used by the IPCC to supposedly “prove” that humans are the main cause of global warming, and which imply that climate sensitivity is high, are that: a) 20th century warming is unprecedented, and b) there is nothing else to explain the warming.

These arguments are faulty. Why, you ask?

We know from the Climategate e-mails that the hockey stick was an example of shady science. The Medieval Warm Period and the Little Ice Age were in fact global and real. And, although the IPCC will not admit it, we know that the sun has a large effect on climate, and on 20th century warming in particular.

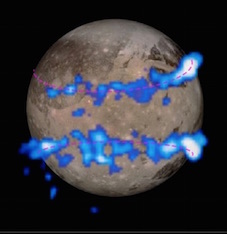

In the first slide we see one of the most important graphs that the IPCC is simply ignoring. Published already in 2008, you can see a very clear correlation between sea level change rate from tide gauges, and solar activity. This proves beyond any doubt that the sun has a large effect on climate. But it is ignored.

To see what it implies, we should look at figure 2.

This is the contribution to the radiative forcing from different components, as summarized in the IPCC AR5. As you can see, it is claimed that the solar contribution is minute (tiny gray bar). In reality, we can use the oceans to quantify the solar forcing, and see that it was probably larger than the CO2 contribution (large light brown bar).

Any attempt to explain the 20th century warming should therefore include this large forcing. When doing so, one finds that the sun contributed more than half of the warming, and that climate has to be relatively insensitive. How insensitive? Only 1 to 1.5°C per CO2 doubling, as opposed to the IPCC range of 1.5 to 4.5°C. This implies that, without doing anything special, future warming will be around another 1°C over the 21st century, meeting the Copenhagen and Paris goals.
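To make the "per CO2 doubling" arithmetic concrete: if the forcing grows with the logarithm of the CO2 concentration (the standard approximation), the equilibrium warming is the sensitivity times the number of doublings, $\Delta T = S \log_2(C/C_0)$. The sketch below is only an illustration under that assumption. The function name `equilibrium_warming` and the concentration ratio of 1.5 are hypothetical choices, the result is equilibrium rather than transient warming, and it does not reproduce the 21st-century projection quoted above.

```python
import math


def equilibrium_warming(co2_ratio: float, sensitivity_per_doubling: float) -> float:
    """Equilibrium warming in degC for a CO2 concentration ratio C/C0.

    Assumes forcing scales with log(C/C0), so warming scales with the
    number of doublings, log2(C/C0). Transient (lagged) response is ignored.
    """
    return sensitivity_per_doubling * math.log2(co2_ratio)


# Sensitivity ranges quoted in the text, in degC per CO2 doubling.
ranges = {
    "low-sensitivity range": (1.0, 1.5),
    "IPCC range": (1.5, 4.5),
}

# Hypothetical concentration ratio, for illustration only (50% above C0).
ratio = 1.5
for label, (low, high) in ranges.items():
    print(f"{label}: {equilibrium_warming(ratio, low):.2f} to "
          f"{equilibrium_warming(ratio, high):.2f} degC at C/C0 = {ratio}")
```

By construction, a full doubling (`co2_ratio = 2`) returns exactly the sensitivity itself, so the factor of 3 between the upper ends of the two ranges carries straight through to the warming.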

The fact that the temperature over the past 20 years has risen significantly less than IPCC models predicted should raise a red flag that something is wrong with the standard picture.

I should also add that science is not a democracy. The majority is not necessarily right! You should also be careful to distinguish between evidence for warming and evidence for warming by humans. There is in fact no evidence for the latter. Last, people may frighten you with secondary effects associated with global warming, on sea level, the cryosphere, droughts, floods or the economy. However, if the underlying climate model is fundamentally wrong, all the ensuing predictions are irrelevant.

The fear of global warming, and with it the denouncement of any other voice, is now part of our Zeitgeist. However, instead of blindly going with the flow, we should stop for a minute and think before we waste so much of our precious public resources. Maybe we will find out that the emperor has no clothes.

When invited, I was also told that I can submit a written statement, which is what I did. It is a few times longer and has a bit more information. You can find it on the Bundestag's website, with a German translation.

Then came the questions, which were mostly guiding questions: each party asked the expert close to its heart to basically continue saying whatever it wanted to hear. One of the questions I was asked was about the determination of the global temperature, but frankly I didn't understand it. I should add that I had to rely on simultaneous translation (there were two translators brought in especially for me, I think), and the translated question I heard in English sounded a bit convoluted and hard to address.

Anyway, during the whole discussion I was directly criticized by Levermann and by Lorenz Beutin, MdB (a Bundestag member from Die Linke, "The Left").

The first such critique was prompted by a request to Levermann to address why I was wrong in my speech. I should say that Levermann seems nice on a personal level, and I have nothing against him, but his response in this round was totally unscientific. He said that everything I said is rubbish (at least that was the English translation I heard), which of course is not a scientific argument.

The second round came from Beutin. He actually raised two interesting specific points, which Levermann picked up on as well. That is great, because this is what science is all about: arguing about specific scientific facts and the conclusions that can be drawn from them.

So what were the points that were raised by Beutin and Levermann?

1) The average sea level change rate (in the solar / sea level change rate graph) is above zero, proving that there was long-term sea level rise.

2) From about 1990, solar activity has decreased but the temperature increased. So the sun cannot cause the warming.

3) It is all just correlations (and therefore proving nothing).

Why are these arguments either irrelevant or wrong?

1) Indeed, as Beutin noted, the average sea level change rate is above zero. This is of course true, and I am actually really happy that a politician takes notice of such a subtle point. Sea level has risen over the 20th century (because of warming, melting, and glacial rebound), but sea level rise is not the signal I am looking at. It is an interesting consequence of global warming, whereas at this point I am looking for the drivers of the warming, not its consequences! The fact that sea level is rising does not contradict the fact that you clearly see the sun’s 11-year signature, with which you can quantify the solar radiative forcing. Clearly then, this argument is irrelevant. The logical leap from a rising sea level to the conclusion that the sun is not a major climate driver is baseless.

2) Rising temperatures with falling solar activity since the 1990s. The argument here is that the negative correlation over this period tells us that the sun cannot be the major climate driver. This too is wrong.

First, even if the sun were the only climate driver (which I never said is the case), this anti-correlation would not contradict it. Following the same simple logic, we could rule out that the sun warms us during the day, because between noon and, say, 2 pm, when it is typically warmest, the amount of solar radiation decreases while the temperature increases. Similarly, one could rule out the sun as our source of warmth because (in the Northern Hemisphere) maximum radiation is received in June while July and August are typically warmer. Over a month or more, solar radiation decreases but the temperature increases! The reason behind this behavior is of course the finite heat capacity of the climate system. If you heat the system for a given duration, it takes time for the system to reach equilibrium. If the heating starts to decrease while the temperature is still below equilibrium, the temperature will continue rising even as the forcing decreases. Interestingly, since the late 1990s (specifically the 1997 El Niño), the temperature has been increasing at a rate much lower than predicted by the models appearing in the IPCC reports (the so-called “global warming hiatus”).
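The heat-capacity argument can be illustrated with the simplest possible energy-balance model, a single heat reservoir obeying $C\,dT/dt = F(t) - \lambda T$. This is a toy sketch, not the ocean-diffusion model used in the analysis cited below; the helper `one_box_response`, the values of $C$ and $\lambda$, and the half-sine forcing are all hypothetical, in arbitrary units. It only shows that the temperature maximum lags the forcing maximum.

```python
import math


def one_box_response(forcing, dt, heat_capacity, feedback):
    """Integrate C dT/dt = F(t) - feedback * T with forward Euler from T = 0.

    Returns one temperature per forcing sample (the last forcing value is
    not needed because it would only affect the step after the series ends).
    """
    temps = [0.0]
    for f in forcing[:-1]:
        t_prev = temps[-1]
        temps.append(t_prev + dt * (f - feedback * t_prev) / heat_capacity)
    return temps


# Hypothetical parameters in arbitrary units, chosen only to show the lag.
dt = 0.01      # time step
n = 1000       # number of samples (total duration ~10)
# Forcing rises, peaks halfway through, then falls (half a sine period).
forcing = [math.sin(math.pi * i / (n - 1)) for i in range(n)]
# Relaxation time heat_capacity / feedback = 2, a fifth of the forcing duration.
temps = one_box_response(forcing, dt, heat_capacity=2.0, feedback=1.0)

i_f = max(range(n), key=forcing.__getitem__)  # index of peak forcing
i_t = max(range(n), key=temps.__getitem__)    # index of peak temperature
print(f"forcing peaks at t = {i_f * dt:.2f}, temperature peaks at t = {i_t * dt:.2f}")
```

Because $T$ stays below its equilibrium value $F/\lambda$ while the forcing rises, $dT/dt$ is still positive when $F$ peaks, so the temperature maximum necessarily comes later, just as in the noon versus 2 pm and June versus July examples.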

Having said that, it is possible to actually model the climate system including its heat capacity, namely the diffusion of heat into and out of the oceans, and to include both the solar and anthropogenic forcings. One then finds that introducing the solar forcing gives a much better fit to the 20th century warming, with a much smaller climate sensitivity (typically 1°C per CO2 doubling, compared with the IPCC's canonical range of 1.5 to 4.5°C per CO2 doubling).

You can read about it here: Ziskin, S. & Shaviv, N. J., Quantifying the role of solar radiative forcing over the 20th century, Advances in Space Research 50 (2012) 762–776

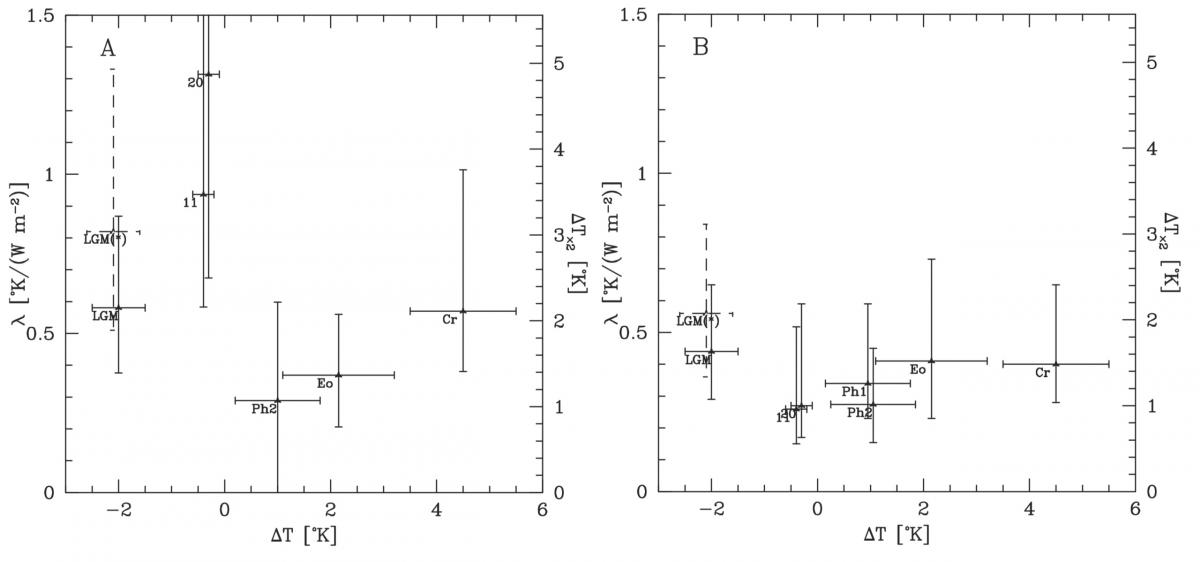

The low climate sensitivity one obtains this way is actually consistent with other empirical determinations, for example, the lack of any correlation between CO2 variations over the past half billion years and temperature variations. See in particular fig. 6 of a sensitivity analysis I published in 2005.

Fig. 6 from Shaviv (2005), in which I carried out a sensitivity analysis assuming that the sun has a large effect on climate through cosmic ray modulation (right) or that it doesn't (left). Each error bar is the 1σ sensitivity range obtained from radiative forcing variations over a different period, plotted as a function of the average temperature relative to today.

3) The third point raised is that the allegedly large solar climate link is just based on correlations. This is wrong as well.

To begin with, if the correlations were merely spurious, there would be no reason for them to persist. Since the analysis that gave the above graph was published, however, a new one based on 2 more solar cycles' worth of satellite altimetry was published as well, and the correlation very clearly continues. See Howard, D., Shaviv, N. J., & Svensmark, H. (2015). The Solar and Southern Oscillation Components in the Satellite Altimetry Data. Journal of Geophysical Research: Space Physics, 120, 3297-3306.

In fact, the sun and ENSO together explain 71% of the variance in the linearly detrended sea level change. You might think that it doesn't get any better than that! But it does.
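The "71% of the variance" figure is a fraction-of-variance-explained ($R^2$) statement. One common way to compute such a number, assumed here rather than taken from Howard et al. (2015), is to regress the linearly detrended series on the detrended regressors by least squares and compare the residual and total variances. The sketch uses synthetic data: the series, amplitudes, noise level and the `detrend` helper are all hypothetical, so its $R^2$ says nothing about the real altimetry.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic monthly data, hypothetical and for illustration only.
t = np.arange(264) / 12.0                      # 22 years (~2 solar cycles), in years
solar = np.sin(2 * np.pi * t / 11.0)           # idealized 11-year solar cycle
enso = rng.standard_normal(t.size)             # stand-in for an ENSO index
sea_level = 3.0 * t + 4.0 * solar + 2.0 * enso + rng.normal(0.0, 2.0, t.size)


def detrend(y, x):
    """Remove the least-squares linear trend of y against x."""
    slope, intercept = np.polyfit(x, y, 1)
    return y - (slope * x + intercept)


y = detrend(sea_level, t)
# Design matrix: detrended solar and ENSO regressors plus a constant column.
X = np.column_stack([detrend(solar, t), detrend(enso, t), np.ones_like(t)])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
residual = y - X @ coef
r_squared = 1.0 - residual.var() / y.var()    # fraction of variance explained
print(f"solar coef = {coef[0]:.2f}, ENSO coef = {coef[1]:.2f}, R^2 = {r_squared:.2f}")
```

Detrending the regressors as well as the target keeps the fit from attributing part of the linear trend to the solar or ENSO terms, so the $R^2$ refers only to the variability around the trend.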

This correlation has the amplitude and phase that you would expect from (a) the low-altitude cloud cover variations seen in sync with the solar cycle, which were estimated to drive a 1 W/m² variation, and (b) the 0.1°C change in sea surface temperature over the solar cycle (e.g., see the above paper on climate sensitivity over different time scales, where the cloud forcing and sea surface temperatures are discussed). You might again think that it doesn't get any better than that, but it does yet again! We have a mechanism to explain it all: modulation of the cloud cover.

Linearly detrended altimetry-based sea level (blue dots) and a fit that includes only the solar cycle and El Niño (from Howard et al. 2015). The solar cycle clearly makes a prominent contribution, which is consistent in phase and amplitude with the Shaviv (2008) result.

You can read more about the big picture in a summary I wrote a couple of years ago while on sabbatical at the Institute for Advanced Study in Princeton. So it isn't just correlations. It is part of a wider, consistent picture with endless empirical results and physical mechanisms to explain them.