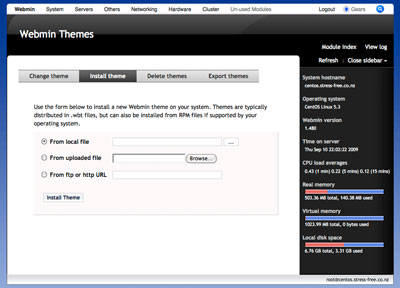

StressFree Webmin theme version 2.09 released 1 Dec 2010 6:50 PM (15 years ago)

This update to the Webmin theme includes a patch from Alon Swartz (from the TurnKey Linux project) that fixes a bug in the display of menu icons. Also included are new icons for the LDAP Client module.

The updated theme can be downloaded from here.

Tuning Ubuntu's software RAID 22 Apr 2010 7:04 PM (15 years ago)

Recently I encountered an issue where the read/write performance of Ubuntu's software RAID configuration was relatively poor. Fortunately, others have encountered this problem and have documented a potential cause and solution here:

The short story is that Ubuntu uses some very conservative defaults for RAID caching. Whilst this may ensure reliable behavior across a range of hardware, it does mean that for many read/write performance will be lacklustre. The solution to this problem is to define a more aggressive caching options on any software RAID partitions that are in use.

Setting the stripe_cache_size and read ahead caches

The following example assumes that the Ubuntu server has two software-based RAID-5 partitions, /dev/md0 (the root partition) and /dev/md1 (the /var partition).

Set the stripe_cache_size and read ahead caches in the /etc/rc.local script. In the example below the stripe_cache_size is set to 8192, and the read ahead cache 4096:

#!/bin/sh -e

#

# rc.local

#

# This script is executed at the end of each multiuser runlevel.

# Make sure that the script will "exit 0" on success or any other

# value on error.

#

# In order to enable or disable this script just change the execution bits.

#

# By default this script does nothing.

# Tune the RAID5 configuration

echo 8192 > /sys/block/md0/md/stripe_cache_size

echo 8192 > /sys/block/md1/md/stripe_cache_size

blockdev --setra 4096 /dev/md0

blockdev --setra 4096 /dev/md1

exit 0

Restart Ubuntu to apply these settings.

Note: It is possible to apply these changes without a restart by executing each directive at the command line.

The pages linked to above explain how to test the influence of these cache changes. In general I have found that the parameters given in the example above have improved performance without influencing the reliability of the system, or the data stored on it.

Bluestreak and the birth of a collaboration kernel 7 Jan 2010 12:11 AM (15 years ago)

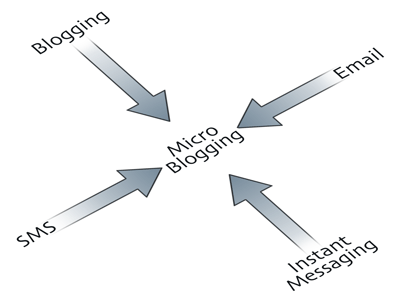

Successful Architecture, Engineering and Construction (AEC) collaboration depends on the timely dissemination of relevant information throughout the project team. This task is made difficult by the number of collaboration interactions that occur and the diverse range of digital tools used to support them. To improve this process it is proposed that a collaboration kernel could weave together these disparate interactions and tools. This will create a more productive and efficient collaboration environment by allowing design discussion, issues and decisions to be efficiently and reliably exchanged between team members and the digital tools they currently use. This article describes how Project Bluestreak, a messaging service from Autodesk Labs, can be transformed into an effective collaboration kernel. To guide this transformation, the principles of the Project Information Cloud have been used to evaluate the existing service and identify areas for future development. These fundamental digital collaboration principles are derived from lessons learnt in the formation of the World Wide Web. When these principles are embodied within a digital collaboration tool, they have demonstrated an ability to improve the timely delivery of relevant information to members of the project team.

Seamless collaboration within a fragmented digital environment

A successful AEC digital collaboration environment brings multiple parties together so that they can productively work towards a satisfactory and achievable design outcome. During this process participants must engage in a variety of interactions between team members and the digital models used to describe the design. These interactions, and the technologies commonly used to enable them, are summarised in the following diagram and table.

Note: The term 'model' refers to a CAD or BIM digital model that represents the proposed design. Digital models play an important role in the collaboration process as they communicate ideas, impose restrictions and can be manipulated to reflect a participant's opinion.

Person to person | |

Purpose | Productive conversations between design participants are critical for the success of any design project. The intention of these interactions is to present, question and debate all aspects of the design. |

Nature | Given the non-linear and bi-directional nature of conversation, the ideas and data communicated are generally fluid and unstructured. To be most effective, the tools used should not introduce latency as this can result in a disjointed conversation. During these exchanges it should be possible for participants to easily reference media such as photographs, documents, diagrams and digital models. |

Enabling technologies | The most common person to person interactions during a design project are physical meetings and telephone conversations. In cases where participants are geographically distributed, Internet-based voice and video conferencing technologies are supplanting these 'traditional' tools. Email, and to a lesser extent instant messaging, are also commonly used in situations where person to person interactions are limited in scope, or do not warrant the interruption of a real-time meeting. |

Person to group | |

Purpose | Individuals must be able to efficiently and reliably communicate information about the design to the project team, such as its status, data and any associated decisions or questions. |

Nature | This interaction is uni-directional because a group cannot directly add to a conversation. If a recipient of a person to group message responds this spawns a new person to person, or person to group interaction. Person to group interactions typically have a specific topic, but the supporting media referenced during the exchange varies depending on the subject and its context. |

Enabling technologies | Email is the most prevalent digital means of communication between a participant and the project team. Messaging systems and discussion forums embedded within project extranets, company intranets or the public Internet are also used. However compared to email their industry adoption is limited. Many document management systems include support for person to group interactions, but this is typically a secondary and underused piece of functionality. |

Person to model | |

Purpose | A participant interacts with the model to understand the design, express new ideas and review the contributed work of others. If the participant cannot efficiently comprehend or manipulate the model, their ability to take part in the broader design discussion is significantly impacted. |

Nature | The nature of this interaction depends on the role and technical ability of the individual. It is common for the majority of an AEC project team to be unable to modify the model. For these participants the model simply communicates the design state, whereas those capable of modifying the model can reshape it to reflect their own opinion, or that of others. |

Enabling technologies | The primary interface between the individual and a digital model is the CAD/BIM software used to create it. Given the complexity and cost of this software, more accessible formats such as DWF and 3D PDF have been developed to allow the entire project team to experience and provide feedback on the model. |

Model to model | |

Purpose | To simplify and distribute the overall process, a design is typically developed using more than one digital model. It is important that these distinct models can be efficiently and consistently integrated so that the team can comprehend the overall design. |

Nature | Given the technical complexity of this task, the flow of data in a model to model interaction typically goes in one direction. This involves extracting the data present in one or more digital models and merging it into a primary 'master' model. |

Enabling technologies | Technologies for model to model interaction vary in complexity, capability and industry penetration. The most common means of consolidation is the manual importing of data from standard digital model formats such as IFC or DWG. Unfortunately, incompatibilities between different CAD/BIM implementations mean such interactions can lead to inconsistent data. Many CAD/BIM tools have functionality for collaboratively editing digital models, but uptake is limited due to their operational complexity and the limitations imposed. |

Model to group | |

Purpose | The overall design needs to be distributed amongst the project team for review and eventual construction. The information conveyed by the model is raw data related to the current state of the design, rather than personal opinion. |

Nature | Given the physical and technical distribution of a project team, it is usually impractical for a group to interact with a digital model in real-time. To compensate, snapshots of the model's design state are created and communicated in a manner that all interested parties can consume. Given its revision-centric nature, the information transfer between model and group is uni-directional. If group members wish to respond to the information conveyed they must establish a new person to person, person to group or person to model interaction. |

Enabling technologies | In larger projects, document management systems such as Buzzsaw, ProjectWise and Aconex are commonly used to ensure the project team is informed of changes to the digital model and supporting documentation. Many of these tools are integrated into CAD/BIM software so that the interaction between model and group is seamless. In smaller projects the cost and complexity of these systems cannot be justified, so manual file transfers using FTP or web servers are often used to distribute the model. |

Given these diverse functional requirements it is understandable that no single technology is capable of satisfying the digital collaboration needs of a project team. This poses a problem because participants stand the greatest chance of receiving timely and relevant data when the digital experience is well integrated. Unfortunately the boundaries between two or more collaboration tools generate inefficiencies, confusion and data loss due to the inability of many digital tools to collaborate with each other. As a consequence, using two or more digital collaboration tools can often lead to the following issues:

- Lack of Process Integration: The decisions or actions taken in one tool are often not reflected in others. In an ideal world, design decisions made during an email exchange would automatically generate outstanding to-do items within the digital model and have the document management service (DMS) notify the team of forthcoming design revisions. When interacting with the digital model or DMS later in the project, this same trail of messages can be used to understand the motivations and justification behind a design element. Currently these actions currently cannot be automatically undertaken, because a simple means of passing messages between the various collaboration tools used by the team does not yet exist.

- No Identity Management: Collaboration tools do not generally use the same system for identifying users or recording information about them. This forces participants to create numerous virtual identities and maintain a record of those used by the team. This becomes problematic when reviewing a series of design decisions that have been made in unison with multiple collaboration tools. For example, a project team using email to exchange thoughts between participants, BIM to develop the digital model and a document management service to distribute the outcomes employs the following identity systems:

Interaction

Software

Identity System

Person to model

BIM software

Account on the local operating system. e.g. COMPANY\username

Person to person

Email

Globally unique email address. e.g. participant.name@company.com

Model to team

Document management service (DMS)

DMS-specific user account. e.g. participant_name

With three different identity systems, tracking a design decision from conception (email) to its finalisation (in the DMS) becomes a complex process. Questioning a design decision is no easier because the participant must first identify who it is they need to talk to, and from there discern that person's virtual identity relative to the collaboration tool being used to conduct the interaction.

- Functional and Data Repetition: The lack of messaging or identity integration between collaboration tools results in the repetition of functionality and data-entry tasks. Common information such as the identities of team members, their project roles and general interests cannot be easily shared or consumed by applications. Similarly, common collaboration functionality used by multiple applications must be continually reimplemented rather than being reused. This occurs because utilising functionality present in third-party applications is difficult, and not all participants have access to the relevant software dependencies. This situation is akin to early desktop computing where system-wide functionality such as copy, paste and printing did not exist. Once this shared functionality was introduced, the capability and productivity of desktop computing was improved because all involved could rely on the presence and consistent behaviour of these familiar tools.

Using a collaboration kernel to integrate collaboration interactions

In an ideal world, the various collaboration interactions which occur during a project would be supported by a single, tightly integrated software application. This 'digital collaboration swiss army knife' would promote an efficient and cohesive collaboration environment by reliably recording and seamlessly communicating relevant design information throughout the team. Unfortunately a universal AEC digital collaboration tool is impractical, both now and in the foreseeable future, because of the complications which arise from bundling so much functionality into a single tool that will be used by a diverse audience. Rather than trying to reinvent the perfect wheel, a more practical approach is needed that provides a means for existing digital tools to exchange design discussion, issues and decisions. This will relieve the integration and replication issues that currently exist without having to start from scratch. The most efficient and reliable means of solving this problem is to establish a collaboration kernel that can act as an intermediary between the disparate tools. This Internet-centric service would in effect become the project's digital post office, overseeing the exchange of messages that support, summarise and promote the collaboration interactions taking place within the project team. A collaboration kernel's presence would be subtle, but its influence on collaboration would be significant. For example, consider the following hypothetical scenario set in the not too distant future:

Pam the project manager reviewed the client's email. The design of the entrance foyer for their multi-storey commercial development needed to be enlarged to accommodate more activities than originally projected. This was not a simple task because the layout of the ground floor was tight, so allocating more space meant sacrificing something else. In her email client she highlighted the email, pressed the New Task button and from the list of names assigned it to Andy the architect. She wrote a quick summary of the task ahead:

"From Pam to Andy: Tomorrow can you identify an alternative foyer design based on the criteria listed in this email?"

She pressed the 'Create Task' button and left work for the evening. As she left, the email client uploaded a copy of the email to the architecture practice's internal server where Andy could access it. It then passed Pam's message, along with a link to the relevant email, to the collaboration kernel which would ensure the task would be brought to Andy's attention the next morning.

The next morning Andy arrived in the office and logged into the Practice's Intranet. His personalised homepage checked in with the collaboration kernel, which promptly returned the task Pam had assigned to him. Andy read the message and followed the link to the referenced email. Being newly assigned to the project he was not fully aware of previous design decisions associated with the foyer. To provide some background he queried the collaboration kernel for all the design interactions related to that specific part of the building. The service returned a chronological history showing who had been involved in the design of this aspect and what input had been recorded. The breakdown revealed two particularly active design periods which included references to early 3D models and preliminary spacial renderings. Reviewing this work and the associated discussions, Andy quickly came to terms with the design concepts and issues at work within this part of the building. He opened the project's Building Information Model (BIM), but before starting work on the revision made the following note in the modelling tool's work-log:

"From Andy to everyone: I am spending this morning redeveloping the entrance foyer as per Pam's instructions."

He attached Pam's task to this note and saved it to the work-log. Behind the scenes the BIM software published the message to the collaboration kernel. The kernel broadcast the message to everyone in the team so that they could be forewarned of the changes afoot.

Meanwhile in another part of town Leny the lighting consultant was finalising the design of the building's ground floor lighting. That morning he had received a phone call from the client requesting a change to some of the fittings, but the proposed foyer changes had not been mentioned. His lighting simulation software displayed a notification from one of the architects:

"From Andy: I am spending this morning redeveloping the entrance foyer as per Pam's instructions."

Lenny could not access Pam's referenced instructions as he worked in another office, but he got the feeling this could affect his lighting design. He contacted Andy over instant messaging, and very quickly they identified the change would be a problem and that they should have a telephone conversation to discuss a practical way forward. After the telephone call Lenny quickly made a couple of notes about the conversation and what changes they had both agreed to make to their respective digital models:

"From Lenny to everyone: Andy and I have just discussed the proposed changes to the foyer and have come to an agreement that will suit the client's needs and code requirements."

"From Lenny to Andy: If you redesign the east side of the foyer as discussed I will be in a position to make the relevant lighting design changes this afternoon."

These notes were published to the collaboration kernel where they were distributed to everyone in the team. The second note was addressed to Andy so that his computer would remind him of Lenny's plans.

Andy spent the morning modifying the digital model to include the revised foyer design. On completion he published the revised model to the project's document management system (DMS) for review. On committing the change he wrote a quick summary of what design aspects had been modified:

"From Andy: This revision to this foyer design takes into account the changes to capacity requested by the client. Accommodating this extra space required changes to the surrounding design, which is forcing Lenny to redesign aspects of the lighting."

News of this change and the accompanying note where automatically published to the collaboration kernel by the DMS. Team members tracking this particular model where then automatically notified of Andy's change by the collaboration kernel. Lenny was one of these people, and on receiving this news he downloaded the revised model for checking against his updated lighting design. After confirming there were no conflicts and the design met code requirements he published a note via the collaboration kernel:

"From Lenny to Andy and Pam: I have reviewed Andy's proposed foyer changes alongside my revised lighting layout. Everything checks out, and as far as I am concerned everything can proceed."

The collaboration kernel delivered the message to Pam to her mobile phone via SMS. She was tied up on the construction site in meetings most of the day, but had been keeping half an eye on Andy and Lenny's activity. She sent an SMS message in reply:

"From Pam to Andy and Leny: Good progress. When I get back to the office I will have the client to review both changes."

The SMS went to a service that automatically forwarded incoming messages from approved numbers to the collaboration kernel for distribution amongst the team.

Establishing a collaboration kernel and attaining this level of integration between the various digital tools in use will take a significant amount of time and resources. Fortunately the early foundations of this cohesive environment may already be in place. For example one promising collaboration kernel candidate is Project Bluestreak, a web-based messaging tool from Autodesk Labs.

The untapped potential of Bluestreak

Autodesk Labs' Project Bluestreak is a Web-based tool for exploring the applicability and usefulness of various 'Web 2.0' and social networking concepts within the context of design collaboration. Whilst unique for Autodesk, this is not the first time these technology concepts have been applied within the AEC industry. For example Vuuch and Kalexo are two established and functionally richer products. However, Autodesk is a dominant and pervasive presence throughout the world of digital design. Therefore if Bluestreak testing proves successful, aspects of it could permeate through their entire software portfolio. This would significantly benefit the workflow of Autodesk's customers, and ultimately influence the direction of collaboration within the industry. In the shorter-term, a key differentiator between Bluestreak and its contemporaries is the support pledged to third-party application development on the platform. Of late, developer ecosystems that leverage information and relationships stored within larger, parent networks have achieved significant business traction. SalesForce's AppExchange and Facebook's Application Directory are prominent examples of this strategy. In both cases, large numbers of independently developed applications have flourished thanks to the popularity of the underlying core service. A collaboration-centric application ecosystem would not garner the same levels of developer or media attention, but within the context of the AEC industry would still be a powerful platform. For Autodesk such an endeavour would add considerable value to their product line, whilst for third-party collaboration tool vendors it would significantly ease development and distribution costs.

When viewed alongside the concept of a collaboration kernel, Bluestreak in its current form is a lost opportunity. Instead of a standalone website, the service should be repositioned as a social messaging service that will be integrated across Autodesk's software portfolio. This would be a strong move as it would expose the service to a broad audience and position it as a viable collaboration kernel. Internally this would benefit Autodesk as it would allow their various development groups to leverage this collaboration-centric functionality via a set of Application Programming Interfaces (API). Once standardised, these same APIs could be publicly exposed to enable third-party application integration, or entirely new collaboration experiences. Third-party software vendors would be eager to build on this platform as it would simplify development and provide a direct, sanctioned link to Autodesk's applications and customer network. Whilst this strategy may sound simple, transforming Bluestreak into a viable collaboration kernel will not be straightforward. The service shows promise but it needs a considerable amount of redevelopment before it can adequately meet this challenge. Rather than blindly working towards this goal, a more productive approach is to analyse Bluestreak's theoretical performance relative to the collaboration principles set down by the Project Information Cloud. This process will identify a set of functional improvements that are required before it can effectively meet the demands of operating as a collaboration kernel.

A Bluestreak in the Project Information Cloud

The intention of a collaboration kernel is to improve the timeliness and relevancy of information delivered to project participants. To achieve this, the kernel must provide a set of common functionality that can be easily leveraged by other AEC software tools. This will efficiently improve the capability of these tools and allow team members to participate in an integrated and consistent collaboration environment. But what functionality does such a kernel require and how will this ensure the collaboration experience is improved?

One solution to this problem is to apply the principles of the Project Information Cloud to the design of the collaboration kernel. The Project Information Cloud is a proposal for an integrated collaboration environment where a project's digital history is readily accessible to those involved (see Using Project Information Clouds to Preserve Design Stories within the Digital Architecture Workplace). The principles of this environment have been derived from the World Wide Web, which in a relatively short space of time has proven to be a very successful and versatile medium for digital collaboration.

The seven principles of the Project Information Cloud are:

- Comprehension: Is the system relatively easy to understand and use by both developers and participants within a project team? Technology should facilitate streamlined and reliable collaboration interactions instead of being an unfortunate necessity.

- Modularity: Can the functionality of the system be extended or replicated by a third-party without interrupting the overall experience of the project team? The concept of a collaboration kernel implies that the extra functionality required to achieve each collaboration interaction can be seamlessly 'bolted on'.

- Decentralisation: Can the collaboration interactions reliably occur without the presence of a central, mediating body? Likewise can one or more parties leave the project team without effecting the consistent flow of information?

- Ubiquity: Can the entire project team access the system from the digital tools that they commonly use? Reliable interaction with the collaboration environment should not require specialised tools that are dependent on a specific software vendor.

- Situational Awareness: Is the system capable of gathering and responding to external information generated by other systems within the project team? A system that stands alone is of marginal value as a collaboration tool.

- Context Sensitivity: Does the system understand the hierarchy and ongoing activities within the project team, and can it tailor its operations and user-interfaces accordingly? AEC project teams are complex and constantly changing. Collaboration systems that cannot adapt during these context shifts are at best a hindrance, and at worst a liability.

- Dynamic Semantics: Can the system's categorisation system change over time so that participants record and navigate information in a way that relates to the current state of the project? No two projects are identical, and as they evolve the vocabulary used to describe the design and associated activities needs to keep pace with this change.

The ability of a collaboration tool to satisfy these principles can be visually illustrated on a seven point spider diagram. Analysing a tool's performance in this manner is a simple yet effective means of identifying its strengths and weaknesses relative to other collaboration technologies. The rating system employed by this spider diagram is illustrated below and described in the following table.

Comprehension | |

0 - Enigma | The purpose, processes and outcomes of the collaboration tool are impossible to understand. |

1 | One or two aspects of the tool's purpose, processes and outcomes are somewhat understood by a few users. |

2 | After significant amount of effort, the tool's purpose, processes and outcomes can be understood by the minority of users. |

3 | After some effort, the purpose, processes and outcomes of the tool can be largely understood by the majority of users. |

4 - Obvious | The purpose, processes and outcomes of the tool are readily understood by all users. |

Modularity | |

0 - Sculpture | The tool is made from a single, large component whose functionality cannot be extended or replicated. |

1 | The tool is made from a single, large component, but with significant effort minor functional aspects can be extended or replicated. |

2 | Parts of the tool are modular and with significant effort some its functionality can be extended or replicated. |

3 | The majority of the tool is modular and with some effort most of its functionality can be extended or replicated. |

4 - Lego | The tool is completely modular and with minimal effort all of its functionality can be extended or replicated. |

Ubiquity | |

0 - Exclusive | The tool is only used by a single party and employs non-standard, proprietary technologies and data formats. |

1 | The tool has some industry use, but it is not readily available and employs non-standard, proprietary technologies and data formats. |

2 | The tool is readily available, but not widely used and generally employs non-standard, proprietary technologies and data formats. |

3 | The tool is readily available and widely used, but it generally employs non-standard, proprietary technologies and data formats. |

4 - Universal | The tool is readily available, widely used and employs freely accessible technologies with standardised data formats. |

Decentralisation | |

0 - Monolith | The tool in its entirety is bound to a single location and cannot be moved or used anywhere else. |

1 | The tool is based in one location, but with significant effort it can be deployed to and used in multiple locations. |

2 | The tool relies on some centralised components, but with moderate effort it can be deployed to and used in multiple locations. |

3 | The tool has a few centralised components that do not stop it from easily being deployed to and used in multiple locations. |

4 - Mesh | The tool's components are distributed and replicated, which presents no single point of failure and allows its use from anywhere. |

Situational Awareness | |

0 - Isolationist | The tool is isolated from the outside world and its processes and interface cannot respond to changes in this environment. |

1 | With significant effort the tool can monitor a few external resources so that its processes or interface can respond to changes in them. |

2 | With moderate effort the tool can monitor some external resources so that its processes or interface can respond to changes in them. |

3 | With minimal effort the tool can monitor a large number of external resources and can automatically respond to changes in them. |

4 - Hive mind | The tool is deeply intertwined with its surrounding environment and its processes and interface automatically responds to changes in it. |

Context Sensitivity | |

0 - Oblivious | The tool has no understanding of the project situation and its processes and interface only operate one way. |

1 | The tool has no understanding of the project situation, but with significant effort, its processes and interface can be tuned. |

2 | The tool has a very limited understanding of the project situation, but with moderate effort, its processes and interface can be tuned. |

3 | The tool has a limited understanding of the project situation, and in response can change some processes and interface aspects. |

4 - Aware | The tool has a strong understanding of the project situation, and in response automatically changes its processes and interface. |

Dynamic Semantics | |

0 - Meaningless | The tool employs no semantic system to organise the data it collects or transfers. |

1 | The tool employs a single semantic system that cannot be modified without considerable effort or planning. |

2 | The tool employs a single semantic system that can be modified with minimal effort or planning. |

3 | The tool employs multiple semantic systems specific to the user and their context, but modifying them requires considerable effort. |

4 - Expressive | The tool employs multiple semantic systems specific to the user and their context, and if need be they can be easily modified. |

How each of these Project Information Cloud principles is embodied within collaboration tools currently used by the AEC industry is illustrated in the following diagrams. In this diagrammatic analysis an ideal digital collaboration tool would form a perfect heptagon, but in each case one or more areas are found to be lacking.

These same principles can be applied to Bluestreak to identify its collaboration strengths and weaknesses. Adequately satisfying these principles will ensure the service has a strong chance of performing well as a collaboration kernel. Bluestreak's immediate and long-term ability to satisfy the principles of the Project Information Cloud are illustrated in the following diagram and proceeding text.

Comprehension

Bluestreak is currently easy to understand because it has only just been released and therefore lacks functionality or historical 'cruft'. Given this spartan beginning, the greatest challenge facing Bluestreak's developers is identifying what functionality does not need to be added. This is important because a collaboration kernel should be concise so that those using it have a clear understanding of what services it provides and why. A limited scope will help to ensure the Bluestreak platform is easily adopted by developers and end-users appreciate its role in collaboration. This strategy has been very successful for Twitter, which has flourished thanks to the ease by which developers and users alike have understood what it has to offer and how to leverage it to achieve their desired results.

The difficultly ahead for Bluestreak is that becoming a successful collaboration kernel requires it integrate with a diverse range of AEC tools in a number of ways (as illustrated by the diagram above). This integration breaks down into three forms:

- Components: Autodesk and third-parties will build components on top of the Bluestreak API that will form a critical part of its web interface and functionality.

- Web Service API: For basic operations many Autodesk and third-party web applications will interact with Bluestreak using a set of web service functions. Web services are a ubiquitous and accessible means of exchanging data between different systems, but these same properties makes it an inefficient means of programming complex tasks.

- Client API Libraries: Learning a set of low-level web services and writing custom code poses a significant learning curve and development hurdle. To ease this burden Autodesk needs to provide a set of software libraries which allow developers to reliably and quickly perform a set of complex Bluestreak operations using only a few lines of code.

To improve the comprehension of developers and users it is important that these three integration points are well designed and documented. A developer should not be expected to understand the entire Bluestreak platform if all they wish to do is achieve quick results using a Client API library. In contrast, the experience of the end-user should be such that they are unaware these even interfaces exist. To them Bluestreak should be as transparent as possible so that collaboration across different applications appears to "just work".

Modularity

Bluestreak's capacity to be modular hinges on its API which will allow third-parties to develop new components. As this API is currently not publicly available judgement cannot be passed on its success. However, it is promising that Bluestreak's own file upload component has been developed using a subset of it. Beyond allowing independent parties to add new functionality, a well documented and public API can be reimplemented by other collaboration systems such as ProjectWise, Aconex and Vuuch. If these services reimplemented the API then, at least in theory, Bluestreak components would be able to integrate with, or run inside of these other services. The benefit of this modularity is that a 'killer application' written on top of the Bluestreak API would not necessarily be restricted to Autodesk's collaboration environment. In the programming world cross-platform APIs and runtime environments are popular and powerful platforms. These range from fully portable programming runtimes such as Java, to ports of traditional APIs like WINE, which enables Windows applications run unmodified on other operating systems.

A diagram illustrating the relationship between the Bluestreak service, its API and various Autodesk and third-party applications.

Beyond the as yet unreleased API, Bluestreak employs OpenID which is an open standard for authenticating to websites. This is currently limited to Autodesk's own OpenID provider, but a future iteration could permit third-party OpenID services to be used, for example Google, Yahoo or an internal corporate account. Enabling authentication modularity in this manner lowers barriers to entry, as potential collaborators will not necessarily have to create a new online identity to participate in an online conversation.

Decentralisation

Like most web applications, Bluestreak cannot be installed onto a private server and migrating data stored on it to another service is not straightforward. This may suffice for a consumer application, but it poses a significant problem in the context of the AEC industry. Companies require reliable systems that adhere to entrenched processes and policies. Therefore to be successful Bluestreak must be decentralised so that it can be run 'in-house' or integrated into other systems.

The first step in this process would be to offer Bluestreak as a standalone application that can be installed on a local server. This sounds straightforward, but in practice it would require significant changes to the way Bluestreak is designed and implemented. An isolated copy of Bluestreak is of limited value if it cannot "talk" to other Bluestreak installations. For example if architects and engineers cannot exchange information because they are running different Bluestreak instances, then the service as a whole is of limited collaboration value. Unfortunately enabling this level of reliable and timely data exchange is fraught with challenges. Google Wave captured headlines due to its rich user-interface, but ultimately its long-term success hinges on the ability of the Wave Federation Protocol to allow users on different Wave servers to seamlessly collaborate in near real-time. A viable option would be for Autodesk to follow Novell's lead and implement the Wave Federation Protocol within Bluestreak. This would solve the decentralisation problem, however this would be a complex, costly and inherently risky undertaking.

Ubiquity

Bluestreak shows promise as a collaboration kernel because it is built on ubiquitous technologies and places minimal restrictions on what can be exchanged. Being a Javascript-based web application, it can be accessed from any standards compliant web browser with an Internet connection. Likewise, when using the tool participants are free to exchange whatever data their team can readily access, instead of being forced into specific formats.

Micro-blogging is one area where Bluestreak could enhance its ubiquity. Micro-blogging is a promising AEC collaboration medium (see Using micro-blogging to record architectural design conversation alongside the BIM), but the implementation within Bluestreak is hamstrung by its isolation and inconsistencies. There is currently no means of posting a message without visiting the Bluestreak website, and for no discernible reason 'status' and 'group' messages have different maximum lengths - 150 vs 250 characters respectively. A more ubiquitous approach would be to implement an existing, albeit immature, micro-blogging standard such as StatusNet (formerly Laconi.ca). Extending an established platform would allow Bluestreak to leverage this existing functionality and community. Project teams would then be able to use desktop or mobile-based software clients rather than just the Bluestreak website. From the perspective of decentralisation, initiatives like StatusNet also allow different micro-blogging systems to exchange messages. These federated micro-blogging solutions are simpler than Google's Wave Federation Protocol, and could prove 'good enough' for the purposes of digital design collaboration.

Beyond the promotion of ubiquitous formats and processes, the concept of Bluestreak needs to become ubiquitous across Autodesk's software line. Similar to Ray Ozzie's Mesh initiative within Microsoft, Bluestreak should be portrayed as a collaboration umbrella that touches upon all aspects of Autodesk's activities. Conversations currently taking part within the Bluestreak web application need to be brought to the 3D CAD and BIM tools where the majority of design development, analysis and documentation is taking place. For example, when using Revit an architect should be able to review and participate in Bluestreak discussions without leaving the application. Then when the model is exported to DWF for sending to the contractor, relevant aspects of that discussion could be embedded within the file to preserve its context relative to the overall design process.

Situational Awareness

Currently Bluestreak depends on manual data input and there is no way of externally monitoring the discussion taking place within it. This is a considerable shortcoming because collaboration takes place over multiple communication channels. A successful collaboration kernel should make the team aware of the activities taking place on these other channels instead of being oblivious to them. The API could significantly boost situational awareness by allowing components to pull data from external services, or push data into Bluestreak. Examples of potential components are:

- Changes: An agent that monitors files in a third-party document management service and informs the team when modifications take place. Most project documentation will not reside within Bluestreak, so knowing it has changed and to what degree is an important consideration during collaboration.

- Progress: An agent that parses the project manager's Microsoft Project file or shared calendar and alerts the team of significant events. The project timeline is continually evolving and those involved cannot be expected to maintain it in multiple locations. Monitoring a project's timeline also ensures the collaboration service satisfies the principle of context sensitivity.

- External Activity: An agent monitors an external email account, collaboration tool, or web service for information contributed by a third-party. A sub-contractor may not warrant full Bluestreak project membership, but they could be provided an email address for submitting information and questions. The component could then automatically monitor this email account and publish correspondence to Bluestreak.

Situational awareness is a two-way street, so beyond acting as a data sponge, Bluestreak should expose data to trusted third-parties. Presently users can manually monitor conversations via the website, or elect to have all status/group messages emailed to them. Both of these options are problematic because for many team members Bluestreak will not form a part of their daily workflow. As a result most will not visit the website regularly and will soon ignore, or disable, email notifications. These attention issues cannot be resolved by Bluestreak alone. Instead it must work towards exposing its data and functionality to applications that are regularly used by the team. A prime example of this is that a large portion of Twitter use takes place within third-party tools. Similar results can only be achieved by Bluestreak if it exposes the collaboration interactions it records in machine readable formats (RSS, XML, JSON) that can be parsed by other software used within the project team.

Context Sensitivity

Bluestreak's only nod towards context sensitivity is the use of groups to divide people and conversations. In the future it needs to make better use of the contextual information within a project so that participants can easily navigate, filter and target collaboration interactions. For example project teams have clearly defined, hierarchical relationships that reflect the roles and expertise of each participant. A collaboration kernel that successfully leverages this knowledge will be more able to deliver timely and relevant information to the team. Bluestreak users have profiles, but these lack expertise or fields of interest which would help to bring relevant messages to their attention. Alternatively this information could identify people within the team who are the most capable of resolving a specific design problem.

Beyond filtering and highlighting conversations, context is a useful means of stopping information from reaching participants in the first place. In its current form, a Bluestreak project is like working with a group of people in a large auditorium - anybody can say or hear anything. Whilst fine for general situations, when large numbers of people or sensitive data is involved it becomes important that certain interactions occur in private. At present multiple Bluestreak groups can be created to achieve this, but practically this is unwieldy. A more flexible approach would be to allow messages to be addressed to people within the team based on their profile's meta-data or the project's hierarchical structure. This could be achieved by combining micro-blogging's address (@) and subject (#) syntax at the beginning of a message. For example, a message beginning with @#architect would signify it should be brought to the attention of architects within the team. This same mechanism could be extended to specific phases in the project (@#construction), or fields of interest (@#concept). Borrowing again from micro-blogging, a leading 'd' character (for Direct Message) would signify that the message was intended for a restricted audience. Whilst this syntax is simple, it is compatible with micro-blogging standards and can be clearly presented by software agents.

Dynamic Semantics

At present Bluestreak lacks any means for categorising contributed content. When navigating or searching large amounts of AEC collaboration data this soon becomes a problem because the content of many messages does not reflect its subject matter. For example a discussion centered around "indoor and outdoor flow" maybe conceptual (the floor layout), or specific (the detailing of a door). Micro-blogging services like Twitter have demonstrated that semantics can be embedded within messages via hash (#) tags which Bluestreak could easily support. Components could then be developed using the API that allows the project's semantic structure to be visualised and navigated.

Embedding hash tags within messages is a flexible means of publishing semantics, but participants must also be able to retrospectively apply meaning to content. For example a project's taxonomy will initially focus on conceptual ideas, but as the design is refined, so too will the semantics used to describe it. Semantics are also relative depending on the perspective of the participant, therefore it must be possible to assign multiple semantic layers to content. Achieving this semantic flexibility requires users possess the ability to manually re-categorise any content. To assist in this process the collaboration kernel itself should infer meaning based on a message's context and any assigned relationships.

Applied Semantics

Within Bluestreak users should be able to tag any content that has been contributed so it can be referenced by other data. In a distributed environment embedding new semantic information within existing content is problematic because these changes must be replicated across the team. A more efficient means of solving this problem is to assign all content published to Bluestreak a globally unique URL. These simple URL references can then be categorised multiple times using an existing bookmarking/tagging service such as Delicious, or a native Bluestreak tool.

Inferred Semantics

Beyond manual tagging, semi-intelligent agents could categorise collaboration data based on where and when it was created and what it is related to. This would require Bluestreak to be integrated into other software so that information can be automatically included from this environment. For example, an architect using Revit may identify and highlight an issue with the design's foundations. On posting the issue to Bluestreak using a tool built into Revit, relevant meta-data such as the components affected (foundations), materials used (concrete) and the model's revision details (revision #432) would be included automatically.

Conclusion

A collaboration kernel communicates key design ideas, issues and decisions between the disparate digital tools used by the AEC industry. If it became as digitally prevalent as copy and paste is today, such a service would be an efficient and reliable median between the various collaboration interactions which occur. By helping to weave together these various communication channels, the collaboration kernel would improve the timeliness and relevancy of information delivered to members of the project team. The principles of the Project Information Cloud proved very useful in isolating the key characteristics of a collaboration kernel and its benefit to information flow within the team. Using these principles to assess Bluestreak identified a set of changes that would allow it to better fill the role of collaboration kernel. By implementing these changes and integrating the service across its line of software products, Autodesk could be the first to establish a collaboration kernel, and in doing so ultimately improve the AEC industry's overall collaboration capability.

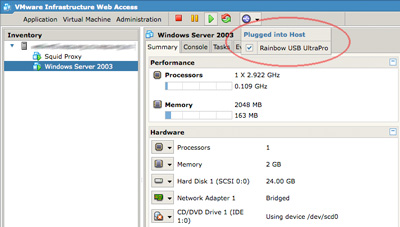

Synchronise two SilverStripe CMS instances 8 Dec 2009 3:40 PM (16 years ago)

This script allows you to operate two distinct, fully functional SilverStripe instances and have the content of one synchronised with the other. When running a content management system in a corporate environment it is useful to have an internal 'development' site and a public 'production' site. SilverStripe has a couple of caching options, namely StaticPublisher and StaticExporter, but these generate static HTML files that cannot be easily modified by content editors.

This approach allows the development and production SilverStripe servers to be easily synchronised, but in between times, content editors are free to make different changes at each end. This is useful when internally the content of the website is undergoing significant change, but during this time the production website content must be 'maintained'.

i.e. You are not forced to 'freeze' your production website, or push internal changes out before they have been properly vetted.

The script copies the local SilverStripe MySQL database (sans page revisions) to the production site and synchronises the assets/Uploads directory.

Note: Page revisions are not sent to the production site because this takes a significant amount of time and bandwidth. Considering these revisions are stored on the internal development server, storing them in both locations is not necessary.

A flow diagram of the actions that take place during this synchronisation process is provided below.

This script assumes that SilverStripe's StaticPublisher caching mechanism is enabled on both local and remote sites, otherwise the sync process will fail.

Requirements

- The script requires SSH, MySQL and RSync, awk, and grep on both servers to function correctly.

- For the email notifications to work, either sendmail or postfix will need to be running locally so that the mail command can deliver notifications.

- For the script run without SSH prompting for passwords, key-based authentication between the two servers will need to be configured. (The key should not have a password.)

- The local MySQL user needs to be able to access two databases:

- Read-only access to the local SilverStripe database.

- All permissions to an empty database where the script can make a copy of the SilverStripe database and strip the page revisions from.

Configuration

The sssync.sh script pulls configuration information from a supplied config file. Below is an example configuration file that lists the various options that should be tuned to your environment.

config

# The local directory where the SilverStripe website is installed

Local_SilverStripe_Directory /var/www

# The local temp directory which the script has write access to

Local_Temp_Directory /tmp

# The local MySQL user (must have write permissions to temp database)

Local_MySQL_User localuser

# The local MySQL password

Local_MySQL_Password localpassword

# The local MySQL hostname

Local_MySQL_Host localhost

# The local MySQL port

Local_MySQL_Port 3306

# The local (primary) MySQL database

Local_MySQL_Database silverstripe

# The local temporary database used to store a revisionless version of the site

Local_MySQL_TempDatabase silverstripe_tmp

# The local user who owns the cache files

Local_User www

# The local group who owns the cache files

Local_Group www

# The remote SSH username

Remote_SSH_User remoteuser

# The remote SSH hostname

Remote_SSH_Host remote.host.name

# The remote SSH port

Remote_SSH_Port 22

# The remote directory where the SilverStripe website is installed

Remote_SilverStripe_Directory /var/www

# The remote directory where backups of the website and database are stored

Remote_Backup_Directory /var/backup/silverstripe

# The remote MySQL username

Remote_MySQL_User remoteuser

# The remote MySQL password

Remote_MySQL_Password remotepassword

# The remote MySQL hostname

Remote_MySQL_Host localhost

# The remote MySQL port

Remote_MySQL_Port 3306

# The remote MySQL database

Remote_MySQL_Database silverstripe

# The remote user who owns the cache files

Remote_User www

# The remote group who owns the cache files

Remote_Group www

# The email address(es) of recipients for sssync email

Recipient_Email_Address notify@user

# The sssync from email address

From_Email_Address sssync@domain.com

# The SMTP server (assumes the Heirloom Mailx utility is used)

SMTP_Server smtp.server.com

The sssync.sh script

The sssync.sh script performs all the described synchronisation functions. Copy and paste the following into a file on your local server named sssync.sh. Make sure you mark it as executable (chmod 777).

sssync.sh (this file can be downloaded from here)

#!/bin/sh

#

# This program is free software: you can redistribute it and/or modify

# it under the terms of the GNU General Public License as published by

# the Free Software Foundation, either version 3 of the License, or

# (at your option) any later version.

#

# This program is distributed in the hope that it will be useful,

# but WITHOUT ANY WARRANTY; without even the implied warranty of

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

# GNU General Public License for more details.

#

# A copy of the GNU General Public License is available at

# <http://www.gnu.org/licenses/>.

#

#

###########################################################

# sssync - SilverStripe Site Sync #

###########################################################

#

# Author: David Harrison

# Date: 9 December 2009

#

# This script synchronises a remote SilverStripe installation

# with a local copy. It is assumed that SilverStripe's caching

# mechanism is enabled.

# For SSH authentication to occur without a password prompt,

# SSH keys should be generated to allow password-less login.

#

# ---------------------------------------------------------

#############################################

# Variables pulled from the supplied config #

#############################################

# Local website directory

localSSDir=`awk '/^Local_SilverStripe_Directory/{print $2}' $1`

# Local temp directory

tempDir=`awk '/^Local_Temp_Directory/{print $2}' $1`

# Local MySQL configuration

localMySQLUser=`awk '/^Local_MySQL_User/{print $2}' $1`

localMySQLPassword=`awk '/^Local_MySQL_Password/{print $2}' $1`

localMySQLHost=`awk '/^Local_MySQL_Host/{print $2}' $1`

localMySQLPort=`awk '/^Local_MySQL_Port/{print $2}' $1`

localMySQLDatabase=`awk '/^Local_MySQL_Database/{print $2}' $1`

localMySQLTempDatabase=`awk '/^Local_MySQL_TempDatabase/{print $2}' $1`

# Remote SSH configuration

remoteSSHUser=`awk '/^Remote_SSH_User/{print $2}' $1`

remoteSSHHost=`awk '/^Remote_SSH_Host/{print $2}' $1`

remoteSSHPort=`awk '/^Remote_SSH_Port/{print $2}' $1`

# Remote directories

remoteSSDir=`awk '/^Remote_SilverStripe_Directory/{print $2}' $1`

remoteBackupDir=`awk '/^Remote_Backup_Directory/{print $2}' $1`

# Remote MySQL configuration

remoteMySQLUser=`awk '/^Remote_MySQL_User/{print $2}' $1`

remoteMySQLPassword=`awk '/^Remote_MySQL_Password/{print $2}' $1`

remoteMySQLHost=`awk '/^Remote_MySQL_Host/{print $2}' $1`

remoteMySQLPort=`awk '/^Remote_MySQL_Port/{print $2}' $1`

remoteMySQLDatabase=`awk '/^Remote_MySQL_Database/{print $2}' $1`

# The email options - email requires sendmail or postfix running locally

emailRecipient=`awk '/^Recipient_Email_Address/{print $2}' $1`

fromAddress=`awk '/^From_Email_Address/{print $2}' $1`

smtpServer=`awk '/^SMTP_Server/{print $2}' $1`

# The sendEmail function delivers an email notification.

# This function assumes the Heerloom mailx utility is installed on the system.

# It takes the following parameters:

# 1- Subject

# 2- Message

sendEmail() {

echo "Sending email to $emailRecipient:"

echo " Subject - ${1}"

echo " Message - ${2}"

echo ${2} | mail -s "${1}" -S "smtp=$smtpServer" -r $fromAddress $emailRecipient

}

# The buildStripVersionsSQL function constructs a temporary SQL file that contains

# commands for removing the revisions from the temp database.

#

# Note: If you have custom page types include the relevant SQL statements below

buildStripVersionsSQL() {

echo "DELETE FROM ErrorPage_versions;" > ${tempDir}/sssync.sql

echo "DELETE FROM GhostPage_versions;" >> ${tempDir}/sssync.sql

echo "DELETE FROM RedirectorPage_versions;" >> ${tempDir}/sssync.sql

echo "DELETE FROM SiteTree_versions;" >> ${tempDir}/sssync.sql

echo "DELETE FROM VirtualPage_versions;" >> ${tempDir}/sssync.sql

}

# The cleanTemp function removes the temporary error file.

cleanTemp() {

rm ${tempDir}/sssync.err

}

# The rollBackChanges function restores the file and database backup of the remote website.

rollBackChanges() {

echo "Rolling back the remote file changes"

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"tar -xzf ${remoteBackupDir}/html.tgz -C ${remoteSSDir}"

echo "Rolling back the remote database changes"

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"mysql -u ${remoteMySQLUser} -h ${remoteMySQLHost} -p${remoteMySQLPassword} \

-P ${remoteMySQLPort} \

${remoteMySQLDatabase} < ${remoteBackupDir}/backup.sql"

}

echo

echo "--------------------------------------------"

echo "| SilverStripe Sync process initiated |"

echo "--------------------------------------------"

echo

echo "Local SilverStripe directory: ${localSSDir}"

echo "Temporary directory: ${tempDir}"

echo "--------------------------------------------"

echo

logger "Initiating sssync script..."

echo "Rebuilding the local SilverStripe cache"

cd ${localSSDir}

sapphire/sake dev/buildcache flush=1 > ${tempDir}/sssync.err 2>&1

chown -R $localUser:$localGroup cache

localCacheRebuilt=`tail ${tempDir}/sssync.err | grep "== Done! =="`

cleanTemp

if [ "${localCacheRebuilt}" != "== Done! ==" ]

then

echo

echo "** Error rebuilding the SilverStripe cache - Exiting **"

echo

sendEmail "Error rebuilding local SilverStripe cache"\

"There was an error rebuilding the SilverStripe cache. \

The sync process was not undertaken."

exit

fi

#############################################

# Create a local, revisionless SS database #

#############################################

echo "Creating a temporary, revisionless database"

mysqldump -C -u ${localMySQLUser} -p${localMySQLPassword} -h ${localMySQLHost} -P ${localMySQLPort} ${localMySQLDatabase} | \

mysql -u ${localMySQLUser} -p${localMySQLPassword} -h ${localMySQLHost} -P ${localMySQLPort} ${localMySQLTempDatabase} > ${tempDir}/sssync.err 2>&1

# Create the SQL file to pass to the temp database

buildStripVersionsSQL

# Stip the versions from the temp database

mysql -u ${localMySQLUser} -p${localMySQLPassword} -h ${localMySQLHost} -P ${localMySQLPort} ${localMySQLTempDatabase} \

< ${tempDir}/sssync.sql > ${tempDir}/sssync.err 2>&1

# Remove the temporary SQL file

rm ${tempDir}/sssync.sql

localDBCreated=$(cat ${tempDir}/sssync.err)

cleanTemp

if [ "${localDBCreated}" != "" ]

then

echo

echo "** Error creating a revisionless database - Exiting **"

echo

sendEmail "Error creating revisionless database"\

"There was an error creating a revisionless version of the local database. \

The sync process was not undertaken."

exit

fi

#############################################

# Before performing the sync, make a backup #

#############################################

echo "Moving HTML backup ${remoteBackupDir}/html.tgz to ${remoteBackupDir}/html.tgz.old"

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"mv ${remoteBackupDir}/html.tgz ${remoteBackupDir}/html.tgz.old"

echo "Moving SQL backup ${remoteBackupDir}/backup.sql to ${remoteBackupDir}/backup.sql.old"

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"mv ${remoteBackupDir}/backup.sql ${remoteBackupDir}/backup.sql.old"

echo "Creating backup of the remote website"

remoteBackupMade=`ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"tar -czf ${remoteBackupDir}/html.tgz -C ${remoteSSDir} ."`

echo "Creating backup of the remote database"

remoteBackupMade="${remoteBackupMade}`ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"mysqldump -u ${remoteMySQLUser} -h ${remoteMySQLHost} -P ${remoteMySQLPort} -p${remoteMySQLPassword} \

${remoteMySQLDatabase} > ${remoteBackupDir}/backup.sql"`"

if [ "${remoteBackupMade}" != "" ]

then

echo

echo "** Error creating remote backup - Exiting **"

echo

echo "Moving the old remote backups into place"

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"mv ${remoteBackupDir}/html.tgz.old ${remoteBackupDir}/html.tgz"

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"mv ${remoteBackupDir}/backup.sql.old ${remoteBackupDir}/backup.sql"

sendEmail "Error creating remote backup"\

"There was an error creating a backup of the remote website files or database. \

The sync process was not undertaken."

exit

fi

# Variable to hold sync failure flag

syncFailure="false"

##############################################

# Perform the synchronisation of file assets #

##############################################

echo "Synchronising remote website assets/Uploads directory with local copy"

remoteFileSync=`rsync -aqz --delete -e "ssh -p ${remoteSSHPort}" ${localSSDir}/assets/Uploads/ \

${remoteSSHUser}@${remoteSSHHost}:${remoteSSDir}/assets/Uploads/`

if [ "${remoteFileSync}" != "" ]

then

syncFailure="true"

echo

echo "** Error synchronising website assets/Uploads - Rolling back changes **"

echo

sendEmail "Error synchronising website assets/Uploads"\

"There was an error synchronising the remote website's asset directory. \

The sync process was rolled back."

fi

##############################################

# Synchronise the local and remote databases #

##############################################

echo "Synchronising the remote database with the local (temp) database"

mysqldump -C -u ${localMySQLUser} -p${localMySQLPassword} -h ${localMySQLHost} \

-P ${localMySQLPort} ${localMySQLTempDatabase} | \

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"mysql -u ${remoteMySQLUser} -h ${remoteMySQLHost} -p${remoteMySQLPassword} \

-P ${remoteMySQLPort} ${remoteMySQLDatabase}" > ${tempDir}/sssync.err 2>&1

remoteMySQLSync=$(cat ${tempDir}/sssync.err)

cleanTemp

if [ "${remoteMySQLSync}" != "" ]

then

syncFailure="true"

echo

echo "** Error synchronising the MySQL databases - Rolling back changes **"

echo

sendEmail "Error synchronising the MySQL databases"\

"There was an error synchronising the two MySQL databases. \

The sync process was rolled back."

fi

if [ "${syncFailure}" == "true" ]

then

echo

echo "** Error synchronising the SilverStripe site - Rolling back & exiting **"

echo

# Roll back the file and database changes

rollBackChanges

sendEmail "Error synchronising the remote website"\

"There was an error performing the synchronisation process. \

The sync process was rolled back."

exit

fi

##############################################

# Rebuild the remote SilverStripe web cache #

##############################################

echo "Rebuilding the remote SilverStripe cache"

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"cd ${remoteSSDir}; sapphire/sake dev/buildcache flush=1" > ${tempDir}/sssync.err 2>&1

ssh ${remoteSSHUser}@${remoteSSHHost} -p ${remoteSSHPort} \

"chown -R $remoteUser:$remoteGroup ${remoteSSDir}/cache"

remoteCacheRebuilt=`tail ${tempDir}/sssync.err | grep "== Done! =="`

cleanTemp

if [ "${remoteCacheRebuilt}" != "== Done! ==" ]

then

echo

echo "** Error rebuilding the remote SilverStripe cache - Rolling back changes **"

echo

# Roll back the file and database changes

rollBackChanges

sendEmail "Error rebuilding the remote SilverStripe cache"\

"There was an error rebuilding the remote SilverStripe cache."

exit

fi

sendEmail "SilverStripe was successfully synchronised"\

"Congratulations, the remote website was synchronised without any issues."

logger "sssync script completed"

echo

echo "------------------------------------------------"

echo "SilverStripe was successfully synchronised"

echo "=============================="

echo

Running the script

Assuming your configuration file is in the same directory as the sssync.sh script, run the sync process with the following command:

./sssync.sh config

Assuming the requirements have been met and sync process takes place without error, the following output should be generated:

--------------------------------------------

| SilverStripe Sync process initiated |

--------------------------------------------

Local SilverStripe directory: /var/www

Temporary directory: /var/backup/sssync

------------------------------------------

Rebuilding the local SilverStripe cache

Creating a temporary, revisionless database

Moving HTML backup /var/backup/silverstripe/html.tgz to /var/backup/silverstripe/html.tgz.old

Moving SQL backup /var/backup/silverstripe/backup.sql to /var/backup/silverstripe/backup.sql.old

Creating backup of the remote website

Creating backup of the remote database

Synchronising remote website assets/Uploads directory with local copy

Synchronising the remote database with the local (temp) database

Rebuilding the remote SilverStripe cache

Sending email to recipient@user.com:

Subject - SilverStripe was successfully synchronised

Message - Congratulations, the remote website was synchronised without any issues.

------------------------------------------------

SilverStripe was successfully synchronised

==============================

It is possible to have multiple configuration files and store them in a different directory to the sssync.sh script. For example:

./ssync.sh /etc/sssync/production

./ssync.sh /etc/sssync/testing

The above commands will execute the sync process using the "production" and "testing" configuration files stored in the /etc/sssync directory.

Handling error pages

The sssync.sh script only synchronises the assets/Uploads directory as this is where file and image uploads are stored by default. SilverStripe error pages are stored in the root of the assets directory which is not synchronised. If an error page is changed, make sure it is republished using the SilverStripe admin interface.

StressFree Webmin theme version 2.05 released 18 Oct 2009 2:36 PM (16 years ago)

This update is minor but addresses a few issues:

- Adds a Javascript patch submitted by Rob Shinn that adds sidebar support for multiple servers.

- Fixes the missing footer link back to the module index bug.

- Also included is compressed Javascript and CSS files which should slightly reduce load times.

The updated theme can be downloaded from here.

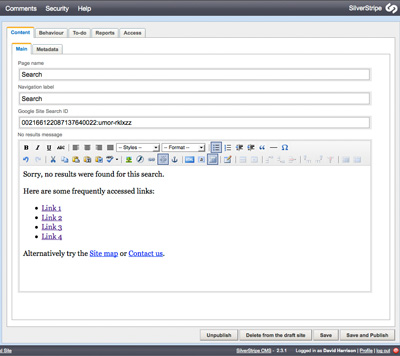

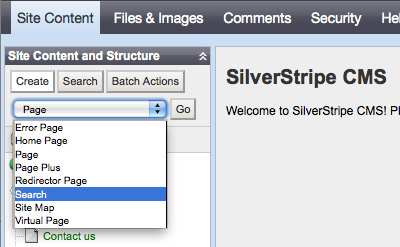

Integrating Google Site Search into SilverStripe 15 Sep 2009 12:32 AM (16 years ago)

SilverStripe is an excellent, user-friendly content management system but its internal search functionality is, to put it kindly, useless. Fortunately with Google Site Search you can embed a Google-powered custom search engine into your SilverStripe site. Doing so requires a paid Site Search account, pricing for which starts at $100/year.

This tutorial explains how to integrate this Google Site Search XML feed into your SilverStripe site. Doing so has a number of benefits over the standard means of integrating Site Search, namely:

- No Javascript is required to display results within the SilverStripe site.

- The user is not taken to a separate, Google operated website to view results.

- The look and feel is consistent with the rest of the SilverStripe site.

- Multiple Site Search engines can be integrated into a single SilverStripe site.

- Site Search results pages are integrated into SilverStripe's management console.

Note: To integrate Site Search into SilverStripe using the described method a Site Search plan must be purchased as this provides results in XML. The free, advertising supported, Site Search engine does not provide search results in XML and cannot be used.

Loading XML data from an external source

Before the search page can be added to SilverStripe we need a reliable means of loading XML content. This is complicated by the fact many Web hosts disable PHP's built in URL fetcher (fopen) with the following php.ini directive:

allow_url_fopen = Off

Assuming it is installed, the cURL can get around this restriction, hence the XmlLoader helper library includes both methods (cURL is used by default in search.php).

Create a XmlLoader.php file in your SilverStripe's mysite/code directory with the following contents:

mysite/code/XmlLoader.php

<?php

class XmlLoader {

public function pullXml($url, $parameters, $useCurl) {

$urlString = $url."?".$this->buildParamString($parameters);

if ($useCurl) {

return simplexml_load_string($this->loadCurlData($urlString));

} else {

return simplexml_load_file($urlString);

}

}

private function loadCurlData($urlString) {

if ($urlString == -1) {

echo "No url supplied<br/>"."/n";

return(-1);

}

$ch = curl_init();

curl_setopt($ch, CURLOPT_URL, $urlString);

curl_setopt($ch, CURLOPT_TIMEOUT, 180);

curl_setopt($ch, CURLOPT_HEADER, 0);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

$data = curl_exec($ch);

curl_close($ch);

return $data;

}

private function buildParamString($parameters) {

$urlString = "";

foreach ($parameters as $key => $value) {

$urlString .= urlencode($key)."=".urlencode($value)."&";

}

if (trim($urlString) != "") {

$urlString = preg_replace("/&$/", "", $urlString);

return $urlString;

} else {

return (-1);

}

}

}

?>

With the helper library in place to load the XML, it is now time to implement the SilverStripe "search" page type and logic. Create a search.php file in your SilverStripe's mysite/code directory with the following contents:

mysite/code/search.php

<?php

require_once 'XmlLoader.php';

class Search extends Page {

static $db = array(

'GoogleSearchId' => 'Text',

'NoResults' => 'HTMLText',

);

static $has_one = array(

);

function getCMSFields() {

$fields = parent::getCMSFields();

$fields->addFieldToTab('Root.Content.Main', new TextField(

'GoogleSearchId', 'Google Site Search ID'), 'Content');

$fields->addFieldToTab('Root.Content.Main', new HtmlEditorField(

'NoResults', 'No results message'), 'Content');

# Remove the content field

$fields->removeFieldFromTab("Root.Content.Main","Content");

return $fields;

}

}

class Search_Controller extends Page_Controller {

function SearchForm() {

$input = array_merge($_GET, $_POST);

$query = $input['q'];

$output = "<form class=\"search\" action=\"/search/results\"><fieldset>";

$output .= "<input type=\"text\" size=\"40\" name=\"q\" value=\"$query\"/>";

$output .= "<input type=\"hidden\" name=\"p\" value=\"1\"/>";

$output .= "<input type=\"submit\" value=\"Search\"/>";

$output .= "</fieldset></form>";

return $output;

}

function SearchResults() {

$output = "";

$input = array_merge($_GET, $_POST);

$page = isset($input['p']) ? $input['p'] : '1';

$query = $input['q'];

$perPage = 10;

if ($page < 1) { $page = 1; }

$xml = $this->getGoogleSearchResults($this->GoogleSearchId, $perPage, $page, $query);

$results = $this->parseGoogleSearchResults($xml);

$totalResults = $this->getResultCount($xml);

$output .= $this->getFormattedResults($results);

if (count($results) == 0) {

// Show no results message

$output .= $this->NoResults;;

} else {

// Append paging

$output .= $this->getPagingForResults($totalResults, $query, $perPage, $page);

}

return $output;

}

private function getGoogleSearchResults($googleId, $perPage, $page, $query) {

$startingRecord = ($page - 1) * $perPage;

$url = "http://www.google.com/search";

$parameters = array();

$parameters["client"] = "google-csbe";

$parameters["output"] = "xml_no_dtd";

$parameters["num"] = $perPage;

$parameters["cx"] = $googleId;

$parameters["start"] = $startingRecord;

$parameters["q"] = $query;

$XmlLoader = new XmlLoader();

return $XmlLoader->pullXml($url, $parameters, true);

}

private function parseGoogleSearchResults($xml) {

$results = array();

$attr["title"] = $xml->xpath("/GSP/RES/R/T");

$attr["url"] = $xml->xpath("/GSP/RES/R/U");

$attr["desc"] = $xml->xpath("/GSP/RES/R/S");

foreach($attr as $key => $attribute) {

$i = 0;

foreach($attribute as $element) {

$results[$i][$key] = (string)$element;

$i++;

}

}

return $results;

}

private function getFormattedResults($results) {

$output = "";

if (count($results) > 0) {

$output .= "<ul class=\"results\">";

foreach($results as $i => $result) {

$title = "";

$url = "";

$desc = "";

foreach($result as $key => $value) {

if ($key == "title") {

$title = $value;

}

if ($key == "url") {

$url = $value;

}

if ($key == "desc") {

$desc = $value;

}

$output .= "<li><a href=\"$url\">$title</a><p>";

$output .= str_replace("<br>", "<br/>", $desc);

$output .= "</p></li>\n";

}

$output .= "</ul>";

}

return $output;

}

private function getResultCount($xml) {

$totalResults = 0;

$count = $xml->xpath("/GSP/RES/M");

foreach($count as $value) {

$totalResults = $value;

}

return $totalResults;

}

private function getPagingForResults($totalResults, $query, $perPage, $page) {

$maxPage = ceil($totalResults/$perPage);

if ($totalResults > 1) {

$output = "<div class=\"searchPaging\"><p>";

for($pageNum = 1; $pageNum <= $maxPage; $pageNum++) {